Microsoft unveils its grand virtualization strategy

Updated 11:00 AM PST: Microsoft will formally unveil its grand virtualization strategy tomorrow at a Virtualization Deployment Summit in Bellevue, WA, highlighted by the acquisition of Calista Technologies, a deeper alliance with Citrix and initiatives for deploying virtualization from desktops to data centers (see also Mary Jo Foley's take on the announcements).

Microsoft, as well as VMware and Citrix's XenSource division, sees a fertile greenfield for virtualization. "It's early--incredibly less than 5 percent of servers are virtualized, and even less on the desktop," said Larry Orecklin, general manager of System Center marketing.

VMware has been leaving Microsoft in the dust over the last few years. Microsoft hopes to expand the market and its share by offering a single set of tools for managing both physical and virtual resources and by becoming the low-cost leader in the space, Orecklin told me. The alliance with Citrix also feeds into Microsoft's need to compete with VMware.

"It's a flash point in the industry. We are going from interesting to really useful technology. For all the hype, it can be transformative with the right business model and economics. We've been on this journey for a while, investing across the businesses, and now we are bringing the different pieces together," Orecklin maintained.

I asked Bogomil Balkansky, senior director of product marketing at VMware, to comment on rival Microsoft's announcements. "Microsoft is probably seven or eight years late on the server side and at least a couple of years behind VMware on the desktop. It's definitely a follower strategy," he said. "VMware's first generation of hypervisor was delivered in 2001. Microsoft has not yet delivered its first generation of hypervisor. If it's taken Microsoft seven years, how fast are they going to catch up from this point on?" As a data point, Balkansky added that VMware's customer base includes all of the Fortune 100 and 91 percent of the Fortune 1000.

Regarding Microsoft becoming the low-cost leader, Balkansky said, "This is the Microsoft playbook. VMware has a pretty good track record of pricing and packaging."

That said, just because Microsoft is getting a late start in virtualization doesn't mean that it can't have a significant impact on the market landscape. Microsoft's playbook in this context is to be a fast follower, drafting off VMware's pioneering work, and to use all of its integrated resources and pricing power to grow the market and take share from competitors.

As part of the plan to accelerate virtualization adoption, Microsoft is cutting the annual subscription price of Windows Vista Enterprise Centralized Desktop from $78 to $23 per desktop for customers covered by the Software Assurance program. In addition, Windows Vista Home Basic and Windows Vista Home Premium are now licensed for use with virtual machines.

Microsoft Office 2003 and 2007 will also be supported as virtual applications, running in both Microsoft Application Virtualization 4.5 and SoftGrid Application Virtualization 4.2.

"On the server side, you just need Hyper-V [the hypervisor in Windows Server 2008] and a System Center license. The combination is a fraction of the price of alternatives and one reason or opportunity for broader adoption," Orecklin claimed. Windows Server 2008 is slated for RTM (release to manufacturing) this quarter, and Hyper-V within six months or sooner, he added.

Calista Technologies gives Microsoft technology for improving the user experience of 3D and multimedia content (and running Vista) in virtualized environments, optimizing the Remote Desktop Protocol to reduce network bandwidth requirements and eliminating the need for media players and codecs on the client system.

Microsoft also formed a co-marketing alliance with Citrix around the forthcoming Windows Server 2008 and Windows Optimized Desktop solutions, extended with Citrix’s XenDesktop and Presentation Server products and managed by Microsoft's System Center. The Citrix XenDesktop connection broker will be tuned to work with Windows Optimized Desktop solutions, and Citrix is developing a tool for transferring virtual machines between Citrix XenServer and Windows Server 2008 with Hyper-V.

VMware already provides a free utility for converting virtual machines from XenSource and Microsoft to VMware and vice versa, Balkansky said.

On the application virtualization front, Boettcher said that Softricity, which Microsoft acquired in July 2006, has sold over 4 million licenses of its app virtualization software in the last three quarters. "It's the fastest-growing enterprise product in Microsoft history," Orecklin added.

Bob Muglia, senior vice president of the Server and Tools Business at Microsoft, is sending the following very general executive memo to 300,000 customers. The money line is: "At Microsoft, we believe that in the coming years, sever [server] virtualization will become ubiquitous." That's a signal that Microsoft will be extremely serious about conquering the marketplace.

Harnessing the Power of Virtualization for Dynamic IT

The potential for information technology to drive business success has never been greater. Advances in software, devices, and networks are transforming the way companies streamline communications, automate processes, and enable employees to access the information and capabilities they need to respond to new opportunities.

At the same time, the complexity of IT has never been higher. Business success increasingly depends on providing mobile employees with easy access to corporate computing resources. People who use instant messaging, social networking sites, and other relatively new communications technologies at home expect to use similar tools at work.

The result is a growing number of contradictory requirements: ease of access vs. security and compliance; performance vs. cost; innovation and agility vs. reliability and continuity. For IT professionals, the real challenge is resolving the tension inherent in trying to create an infrastructure that provides both the flexibility to enable employees to drive business success and the control to protect corporate resources, maintain compliance, and provide continuity.

Helping companies find the right balance is one of Microsoft’s most important priorities. To do that, we are focused on technology innovation that will enable companies to build systems that have the flexibility and intelligence to automatically adjust to changing business conditions by aligning computing resources with strategic objectives. This is a vision we call Dynamic IT. Virtualization technologies that provide powerful new tools for creating more efficient, flexible, and cost-effective IT systems will provide a critical foundation for bringing this new vision to life.

In previous Executive Emails, Bill Gates and Steve Ballmer discussed advances that are revolutionizing communications, improving productivity, and transforming the way companies use information. As Senior Vice President of Microsoft’s Server and Tools Business, I want to share my thoughts about how virtualization is helping IT departments reduce costs and improve business continuity and compliance, and how, over the long term, it will have a significant impact on the way businesses run IT. It is still early for this important technology; ultimately, virtualization will play an important role in improving business agility by making IT systems more flexible and more responsive to changing business needs.

Understanding Virtualization

Virtualization is an approach to deploying computing resources that isolates different layers—hardware, software, data, networks, storage—from each other. Typically today, an operating system is installed directly onto a computer’s hardware. Applications are installed directly onto the operating system. The interface is presented through a display connected directly to the local machine. Altering one layer often affects the others, making changes difficult to implement.

By using software to isolate these layers from each other, virtualization makes it easier to implement changes. The result is simplified management, more efficient use of IT resources, and the flexibility to provide the right computing resources, when and where they are needed.

There are different types of virtualization. Machine virtualization uses software to create a virtual machine that emulates the services and capabilities of the underlying hardware. This makes it possible to run more than one operating system on a single machine. On servers, this approach is called server virtualization; on end-user PCs, it is called desktop virtualization.

Application virtualization separates the application from the operating system, reducing conflicts between applications, which can simplify deployments and upgrades. Presentation virtualization enables an application on a computer in one location to be controlled by a computer in another. There is also storage virtualization, which lets users access applications and data without having to worry about where they are stored. And network virtualization allows remote users to tap into a company network as if they were physically connected.

Virtualization is not new. IBM first introduced virtual machine technology for mainframe computers in the early 1960s. Microsoft Windows NT included a virtual DOS machine. Virtual PC was introduced by Connectix in 1997 (Microsoft acquired Connectix in 2003). EMC’s VMware introduced its first product, VMware Workstation, in 1999. Softricity introduced SoftGrid, the first application virtualization product, in 2001 (Microsoft acquired Softricity in 2006).

Currently, industry analysts estimate that fewer than 10 percent of servers are virtualized, despite the fact that virtualization has been around for many years. But its significance is growing as companies have introduced products that target today’s high-volume, low-cost hardware. Now, more and more companies are using server virtualization to save money by consolidating the workload of several servers onto a single machine.

Virtualization: A Foundation for Dynamic IT

As important as server virtualization can be in reducing costs, saving money is just the beginning of the value that virtualization offers. At Microsoft, we believe that virtualization will play a significant role in enabling companies to create IT systems that are not only highly efficient, but that have the self-awareness to adapt automatically as business conditions change.

By separating the layers of the computing stack, a virtualized IT environment makes it possible to quickly deploy new capabilities without having to configure components. In a virtualized environment, testing requirements and application compatibility issues are reduced, processes are easier to automate, and disaster recovery is easier to implement.

In the data center, virtualization not only supports server consolidation, but it enables workloads to be added and moved automatically to precisely match real-time computing needs as demand changes. This provides greater agility, better business continuity, and more efficient use of resources.

On the desktop, application virtualization reduces management costs. And when the operating system, applications, data, and user preferences are all virtualized, it makes it possible for users to access the computing resources they need anywhere, from any machine. The result is tremendous flexibility for employees and greater efficiency and agility for IT departments.

Microsoft Virtualization Products and Solutions for Dynamic IT

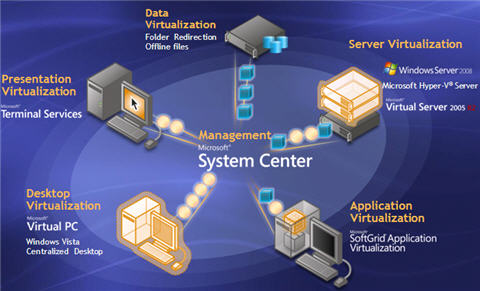

While each layer of virtualization delivers an important set of benefits, the real power of virtualization comes when companies implement an integrated virtualization strategy that extends across their IT infrastructure. Today, Microsoft provides a comprehensive set of virtualization products, tools, and services that span from the datacenter to the desktop:

Server Virtualization: With Microsoft Windows Server 2008, server virtualization will be available as part of the operating system with the new “Hyper-V” feature. Microsoft’s design approach improves virtualization efficiency and delivers better performance. (This technology is also available separately through Microsoft Hyper-V Server.) Hyper-V technology—as well as the currently available Microsoft Virtual Server 2005 R2—supports server consolidation, re-hosting of legacy operating systems and applications on new hardware, and disaster recovery based on application portability across hardware platforms.

Application Virtualization: Microsoft SoftGrid Application Virtualization transforms applications into centrally managed virtual services that are streamed to desktops, servers, and laptops when and where they are needed. SoftGrid dramatically accelerates application deployment, upgrades, and patching by simplifying the application management lifecycle.

Presentation Virtualization: With Microsoft Windows Server Terminal Services, a Windows desktop application can run on a shared server machine and present its user interface on a remote system, such as a desktop computer or thin client.

Desktop Virtualization: Microsoft Virtual PC runs applications that are not compatible with the operating system on a desktop PC by supporting multiple operating systems on a single machine. It also accelerates testing and development of new software and systems. In addition, with the Windows Vista Enterprise Centralized Desktop license for hosted desktop architectures (also known as “virtualized desktop infrastructures”), an entire desktop can be hosted on a server and remotely delivered to another desktop computer.

Our goal is to provide companies with the underlying technology they need to implement a flexible infrastructure that delivers the capabilities that employees and customers need, when and where they need them.

The Importance of Integrated Management

In a virtualized environment, a comprehensive management approach that provides the ability to monitor and track physical and virtual resources becomes critical. To achieve Dynamic IT, management solutions must also provide the foundation for automating the allocation of resources as business conditions change. It is the combination of virtualization technologies running across computing layers and orchestrated by a single set of management tools that provides the foundation for Dynamic IT.

Microsoft System Center delivers management software that enables IT professionals to manage all of their computing resources, both virtual and physical. System Center provides provisioning, monitoring, and backup tools for virtual and physical environments across desktops and servers, and operating systems and applications. System Center enables companies to capture information about their infrastructure, policies, processes, and best practices so they can automate operations, reduce costs, and improve application availability.

Dynamic IT from the Server to the Desktop

Although virtualization has been around for more than four decades, the software industry is just beginning to understand the full implications of this important technology. Server virtualization to consolidate multiple machines into a single server is the most common form of virtualization in use today, but it is still very early in the adoption cycle. At Microsoft, we believe that in the coming years, server virtualization will become ubiquitous. Adoption of other forms of virtualization is just beginning, too, and their potential value remains largely untapped.

To help make this valuable technology more accessible, Microsoft is delivering innovations that make virtualization more affordable and less complex. We also are actively working with industry partners to develop new products and services that will unlock the power of virtualization for companies of all sizes.

Already, virtualization products from Microsoft and our partners are helping companies match computing capabilities to business needs. Imagine, for example, if your employees could access their personalized desktop, with all of their settings and preferences intact, on any machine, from any location. Or if workloads running on the servers in your data center automatically redeployed to respond to a sudden surge in demand for a specific capability. Or if your entire infrastructure could restore itself instantly following a catastrophic power outage.

Today, using existing Microsoft technologies, these Dynamic IT scenarios are already possible. Tomorrow, they will be the norm as we continue to bring new innovations in virtualization and systems management to market that help companies build truly dynamic infrastructures, from the server to the desktop.

Bob Muglia