Mix and match analytics: data, metadata, and machine learning for the win

YouTube recommendations are a prominent example of applying advanced analytics on a massive scale to improve a service, the experience users get out of it, and the bottom line of the vendor behind it -- Google. Previously, we explored the rationale behind it and pondered as to how this type of analytics could be classified. It's time to pick up where we left off and explore how it works under the hood.

Inspiration came from a hit moment for YouTube recommendations: one of those times when it succeeded in picking up the track that the person curating an ad-hoc, spur-of-the-moment playlist was about to play next. The wow effect induced by this successful prediction/recommendation of a rarity, triggered a spur-of-the-moment discussion which may serve to illuminate different aspects of analytics.

So, how could YouTube know what you want to play next before you play it?

Data: has(h) functions, will preserve similarity

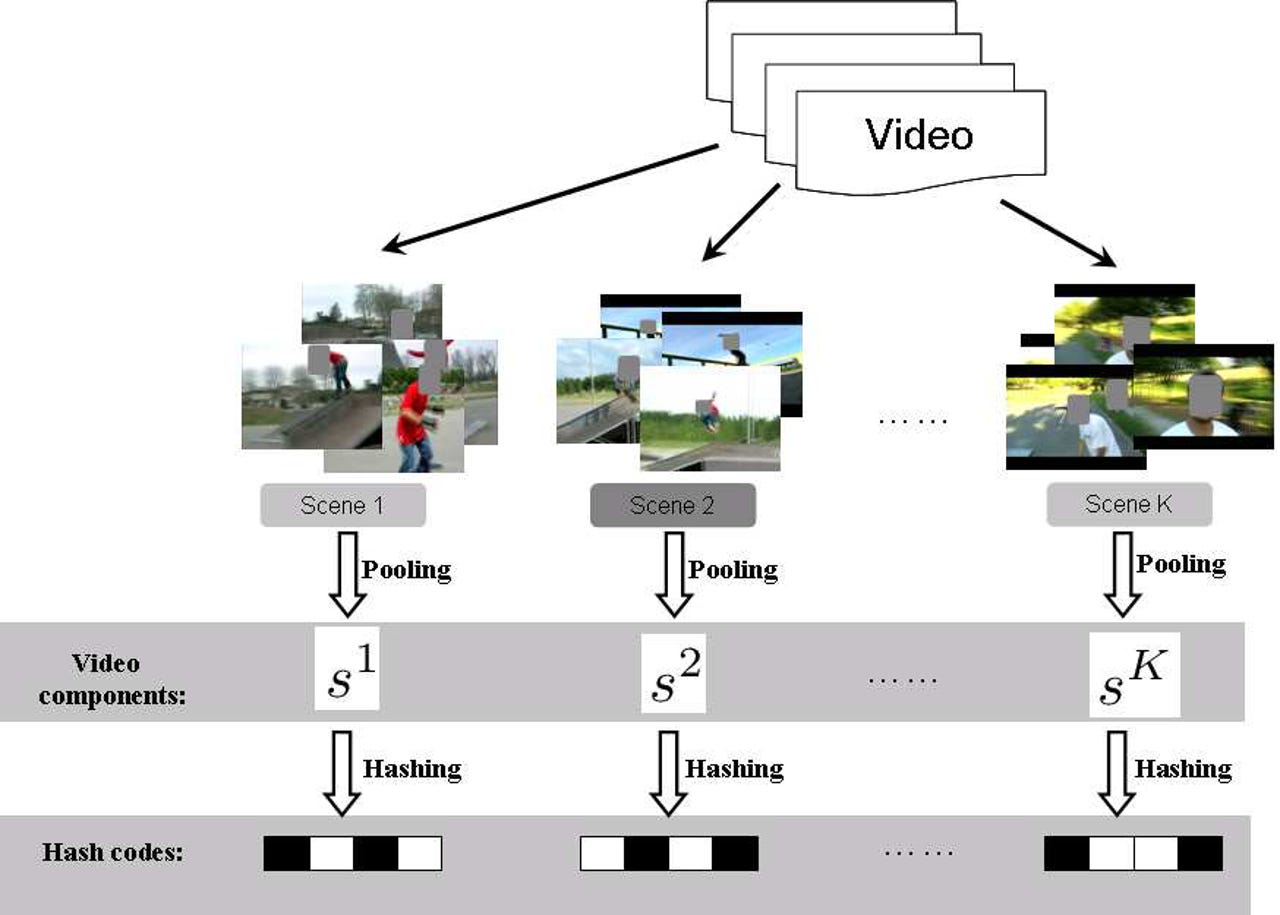

Inherent properties of data can be used for analytics, for example by applying locality-sensitive hash functions. Image: Cao/Li/Mu/Chang

One take at this would be to find a way to rank the degree to which videos are similar to each other based on their content. Strange as it may sound, this scenario is plausible. A typical application is ranking documents as to their similarity based on hashes of their content. This approach is based on a technique called Locality Preserving Hashing (LPH).

LPH's premise sounds simple: it relies on using a hash function to map content to an identifier, in such a way that similar content will result in similar identifiers. A hash is a function applied to any data input that generates an output based on the input it receives. The most obvious example and common application of hash functions is using them to organize textual input in buckets. LPH is about finding and using hash functions that preserve locality, meaning that they map similar input to similar output.

A simple example would be to apply LPH to documents. When dealing with documents, applying a hash function is more intuitive as the input is a string representing the entire content of the document and the output is another string representing a mapping of the input as per the hash function.

A naive hash function H(input) -> output could use a numeric value corresponding to each character in the input to generate an output. If we define a hash function H that works by mapping each character in its input to its order in the alphabet and concatenating output, then H("ab") -> 12.

Of course, reality is more complicated than that. Our naive H function cannot be used to rank documents as to their similarity, because it lacks the locality preserving property: it does not map similar input to similar output, at least not in the way we would intuitively define similarity.

For example H("hello world") -> 851212150231518124, H("world hello") -> 231518124085121215, H("hello buddy") -> 8512121502214425. If we use the difference between the numeric value of generated hash outputs as a (equally naive) metric for similarity, we'll end up concluding that "hello world" is closer to "hello buddy" than it is to "world hello". This goes to show that definition of similarity, as expressed in this case by hashing, is both hard and context-dependent.

For textual data, there are other techniques that can be used to determine similarity, as well as more advanced flavors of hashing. For multimedia content like video, things are even more complicated as hashing becomes less obvious and the locality preserving property is even harder to achieve. Case in point though, hashing multimedia content works fine for Shazam, the well-known music service that can identify tracks by giving it audio clips as input.

Shazam works by generating hashes ("fingerprints") of the audio clips it receives and comparing them against a database of fingerprints generated from the list of tracks it works with. Granted, identity and similarity are not the same thing, which is why applying LPH to multimedia content is hard, but apparently possible.

Whether this technique is actually used by Google in YouTube is not known at this point, but the fact that a body of published research on the topic from the likes of IBM does exist makes this a conceivable notion.

The takeaway from this superficial analysis however is that regardless of what the data at hand or the techniques used, leveraging inherent features of the data that an analytics solution works with is a valid method for building such a solution.

Metadata: is it enough, is it descriptive, is it descriptive enough?

Metadata is additional data describing the data that an analytics solution works with. In the case of YouTube videos, we have metadata such as video title, description, tags and annotations, category, date and place the video was captured, and uploader identity. Some of that metadata like uploader and upload date are generated automatically, but most are supplied by uploaders themselves.

That metadata also has differences in its nature and use. Depending on the point of view, metadata can be classified according to different taxonomies, but for our purposes we'll go with Getty's classification. According to Getty, metadata can be classified as Administrative, Descriptive, Preservation, Technical, and Use metadata. Getty's classification is the most complete one, as it stems from the cultural heritage world, one of professional content administrators.

YouTube on the other hand exemplifies a common property of many of today's data-intensive applications: the nature of its data flows makes manual management of its metadata impractical and/or non sustainable. YouTube deals with this challenge by crowdsourcing parts of its metadata management and automating others.

While presumably all available YouTube metadata are used for analytics, a big part of its administrative and descriptive metadata generation is crowdsourced, while other types of medatata are mostly handled by means of automated extraction. As Google engineers point out, not all features are created equal, so some metadata is of more importance, and descriptive metadata is among those.

But what if each and every YouTube video was annotated with perfect metadata -- would that ramp up the effectiveness of recommendations? Are not even a few ratings more valuable than metadata?

YouTube video metadata is incomplete and poorly structured, which makes this a hypothetical question. Still, we can once more turn to Google to answer it. Google relies on KnowledgeGraph to power its famous search engine. KnowledgeGraph features carefully curated descriptive metadata about a wide array of topics to power semantic search, resulting in the most powerful massively deployed search engine in the world.

KnowledgeGraph is what YouTube metadata is missing: an ontology. Ontologies express semantics in a precise way, and are on the far end of a scale of semantics constructs that also includes lexicons, taxonomies, and schemas. Ontologies can be used to state relationships such as inheritance, synonym-antonym, and every other conceivable relationship its curators wish to define.

That means that a big part of what happens with any information retrieval request depends on ontological definitions. For example, whether track A, classified in genre X, is similar to track B, classified in genre Y, depends to an extent on whether genres X and Y have been marked as having some sort of relationship between them.

So if semantics are so great, why doesn't Google use them for YouTube? Curating an ontology, or even something simpler like a vocabulary or a schema, is no easy feat. Google already does this however, so it could just reuse KnowledgeGraph for YouTube. But even with a ready-made ontology at hand, using it to annotate data is also hard.

In the case of semantic search, the gigantic corpus of web documents is automatically annotated by means of Google's indexing engine. The engine uses an array of techniques to extract the most relevant keywords out of documents and uses them to annotate each document. Using manual annotation to deal with the volume of data being added to the web daily would be sheer folly, and same goes for YouTube content.

Some metadata, however, is better than no metadata, which is why Google crowdsources the annotation of YouTube videos using a very simple schema plus some free text user-generated descriptions. Annotating data is not trivial and requires familiarity with the vocabulary used, which may partially explain why an ontology for annotating YouTube content is not available to its users.

Soon algorithmic solutions may be see the world like humans do and thus used to generate descriptive metadata for YouTube content in a way similar to how this works for text documents, and it will be interesting to see the effect on YouTube analytics.

Machine Learning: connecting the dots

Machine Learning is all about identifying patterns in data -- but is it really entirely data and metadata agnostic?

So if using inherent data properties is hard and using metadata does not scale, is using machine learning (ML) easy? ML is at the core of Google's approach to YouTube analytics. In fact, not just any ML, but deep learning (DL), a branch of ML that is seeing both great advances and hype. Although ML is being increasingly adopted and discussed, DL may deserve analysis on its own. Still, for all intents and purposes, DL is ML.

Google is one of the leaders in DL, having recently open-sourced TensorFlow, a DL library and API based on the GoogleBrain project. And the fact that Google manages to apply DL in YouTube analytics to deal with the related challenges of scale, freshness, and noise is quite an achievement. But even the most advanced of ML applications does not come without its own set of challenges.

YouTube analytics is framed by Google engineers as a collaborative filtering problem. In collaborative filtering, recommendations for content are delivered to users based on their similarity to other users. "The underlying assumption of the collaborative filtering approach is that if a person A has the same opinion as a person B on an issue, A is more likely to have B's opinion on a different issue x than to have the opinion on x of a person chosen randomly."

For example, it should not matter whether fish and bicycle have some relationship as concepts in the real world and whether that relationship is captured by an ontology or a LPH hash function. As long as there are users that for some reason tend to watch videos with fish and videos with bicycles, and those users are deemed similar to you, watching a fish video is likely to bring a bicycle video as a suggestion to be played next.

ML is about finding patterns in data. In theory, what that data and the associated metadata is bears no significance to identifying patterns. Which is why ML matches well with the collaborative filtering problem. And that's part of the beauty of ML too: not having to model all possible relations in the real world. After all, who can possibly know it all and who can possibly predict that some day for some reason people will start associating fish with bicycles?

In practice however, knowing the data and metadata for a domain ML is to be applied in matters a great deal. Remember Oren Etzioni's statement -- "ML is 99 percent human work." And as per Google's engineers, "despite the promise of DL to alleviate the burden of engineering features by hand, the nature of our raw data does not easily lend itself to be input directly into feed-forward neural networks. We still expend considerable engineering resources transforming user and video data into useful features."

Google's engineers also expand considerable intelligence in choosing what algorithms they should use and what features (metadata!) to incorporate, training their model, and applying all sorts of reported and non-reported heuristics in their DL-powered engine. Which brings us to the key point.

In the mix: the human in the data loop

Creating a winning analytics solutions means mixing and matching every available approach applicable to the domain. Image: Paco Nathan

Analytics used to be simple. When talking about analytics, the implicit assumption was that we would be referring to descriptive analytics, or what is now called rear-view mirror analytics: what has already happened, which seems to be relatively easy to keep track of and present. Business intelligence, OLAP, reports, bar charts, and dashboards.

Internet of Things

Analytics has now expanded to include scenarios that go way beyond previous definitions and capabilities. The explosion in the volume of data, in the techniques and means to store and process them, and the realization of the benefits this can accrue has led to analytics applications that border on artificial intelligence -- a topic in and by itself.

We have used a well-known, advanced example of analytics to outline a framework for examining analytics solutions: mix and match analytics. We chose YouTube as an example of a service everyone is familiar with, featuring rich and extensive data and metadata, and powered by a vendor with advanced technology and resources and far-reaching ambition. Google has published enough about its approach to allow for certainty and speculation in healthy doses.

Google is not dogmatic in its approach, and neither are other experts in the field. Xavier Amatriain is VP of engineering at Quora and was previously heavily involved in Netflix Prize, a challenge very similar in nature. Back in 2009, before ML started enjoying the kind of widespread popularity it does today, Amatriain pointed towards the fact that mixing the use of data and metadata with patterns observed in their use can lead to better results.

In the end, you could say it becomes a sort of philosophical question, with two schools of thought: curation versus emergence, rationalism versus empiricism. Cognitive science does not offer definitive answers there, AI does not either, and neither could we.

But if there is one takeaway point from this brief journey into advanced analytics, it would be that mixing and matching may lead to better results than dogmatic approaches. So no matter what advanced tools and techniques we may have in our arsenal, the human in the loop will continue to play a key role in the foreseeable future.

This simple mix-and-match framework may offer a lens to examine other analytics solutions as well, and we will be using it for this purpose.

How to implement AI and machine learning: