Report: Google's reCAPTCHA flawed

UPDATED: A representative of Google's Global Communications & Public Affairs team contacted me with the following statement: "While the report is newly released, its substance is not current and seems to include some misunderstandings of the reCAPTCHA technology according to some of our engineers. Therefore, the so-called flaws described in the report, are not related to the reCAPTCHA that people use today."

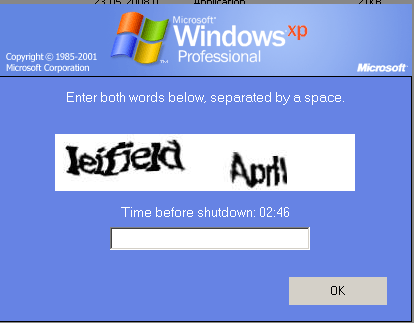

In a newly released report, a security researcher claims that Google's reCAPTCHA, one of the most widely adopted free CAPTCHA services, contains weaknesses that would allow a botnet of 10,000 infected hosts to achieve 10 successful recognitions every second, enough to register 864,000 new accounts per day.

In response, a Google spokesman stated that the report relies on data collected in early 2008 and doesn't take into account the effectiveness of the technology currently used against machine solvers.

More from the report:

Running against 200 challenges, this method solved 10 correctly - a success rate of 5 percent. It further got one word correct in 25 other cases. If we presume that in half the cases the failed word would be the unknown word for reCaptcha, this gives us a total success rate of 17.5 percent. Also worth noting, ocropus alone solved 0 of the 200 challenges. When ocropus was provided with the challenge split into single word portions it was able to get 5 single words, a success rate of 1.25 percent.

For instance, with a 10,000 machine botnet (which would be considered relatively small these days), given broadband connections and multi-threaded attack code, even with only 10 threads per machine, a 0.01% success rate would yield 10 successes every second, which would provide the attacker with 864,000 new accounts per day if they were attacking a registration interface.
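The figures quoted above can be worked through with a short script. This is a minimal sketch, not the researcher's method, and it rests on two assumptions not spelled out in the report: that each challenge contains exactly two words (one known control word and one unknown word, which is what makes 5 correct words out of 200 challenges equal 1.25 percent), and that each botnet thread submits one attempt per second, which is the rate needed for 0.01 percent to yield 10 successes per second. The helper `botnet_throughput` and its `attempts_per_thread_per_sec` parameter are hypothetical names introduced for illustration. Read literally, crediting half of the 25 partially solved challenges gives an expected 12.5 extra solves and 11.25 percent overall, while the report's 17.5 percent matches crediting all 25 (35 of 200); the script computes both.

```python
from fractions import Fraction

# Assumption: each challenge shows two words, one known control word
# and one unknown word.
WORDS_PER_CHALLENGE = 2
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def pct(rate: Fraction) -> float:
    """Convert an exact fraction to a percentage."""
    return float(rate * 100)


# Figures quoted from the report.
challenges = 200
fully_solved = 10
one_word_correct = 25
ocropus_words = 5

# Challenges solved outright: 10 of 200.
exact_rate = Fraction(fully_solved, challenges)

# Expected extra solves if the wrongly read word is the unknown
# (ungraded) word in half of the partial cases.
expected_partial = one_word_correct * Fraction(1, 2)
half_credit_rate = (fully_solved + expected_partial) / challenges

# Upper bound: every partially solved challenge is accepted.
full_credit_rate = Fraction(fully_solved + one_word_correct, challenges)

# OCRopus on single-word portions: 5 correct words out of 400.
ocropus_word_rate = Fraction(ocropus_words, challenges * WORDS_PER_CHALLENGE)


def botnet_throughput(hosts: int, threads_per_host: int,
                      success_rate: Fraction,
                      attempts_per_thread_per_sec: int = 1) -> tuple:
    """Return (successes per second, successes per day).

    Hypothetical helper. Assumes every thread submits
    attempts_per_thread_per_sec attempts each second, a rate the
    report leaves implicit.
    """
    attempts_per_sec = hosts * threads_per_host * attempts_per_thread_per_sec
    per_sec = attempts_per_sec * success_rate
    return per_sec, per_sec * SECONDS_PER_DAY


# 10,000 hosts x 10 threads at a 0.01% (1 in 10,000) success rate.
per_sec, per_day = botnet_throughput(10_000, 10, Fraction(1, 10_000))

print(f"solved outright:        {pct(exact_rate)}%")
print(f"half-credit estimate:   {pct(half_credit_rate)}%")
print(f"full-credit estimate:   {pct(full_credit_rate)}%")
print(f"OCRopus word rate:      {pct(ocropus_word_rate)}%")
print(f"successes per second:   {per_sec}")
print(f"accounts per day:       {per_day}")
```

Exact fractions are used so that small rates such as 0.01 percent don't pick up floating-point rounding before being multiplied out to daily totals.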

Here's the actual problem posed by the real threat: as CAPTCHA vendors emphasize the "human factor" (Google's CAPTCHA experiment and the human factor) in the recognition process for the sake of usability, it becomes easier for vendors in the CAPTCHA-solving economy (Inside India's CAPTCHA solving economy) to solve them efficiently, this time with a 100% success rate. Therefore, Google's reCAPTCHA is just as flawed as any other CAPTCHA.

The underground economy has long since adapted to the CAPTCHA recognition process, and the number of crowdsourcing-driven services offering API access to hundreds of thousands of recognized CAPTCHAs for major Web 2.0 sites and social networks is increasing. Naturally, it shouldn't come as a surprise that the price of bulk orders of a million recognized CAPTCHAs is decreasing.

One such service, which promotes itself as a mainstream reCAPTCHA solver, is currently offering 1 million solved reCAPTCHAs for $800, with special pricing for custom packages. Meanwhile, the Koobface botnet, once a customer of such commercial CAPTCHA recognition services, now achieves a 100% success rate by forcing Koobface-infected users to solve the CAPTCHAs; in doing so, those users unknowingly help the botnet register thousands of accounts across multiple web properties. Clearly, the long-term emphasis appears to be on the 100% success rate offered by humans who knowingly or unknowingly solve CAPTCHAs for fraudulent purposes.

Is machine-learning-based CAPTCHA breaking becoming an outdated approach for spammers, compared with the emerging CAPTCHA-solving services that rely exclusively on humans, the very same humans the CAPTCHA was originally meant to identify?

What do you think? Talkback.