Storage in 2014: An overview

Anyone who has suffered data loss — be it via a crashed hard drive, a failed network array or an external cloud service outage — knows the importance of reliable access to critical data, and the value of a suitable backup and recovery plan in the event of disaster. As we become increasingly dependent on digital data, so its storage, management, transport and governance become ever more critical. And in the post-NSA/PRISM/Snowden world, individuals and organisations will be giving extra consideration to who has access to their data, and how it can best be secured from unwelcome visitors.

As 2014 gets underway, there is more digital data than ever, stored on everything from portable USB sticks to massive data center disk arrays. In this article, we'll take a high-level look at the main trends in the storage sector, which is the building block of the digital world and an area that's currently undergoing significant transformation.

The data explosion

In December 2012, IDC and EMC estimated the size of the digital universe (that is, all the digital data created, replicated and consumed in that year) to be 2,837 exabytes (EB) and forecast this to grow to 40,000EB by 2020 — a doubling time of roughly two years. One exabyte equals a thousand petabytes (PB), or a million terabytes (TB), or a billion gigabytes (GB). So by 2020, according to IDC and EMC, the digital universe will amount to over 5,200GB per person on the planet.

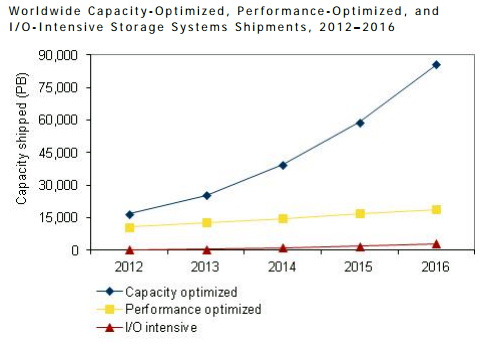
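The growth figures above can be checked with a little arithmetic. The Python sketch below derives the implied doubling time from the 2012 and 2020 numbers, assuming steady exponential growth. It also derives the per-person share, assuming a 2020 world population of about 7.6 billion. That population figure is our assumption, not one taken from the IDC/EMC study.

```python
import math

# Figures quoted from the IDC/EMC study (December 2012)
SIZE_2012_EB = 2_837      # digital universe in 2012, exabytes
SIZE_2020_EB = 40_000     # forecast for 2020, exabytes
YEARS = 2020 - 2012

# Assumption (not from the study): world population in 2020
POPULATION_2020 = 7.6e9

GB_PER_EB = 10**9         # decimal units: 1 EB = 1,000 PB = 1,000,000 TB = 10^9 GB


def doubling_time(start: float, end: float, years: float) -> float:
    """Years needed to double in size, assuming steady exponential growth."""
    annual_factor = (end / start) ** (1 / years)
    return math.log(2) / math.log(annual_factor)


def per_person_gb(size_eb: float, population: float) -> float:
    """Average share of the digital universe per person, in gigabytes."""
    return size_eb * GB_PER_EB / population


print(f"Doubling time: {doubling_time(SIZE_2012_EB, SIZE_2020_EB, YEARS):.2f} years")
print(f"Per person in 2020: {per_person_gb(SIZE_2020_EB, POPULATION_2020):,.0f} GB")
```

Under these assumptions, it reports a doubling time of about 2.1 years and roughly 5,260GB per person, consistent with the figures quoted.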

This sort of data growth clearly requires heroic amounts of storage to keep it available for as long as it's required, and to archive much of it thereafter. Accordingly, IDC estimates that annual worldwide shipments of 'capacity optimized' storage systems will rise from around 22,000PB in 2013 to just under 90,000PB in 2016:

Storage industry trends

The growing volume of data, and storage capacity to service it, comes as no surprise. Other significant trends that will affect the way IT managers plan their storage strategies in 2014 include: more data migrating to third-party cloud services; the need for data and storage management to become more efficient (employing techniques such as storage virtualisation, deduplication, thin provisioning and automatic tiering); and the appearance of new technologies (notably flash) in mainstream storage products.

Before we look at some of these trends, let's examine how the storage world appears from the front line. Leading storage vendor EMC has published an annual report on the storage industry since 2006, the latest being Managing Storage: Trends, Challenges, and Options (2013-2014). In EMC's 2013 survey, managers and IT professionals in over 800 organisations worldwide report, not surprisingly, that managing storage growth is their biggest challenge (as it was in 2012):

Also predictable is the high rating for designing, deploying and managing backup, recovery and archive solutions for all that new data. More indicative of the changes sweeping through the storage industry is the number-three 'pain point' for managers and IT professionals: the need to make informed strategic/big-picture decisions.

Some of those strategic areas are evident in the topics that rose most in the rankings between 2012 and 2013: managing external cloud service providers (a 100% increase, from 4% to 8%); infrastructure for big data analytics (a 43% increase, from 7% to 10%); and designing, deploying and managing storage in a virtualised server environment (a 19% increase, from 31% to 37%). Although growing fast, the cloud and big-data figures remain low in absolute terms. A shortage of skilled professionals, in both storage and cloud technology, is also a significant worry for IT managers.
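The rankings above mix two kinds of change: the relative increase and the absolute shift in percentage points. A short Python sketch makes the distinction explicit using the survey figures quoted. The helper names are our own.

```python
def relative_increase(old_pct: float, new_pct: float) -> float:
    """Relative change, expressed as a percentage of the old value."""
    return (new_pct - old_pct) / old_pct * 100


def point_change(old_pct: float, new_pct: float) -> float:
    """Absolute change, in percentage points."""
    return new_pct - old_pct


# EMC survey rankings, 2012 -> 2013 (percentages as quoted in the text)
topics = {
    "Managing external cloud service providers": (4, 8),
    "Infrastructure for big data analytics": (7, 10),
    "Storage in a virtualised server environment": (31, 37),
}

for name, (old, new) in topics.items():
    print(f"{name}: +{relative_increase(old, new):.0f}% relative, "
          f"+{point_change(old, new)} points")
```

The virtualised-environment topic has the smallest relative increase (19%) but gains the most ground in absolute terms (6 points). This is why the fast-growing cloud and big-data figures still remain comparatively low.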

Cloud storage

Consumers have enthusiastically adopted public cloud storage services such as Dropbox, Box, SkyDrive and Google Drive for some time, primarily because of their convenience and low cost (all operate a 'freemium' model for consumers). Enterprises, although also attracted by potential cost savings, have proven more cautious about entrusting their data to the cloud, routinely citing security as the main barrier to wider adoption. Other often-cited barriers include the complexity of migrating enterprise data to the cloud, along with worries over vendor lock-in, performance, data governance regulations and a lack of the requisite skills in the IT department.

As a result, most enterprise cloud storage deployments have targeted secondary backup and archive data rather than front-line workloads such as transaction processing, batch computing, web services and high-performance computing/analytics. This may well be changing, but the cautionary tale provided by cloud storage provider Nirvanix highlights the need for careful planning when migrating enterprise data to the cloud.

Nirvanix, founded in 2007, was a leading US-based provider of public, private and hybrid cloud storage services. In September 2013 it abruptly informed its 1,000-plus customers, among them large partners such as IBM, Dell and HP, that it was closing and that they had two weeks (subsequently extended to a month) to migrate their data to another service. Apart from demonstrating the danger of placing all your data eggs in one cloud-service basket, the Nirvanix debacle highlights an uncomfortable fact: while it's easy to upload a large amount of data to the cloud over a long period of time, it's another matter to move it elsewhere quickly over limited-bandwidth connections.
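To put the bandwidth problem in numbers, here is a minimal Python sketch that estimates how long a bulk migration takes over a network link. The data volume, link speeds and the 80% efficiency factor are illustrative assumptions, not figures from any Nirvanix customer.

```python
def transfer_days(data_tb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Days needed to move data_tb terabytes over a link of link_mbps megabits/s.

    efficiency is an assumed fraction of the nominal bandwidth actually achieved.
    """
    bits = data_tb * 1e12 * 8                        # decimal terabytes -> bits
    seconds = bits / (link_mbps * 1e6 * efficiency)  # effective bits per second
    return seconds / 86_400                          # seconds -> days


# Hypothetical scenario: 100 TB over 100 Mbit/s, 1 Gbit/s and 10 Gbit/s links
for mbps in (100, 1_000, 10_000):
    print(f"100 TB over {mbps:>6,} Mbit/s: {transfer_days(100, mbps):6.1f} days")
```

Under these assumptions, 100TB needs roughly 116 days at 100Mbit/s and about 11.6 days at 1Gbit/s, so a two-week deadline leaves very little margin.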

One consequence of a high-profile failure such as Nirvanix is likely to be an increased tendency for enterprises to favour top-tier cloud service providers such as Amazon, Microsoft, Google, Rackspace and Terremark. Another is a demand for service-level agreements (SLAs) to explicitly spell out how data can be migrated between different cloud service providers should the need arise.

Because of these concerns, cloud storage gateways — software or hardware appliances that sit on the enterprise network and translate data between file- and block-based network protocols and cloud service providers' object storage architectures — are also likely to see increased usage. One of the most recent products in this area is IBM's InterCloud Storage, or ICStore, which it describes as a "storage software toolkit which provides a simple interface for clients to securely migrate data across multiple clouds from any vendor, while keeping it confidential and secure".
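The translation a gateway performs can be illustrated with a deliberately small Python sketch. It assumes a hypothetical in-memory object store (`InMemoryObjectStore`, standing in for a provider's put/get interface). It also assumes a hypothetical `BlockGateway` that exposes numbered fixed-size blocks to local clients while storing each block as a separate object. Neither is modelled on ICStore or any other product.

```python
from typing import Dict


class InMemoryObjectStore:
    """Hypothetical stand-in for a cloud provider's object store (key -> bytes)."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def exists(self, key: str) -> bool:
        return key in self._objects


class BlockGateway:
    """Hypothetical gateway: block reads/writes on one side, objects on the other."""

    def __init__(self, store: InMemoryObjectStore, volume: str, block_size: int = 4096) -> None:
        self.store = store
        self.volume = volume
        self.block_size = block_size

    def _key(self, lba: int) -> str:
        # Translate a logical block address into an object key
        return f"{self.volume}/block-{lba:012d}"

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("writes must be exactly one block")
        self.store.put(self._key(lba), data)

    def read_block(self, lba: int) -> bytes:
        key = self._key(lba)
        # Blocks never written read back as zeros, as on a fresh disk
        return self.store.get(key) if self.store.exists(key) else bytes(self.block_size)


store = InMemoryObjectStore()
gateway = BlockGateway(store, "vol1")
gateway.write_block(42, b"x" * 4096)
print(gateway.read_block(42)[:4], gateway.read_block(7)[:4])
```

A real gateway would typically add local caching, encryption and batching of small writes. The point here is only the mapping from block addresses to object keys.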

On-premise data management

Although some enterprise data is undoubtedly moving towards the public cloud, the major current trend, according to EMC's aforementioned survey, is the transformation from traditional non-virtualised environments to consolidated virtualised environments. EMC's figures show that more storage is now allocated in virtualised than in traditional environments, with the inflection point occurring during 2012:

EMC's survey results also suggest that internal private cloud storage will overtake traditional deployments by the end of 2016, with external cloud storage remaining in fourth place for the foreseeable future.

This move to consolidate and virtualise on-premise storage is part of a general trend to make more efficient use of data centre storage, in order to accommodate increasing volumes of data and reduce costs. Techniques such as deduplication (storing only one copy of a file and replacing duplicates with pointers), thin provisioning (presenting logical volumes that draw physical capacity from a common pool only as data is actually written, which maximises storage utilisation) and automatic tiered storage (moving data between different types of storage to optimise space, performance and cost requirements) will all make a contribution here.
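The following is a minimal Python sketch of deduplication, assuming SHA-256 content hashing is used to detect duplicates. It works on fixed-size chunks rather than whole files, which is a common variant. If the chunk size is at least as large as every file, it reduces to the one-copy-per-file scheme described above. `DedupStore` is a hypothetical name used for illustration.

```python
import hashlib
from typing import Dict, List


class DedupStore:
    """Content-addressed store: each unique chunk is kept once; files hold pointers."""

    def __init__(self, chunk_size: int = 4096) -> None:
        self.chunk_size = chunk_size
        self.chunks: Dict[str, bytes] = {}      # SHA-256 digest -> chunk data
        self.files: Dict[str, List[str]] = {}   # file name -> list of digests (pointers)

    def write(self, name: str, data: bytes) -> None:
        pointers = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # store the chunk only if unseen
            pointers.append(digest)
        self.files[name] = pointers

    def read(self, name: str) -> bytes:
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


# 1 MiB of data made of 256 distinct 4 KiB chunks, written under two names
data = b"".join(i.to_bytes(4, "big") * 1024 for i in range(256))
store = DedupStore()
store.write("a.bin", data)
store.write("b.bin", data)
print(f"logical: {2 * len(data):,} bytes, stored: {store.stored_bytes():,} bytes")
```

Writing the same 1MiB file twice stores 1MiB of chunk data plus two lists of pointers, halving the physical space used.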

New storage technologies

Although the hard drive has been with us since 1956, there's plenty of life left in the spinning magnetic disk, as we shall see. Other media have since appeared, of course — most notably low-power, high-performance flash, which is now becoming affordable enough to be widely deployed in the enterprise.

Flash

Many storage pundits have noted that 2013 was the year flash 'went mainstream', becoming a regular feature within servers, in hybrid hard disk/flash storage arrays and in all-flash arrays. All-flash arrays have hitherto been prohibitively expensive, but the introduction of enterprise-class solid-state drives based on consumer-grade multi-level-cell (MLC) flash has brought the cost down. In Dell's Compellent Storage Center 6.4, for example, expensive single-level-cell (SLC) flash drives are used for write-intensive 'tier 1' data, with more affordable, and more capacious, MLC drives handling read-intensive 'tier 2' data. In the optional hybrid model, infrequently accessed 'tier 3' data is allocated to cheaper, but slower, enterprise-class hard drives.
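The tier assignment described above can be sketched as a simple placement rule. This Python example is an illustrative assumption, not Dell's algorithm. It classifies each extent of data by its daily read and write counts, using a hypothetical activity threshold. Write-heavy hot data goes to SLC, read-heavy hot data goes to MLC, and cold data goes to hard disk.

```python
from dataclasses import dataclass


@dataclass
class Extent:
    name: str
    reads_per_day: int
    writes_per_day: int


# Hypothetical threshold separating 'hot' from 'cold' data
HOT_IO_PER_DAY = 1_000


def choose_tier(e: Extent) -> str:
    """Pick a tier from access activity and read/write mix."""
    total = e.reads_per_day + e.writes_per_day
    if total < HOT_IO_PER_DAY:
        return "tier 3: enterprise hard disk"
    if e.writes_per_day > e.reads_per_day:
        return "tier 1: write-intensive SLC flash"
    return "tier 2: read-intensive MLC flash"


for extent in [Extent("transaction log", 200, 5_000),
               Extent("product catalogue", 8_000, 100),
               Extent("2009 archive", 3, 0)]:
    print(f"{extent.name}: {choose_tier(extent)}")
```

A real tiering engine would re-evaluate placement periodically from accumulated I/O statistics and move data in the background. The fixed threshold here stands in for that policy.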

The flash market is currently undergoing a shake-up as pioneering startups like Fusion-io and Violin Memory come under pressure from leading incumbents of the storage world such as EMC and NetApp, which are rapidly incorporating flash into their existing product lines. We can expect plenty of activity in this area in 2014.

Hard drives

As noted earlier, there's plenty of life left in the traditional hard disk drive. Its combination of cost per gigabyte and performance makes it the workhorse storage medium of choice for cloud providers, who buy hard drives in serious bulk every year. The price erosion for hard disk storage over the years is startling: according to statisticbrain.com, the average cost per gigabyte fell from $437,500 in 1980 to $0.05 in 2013. Recent figures from online backup provider Backblaze also show the effect of the October 2011 floods in Thailand on hard drive supply and pricing.
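The statisticbrain.com figures imply a remarkably steep and sustained decline. The Python calculation below gives the average annual fall and the implied halving time. It assumes smooth compound decline between the two endpoints; the real curve was lumpier, as the Thailand floods show.

```python
import math

COST_1980 = 437_500.0   # US$ per GB (statisticbrain.com)
COST_2013 = 0.05        # US$ per GB (statisticbrain.com)
YEARS = 2013 - 1980

# Constant yearly multiplier that takes the 1980 price to the 2013 price
annual_factor = (COST_2013 / COST_1980) ** (1 / YEARS)
annual_decline_pct = (1 - annual_factor) * 100
halving_years = math.log(2) / -math.log(annual_factor)

print(f"Average decline: {annual_decline_pct:.1f}% per year")
print(f"Price per GB halves roughly every {halving_years:.1f} years")
```

The result is an average fall of about 38% a year, with the price per gigabyte halving roughly every 1.4 years.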

New technologies also continue to extend the life of the hard disk. These include Shingled Magnetic Recording (SMR), which overlaps adjacent write tracks like roof shingles to roughly double data density, to around 3 trillion bits per square inch. Because rewriting a track in place also overwrites part of the neighbouring track that overlaps it, random writes can force the drive to rewrite a whole band of tracks. High-capacity SMR drives are therefore best suited to backup, archive and other infrequently accessed data (so-called cold storage), which is characterised by sequential writes and random reads rather than random-access read/write cycles. For the same reason, SMR drives are more likely to be used in enterprise and cloud-provider data centres than in client devices.
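Why SMR favours sequential writes can be shown with a simplified model. The Python sketch below assumes a band of shingled tracks written in order, each partly overlapping its predecessor. It counts the track writes needed to update a single track. The 20-track band size is hypothetical, and real drives typically use caching and remapping to hide some of this cost.

```python
APPEND_COST = 1  # writing the next free track disturbs nothing already written


def in_place_rewrite_cost(band_tracks: int, track: int) -> int:
    """Track writes needed to rewrite one track in place within a shingled band.

    Rewriting track i clobbers part of track i+1 (which overlaps it), so i+1
    must be rewritten too, and so on to the end of the band.
    """
    if not 0 <= track < band_tracks:
        raise ValueError("track outside band")
    return band_tracks - track


def average_random_update_cost(band_tracks: int) -> float:
    """Mean cost of updating a uniformly random track in the band."""
    costs = [in_place_rewrite_cost(band_tracks, t) for t in range(band_tracks)]
    return sum(costs) / band_tracks


BAND = 20  # hypothetical band size
print(f"Sequential append: {APPEND_COST} track write")
print(f"Random in-place update: {average_random_update_cost(BAND)} track writes on average")
```

In this model, appending costs one track write. By contrast, a random in-place update in a 20-track band costs 10.5 track writes on average.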

Helium-filled hard drives are another development that should prolong the spinning disk's life. Because helium has only 14 percent of the density of air, there is less drag on the spinning platters, and therefore less motor power is required to drive them. This in turn allows more platters to be fitted into a standard 3.5-inch hard drive enclosure — seven, compared to five in a standard air-filled drive. High-capacity, low-power drives are exactly what's required in an efficient data centre, which is where these new drives are likely to find a home. HGST has recently shipped the first helium drive, the 6TB Ultrastar He6.
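The '14 percent' figure follows from the ideal gas law, ρ = PM/(RT). At the same temperature and pressure, a gas's density is proportional to its molar mass, so the ratio of densities is simply the ratio of molar masses. Here is a quick Python check using standard molar masses:

```python
# Ideal gas: rho = P * M / (R * T). At equal P and T, rho is proportional to M.
M_HELIUM = 4.0026   # g/mol
M_AIR = 28.97       # g/mol, mean molar mass of dry air

ratio = M_HELIUM / M_AIR
print(f"Helium density relative to air: {ratio:.1%}")
```

This gives about 13.8%, consistent with the figure quoted.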

Optical disk

Other technologies that may have more life than previously thought include the optical disk, thanks to Millenniata's '1,000-year' M-DISC. Data is written to this 4.7GB DVD by physically altering the recording layer, creating permanent pits in the disc. Because it's unaffected by temperature, humidity or sunlight (unlike regular recordable optical media, which use organic dyes in the recording layer), M-DISC is well suited to long-term, write-once archiving.

M-DISC media are readable by current DVD drives, although not all drives can write to the discs (a list of compatible drives is provided on Millenniata's website). A 10-pack of M-DISC media costs $29.99, which equates to a per-gigabyte cost of $0.638.
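The per-gigabyte figure is simple division. The small Python helper below makes the calculation explicit and compares the result with the 2013 hard-disk average quoted earlier. The helper name is our own.

```python
def cost_per_gb(pack_price: float, discs: int, gb_per_disc: float) -> float:
    """Price per gigabyte for a pack of identical discs."""
    return pack_price / (discs * gb_per_disc)


m_disc = cost_per_gb(29.99, 10, 4.7)   # 10-pack of 4.7GB M-DISC DVDs
HDD_2013 = 0.05                        # average hard-disk cost per GB in 2013

print(f"M-DISC: ${m_disc:.3f}/GB")
print(f"About {m_disc / HDD_2013:.0f}x the average hard-disk cost per GB")
```

At these prices, M-DISC costs roughly 13 times as much per gigabyte as average hard disk storage.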

Tape

Another format that's been around for decades is tape, and as with hard disks, there are interesting recent developments that should keep it relevant in the cloud computing era. Tape's affordability, longevity, reliability and low power consumption have long made it a natural medium for backup and archiving, but accessing and managing files on tape was slow and convoluted compared to hard-disk-based storage. That could change as tape technology continues to develop (LTO-6, the sixth generation of the LTO format, stores up to 2.5TB native, with a maximum uncompressed data transfer speed of 160MB/sec) and as new file systems such as the IBM-developed Linear Tape File System (LTFS) make tape easier to access.
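Capacity and transfer rate together determine how long it takes to fill a cartridge. The calculation below, in Python, assumes the drive streams continuously at its maximum native rate; in practice, throughput depends on the host keeping the drive fed with data.

```python
NATIVE_CAPACITY_BYTES = 2.5e12   # LTO-6 native capacity: 2.5 TB
NATIVE_RATE_BPS = 160e6          # maximum uncompressed transfer speed: 160 MB/s

seconds = NATIVE_CAPACITY_BYTES / NATIVE_RATE_BPS
print(f"Filling one LTO-6 cartridge at full speed: {seconds / 3600:.1f} hours")
```

That works out at 15,625 seconds, or about 4.3 hours per cartridge.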

Released in 2010, the open LTFS standard stores file metadata in a separate partition on the tape, providing drag-and-drop functionality under Linux, Mac OS X and Windows and effectively turning a tape library into a near-line network-attached storage (NAS) array. Technologies such as LTFS should make it easier for data centre managers to orchestrate storage across flash, hard disk and tape in the most cost-effective and power-efficient manner — a major goal of the multi-vendor Active Archive Alliance.
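The benefit of keeping metadata in its own partition can be illustrated with a toy model. The Python sketch below is a hypothetical simplification, not the LTFS on-tape format. A small index partition maps each file name to the position of its records in an append-only data partition. As a result, listing files never requires winding through the data itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ToyLtfsTape:
    """Toy two-partition tape: a metadata index plus append-only data records."""

    data_partition: List[bytes] = field(default_factory=list)
    # file name -> (first record number, record count)
    index_partition: Dict[str, Tuple[int, int]] = field(default_factory=dict)

    def write_file(self, name: str, data: bytes, record_size: int = 512) -> None:
        start = len(self.data_partition)
        records = [data[i:i + record_size] for i in range(0, len(data), record_size)] or [b""]
        self.data_partition.extend(records)          # data is only ever appended
        self.index_partition[name] = (start, len(records))

    def list_files(self) -> List[str]:
        # Reads only the small index partition, not the data partition
        return sorted(self.index_partition)

    def read_file(self, name: str) -> bytes:
        start, count = self.index_partition[name]
        return b"".join(self.data_partition[start:start + count])


tape = ToyLtfsTape()
tape.write_file("a.txt", b"hello")
tape.write_file("b.bin", bytes(1500))
print(tape.list_files(), tape.index_partition["b.bin"])
```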

Outlook

Demand for storage can only increase, as ever more digital devices store ever more varieties of data. This puts a premium on technologies that promote storage efficiency by boosting capacity and performance, reducing power consumption and cost, or any combination of these. Increased data volumes, and regulations that demand that more data be retained for possible future inspection, also highlight the vital importance of longer-term backup/recovery and archiving technologies.

These priorities are evident in EMC's 2013 survey, which asked IT managers and professionals to identify the most important storage technology segments to their organisations. Backup, recovery and archiving head up the list, while efficiency-promoting solutions like automated storage tiering and storage virtualisation are also prominent:

Although flash drives have a relatively low rating in this chart (9.6%), EMC's survey also reports that 56.5 percent of respondents already implement this technology — more evidence that flash has now entered the mainstream.