The 21st Century Data Center: An overview

The 21st century data center has its roots in the mainframe-housing computer room of the 1960s and 70s. Both are large, well-secured facilities built to house complex and expensive IT infrastructure that requires sophisticated power and cooling systems. The modern data center began life in the 1990s as banks of servers within companies' own premises (often replacing mainframes in former computer rooms). Next came off-site colocation facilities where companies could house their servers in a variety of spaces, such as private suites, lockable cages or racks. Finally we have today's purpose-built palaces of processing, storage and connectivity, which typically deliver some combination of colocation, managed hosting and (public, private and/or hybrid) cloud services.

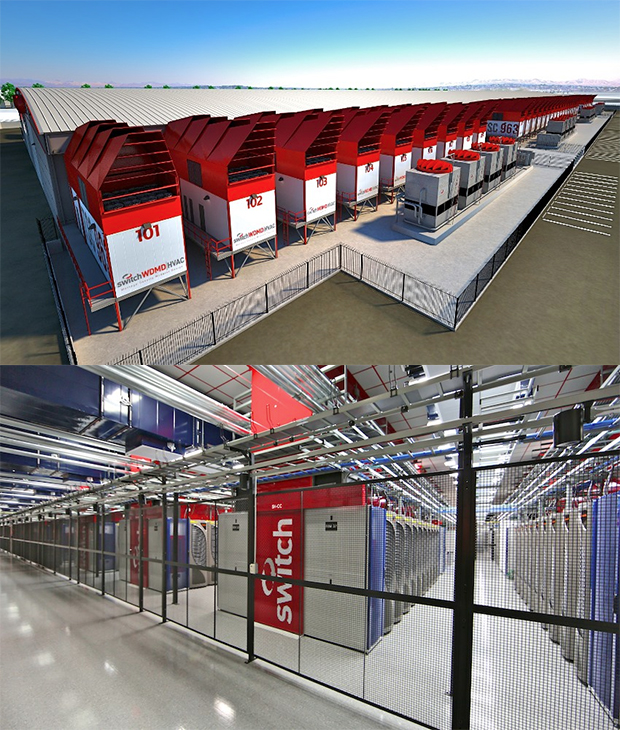

Some of these data centers are truly gargantuan. The world's largest, in terms of available power supply and cooling system capacity, is Switch Communications Group's SuperNAP-7 in Las Vegas, a colocation facility covering 407,000 square feet (37,811 square metres, or 3.78 hectares, or 9.34 acres). Such is the cloud-computing-driven demand for high-density colocation that Switch has announced expansion plans for up to 2.2 million square feet (204,387 square metres, or 20.4 hectares, or 50.5 acres) of data center space, beginning with SuperNAP-8 (expected to open in May) and then SuperNAP-9, which will break ground in 2014.

These days, the 'data center user' ranges from massive IT infrastructure and services providers like Amazon and Google to any business user or consumer who connects to the internet. However, for the purposes of this article, a typical user is an IT manager in a business with an on-premise data center running a mixture of traditional enterprise applications and virtualised workloads that's already using some cloud services — most likely SaaS applications — and is considering the next move: expand on-premise capacity, move more workloads to the public cloud, or adopt a hybrid strategy?

Data center usage is currently undergoing a transformation due to the increasing use of 'cloud' (i.e. outsourced, external) infrastructure, services and applications. According to Cisco, nearly two-thirds (64 percent) of all data center traffic will be processed in cloud facilities by 2016, rather than in 'traditional' (i.e. on-premise) data centers. In 2011, by contrast, the estimated split was 61 percent traditional and 39 percent cloud.

Data center design and construction

A typical data center is an industrial building that provides floor space for housing IT equipment, along with all of the necessary power distribution, cooling, cabling, fire suppression and physical security systems. Data centers are normally located in places where the cost of electricity and land is low, but where there's also a sufficient pool of labour to staff the facility (unless it's a so-called 'lights-out' data center that's administered remotely and has next to no on-site staff).

Data centers are classified on a four-level Tier system based on work by the Uptime Institute, a data center industry consortium, and ratified by the Telecommunications Industry Association as the TIA-942 standard. For each tier the standard specifies the architectural, security, mechanical and telecoms requirements needed to achieve a given level of availability: 99.671% (28.8 hours downtime per year) for Tier 1; 99.741% (22h downtime/year) for Tier 2; 99.982% (1.6h downtime/year) for Tier 3; and 99.995% (0.4h downtime/year) for Tier 4.
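The downtime figures follow directly from the availability percentages. A minimal sketch of the conversion, assuming a non-leap year of 8,760 hours (the function and constant names are illustrative, not part of the TIA-942 standard):

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored (assumption)

# Availability targets per tier, as quoted in the text above.
TIER_AVAILABILITY_PERCENT = {1: 99.671, 2: 99.741, 3: 99.982, 4: 99.995}


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the hours per year a facility may be down at a given availability."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be a percentage between 0 and 100")
    return (100.0 - availability_percent) / 100.0 * HOURS_PER_YEAR


if __name__ == "__main__":
    for tier, availability in TIER_AVAILABILITY_PERCENT.items():
        hours = annual_downtime_hours(availability)
        print(f"Tier {tier}: {availability}% available, about {hours:.1f} h downtime/year")
```

The results land close to the rounded figures quoted for each tier; small differences come down to rounding.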

Another widely quoted figure relating to data centers is the PUE or Power Usage Effectiveness. Developed by The Green Grid consortium, PUE is a simple ratio of the total power used by a data center to the power used by the IT equipment. The ideal PUE ratio is 1.0, with real values ranging from over 2.5 down to around 1.1 (see below). Given the size of its data center operation, it's no surprise to find Google in the forefront of power efficiency: the search giant reports a 'comprehensive' PUE of 1.12 across all of its data centers for Q4 2012 ('comprehensive' because Google includes all sources of power overhead and takes measurements all year round). Another way of expressing PUE is as its reciprocal, which is known as Data Center Infrastructure Efficiency or DCiE. Google's score on this metric is 0.893, or 89.3 percent.
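For readers who want to check the arithmetic, here is a short sketch of both metrics. The facility power figures in the example (1,120kW total, 1,000kW IT load) are hypothetical values chosen to reproduce a PUE of 1.12; they are not Google's actual measurements.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def dcie(pue_value: float) -> float:
    """Data Center Infrastructure Efficiency: the reciprocal of PUE."""
    if pue_value <= 0:
        raise ValueError("PUE must be positive")
    return 1.0 / pue_value


if __name__ == "__main__":
    example_pue = pue(total_facility_kw=1120.0, it_equipment_kw=1000.0)  # hypothetical loads
    print(f"PUE:  {example_pue:.2f}")
    print(f"DCiE: {dcie(example_pue):.1%}")
```

A PUE of 1.12 therefore means that for every watt delivered to IT equipment, a further 0.12W goes on cooling, power distribution and other overheads.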

To gauge the state of play in data-center-land, the Uptime Institute kicked off an annual survey in 2011. Its 2012 Data Center Industry Survey, conducted in March/April, is based on responses from 1,100 facilities managers, IT managers and C-level executives from North America (50%), Europe (23%), Asia (14%) and Central/South America (7%).

Among the survey's key findings was that 30 percent of respondents expected to run out of data center capacity in 2012, with the majority planning to keep current sites going by consolidating servers and upgrading facilities infrastructure. Backing up this point, compared to the 2011 survey, 10 percent fewer respondents planned to build a new data center, while 10 percent more planned to push more workloads to the cloud.

Key drivers towards cloud adoption identified by the survey were cost reduction (27%), scalability (23%), customer/user demand (13%) and speed of deployment (13%). The main impediments to cloud adoption were security concerns (64%), compliance/regulatory issues (27%), cost (24%) and lack of internal cloud computing management expertise (20%).

The Uptime Institute also found that organisations were making more precise PUE measurements in 2012 than the previous year, with the average reported PUE falling in the 1.8-1.89 range. Power-saving strategies revolved around hot aisle/cold aisle containment and raised server inlet air temperatures (finding the sweet spot between IT equipment fan power and cooling energy).

Other trends noted in the survey include increased interest in prefabricated modular data centers or components (9% deployed, 8% planning to deploy, 41% considering) and the beginnings of a move towards implementing Data Center Infrastructure Management (DCIM) tools (see the following pages for more on these trends).

How much data?

How much data flows through the world's data centers, and what are the trends? This question has been addressed since 2011 by Cisco, with its annual Global Cloud Index (GCI). In its 2012 report, using network data from 10 enterprise and internet data centers, Cisco forecasts that global data center traffic will reach 554 exabytes (EB) per month by 2016, up from 146EB per month in 2011 (1 exabyte = 1,000 petabytes = 1,000,000 terabytes). In annual terms, that's a rise from 1.8 zettabytes (ZB) in 2011 to 6.6ZB in 2016, a CAGR of 31 percent.
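The growth rate can be reproduced from the monthly traffic figures. This is a minimal sketch that assumes the standard compound annual growth rate formula over the five years from 2011 to 2016; the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def monthly_eb_to_annual_zb(exabytes_per_month: float) -> float:
    """Convert monthly traffic in exabytes to annual traffic in zettabytes (1ZB = 1,000EB)."""
    return exabytes_per_month * 12 / 1000.0


if __name__ == "__main__":
    traffic_2011_eb, traffic_2016_eb = 146.0, 554.0  # EB per month, from the text
    print(f"2011: {monthly_eb_to_annual_zb(traffic_2011_eb):.1f}ZB per year")
    print(f"2016: {monthly_eb_to_annual_zb(traffic_2016_eb):.1f}ZB per year")
    print(f"CAGR: {cagr(traffic_2011_eb, traffic_2016_eb, years=5):.0%}")
```

Because the ratio of the two values is the same whether traffic is measured per month or per year, the growth rate does not depend on which unit is used.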

Where does all this data come from? Cisco divides data center traffic (both business and consumer) into three broad classes: traffic that remains in the data center; traffic that flows between data centers; and traffic that flows from data centers to users over the internet or IP WAN. According to Cisco, the majority (76 percent) of traffic is the kind that remains within the data center.

When server workloads are considered, the momentum towards the cloud is clear, with Cisco estimating 180.6 million installed workloads in 2016, split 62/38 percent between cloud and traditional data centers, compared to 71.1 million workloads in 2011 with a 30/70 percent cloud/traditional split.

The average number of workloads per physical server in cloud data centers is expected to grow from 4.2 in 2011 to 8.5 in 2016; for the less efficiently utilised servers in traditional data centers, the estimated and predicted numbers are 1.5 in 2011 and 2 in 2016 respectively.
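Combining the workload totals with these densities gives a rough sense of how many physical servers each model implies. The sketch below is a back-of-envelope calculation based only on the figures quoted above; the resulting server counts are derived estimates, not numbers published by Cisco, and the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class YearFigures:
    total_workloads_m: float    # installed workloads, in millions
    cloud_share: float          # fraction of workloads in cloud data centers
    cloud_density: float        # workloads per physical server (cloud)
    traditional_density: float  # workloads per physical server (traditional)


# Figures as quoted in the text above.
FIGURES = {
    2011: YearFigures(71.1, 0.30, 4.2, 1.5),
    2016: YearFigures(180.6, 0.62, 8.5, 2.0),
}


def implied_servers_m(figures: YearFigures) -> tuple[float, float]:
    """Return (cloud, traditional) implied physical servers, in millions."""
    cloud_workloads = figures.total_workloads_m * figures.cloud_share
    traditional_workloads = figures.total_workloads_m - cloud_workloads
    return (
        cloud_workloads / figures.cloud_density,
        traditional_workloads / figures.traditional_density,
    )


if __name__ == "__main__":
    for year, figures in FIGURES.items():
        cloud, traditional = implied_servers_m(figures)
        print(f"{year}: ~{cloud:.1f}m cloud servers, ~{traditional:.1f}m traditional servers")
```

On these assumptions, cloud facilities would carry most of the workloads in 2016 on far fewer physical servers than traditional data centers, which is the utilisation gap the paragraph above describes.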

Cisco also predicts a 'changing of the guard' in terms of the regional distribution of cloud-based workloads: in 2011 North America led the way with 37.8 percent of 21.4m workloads, with Asia Pacific on 31.3 percent; in 2016 Asia Pacific is expected to host 36.2 percent of 112.1m workloads, with North America in second place on 26.4 percent.

What's clear is that, for the foreseeable future, organisations will be employing a mix of on-premise and outsourced IT infrastructure, with the major underlying trend being increased use of services located in public cloud data centers, particularly in the Asia Pacific region.

Rebooting the data center

How can data centers be made more efficient, scalable, flexible and secure? Specifically, how can IT service providers achieve lower construction costs, a PUE nearer 1.0, more computing power per watt, lower latency for users, a smaller carbon footprint — and, above all, how can they manage these complex systems better? Here are some of the trends that will underpin the 21st century data center (or Data Center 2.0).

Microservers

For certain kinds of high-volume, low-compute-power workload — such as web page serving, search engine query execution, or parallelised data processing tasks — a new species of server, the microserver, may occupy less data center space and consume less power than the traditional Xeon- or Opteron-based enterprise server.

HP is among the leading vendors in the emerging microserver space with its Project Moonshot. The first Moonshot server, announced in November 2011, was an ARM-based SoC platform called Redstone, which HP installed as a demonstration system in its Discovery Lab. Based on an existing ProLiant SL6500 chassis with server trays that can hold a mixture of compute or storage cartridges, the Redstone Development Server Platform delivers four times the density of the traditional ProLiant server (accommodating up to 72 compute nodes per tray, or 288 per 4U chassis) while consuming a tenth of the power, according to HP.

In June 2012 HP changed course, announcing that the first production Moonshot microservers, codenamed Gemini, would use compute cartridges based on Intel's 'Centerton' Atom processors — specifically, the Atom S1200 series with TDP (Thermal Design Power) ratings of 6.1-8.5W. Originally scheduled to ship late last year, Gemini servers are now expected in Q2 2013. ARM is by no means out of the Moonshot picture though: the latest recruit to HP's Pathfinder Partner Program, which helps vendors develop for the Moonshot platform, is Texas Instruments with its ARM-based (Cortex-A15 MPCore) Keystone II SoC. Whether Keystone II-based cartridges will appear in a future generation of Gemini servers is not yet clear, however.

Other vendors are involved of course — notably Dell with its ARM-based Copper servers. And last year AMD bought microserver vendor SeaMicro to underpin its Data Center Server Solutions business. SeaMicro's Atom-supporting SM15000 server recently achieved certification for Cloudera's CDH4, a widely deployed Apache Hadoop distribution used for Big Data analytics.

Although microservers are an interesting development, they are not applicable to all kinds of workloads, and are unlikely to revolutionise the data center anytime soon. Analyst IHS iSuppli, for example, expects shipments to hit 1.2 million units by the end of 2016, just 10 percent of the total server market.

Solid-state storage

Fast, low-power, physically resilient solid-state storage is familiar in client devices — the flash memory in our smartphones, and the SSDs in our tablets and ultrabooks for example. SSD arrays are also increasingly making their way into the data center, where their performance and power efficiency are particularly appealing. However, as with microservers, solid-state storage is not universally applicable in this environment.

The main inhibitor for large-scale SSD uptake is the price, which although dropping remains significantly higher than traditional hard disk-based solutions. Another potential problem with SSDs is limited 'write endurance' — failures in blocks of non-volatile NAND flash storage after the maximum number of P/E (Program/Erase) cycles has been exceeded. This has been addressed by Intel with its High Endurance Technology (HET), which combines hardware and firmware enhancements to improve enterprise-class SSD endurance. HET includes 'background data refresh', which moves data around during inactive periods to avoid areas of heavy read activity.

In Intel's tests (see above), first in a controlled environment and then in a production data center (running a security compliance database), SSD arrays delivered the following benefits over comparable enterprise-class (15K rpm) hard-disk-based storage: up to 5x performance increase on random disk I/O tasks; reduced read/write latency (by up to 10x/7x respectively) and reduced maximum latency (by up to 8x); faster transition from idle to active state and no increase in seek time as drive capacity is approached; and 50 percent less power consumption plus a third less heat production. Intel also suggested that the higher initial (3x) cost of an SSD solution could be justified thanks to: reduced IT time spent handling unwieldy I/O queue depths; no backlogs in recording monitoring data (eliminating a potential compliance issue); fewer delays in patching systems; performance and capacity to handle workloads for 3-5 years; and a simpler setup than a traditional SAN or NAS solution.

Enterprise-class SSD storage is not only available in SATA-based arrays such as EMC's new XtremIO product, but also as PCI cards from a number of vendors, including Fusion-io and LSI. An alternative approach is taken by X-IO, which specialises in hybrid storage systems that combine SSDs and hard drives in a single pool of storage, with firmware (Continuous Adaptive Data Placement) that places data on SSD only if measurable performance gains will be realised. According to Mike Wills, CEO of RTW Hosting, replacing a traditional SAN with a hybrid array such as X-IO's can deliver 15-20 times the performance, along with a 60 percent improvement in power efficiency. Where an optimised balance of read/write performance and storage capacity is required, the tiered hybrid approach may be the way to go.

The software-defined data center

Server and storage virtualisation are mature concepts, but for maximum data center efficiency and flexibility, the third major IT component — networking — arguably needs to undergo a similar process of decoupling the control layer from the physical hardware. Software-Defined Networking (SDN) is a new field, and the major networking players are still working out their responses to it — (EMC-owned) VMware's purchase of SDN specialist Nicira in July 2012 was a key move in this respect.

The standard-setting and promotion body for SDN is the Open Networking Foundation (ONF) and its major vehicle is the OpenFlow protocol. In April 2012, Google — a founding ONF member — disclosed details of a large-scale OpenFlow implementation on its datacenter backbone network, which carries the search giant's internal traffic (Google's other, internet-facing, backbone network carries user traffic).

Google's SDN experience included faster deployment time, the ability to emulate the backbone network in software for testing, easy upgradability, significant improvements in network utilisation (close to 100 percent, compared to an industry average of 30-40%) and a generally high degree of stability. Echoing its strategy with servers, Google built its own network switches for this project — something that, if widely adopted, will make vendors of traditional data center networking equipment (notably Cisco) nervous.

Modular data centers

Traditional custom-built 'bricks and mortar' data centers are costly and time-consuming to build (and expand when capacity becomes limited). Prefabricated modular data centers — often fitted into standard shipping containers containing servers, storage, networking and cooling equipment — allow companies to build the capacity they currently require quickly, with the ability to deploy more modules rapidly and cost-effectively as demand grows.

The Uptime Institute survey referred to earlier noted plenty of interest among its respondents in prefabricated modular data centers. However, this is a trend that's clearly in its early stages: 52 percent of respondents said they were still considering traditional builds for future data center expansion, with 19 percent looking at prefabricated components with little or no traditional construction; meanwhile, 21 percent said that the fully containerised data center was an interesting option.

Only 11 percent of respondents professed themselves 'extremely confident' about the maturity of the modular data center market in 2012, although 71 percent agreed that 'keeping pace with changing demands of successive generations of IT equipment' was more important than 'long useful life of data center infrastructure for a constant set of IT equipment demands' (26%).

For those who are interested, there are plenty of vendors queuing up to offer modular data centers, including AST, Cisco, Colt, Dell, HP, IBM, IO and Toshiba, among others.

The green data center

Traditional data centers consume large amounts of power and other resources (such as cooling water). Since data center capacity is set to continue growing, the pressure is on for businesses and service providers to be good environmental citizens and reduce the resource footprints of their facilities.

The Green Grid-inspired PUE metric, now widely used and quoted, has focused attention on power efficiency, with state-of-the-art data centers now operating at a PUE of around 1.1 — that is, a DCiE (Data Center Infrastructure Efficiency) of 90.9 percent.

Trends noted earlier, such as the move from traditional on-premise data centers (with low server utilisation) to modern cloud facilities (with high server utilisation), the increasing use of low-power microservers and solid-state (or hybrid) storage, plus power-efficient modular data centers such as HP's POD 240a (dubbed the 'EcoPOD'), will all make for more power-efficient data centers. Another initiative, the Facebook-inspired Open Compute Project, which promotes simplified open-source server and other IT infrastructure designs, may also contribute to improved data center power efficiency in due course, although it's still early days for this initiative.

Data center location can be a crucial consideration when it comes to environmental credentials. Last year, for example, ZDNet visited a modular data center in Iceland, for which operator Verne Global has secured a long-term, low-cost, 100 percent green (renewable hydroelectric/geothermal) electricity supply. Iceland's climate also makes it possible to use free cooling — using outside air to cool the IT equipment rather than installing power-consuming chiller units.

Some data center cooling schemes verge on the exotic. Intel, for example, has experimented with submerging servers in vats of mineral oil (see this video). Other more conventional ideas from Intel are summarised in this infographic:

Data Center Infrastructure Management (DCIM)

In order to run an IT operation efficiently and make informed decisions about future data center capacity, you need current and predicted data on the range of application workloads in your business, their capacity requirements and the comparative costs of running them on-premise, in the public cloud or as a hybrid solution. Otherwise you run the risk of over-provisioning and wasting money, or under-provisioning and suffering performance bottlenecks or outages. Both are undesirable and, for the IT manager, job-threatening outcomes.

The tools that help you gather and interpret these data go under the general heading of Data Center Infrastructure Management (DCIM). This is a recent and rapidly evolving sector with solutions ranging from those that concentrate on managing the data center's physical infrastructure (IT hardware, power and cooling systems, and so on), to more sophisticated solutions that not only provide an overview of the physical, virtual and cloud infrastructure but also offer predictive analytics and 'what-if' scenario planning. This latter category is sometimes called DCPM (Data Center Predictive Modelling).

Forrester Research's 2012 market overview of DCIM solutions identifies eight core functions: inventory and discovery; maintenance and change control; data collection; consolidated monitoring and display dashboard; alerts; control; trend analysis; and the ability to model future solutions for implementation. Emerging functions identified by Forrester are: power planning and capacity based on actual, not rated, usage; workload-aware modelling; network capacity modelling; and integration of DCIM with conventional IT management tools.

Forrester identifies three groups of DCIM vendors: Data center facility and infrastructure vendors (example: Emerson); IT management vendors (example: CA Technologies); and Systems hardware vendors (example: HP).

For more analyst insight on the DCIM market, see the reports from Gartner (June 2012) and 451 Research (December 2011).

Cloud platforms and open standards

For most businesses, the path to the cloud begins in an on-premise data center, which typically runs a mixture of traditional, siloed, enterprise applications and virtualised 'private cloud' workloads. The latter are often based on VMware's proprietary vCloud technology.

When it comes to exploiting public cloud services, either for 'cloudbursting' (managing load spikes) in a hybrid cloud architecture or as a brand-new deployment, the issue of vendor lock-in raises its head. No-one wants to entrust large parts of their IT infrastructure to one vendor's cloud platform, only to find that it's impossible to migrate to another vendor's cloud should the need arise. This is the impetus behind open-source cloud platforms.

The best known is OpenStack, a Linux-based open-source software stack with a supporting Foundation that looks after promotion and governance. OpenStack was initiated in 2010 by hosting provider Rackspace and NASA, and now boasts wide industry support — including giants like Cisco, Dell, HP and IBM.

Other notable open-source cloud platforms are Apache CloudStack (originally developed by Citrix, whose implementation is CloudPlatform), Eucalyptus, OpenNebula and the DMTF's OVF 2.0.

There's a lot of interest in OpenStack, in particular, which is widely positioned as the main rival to VMware (which powers public and hybrid clouds via its service provider partners). Despite these ongoing 'cloud wars', it's worth noting that the biggest public cloud platforms — Amazon Web Services, Google Compute Engine and Microsoft Windows Azure — are all largely proprietary in nature.

An open, fully interoperable set of cloud software platforms and APIs will remove a potential barrier to the wider adoption of cloud technology, allowing more businesses to reap its associated benefits — on-demand self service, rapid scalability and transparent pricing.

Conclusion

Data centers are complex and expensive facilities, and running them efficiently requires multiple skills in the areas of IT infrastructure management, energy management and building management. Little wonder that, as we've seen, businesses are increasingly outsourcing these tasks.

Whether you maintain a traditional on-premise data center, outsource your IT to the public cloud or adopt a hybrid strategy depends on the mix of workloads involved. If you're migrating an existing on-premise workload to the cloud, or looking to handle load spikes, a hybrid cloud solution might be appropriate. If you're deploying a brand-new workload, on the other hand, it could be better to go all-in with the cloud from the start. However, if you're nervous about entrusting sensitive or mission-critical business processes and data to the cloud (for security, compliance or reliability reasons, for example), you may want to keep them under your control in your on-premise data center.

The 21st century data center, be it on-premise or outsourced, will increase its efficiency and flexibility via a combination of virtualisation and consolidation throughout the IT stack (server, storage and networking), the increasing use of low-power hardware such as microservers and solid-state storage, modular data center construction, green power supply and cooling technologies, and DCIM software that orchestrates data center management and models future capacity expansion scenarios.

If you make use of any form of outsourcing, remember that the cloud isn't some magical location where everything works perfectly all of the time. Due diligence is required: check service providers' SLAs, find out where your data will reside and how easy it is to move around, discover what security and backup provisions are available, and remain on the lookout for any hidden costs.