The many-core performance wall

Many-core chips are the great hope for more performance but Sandia National Lab simulations show they are about to hit a memory wall. How bad is it?

Some background: Memory bandwidth is the limiting performance factor in CPUs. If you can't feed the beast, it stops working. Simple as that.

John von Neumann - your PC/Mac is a von Neumann architecture machine - made the point in his very technical First Draft of a Report on the EDVAC (pdf):

. . . the main bottleneck, of an automatic very high speed computing device lies: At the memory.

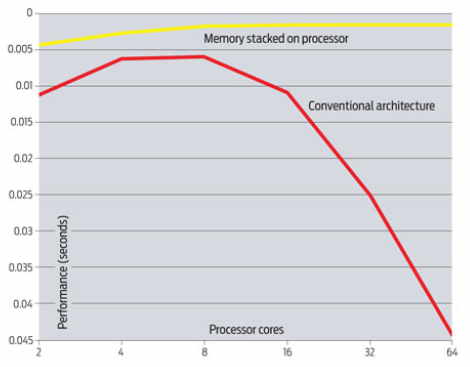

Here are the ugly results of Sandia's simulations:

Performance roughly doubles from 2 cores to 4 (yay!), near flat to 8 (boo!) and then falls (hiss!).

Did Pink Floyd forecast this? Chip packages support just so many pins and so much bandwidth. Transistors per chip double every couple of years - but the number of pins doesn't.

Professors William Wulf and Sally McKee named it "the memory wall" in their 1994 paper Hitting the Memory Wall: Implications of the Obvious (pdf) saying:

We all know that the rate of improvement in microprocessor speed exceeds the rate of improvement in DRAM memory speed - each is improving exponentially, but the exponent for microprocessors is substantially larger than that for DRAMs. The difference between diverging exponentials also grows exponentially; so, although the disparity between processor and memory speed is already an issue, downstream someplace it will be a much bigger one.

According to an article in IEEE Spectrum, that time is almost upon us. With cores per processor doubling every 2-3 years - and graphics chips moving faster - we don't have long to wait.
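Wulf and McKee's point about diverging exponentials is easy to check with a few lines of Python. This is only a sketch: the 50% and 7% annual growth rates are placeholder assumptions picked for illustration, not figures from Sandia or from this post, and `speed_gap` is a made-up helper name.

```python
def speed_gap(years, cpu_growth=0.50, dram_growth=0.07):
    """Ratio of processor speed to DRAM speed after `years`.

    Both start at parity (ratio 1.0). The growth rates are
    illustrative assumptions, not measured values.
    """
    return ((1 + cpu_growth) / (1 + dram_growth)) ** years


# The gap is itself an exponential: equal steps in time
# multiply the gap by the same factor.
for year in (0, 5, 10, 15):
    print(f"year {year:2d}: processor is {speed_gap(year):6.1f}x faster than DRAM")
```

Change the two rates to whatever you believe and the shape stays the same: as long as processors improve faster than DRAM, the gap compounds.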

The memory wall's impact is greatest on so-called informatics applications, where massive amounts of data must be processed. Like sifting through petabytes of remote sensing data to find bad guys with nukes.

Can this be fixed? Sandia is investigating stacked memory architectures, popular in cell phones for space reasons, to get more memory bandwidth. But as the simulation shows, that doesn't improve performance.

Intel needs to pick up the pace. Nehalem processors are the first from Intel with an on-chip memory controller and the new QuickPath Interconnect (QPI). But server-class Nehalems are now limited to 2 QPI links for a total theoretical bandwidth of only 50 GB/sec. Faster, pussycat, faster!
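To see why a fixed pipe hurts as cores multiply, here is a back-of-the-envelope sketch. It assumes the 50 GB/sec theoretical figure is split evenly among cores and stays fixed as core counts grow, and it ignores caches entirely. The core counts and the `bandwidth_per_core` helper are hypothetical, chosen for illustration.

```python
TOTAL_BANDWIDTH_GB_S = 50.0  # theoretical figure quoted above


def bandwidth_per_core(cores, total_gb_s=TOTAL_BANDWIDTH_GB_S):
    """Even share of off-chip bandwidth per core, in GB/sec.

    Assumes a fixed total and an even split; real chips have caches
    and uneven access patterns, so treat this as a rough bound.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return total_gb_s / cores


# Each doubling of cores halves every core's share.
for cores in (2, 4, 8, 16, 32, 64):
    print(f"{cores:2d} cores: {bandwidth_per_core(cores):6.2f} GB/sec per core")
```

Unless the total grows as fast as the core count, every doubling of cores leaves each one hungrier than before.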

The Storage Bits take: Many-core is the future for computer performance. Memory bandwidth is one big problem. Software support for efficient many-core use is another. Either could bring the performance expected from Moore's Law to a dead stop.

The industry is making big investments in both problems. If it is a problem for Sandia today it will be a problem for consumers in 10 years.

What if one doesn't get solved? Then the Moore's Law rocket we've been riding will sputter and die. Life on the glidepath won't be nearly so much fun.

Comments welcome, of course. If anyone wants to make the case that von Neumann was wrong, I'm all ears.