No hype, just fact: Artificial intelligence in simple business terms

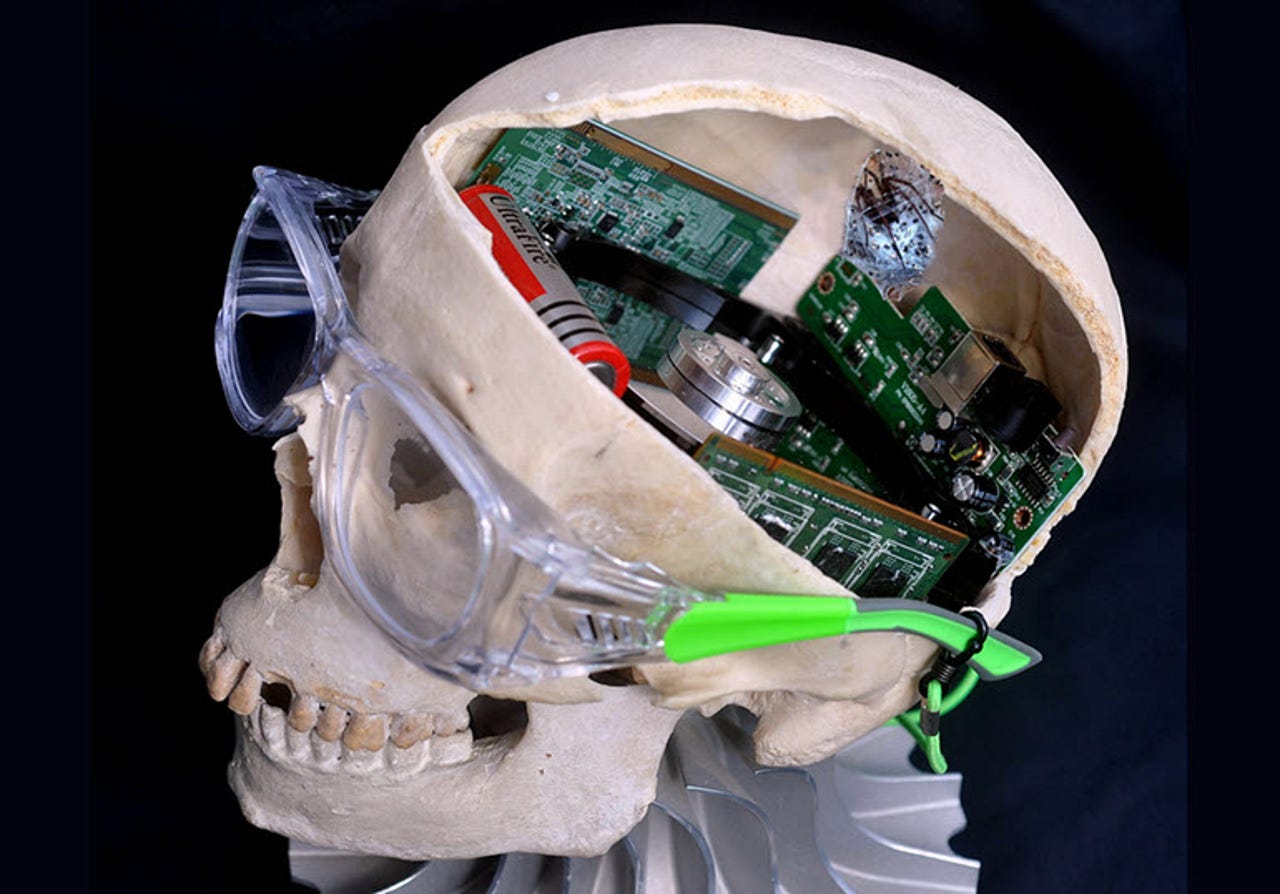

Image from Wikimedia Commons

Artificial intelligence, machine learning, cognitive computing, deep learning, and related terms have become interchangeable jargon referring to AI. Although it's hard to believe, the level of marketing hype around AI has even surpassed the hype around digital transformation.

To break through the hype and nonsense, I asked the Chief Data Scientist of Dun and Bradstreet to explain AI in straightforward business terms. It's a complicated assignment, so I went to Anthony Scriffignano, one of the smartest, most accomplished data scientists I know. Anthony is a brilliant communicator, making him an ideal candidate to explain AI.

In the short video embedded above, Anthony gives a succinct introduction to AI for business people. Watch the video and enjoy the un-hyped truth about an important topic.

The conversation is part of the CXOTALK series, where you can watch the full-length, unedited discussion with Anthony Scriffignano and read a complete transcript.

Explain artificial intelligence for us

If there's nothing else that our industry is good for, it's creating terms that people can use that have ambiguous meaning, and can be taken to mean almost anything in any situation. And this is certainly one of them. So, it's one of those things that you understand, but then when you try to define it, scholars will disagree on the exact definition. But, artificial intelligence collectively is a bunch of technologies that we run into. So, you'll hear "AI." You'll hear "machine learning." You'll hear "deep learning," [or] sometimes "deep belief." "Neuromorphic computing" is something that you might run into, or "neural networks;" "natural language processing;" "inference algorithms;" "recommendation engines." All of these fall into that category.

And some of the things that you might touch upon are autonomous systems ─ bots. Sometimes, we will hear talk of... Well, Siri is probably the most obvious example that anybody runs into (or any of the other ─ I won't try to name them all because I'll forget one), but things of that nature where you have these assistants that try to sort of mimic the behavior of a person. When you're on a website, and it says, "Click here to talk to Shelly!" or "Click here to talk to Doug!" You're not talking to a person; you're talking to a bot. So, those are examples of this.

Generally speaking, that's the broad brush. And then if you think about it as a computer scientist, you would say that these are systems and processes that are designed to do any one of several things. One of them is to mimic human behavior. Another one is to mimic human thought process. Another is to "behave intelligently" ─ you know, put that in quotes. Another is to "behave rationally," and that's a subject of a huge debate. Another one is to "behave ethically," and that's an even bigger debate. Those are some of the categories that these systems and processes fall into.

And then there are ways to categorize the actual algorithms. So, there are deterministic approaches; there are non-deterministic approaches; there are rules-based approaches. So, there are different ways you can look at this: you can look at it from the bottom up; the way it just ended; or regarding what you see and touch and experience.

How do terms like machine learning, AI, and cognitive computing relate to one another?

They're not synonymous. So, cognitive computing is very different than machine learning, and I will call both of them a type of AI. Just to try and describe those three. So, I would say artificial intelligence is all of that stuff I just described. It's a collection of things designed to either mimic behavior, mimic thinking, behave intelligently, behave rationally, behave empathetically. Those are the systems and processes that are in the collection of soup that we call artificial intelligence.

Cognitive computing is primarily an IBM term. It's a phenomenal approach to curating massive amounts of information that can be ingested into what's called the cognitive stack. And then to be able to create connections among all of the ingested material, so that the user can discover a particular problem, or a particular question can be explored that hasn't been anticipated.

Machine learning is almost the opposite of that. Where you have a goal function, you have something very specific that you try and define in the data. And, the machine learning will look at lots of disparate data, and try to create proximity to this goal function ─ basically try to find what you told it to look for. Typically, you do that by either training the system, or by watching it behave, and turning knobs and buttons, so there's unsupervised, supervised learning. And that's very, very different than cognitive computing.

What does "training a model" mean?

So, a model is a method of looking at a set of data in the past, or a set of data that's already been collected, and describing it in a mathematical way. And we have techniques based on regression, where we continue to refine that model until it behaves within a certain performance. It predicts the outcome that we intend it to predict, in retrospect. And then, assuming that we can extrapolate from the frame we're in into the future, which is a big assumption, we can use that model to try to predict what happens going forward mathematically.
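To make the idea of fitting a model to past data concrete, here is a minimal sketch in Python. It assumes the simplest possible case, a straight line fitted by ordinary least squares, and the quarterly sales figures are invented for illustration. The function names `fit_line` and `predict` are hypothetical and do not come from the interview.

```python
from statistics import mean

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new x value."""
    slope, intercept = model
    return slope * x + intercept

# Invented past observations: quarter number and sales for that quarter.
quarters = [1, 2, 3, 4, 5]
sales = [10.0, 12.0, 14.0, 16.0, 18.0]

model = fit_line(quarters, sales)

# "In retrospect": how far off is the model on data it has already seen?
max_error = max(abs(predict(model, q) - s) for q, s in zip(quarters, sales))

# "Going forward": extrapolate to a quarter that has not happened yet.
forecast = predict(model, 6)
print(f"worst retrospective error: {max_error:.2f}, forecast for Q6: {forecast:.1f}")
```

The forecast is only as good as the assumption that the future resembles the past, which is exactly the "big assumption" described above.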

The most obvious example of this that we have right now is the elections, right? So we look at the polling data. We look at the phase of the moon. We look at the shoe sizes. Whatever we decide to look at, we say, "This is what's going to happen." And then, something happens that maybe the model didn't predict.

So, now we get into AI. The way some systems work, not all, is they say: "Show me something that looks like what you're looking for, and then I'll go find lots of other things that look just like it. So train me. Give me a web page, and tell me on that web page which things you find to be interesting. I'll find a whole bunch of other web pages that look like that. Give me a set of signals that you consider to be a danger, and then when I see those signals, I'll tell you that something dangerous is happening." That's what we call "training."
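One simple way to picture "show me an example and I'll find things that look like it" is a similarity search. The sketch below assumes each web page has already been reduced to a short list of numbers (here, invented counts of three words) and uses cosine similarity with an arbitrary threshold of 0.9. The page names, features, and threshold are all assumptions for illustration, not a description of any particular product.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two equal-length vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_similar(example, candidates, threshold=0.9):
    """Return the names of candidates that look like the example."""
    return [
        name
        for name, features in candidates.items()
        if cosine_similarity(example, features) >= threshold
    ]

# Invented features: how often "price", "discount", "weather" appear on a page.
example_page = [5, 3, 0]  # the page the user marked as interesting
pages = {
    "page_a": [10, 6, 0],  # same mix of words, just a longer page
    "page_b": [0, 1, 9],   # mostly about the weather
    "page_c": [4, 3, 1],   # close to the example
}

print(find_similar(example_page, pages))
```

Real systems use far richer features than three word counts, but the shape is the same: one labelled example, a measure of likeness, and a cutoff.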

Why is training models complicated?

Sure. So imagine that I gathered a whole bunch of people, and the gold standard here is that they have to be similarly incentivized and similarly instructed, so I can't get, you know, five computer scientists and four interns ... You try to get people who are either completely randomly dispersed or all trying to do the same thing. There are two different ways to do it, right? And you show them lots and lots of pictures, right? You show them pictures of mountains, mixed in with pictures of camels, and pictures of things that are maybe almost mountains, like ice cream cones; and you let them tell you which ones are mountains. And then, the machine is watching and learning from people's behavior when they pick out mountains, to pick out mountains like people do. That's called a heuristic approach.

When we look at people, model their behavior by watching it, and then do the same thing they did, that's a type of learning. That heuristic modeling is one of the ways that machine learning can work, not the only way.
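As a rough illustration of a machine learning from people's choices, the sketch below combines several people's answers for each picture by majority vote and then labels a new picture by copying the label of the most similar picture it has seen (a one-nearest-neighbour rule). This is one simple technique chosen for the example, not necessarily the approach described above, and the two numeric features per picture are invented.

```python
import math
from collections import Counter

def majority_label(votes):
    """Collapse several people's answers for one picture into one label."""
    return Counter(votes).most_common(1)[0][0]

def nearest_label(features, labelled):
    """Copy the label of the closest labelled picture (1-nearest-neighbour)."""
    closest = min(labelled, key=lambda item: math.dist(features, item[0]))
    return closest[1]

# Invented data: (two made-up numeric features, answers from three people).
human_answers = [
    ((0.9, 0.8), ["mountain", "mountain", "mountain"]),
    ((0.8, 0.9), ["mountain", "mountain", "not mountain"]),
    ((0.2, 0.1), ["not mountain", "not mountain", "not mountain"]),  # a camel
    ((0.7, 0.1), ["not mountain", "mountain", "not mountain"]),      # an ice cream cone
]

# "Watching" the people: keep the label most of them chose for each picture.
training_set = [(features, majority_label(votes)) for features, votes in human_answers]

# "Doing what they did": label pictures nobody has looked at yet.
print(nearest_label((0.85, 0.85), training_set))
print(nearest_label((0.65, 0.15), training_set))
```

Note that one person called the ice cream cone a mountain. The majority vote absorbs that disagreement, which is one reason the people doing the labelling need to be similarly instructed.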

There are a lot of easy ways to trick this. So, people's faces are a great example. When you look at people's faces, and we probably all know that there are techniques for modeling with certain points on a face, you know, the corners of the eyes. I don't want to get into any IP here, but there are certain places where you build angles between these certain places, and then those angles don't typically change much. And then you see mugshots of people with their eyes wide open, or with crazy expressions on their mouths. And those are people trying to confound those algorithms by distorting their face. It's why you're not supposed to smile in your passport picture. But, machine learning has gotten much better than that now. We have things like the Eigenface, and other techniques for modeling the rotation and distortion of the face and determining that it's the same thing.
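The remark about angles between points on a face can be illustrated with a little geometry. The sketch below is not a face recognition system and does not reflect any specific product. It only shows, with invented landmark coordinates, that the angle formed by three points stays the same when the whole picture is rotated and resized, which is why such angles are attractive as a signature.

```python
import math

def angle_at(vertex, p, q):
    """Angle in degrees at `vertex` between the lines to p and to q."""
    v1 = (p[0] - vertex[0], p[1] - vertex[1])
    v2 = (q[0] - vertex[0], q[1] - vertex[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cos_angle = dot / (math.hypot(*v1) * math.hypot(*v2))
    # Clamp to guard against tiny floating-point overshoot before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

def rotate_and_scale(point, degrees, scale):
    """Rotate a point about the origin, then scale it uniformly."""
    t = math.radians(degrees)
    x, y = point
    return (
        scale * (x * math.cos(t) - y * math.sin(t)),
        scale * (x * math.sin(t) + y * math.cos(t)),
    )

# Invented pixel coordinates for three landmarks.
left_eye, right_eye, nose_tip = (30.0, 40.0), (70.0, 40.0), (50.0, 65.0)

original = angle_at(nose_tip, left_eye, right_eye)

# The same face, tilted by 25 degrees and photographed 1.5 times larger.
moved = [rotate_and_scale(p, 25, 1.5) for p in (left_eye, right_eye, nose_tip)]
transformed = angle_at(moved[2], moved[0], moved[1])

print(f"original: {original:.2f} degrees, transformed: {transformed:.2f} degrees")
```

A wide-open eye or a contorted mouth moves the landmarks relative to one another, so the angles do change. That is the kind of distortion the mugshot example refers to.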

So, these things get better and better and better over time. And sometimes, as people try to confound the training, we learn from that behavior as well. So, this thing all feeds into itself, and these things get better, and better, and better. And eventually, they approach the goal, if you will, of yes, it only finds mountains. It never misses a mountain, and it never gets confused by an ice cream cone.

How is this different from traditional programming?

The original way that this was done was through gamification or just image tagging. So, they either had people play a game, or they had people trying to help, saying, "This is a mountain," "This is not a mountain," "This is Mount Fuji," "This is Mount Kilimanjaro." So, they got a bunch of words. They got a bunch of people that use words to describe pictures (like Amazon Mechanical Turk).

Using those techniques, they just basically curated a bunch of words and said, "Alright, there's a high correlation statistically between the use of the word 'mountain' and this image. Therefore, when people are looking for a mountain, give them this image. When they're looking for Mount Fuji, give them this image and not this image." And that was a trick of using human brains and using words. That's not the only way it works today. There are many more sophisticated ways today.
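The word-and-image trick can be sketched in a few lines. The example below simply counts how often people attached each word to each image and returns the image most often tagged with the search term. Raw counts stand in here for the statistical correlation mentioned above, and the file names and tags are invented.

```python
from collections import Counter, defaultdict

def build_index(tag_events):
    """Count how often each word was attached to each image."""
    index = defaultdict(Counter)
    for image, word in tag_events:
        index[word.lower()][image] += 1
    return index

def best_image(index, query):
    """Return the image most often tagged with the query, or None."""
    counts = index.get(query.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Invented tagging results: (image file, word a person used for it).
tag_events = [
    ("fuji.jpg", "mountain"),
    ("fuji.jpg", "Mount Fuji"),
    ("fuji.jpg", "mountain"),
    ("kilimanjaro.jpg", "mountain"),
    ("kilimanjaro.jpg", "Mount Kilimanjaro"),
    ("cone.jpg", "ice cream"),
    ("cone.jpg", "mountain"),  # one person was fooled by the cone
]

index = build_index(tag_events)
print(best_image(index, "mountain"))
print(best_image(index, "Mount Kilimanjaro"))
print(best_image(index, "camel"))
```

Because the answer comes from counting many people's words, a single mistaken tag (the ice cream cone labelled "mountain") is outvoted rather than trusted.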

Please see the list of upcoming CXOTALK episodes. Thank you to my colleague, Lisbeth Shaw, for assistance with this post.