Sun's new mantra: Brutal efficiency at deep scale

At a recent meeting with analysts, Sun CTO Greg Papadopoulos reiterated what has become the Jonathan Schwartz era strategy--to become the early 21st century version of the IBM mainframe (see Nick Carr's post on the topic). Sun CEO Schwartz has been harping on the idea that demand for compute power will be insatiable and Sun just needs to intercept that demand, and will do so because it invests in the critical R&D required to deliver the most "brutal efficiency" for utilization, power, security, service levels and idea-to-deploy time.

It's hard to argue with Sun's view of where computing is heading, although "brutal" efficiency sounds like a torturous path as opposed to, say, "extreme" efficiency. The issue for Sun is whether the company will be able to intercept the insatiable demand for compute power with what is basically utility computing infrastructure.

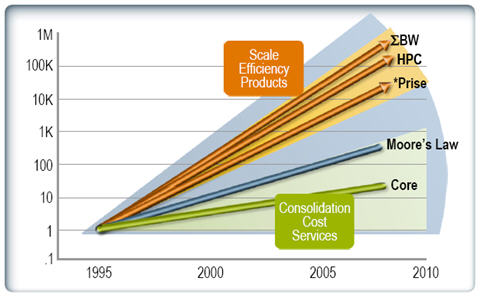

In his presentation, Papadopoulos submitted that most enterprises are over-served by Moore's Law. Consolidation, virtualization and more power efficient components are the dominant activities as enterprises try to reduce costs for core applications and services.

The big growth opportunity is for high performance computing, delivering on demand applications and filling the pipes with rich content, applications and services. These are under-served by Moore's Law, Papadopoulos said, and can only be addressed by the "Redshift"--a move to massive scale computing. "The aggregate demand to fill the pipes is growing quite a bit faster than Moore's Law," Papadopoulos said in his presentation.

Supplying scale efficient infrastructure to deal with last-mile bandwidth (BW), high-performance computing (HPC) and on-demand applications (*Prise)--the Redshift, a Big Bang, massive expansion in need and consumption of computer power--is where Sun hopes to grow its business. Source: Sun

The Redshift adherents, as it pertains to Sun, break down into two schools. Google represents the do-it-yourself, vertical integration approach, disintermediating existing large-scale infrastructure providers. Papadopoulos juxtaposed what he called the "Google question" (Google has built its own massive compute power plant) with what would make sense for a small- or medium-sized company. "If you have a Redshift application, what would I design and how would I operate it?" he asked. "Our answer is brutal efficiency."

Brutal efficiency, according to Papadopoulos, is achieved through hard engineering (Sun R&D) and playing across the entire infrastructure stack--network services, core services and platforms, OS instances and the hardware (servers, storage and switches).

He cited Sun's Blackbox, a standard shipping container that can hold 250 Sun Fire servers, two petabytes of storage or seven terabytes of memory and Sun's software stack, as an example of Sun's brutal efficiency. "It is the shape of the future. We know we can get brutally efficient with this kind of design point," Papadopoulos said.

Sun CEO Jonathan Schwartz and Greg Papadopoulos unveiling the Blackbox in October 2006.

He also cited progress in power, scale and performance with the Niagara 2 processor (see below), and highlighted three development initiatives at Sun--Neptune, Crossbow and real-time Java. Neptune is a hardware component with an advanced network interface to take 10-gigabit Ethernet PCI-e physical connections and virtualize them for feeding into multicore processors. Crossbow provides OS-level virtualization on top of Solaris for high speed networks. "We made some excellent progress with integrating real-time properties on top of Solaris, breathtaking results for real-time world," Papadopoulos claimed.

Source: Sun

He also managed to tie Sun's identity management, Java, security, SOA and other components of core enterprise platforms into the massive scale computing, Redshift camp. "An asset and preferred market position is the ability to bridge the two," Papadopoulos said. Solaris, and having a supported binary code stream that can generate service revenue, is also a key part of Sun's Redshift and business model.

Papadopoulos said that in the likely, or preferred, Redshift scenario (see the slide below), Sun and its competitors in massive scale computing will supply the big infrastructure; entities in control of software will supply services via the cloud to clients around the world; and operators and carriers will provide the distribution network.

Sun also wants to "infect" the new generation of startups building Internet-based services with the brutal efficiency proposition. "We will see more of YouTube kind of thing--startups start and we want to get them infected with our stuff and get them absorbed into large system because of efficiency and scale economics of these systems," said Papadopoulos.

Source: Sun

"We are making a really big bet that this is what the future looks like," Papadopoulos said. "Systems engineering and the ability to work with brutal efficiency at deep scale is going to be defining in marketplace and is going to separate off who ultimately wins in this business."

It's not a question of if but when the Redshift as described by Papadopoulos achieves full effect, and massive compute power is served up much the way power utilities deliver electricity. In this scenario--the full instantiation of Sun's "the network is the computer" slogan--Sun believes that its brutal efficiency, embodied today in Solaris and Niagara SMP systems, will win out over other scale-up systems and the scale-out, let-billions-of-cheap-computers-flower approach. For purchasers of compute power, brutal efficiency at brutally competitive pricing will be an attractive proposition.