Why Intel's 22nm technology really matters

The fact that Intel announced its 22nm technology, the world's most advanced process for manufacturing logic, at a press event earlier today was hardly surprising. The tick-tock cadence of Intel's technology continues like clockwork. What is very surprising, however, is how Intel got there. The 3-D transistors, known as tri-gates, that Intel will introduce at 22nm later this year are a break from the basic planar transistor that has been the foundation of integrated circuits since their invention in the 1950s.

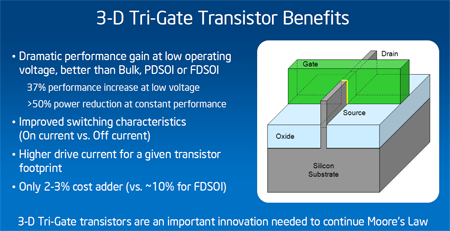

Intel will use this novel 22nm technology to manufacture its Ivy Bridge processors, which should be in volume production in the second half of this year and available in PCs and servers starting in early 2012. To demonstrate that Ivy Bridge is real, Intel showed several working systems including a server with a dual-core processor, a desktop running a driving game and a laptop playing a 1080p video. Intel said that the 22nm tri-gate transistor will deliver 37% better performance than the 32nm planar transistors used in Sandy Bridge chips--already the fastest by a wide margin. Alternatively, Intel can tune the tri-gate transistors to provide the same level of performance while using half the power of Sandy Bridge. The 22nm technology will increase the CPU performance in Ivy Bridge, but Dadi Perlmutter, a vice president and General Manager of the Intel Architecture Group, also hinted it will make a big difference in the graphics and media processing capabilities of Ivy Bridge.

(Intel has posted lots of background material on the 22nm technology.)

This transition will occur as the rest of the industry is shifting from 45nm/40nm to 32nm/28nm. Like the introduction of high-k materials and metal gates (HKMG)--where competitors are still playing catch-up three years later--the shift to tri-gates could propel Intel years ahead of AMD. And because the 22nm technology with tri-gates is not only denser but also uses less power, it should work well in mobile devices, giving Intel a fresh chance to challenge companies that design ARM-based application processors such as Qualcomm, Samsung, Texas Instruments and Nvidia. While SoCs (systems-on-chip) using Intel's 22nm process technology will come later, Atom is on an accelerated schedule and, in future generations, will be released around the same time as new PC processors.

The vast majority of today's integrated circuits are built using planar transistors, in which the silicon channel that carries current when the switch (the gate electrode) turns the transistor on lies flat on a silicon base or substrate. For decades, the industry has been able to successively shrink the features of these transistors, packing more into a given area of silicon with each new generation of process technology--the phenomenon known as Moore's Law. But starting at around 90nm, which Intel introduced in 2004, the industry hit a roadblock. Certain elements became so small that the gates that control the switching of transistors began leaking current, creating a power problem. The solution was the HKMG recipe that Intel introduced on its 45nm processors starting in early 2008. This allowed Intel to use thicker insulating layers to control gate leakage without sacrificing performance.
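To put a rough number on "packing more into a given area," here is a minimal sketch of idealized area scaling between process nodes. It assumes that transistor area shrinks with the square of the node name, which is a simplification: node names are labels rather than literal feature sizes, and real density gains vary by design. The function name `ideal_density_gain` is made up for this illustration.

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealized density gain when moving from old_nm to new_nm.

    Assumption: every linear dimension shrinks by new_nm / old_nm,
    so the same circuit takes (new_nm / old_nm) ** 2 of the area.
    """
    return (old_nm / new_nm) ** 2


# Nodes mentioned in the article, newest last.
nodes_nm = [90, 65, 45, 32, 22]

for old, new in zip(nodes_nm, nodes_nm[1:]):
    gain = ideal_density_gain(old, new)
    print(f"{old}nm -> {new}nm: roughly {gain:.1f}x transistors per unit area")
```

Under this assumption each step works out to roughly a doubling, which is the familiar shorthand for Moore's Law.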

It's possible to build 32nm/28nm chips using conventional polysilicon oxynitride gates--most semiconductor foundries will offer this--but the benefits of HKMG are so significant that the rest of the industry is following suit. AMD's Llano processor, which is now shipping and should appear in desktops and laptops starting in June, is manufactured by GlobalFoundries using a 32nm HKMG process. TSMC, the world's largest semiconductor foundry, will start volume production of chips using a 28nm HKMG process later this year, followed by GlobalFoundries on that node in early 2012.

As chip designers scale transistors beyond 32nm, however, the features become so small that they create more electrostatic problems. In other words, it is difficult to properly control the switching of the transistors. One solution to this is a 3-D or non-planar transistor structure. Most of the industry refers to this as a FinFET (fin field-effect transistor) because the conducting channel sticks up from the substrate like a fin with a gate on either side--or a double-gate--to better control switching. The problem with FinFETs is that they require a relatively thin and tall fin, which is difficult to manufacture. Think of it like building a skyscraper versus a small office building (although you could fit perhaps 5,000 of these "skyscrapers" in the width of a human hair). Intel has a different twist on the FinFET. The tri-gate surrounds the channel on three sides so that it can effectively control a shorter and wider fin that should be easier to build, though it is still more challenging than the tried-and-true planar transistor. (The ideal transistor would have a gate that wrapped all the way around a tiny silicon nanowire, but this is impossible to manufacture using today's technology.)
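The "5,000 skyscrapers in the width of a human hair" comparison can be sanity-checked with a few lines of arithmetic. This is only a sketch: the hair width of 100 micrometers is an assumption (hair varies widely from person to person), and the result is the space available per fin, not a figure Intel has published.

```python
# Assumption: a human hair is about 100 micrometers wide.
HAIR_WIDTH_UM = 100.0
# From the article: perhaps 5,000 fins fit across one hair.
FINS_PER_HAIR = 5_000


def width_per_fin_nm(hair_width_um: float, fins: int) -> float:
    """Space available to each fin, in nanometers, if `fins` sit side by side."""
    hair_width_nm = hair_width_um * 1000  # 1 micrometer = 1,000 nanometers
    return hair_width_nm / fins


space = width_per_fin_nm(HAIR_WIDTH_UM, FINS_PER_HAIR)
print(f"Each fin gets about {space:.0f} nm of width")
```

With these inputs the space per fin comes out in the tens of nanometers, the same order of magnitude as the 22nm node name.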

When you provide more than 80 percent of the world's microprocessors, you don't just roll the dice on a completely new technology. Like HKMG, tri-gates have been in the works for a long time. In 2002, Intel gave a presentation showing why tri-gates would be easier to manufacture than other fully-depleted transistor structures such as the single-gate (planar) or double-gate (FinFET). In 2004, Intel showed tri-gates could improve electrostatics and extend transistor scaling for 32nm and beyond. And by 2006, the company was discussing how tri-gates could be combined with other key technologies such as HKMG and strained silicon to produce circuits with higher performance and lower power than planar transistors on the same node (65nm at the time).

The introduction of tri-gate transistors will enable Moore's Law to continue. Intel said the tri-gate structure will work not only at 22nm, but also on the 14nm technology scheduled for production in late 2013. More important, it should allow Intel's customers to build not only laptops but a whole range of devices from smartphones to servers in large data centers that have significantly better performance and use less power.

In the meantime, Intel's competitors will have a lot of tough choices to make after 28nm. They can stick with a planar transistor structure on an exotic substrate known as ET-SOI (Extremely Thin Silicon-On-Insulator), but these wafers are difficult to manufacture. Intel said its tri-gate approach will add two to three percent to the cost of a finished wafer while ET-SOI will add 10 percent to the manufacturing cost. Or the foundries may choose to switch to a 3-D double- or tri-gate structure starting at 14nm. None of these options will be easy. In addition, Intel has the luxury of creating one technology optimized specifically for its own processor designs. The foundries need to come up with process technology that will work with everything from programmable logic (Altera and Xilinx) to graphics processors (AMD and Nvidia) to mobile processors and wireless basebands (Qualcomm, Broadcom and others). Intel Senior Fellow Mark Bohr said that Ivy Bridge will give Intel as much as a three-year head start versus its competition, but looking at all these factors you could argue the lead may be even greater in the next few years.
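For a sense of what the cost figures above mean side by side, here is a small sketch that applies Intel's quoted percentages to a baseline wafer cost. The baseline of 100 is an arbitrary unit chosen for illustration, not a real wafer price, and the sketch assumes both percentages apply to the same baseline, which Intel's statement does not spell out.

```python
# Arbitrary baseline: cost of a finished planar wafer, in made-up units.
BASE_WAFER_COST = 100.0

# Added cost as quoted by Intel, in percent of the baseline.
ADDED_COST_PCT = {
    "tri-gate (low estimate)": 2.0,
    "tri-gate (high estimate)": 3.0,
    "ET-SOI": 10.0,
}


def wafer_cost(base: float, added_pct: float) -> float:
    """Finished-wafer cost after adding `added_pct` percent to `base`."""
    return base + base * added_pct / 100.0


for option, pct in ADDED_COST_PCT.items():
    print(f"{option}: {wafer_cost(BASE_WAFER_COST, pct):.1f} units per wafer")

# How many times larger the ET-SOI premium is than the high tri-gate estimate.
premium_ratio = (
    ADDED_COST_PCT["ET-SOI"] / ADDED_COST_PCT["tri-gate (high estimate)"]
)
print(f"ET-SOI premium is about {premium_ratio:.1f}x the tri-gate premium")
```

On Intel's own numbers, the ET-SOI premium is at least three times the tri-gate premium, though those numbers come from the company with the most to gain from the comparison.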