Microsoft Research parallel programming project set to go commercial in 2011

It's been a while since the Redmondians have talked up "Dryad," Microsoft's answer to Google's MapReduce and Apache's Hadoop. (I think the last time Dryad got any coverage outside the research community was when Microsoft Chairman Bill Gates mentioned it to the New York Times in 2006.)

Dryad is an ongoing Microsoft Research project dedicated to developing ways to write parallel and distributed programs that can scale from small clusters to large datacenters. There's a DryadLINQ compiler and runtime that is related to the project. Microsoft released builds of Dryad and DryadLINQ code to academics for noncommercial use in the summer of 2009.

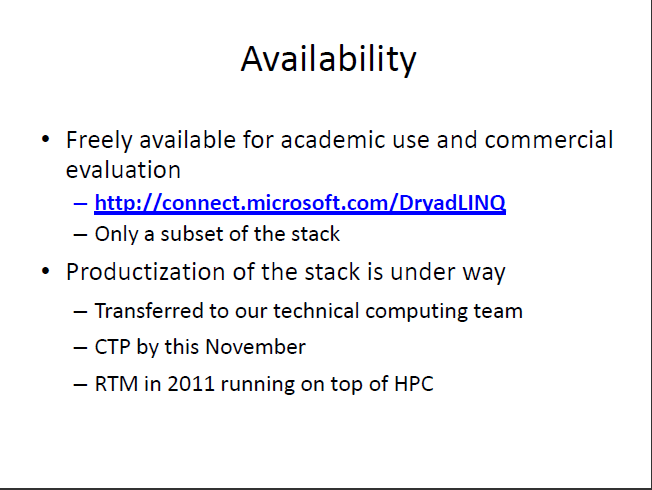

It looks like Dryad is ready to take the next step. Microsoft is planning to move the Dryad stack from Microsoft Research to Microsoft's Technical Computing Group. The plan is to deliver a first Community Technology Preview (CTP) test build of the stack in November 2010 and to release a final version of it running on Windows Server High Performance Computing servers by 2011, according to a slide from an August 2010 presentation by one of the principals working on Dryad.

The Dryad stack is getting more detailed as the researchers continue to work on it. Here's the existing Dryad stack diagram:


Here's an updated version of the stack diagram from the aforementioned August 2010 presentation by one of the Dryad team members:

The Dryad layer of the stack handles scheduling and fault-tolerance, while the DryadLINQ layer is more about parallelization of programs.

The latest Dryad stack diagram includes mention of a new distributed filesystem, codenamed TidyFS, for parallel computation with Dryad. This file system "provides fault tolerance and data replication similar to GFS (the Google File System) or the Cosmos store." (Cosmos, according to the previous stack diagram, was the codename for the Dryad file system which complemented the NT File System. TidyFS is either the new name for Cosmos or its successor, I'd say.)

There's also a set of related data-management tools, codenamed "Nectar." I found a white paper from Microsoft Research on Nectar, which explains its purpose this way:

"In a Nectar-managed data center, all access to a derived dataset is mediated by Nectar. At the lowest level of the system, a derived dataset is referenced by the LINQ program fragment or expression that produced it. Programmers refer to derived datasets with simple pathnames that contain a simple indirection (much like a UNIX symbolic link) to the actual LINQ programs that produce them."
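To make that description a bit more concrete, here is a toy Python sketch of the idea as I read it: a pathname is just an indirection to the program that produces a dataset, and the dataset itself is looked up by a fingerprint of that program plus its inputs. Everything in it (the `DerivedDataStore` class, its method names, the SHA-256 fingerprint and the reuse of previously computed results) is my own assumption for illustration, not Nectar's actual design or API.

```python
import hashlib


class DerivedDataStore:
    """Hypothetical stand-in for a Nectar-like manager (not Microsoft's API)."""

    def __init__(self):
        self._links = {}      # pathname -> (program text, callable, inputs)
        self._results = {}    # fingerprint -> derived dataset
        self.computations = 0  # how many times a program was actually run

    def register(self, pathname, program_text, func, inputs):
        # The pathname stores no data, only a pointer to the producing program,
        # loosely like a UNIX symbolic link.
        self._links[pathname] = (program_text, func, tuple(inputs))

    @staticmethod
    def _fingerprint(program_text, inputs):
        # Assumption: identify a derived dataset by hashing program + inputs.
        digest = hashlib.sha256()
        digest.update(program_text.encode("utf-8"))
        digest.update(repr(inputs).encode("utf-8"))
        return digest.hexdigest()

    def read(self, pathname):
        # All access goes through the store: follow the link, then compute the
        # dataset only if no result with the same fingerprint exists yet.
        program_text, func, inputs = self._links[pathname]
        key = self._fingerprint(program_text, inputs)
        if key not in self._results:
            self.computations += 1
            self._results[key] = func(inputs)
        return self._results[key]


store = DerivedDataStore()
program = "inputs.Select(x => x * x)"  # illustrative LINQ-style text only
store.register("/derived/squares", program, lambda xs: [x * x for x in xs], [1, 2, 3])
store.register("/derived/squares-alias", program, lambda xs: [x * x for x in xs], [1, 2, 3])

first = store.read("/derived/squares")
second = store.read("/derived/squares-alias")
```

In this sketch both pathnames resolve to the same program and inputs, so the second read returns the already computed list instead of running the program again.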

There's one more new Dryad-related codename worth noting: "Quincy." Quincy is a scheduling system for distributed clusters. (Quincy, Wash., also happens to be the location of one of Microsoft's major datacenters.)

Microsoft is continuing to step up its work in the HPC space, hoping to ice out Linux in that arena. The Softies are seemingly counting on Dryad to keep up their momentum both on premises, with Windows Server, and in the cloud with Windows Azure in its datacenters.