Computer chatbot 'Eugene Goostman' passes the Turing test

A computer program that pretends to be a 13-year-old Ukrainian boy called Eugene Goostman passed a Turing test at the Royal Society in London yesterday (Saturday 7 June) by convincing 33 percent of the judges that it was human during a five-minute typed conversation. The test was suggested by computer scientist Alan Turing in 1950, and the competition was held on the 60th anniversary of his death.

The judges included Robert Llewellyn, who played the android Kryten in Red Dwarf, and Lord Sharkey, who led the campaign for Turing's posthumous pardon last year. Llewellyn tweeted: "Turing test was amazing. Did 10 sessions of 5 minutes, 2 screens, 1 human 1 machine. I guessed correctly 4 times. Clever little robot fellow/" There were five AI programs, so presumably Goostman fooled him. [Update: Llewellyn has written about his experience at the Guardian, in "Turing test: this little Eugene Goostman was much brighter than I imagined".]

Eugene Goostman's success was not a surprise. In 2012, the same chatbot fooled 29 percent of the 25 human judges when winning a Turing test competition held at Bletchley Park, the World War II codebreaking centre, in Milton Keynes on June 23 — which would have been Turing's 100th birthday. Goostman was also a runner-up in the Loebner Prize tests in 2001, 2005, and 2008.

Kevin Warwick, a visiting professor at the University of Reading, which organised both tests, said it was the first time a chatbot had passed an open-ended test, rather than one where topics or questions were set in advance.

Some of Eugene Goostman's success is down to the choice of persona: a 13-year-old boy from Odessa can't be expected to know everything, and might be forgiven for minor grammatical or linguistic errors. Vladimir Veselov, one of the program's developers, said: "We spent a lot of time developing a character with a believable personality."

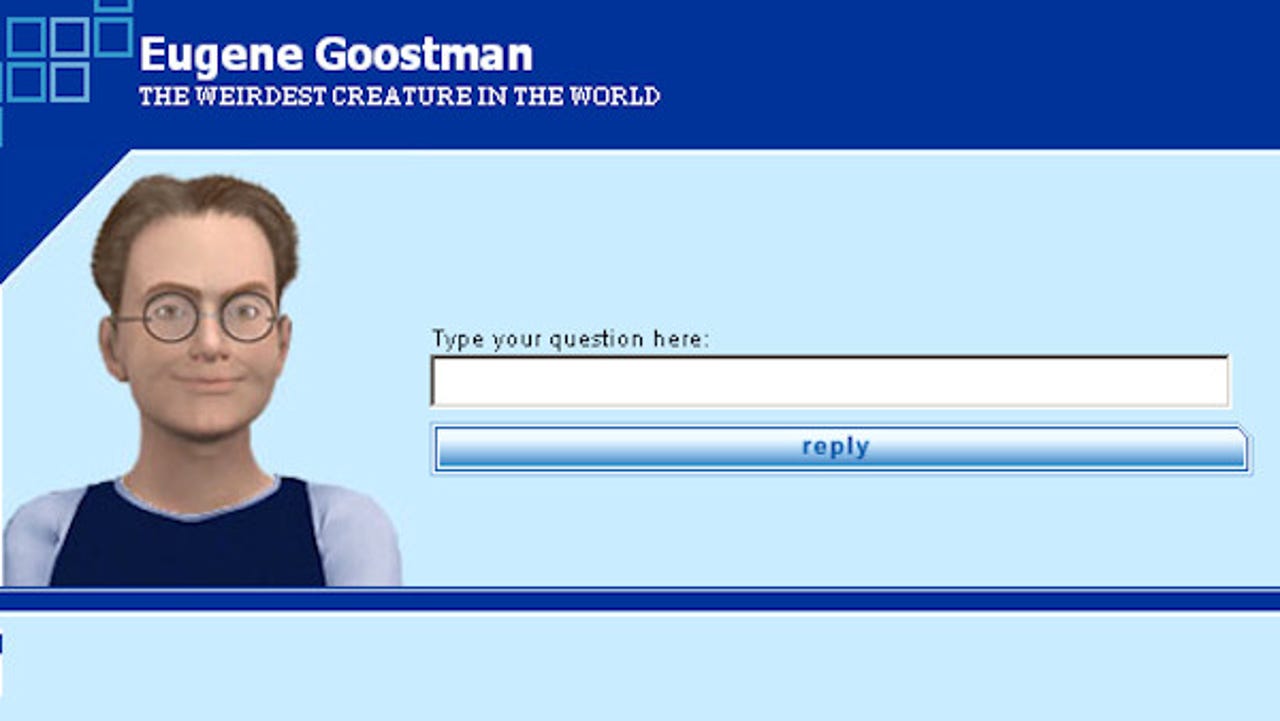

The fictional Eugene has a father who is a gynaecologist, and has a pet guinea pig. He says: "My mom's always shouting that 'this dirty pig is a PIG anyway, in spite it is 'guinea' — and wants me to give it as a gift to anyone of my friends for their birthday." You can ask him yourself at the Princeton AI (artificial intelligence) website, where Eugene chats online. ("No, I don't go to princeton, unfortunately! Maybe, let's talk about something else?")

The three Russian developers — Veselov, Eugene Demchenko and Sergey Ulasen — met up in Saint Petersburg, though Veselov now lives and works in the US. In a presentation at the Chatbots 3.0 conference in Philadelphia in 2010, Veselov said the chatbot was his hobby, and that one of the most important parts of the program was a "typo corrector". Like humans and Google Search, Goostman tries to make sense of spelling mistakes and mistypings that generally cause computers far more problems than humans.
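To give a rough idea of what a "typo corrector" does, here is a minimal sketch in Python. It is not Goostman's actual code, which has not been described in detail here. The word list, the `correct_word` and `correct_sentence` helpers and the similarity cutoff are all assumptions made for illustration. It uses the standard library's `difflib.get_close_matches` to swap an unknown word for the closest known one, and it ignores punctuation for simplicity.

```python
import difflib

# Hypothetical vocabulary for illustration; a real chatbot would need a far larger word list.
VOCABULARY = ["where", "do", "you", "live", "what", "is", "your", "name", "guinea", "pig"]


def correct_word(word, vocabulary=VOCABULARY, cutoff=0.6):
    """Return the closest known word, or the lower-cased input if nothing is close enough."""
    lower = word.lower()
    if lower in vocabulary:
        return lower
    # get_close_matches ranks candidates by similarity and drops any below the cutoff.
    matches = difflib.get_close_matches(lower, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else lower


def correct_sentence(text):
    """Correct each whitespace-separated word independently (punctuation is not handled)."""
    return " ".join(correct_word(word) for word in text.split())


if __name__ == "__main__":
    print(correct_sentence("Wher do you liv"))
```

A production system would probably also weigh word frequency and context, which this sketch leaves out.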

Chatbots could be used to provide automated assistance to website visitors, or built into robots that provide human companionship, and so on. The underlying technologies could also be used to improve the automatic parsing of documents, and similar tasks.

Kevin Warwick said in a statement from the organisers: "Of course the Test has implications for society today. Having a computer that can trick a human into thinking that someone, or even something, is a person we trust is a wake-up call to cybercrime. The Turing Test is a vital tool for combatting that threat. It is important to understand more fully how online, real-time communication of this type can influence an individual human in such a way that they are fooled into believing something is true... when in fact it is not."

Of course, bots are really not hard to spot, if you're trying. But they don't have to pretend to be human in order to be useful.