Facebook played with our emotions in data experiment. So what?

Facebook has published a report showing that it played with data from 689,003 out of its 1.3 billion users in 2012. After it reported its findings, it was accused of emotionally manipulating its users.

The experiment, published in a paper in the March issue of Proceedings of the National Academy of Sciences, inserted emotionally skewed posts into people’s news feeds without their knowledge. This was to test what effect that had on the statuses they then posted or the "likes" they gave.

"We take privacy and security at Facebook really seriously" ~ Sheryl Sandberg, COO, Facebook

The study by the Facebook team tested emotions of users. It ran these tests for a week in January 2012.

It wanted to see whether posts with more emotional content were more engaging.

It showed a selection of users different emotional varieties of posts in their feeds. Some users received posts with positive sentiments.

Other users received posts with more negative emotions. Facebook wanted to see if this altered people’s Facebook behaviour and emotions.

The researchers wanted to test whether "emotional contagion" occurs outside of in-person interaction between individuals by reducing the amount of emotional content in the News Feed.

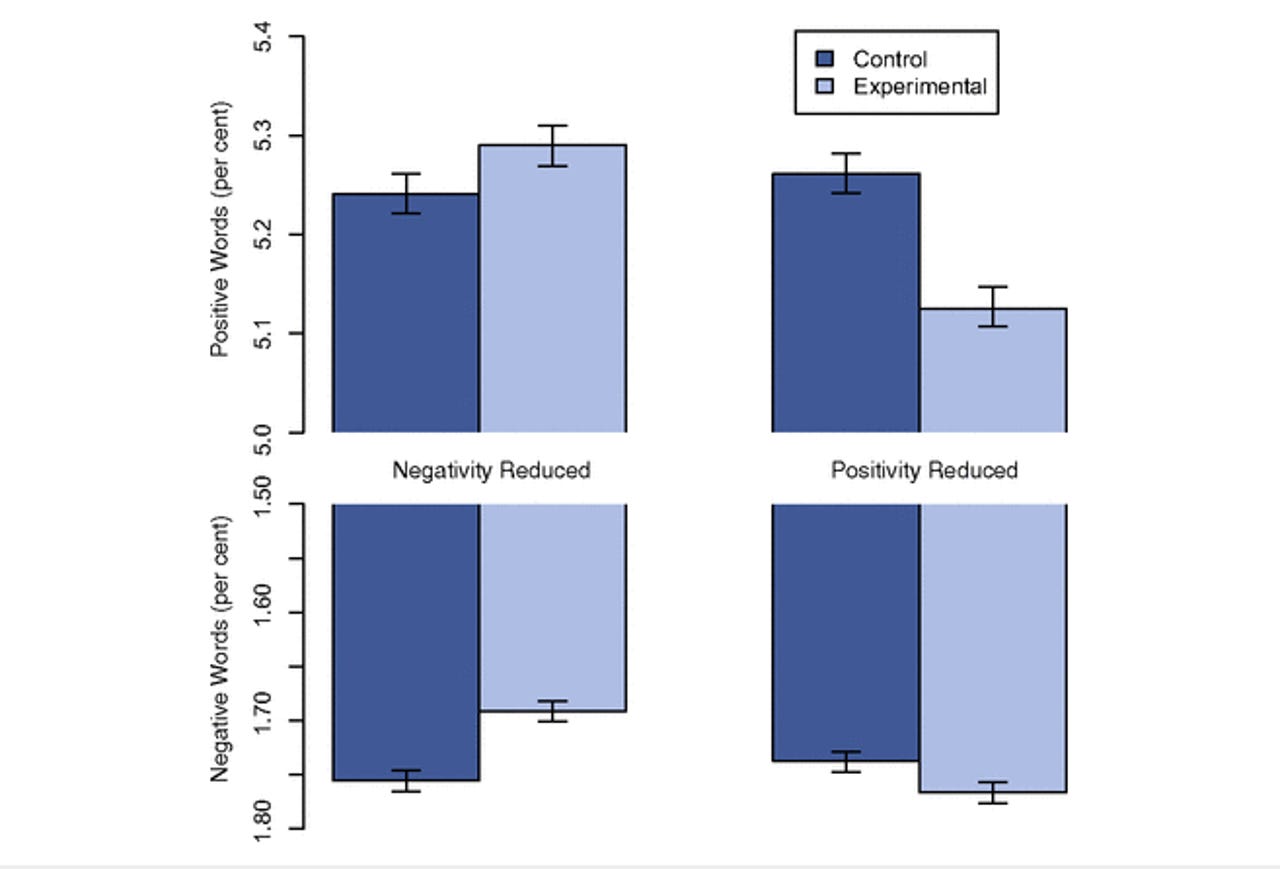

Interestingly enough, the experiment seems to have worked. People who saw positive posts tended to write in a more positive way; people who saw negative posts did not.

The study found that when positive expressions were reduced in people’s feeds, people produced fewer positive posts and more negative posts. However, when negative expressions were reduced, users wrote more positive posts.

Facebook has been accused of manipulating emotions. Well, get over it, Facebook users. If you are a Facebook user, you willingly give Facebook every bit of data it has about you.

As soon as you press submit, that data is Facebook’s to do with whatever it wants. Whilst you might not have explicitly agreed to this at the time you signed up for the service, the agreement to use your data for research is now there in its terms of use.

Facebook’s data tests are not new. Facebook regularly manipulates what you see. It changes its hugely complex algorithm to show you fewer posts from people you don’t interact with often.

You see fewer posts from brands that have huge numbers of followers, and you see different types of ads in your sidebar.

Facebook has been doing this for years.

It says it does this to give you a better experience on Facebook. These "experiments" on your emotions allow it to gauge how to best present you with the information you want.

The Data Science team at Facebook has a huge amount of data to play with. Facebook was experimenting with showing users items in their feeds that they really wanted to see.

The report shows that "emotional states can be transferred to others via emotional contagion, leading people to experience the same emotions without their awareness".

The report also states that it provides "experimental evidence that emotional contagion occurs without direct interaction between people".

It says that having exposure to a friend expressing an emotion is sufficient to cause this effect.

The company carefully tunes its EdgeRank algorithm to ensure that you see posts from the people you are more likely to engage and interact with.

You will see the images and videos that you want to share and that you will return to.

The premise here is that if you see something in your feed that pleases you, you are more likely to return to Facebook and spend more time there.

This enables Facebook to show you more relevant ads — ads that you are much more likely to click on, thereby increasing Facebook’s revenue.

Sheryl Sandberg assured users that Facebook could not control users or their emotions.

"We take privacy and security at Facebook really seriously because that is something that allows people to share opinions and emotions," said Sandberg.

A previous Facebook study showed user influence over election votes. This led to comments that Facebook had mobilized people to vote.

Facebook has apologised for the way that this information was released. The Internet has moved on to its next concern.

The Facebook data scientists will go on to their next big data-crunching experiment with the data we have willingly provided as we interact with our friends and favourite brands.

With, or without, our consent.