If public key cryptography were really broken

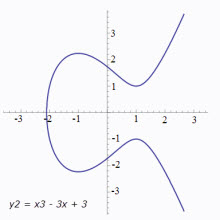

The asymmetric cryptography on which so much security on the Internet is based relies on one of two mathematical assumptions to work: that it is impossible, other than through brute force, to determine the factors of large integers when those factors are two or more large primes, or (to quote Wikipedia on the subject of Elliptical Curve Cryptography) "… that finding the discrete logarithm of a random elliptic curve element with respect to a publicly known base point is infeasible".

"Brute force" in this case means that you have to iterate through all the possibilities until you find one that works. In a good, modern algorithm, the number of possibilities is extremely large, making it time-consuming even with vast computing resources.

What if these assumptions were no longer valid? What if, for one reason or another, factoring the products of large primes and/or determining the discrete logarithm of a random elliptic curve element became something which could be done quickly with reasonable computing resources?

Such a development would really suck. It would mean that the security on which the overwhelming majority of the Internet and non-Internet computing is based would be useless.

Cryptographer general security guru Bruce Schneier considered this problem in a recent blog entry called "The Cryptopocalypse" in reaction to a presentation at the recent Black Hat conference. Schneier is not especially worried, and his reasoning makes sense to me.

With respect to factoring, which is the much more common method, he cites an observation he made in 1999:

Factoring has been getting easier. It's been getting easier faster than anyone has anticipated. I see four reasons why this is so:

- Computers are getting faster.

- Computers are better networked.

- The factoring algorithms are getting more efficient.

- Fundamental advances in mathematics are giving us better factoring algorithms.

He adds that the issues are very similar for the discrete log problem.

Computers are indeed getting faster all the time, and networks are becoming more prevalent and faster. This means that it's easier to throw computing resources at a problem like brute force key cracking. The third one happens, but not as radically or regularly as improvements in CPUs and networks.

It's the 4th one that's the real dystopian nightmare. It's the only one that can really make PKI obsolete. This is, in part, because the other 3 reasons make cracking faster, but at the same time standards are advancing to make keys larger and more difficult to crack. The Black Hat presentation Schneier refers to notes that this race may force RSA keys (the keys used with factoring) to unmanageably large sizes, but ECC remains as an escape valve, because it needs much smaller keys and exponential time to crack.

It's true that changing software is a burden and, because of that, many companies are still using old, obsolete crypto algorithms, but the fact remains that modern, strong crypto options are available to those willing to invest in them. But no matter how much you invest in PKI, if the basic mathematical assumptions behind them are broken, the security is non-existent.

Schneier is not especially worried about it. He disputes the significance of recent mathematical work claimed to address the discrete log problem and says he knows of no mathematical work that would really undermine the important assumptions.

This only makes the problem a little less scary. Progress on cracking ECC may be happening even if Schneier doesn't know about it; after all, you could sell such technique for a hell of a lot of money. The main reason I won't worry about it is that I may as well worry about a comet colliding with earth. There's nothing I can do to defend myself and it may never happen, or at least not for so long that solutions will be available. But it does give me pause to think that almost all trust on the Internet rests on the intractability of one specific mathematical problem.