Microsoft to implement 'Catapult' programmable processors in its datacenters

Microsoft researchers have been experimenting with field-programmable gate array (FPGA) chips in an attempt to make the company's datacenters more efficient.

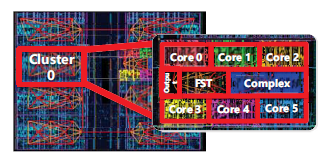

Researchers collaborated with Microsoft's Bing team on a pilot of "Catapult," a programmable hardware/software "fabric," running on more than 1,600 Microsoft datacenter servers equipped with Intel Xeon processors and Altera FPGA chips. The goal of the pilot was to see whether FPGA-enhanced servers could deliver faster, higher-quality search results at a lower cost. The answer, it turned out, was yes, and Microsoft now plans to start processing customer Bing searches on FPGA-enhanced servers in early 2015.

"The system takes search queries coming from Bing and offloads a lot of the work to the FPGAs, which are custom-programmed for the heavy computational work needed to figure out which webpages results should be displayed in which order," as Wired explains in its write-up about the new technology. According to Doug Burger, Director of Client & Cloud Apps at Microsoft Research (MSR), who is heading up the pilot, the FPGAs are 40 times faster than a CPU at processing Bing's custom algorithms, and the overall system will be twice as fast as Bing's existing datacenter system. As a result, Microsoft will be able to cut in half the number of servers it uses to serve these Bing queries.

The pilot is described in a new Microsoft Research white paper, published on June 16, entitled "A Reconfigurable Fabric for Accelerating Large-Scale Datacenter Services."
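The article does not say how the Bing workload is split between the Xeon CPUs and the FPGAs, but a simple Amdahl's-law model helps show why a 40x speedup on the offloaded work can translate into only a roughly 2x gain overall. The Python sketch below is purely illustrative: the function names are hypothetical, and it assumes a single task whose offloaded fraction runs 40 times faster while the rest stays on the CPU. That is a simplification of a throughput-oriented datacenter service, not the analysis in the white paper.

```python
# Illustrative Amdahl's-law model (hypothetical; not taken from the Catapult paper).
# Assumes a single task in which a fraction of the work is offloaded to an
# accelerator that runs it `accel_factor` times faster; the rest stays on the CPU.


def amdahl_speedup(offloaded_fraction: float, accel_factor: float) -> float:
    """Overall speedup when `offloaded_fraction` of the work is accelerated."""
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be in [0, 1]")
    if accel_factor <= 0.0:
        raise ValueError("accel_factor must be positive")
    # New run time, normalized so that the original run time is 1.
    new_time = (1.0 - offloaded_fraction) + offloaded_fraction / accel_factor
    return 1.0 / new_time


def fraction_for_target(target_speedup: float, accel_factor: float) -> float:
    """Offloaded fraction p that yields `target_speedup` overall.

    Solves 1/S = (1 - p) + p/A for p, giving p = (1 - 1/S) / (1 - 1/A).
    """
    if accel_factor <= 1.0:
        raise ValueError("accel_factor must exceed 1")
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / accel_factor)


if __name__ == "__main__":
    ACCEL = 40.0   # FPGA speedup on the offloaded work, as quoted in the article
    TARGET = 2.0   # overall system speedup, as quoted in the article
    p = fraction_for_target(TARGET, ACCEL)
    print(f"fraction of work offloaded: {p:.3f}")
    print(f"overall speedup check: {amdahl_speedup(p, ACCEL):.2f}x")
    # If throughput scales linearly with speed, the same load needs 1/S the servers.
    print(f"servers needed vs. today: {1.0 / TARGET:.0%}")
```

Under these assumptions, offloading a little over half of the per-query work (about 51%) is enough for a 40x kernel speedup to produce a 2x overall speedup. The CPU-bound remainder caps the gain. The halving of the server count likewise assumes that throughput scales linearly with speed.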

Microsoft Research has been working in the FPGA area for at least the past several years. For example, Microsoft Technical Fellow Chuck Thacker has been working with industry and academic researchers in the Research Accelerator for Multiple Processors (RAMP) consortium on building FPGA-based systems. FPGAs are semiconductors that can be custom-configured after they're manufactured.

In the white paper, the researchers predicted that FPGA programmability is going to be an issue in the long term. Currently, FPGAs can be programmed with languages such as Scala and OpenCL, plus FPGA-targeted tools. But more integrated development tools are going to be needed within the next 10 to 15 years, "well past the end of Moore's Law," the paper's authors said.

"We conclude that distributed reconfigurable fabrics are a viable path forward as increases in server performance level off, and will be crucial at the end of Moore’s Law for continued cost and capability improvements. Reconfigurability is a critical means by which hardware acceleration can keep pace with the rapid rate of change in datacenter services," the authors concluded.