DeepMind AI helps Google slash datacenter energy bills

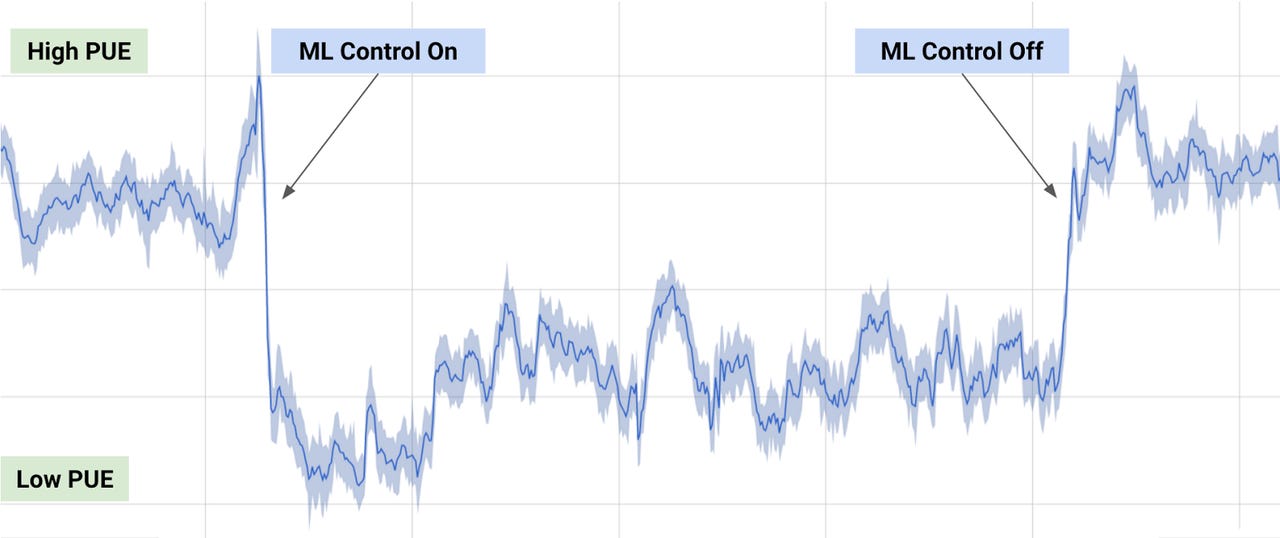

The results of Google's machine learning test on PUE at its datacenters.

Google's $500m purchase of DeepMind is paying off in more ways than one, helping the search firm slash the energy it uses to cool its datacenters by 40 percent.

The DeepMind-powered cooling savings translate into an overall 15 percent reduction in PUE overhead at its datacenters. PUE (power usage effectiveness) is the ratio of a building's total energy use to the energy used by its IT equipment, so the closer the figure is to 1.0, the less energy goes to overheads such as cooling.
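To make the arithmetic concrete, the sketch below shows how a cut in cooling energy feeds through to PUE. The energy figures are invented for illustration and are not Google's data, and splitting overhead into "cooling" and "other" (for example electrical losses) is an assumption of the example. It also shows why a 40 percent cooling saving produces a smaller percentage cut in total PUE overhead: cooling is only part of that overhead.

```python
def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT energy."""
    total_kwh = it_kwh + cooling_kwh + other_overhead_kwh
    return total_kwh / it_kwh


def overhead_reduction(before: float, after: float) -> float:
    """Fractional drop in PUE overhead (PUE - 1), i.e. energy not spent on IT."""
    return ((before - 1.0) - (after - 1.0)) / (before - 1.0)


# Hypothetical facility: 1,000 kWh of IT load, 80 kWh of cooling,
# and 40 kWh of other overhead such as electrical losses.
it, cooling, other = 1000.0, 80.0, 40.0

pue_before = pue(it, cooling, other)
pue_after = pue(it, cooling * (1 - 0.40), other)  # 40% less cooling energy

print(f"PUE before: {pue_before:.3f}")  # 1.120
print(f"PUE after:  {pue_after:.3f}")   # 1.088
print(f"Overhead reduction: {overhead_reduction(pue_before, pue_after):.1%}")  # 26.7%
```

With these made-up figures, cooling is two-thirds of the overhead, so a 40 percent cooling cut trims overhead by about 27 percent; the smaller cooling's share of overhead, the smaller the overall figure.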

With the shift to cloud computing, the tech sector has emerged as one of the world's biggest users of energy, much of it from dirty sources such as coal. To help cut the carbon footprint of the 4,402,836MWh its datacenters used in 2014, Google has, for example, built a datacenter in Finland that uses seawater to cool its equipment, and pays Swedish wind farms to offset its energy consumption elsewhere.

DeepMind offers yet another answer to this challenge by allowing Google to optimize its datacenters' efficiency with a series of deep neural networks that predict future energy requirements and provide recommended actions to achieve an optimal PUE.

Over the past few months, DeepMind's researchers have helped Google's datacenter team develop a system of neural networks trained on data from its facilities' various sensors. DeepMind co-founder Demis Hassabis told Bloomberg the system controls over 120 variables, ranging from cooling systems to windows.

"We accomplished this by taking the historical data that had already been collected by thousands of sensors within the data center (data such as temperatures, power, pump speeds, setpoints, etc.) and using it to train an ensemble of deep neural networks," said a joint post by DeepMind research engineer Rich Evans and Google datacenter engineer Jim Gao.

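As a rough illustration of what training "an ensemble of deep neural networks" on historical sensor data involves, the sketch below trains several tiny one-hidden-layer networks (far shallower than the deep models described) on synthetic sensor snapshots, each from its own random initialization, and averages their predictions. The three input features, the synthetic target standing in for future PUE, and every hyperparameter are hypothetical; the post does not describe the real inputs, architecture or training setup at this level.

```python
import math
import random


def make_synthetic_data(n, seed):
    """Hypothetical sensor snapshots (outside temp, pump speed, setpoint), each scaled to [0, 1]."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = [rng.random() for _ in range(3)]
        # Made-up smooth target standing in for an average future PUE reading.
        y = 1.1 + 0.05 * x[0] + 0.03 * x[1] ** 2 - 0.02 * x[2]
        rows.append((x, y))
    return rows


class TinyNet:
    """One tanh hidden layer and a linear output, trained with plain SGD."""

    def __init__(self, n_in, n_hidden, seed):
        rng = random.Random(seed)  # each member gets its own initialization
        self.w1 = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.gauss(0, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x):
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def train(self, data, epochs, lr):
        for _ in range(epochs):
            for x, y in data:
                h = self._hidden(x)
                err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - y
                # Gradients of 0.5 * err**2, applied one sample at a time.
                for j, hj in enumerate(h):
                    grad_pre = err * self.w2[j] * (1 - hj * hj)  # uses w2 before its update
                    self.w2[j] -= lr * err * hj
                    self.b1[j] -= lr * grad_pre
                    for i, xi in enumerate(x):
                        self.w1[j][i] -= lr * grad_pre * xi
                self.b2 -= lr * err


def ensemble_predict(models, x):
    """The ensemble's prediction is the mean of its members' predictions."""
    return sum(m.predict(x) for m in models) / len(models)


def mse(predict, data):
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)


train_set = make_synthetic_data(200, seed=1)
test_set = make_synthetic_data(50, seed=2)
ensemble = [TinyNet(n_in=3, n_hidden=8, seed=s) for s in range(5)]

before = mse(lambda x: ensemble_predict(ensemble, x), test_set)
for net in ensemble:
    net.train(train_set, epochs=30, lr=0.05)
after = mse(lambda x: ensemble_predict(ensemble, x), test_set)
print(f"held-out MSE before training: {before:.4f}, after: {after:.6f}")
```

Averaging independently initialized members is one common way to build an ensemble; it smooths out the quirks of any single network, and the spread between members can hint at how uncertain a prediction is.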
"Since our objective was to improve data center energy efficiency, we trained the neural networks on the average future PUE (Power Usage Effectiveness), which is defined as the ratio of the total building energy usage to the IT energy usage. We then trained two additional ensembles of deep neural networks to predict the future temperature and pressure of the data center over the next hour. The purpose of these predictions is to simulate the recommended actions from the PUE model, to ensure that we do not go beyond any operating constraints," they wrote.

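The two-stage scheme in the quote, a PUE model that scores candidate actions plus separate temperature and pressure models that vet them against operating limits, can be sketched as a search over candidate settings. Everything here is hypothetical: the "models" are hand-written stand-in functions rather than trained ensembles, they ignore the one-hour prediction horizon, and the setting names, limits and coefficients are invented for illustration.

```python
from dataclasses import dataclass
from itertools import product
from statistics import mean
from typing import Callable, Dict, List, Optional, Tuple

Action = Dict[str, float]
Model = Callable[[Action], float]


@dataclass
class Ensemble:
    """Averages several member models (hand-written stand-ins for trained networks)."""
    members: List[Model]

    def predict(self, action: Action) -> float:
        return mean(m(action) for m in self.members)


def recommend(candidates: List[Action], pue_model: Ensemble, temp_model: Ensemble,
              pressure_model: Ensemble, max_temp_c: float,
              pressure_range: Tuple[float, float]) -> Optional[Action]:
    """Return the candidate with the lowest predicted PUE whose predicted
    temperature and pressure stay within the operating limits, or None."""
    low, high = pressure_range
    safe = [a for a in candidates
            if temp_model.predict(a) <= max_temp_c
            and low <= pressure_model.predict(a) <= high]
    if not safe:
        return None  # nothing passes the check, so make no recommendation
    return min(safe, key=pue_model.predict)


# Hypothetical stand-in models: PUE falls as fan speed drops and the chiller
# setpoint rises, but those same changes push temperature and pressure around.
pue_model = Ensemble([
    lambda a: 1.10 + 0.05 * a["fan_speed"] + 0.010 * (24 - a["setpoint_c"]),
    lambda a: 1.12 + 0.04 * a["fan_speed"] + 0.012 * (24 - a["setpoint_c"]),
])
temp_model = Ensemble([
    lambda a: 18 + 0.9 * (a["setpoint_c"] - 16) + 6 * (1 - a["fan_speed"]),
])
pressure_model = Ensemble([lambda a: 10 * a["fan_speed"]])

candidates = [{"fan_speed": f, "setpoint_c": s}
              for f, s in product([0.5, 0.7, 0.9], [18.0, 20.0, 22.0])]

best = recommend(candidates, pue_model, temp_model, pressure_model,
                 max_temp_c=25.0, pressure_range=(6.0, 10.0))
print(best)  # {'fan_speed': 0.9, 'setpoint_c': 22.0}
```

The unconstrained optimum here (lowest fan speed, highest setpoint) is rejected because the stand-in models predict it would run too hot and fall below the minimum pressure; that filtering step is the job the quote assigns to the temperature and pressure ensembles.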
Google considers its DeepMind power savings a rare and major breakthrough in its mission to become 100 percent clean-powered. It said cooling is incredibly difficult to optimize because industrial equipment such as pumps, chillers, and cooling towers responds in complex ways to constantly changing and unpredictable conditions, such as the weather. Additionally, each of its datacenters has a different architecture, making it harder to roll out one solution for all facilities.

Even so, the algorithm the two teams developed gives Google a general-purpose framework for understanding how its datacenters operate. So far it has been tested in a live environment at only one facility, but Google says it plans to apply the technique to other datacenter challenges in the coming months.

Beyond cooling, Google sees potential for the technique in improving power plant conversion efficiency, that is, getting more energy from the same unit of input. On that count, Google says it already gets about 3.5 times more computing power from the same amount of energy as it did five years ago. It also believes the approach could help cut energy consumption in semiconductor manufacturing and other manufacturing facilities.