How Google Lookout's AI can describe images for the visually impaired

Even before the generative AI boom, the Google Lookout app used AI to help the blind and low-vision community explore their surroundings with their phone cameras. Launched in March 2019, the app recently added a handy AI-powered feature: Image Q&A.


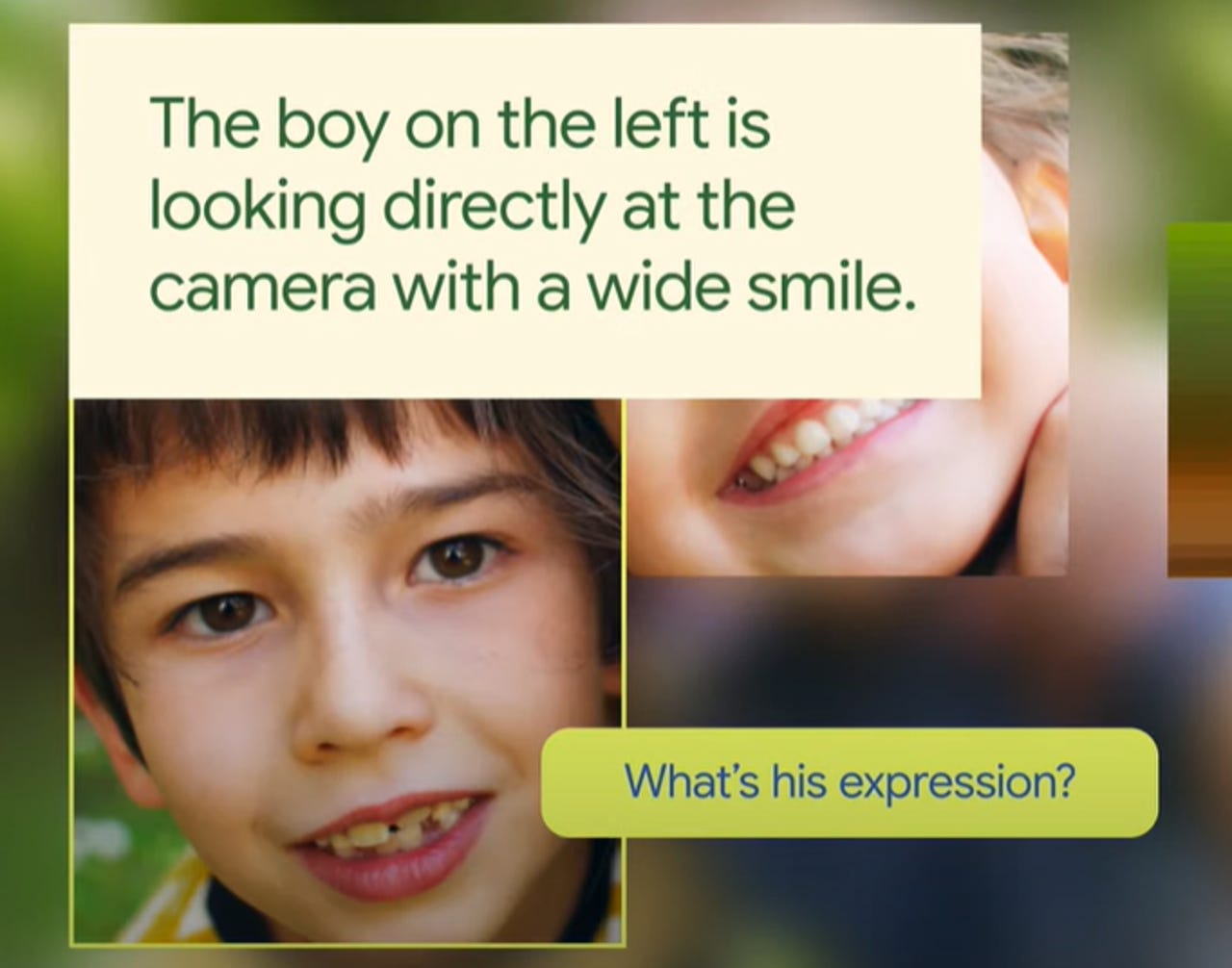

The Image Q&A feature lets users upload a photo and ask questions about it, either by voice or by typing. The app then responds with a detailed description of the image that addresses their question.

For example, you can ask what color a subject in the image is, ask for specific details about a subject, such as their facial expression, or even ask the app to read text in the image, such as what a sign says.

Although the feature was released in the fall, Google this week shared more details about it in a post on X, formerly Twitter, which also showcases how users have benefited from the technology.

> How can AI help blind and partially-sighted people better perceive the world? ✨
>
> We explain how our technology powers Image Q&A: a feature in @Google's Lookout app enabling communities to ask questions about images.
>
> Watch the Audio Described version.→ https://t.co/KNqc6KbTWo pic.twitter.com/TLzNo7Ub0o
>
> Google DeepMind (@GoogleDeepMind), February 6, 2024

According to Google, the technology is powered by a Google AI model that was trained to understand visual content and describe it in specific detail.

The feature is available in the Lookout app, which is free to download. However, it is only available in English in the US, the UK, and Canada.


The app also includes several other innovative features, including a Text mode, which lets users skim text and hear it read aloud; a Food Label mode, which can identify packaged foods by their labels; a Currency mode, which can quickly identify dollars, euros, and Indian rupees; and more.