How just 30 machines beat a warehouse-sized supercomputer to set a new world record

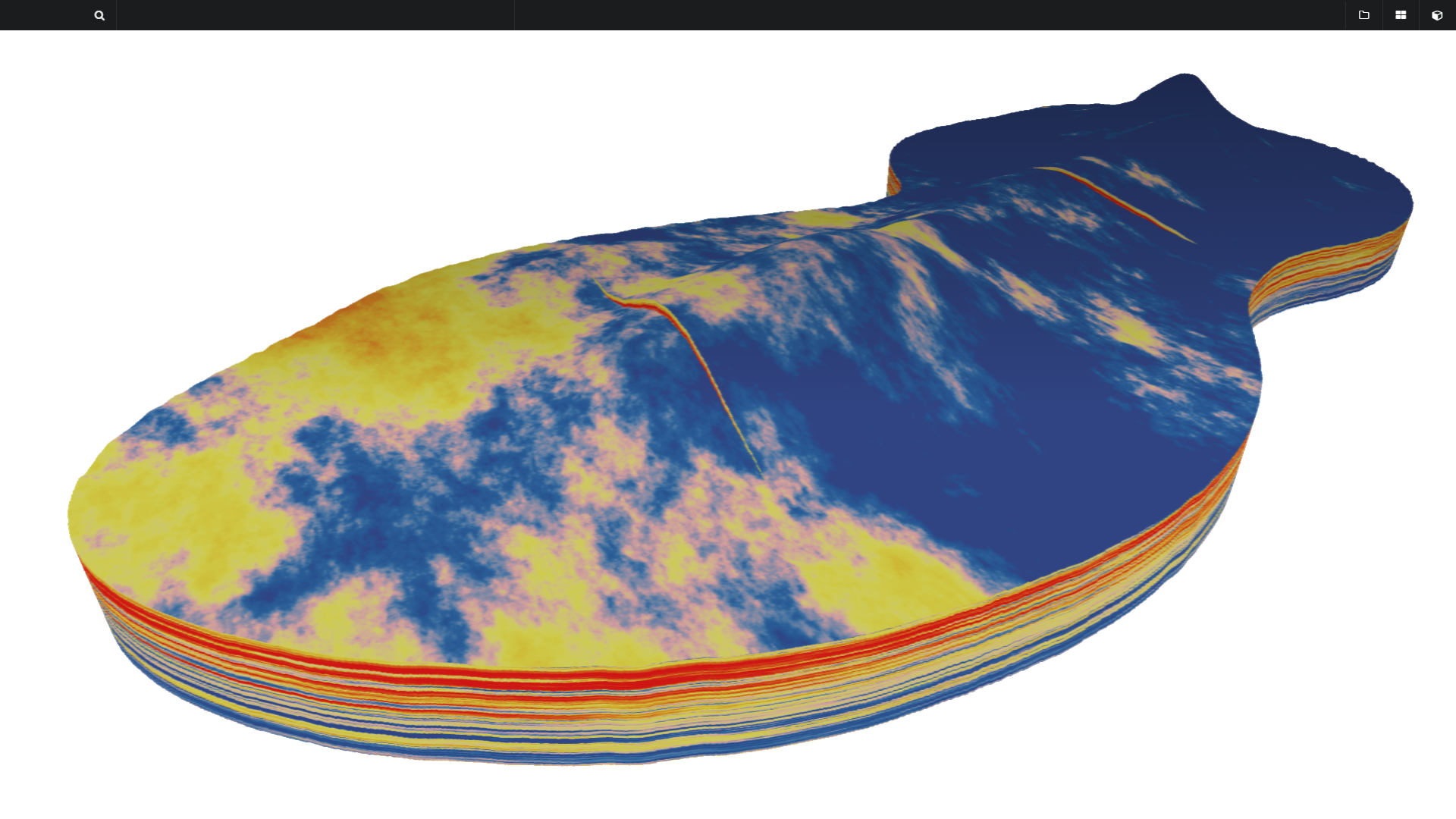

An IBM/Nvidia cluster completed a one-billion-cell Echelon petroleum reservoir simulation (shown above) in 92 minutes.

A high-performance computing record set by a cluster of more than 22,000 compute nodes has been shattered by just 30 machines.

The massive reduction in computing infrastructure needed to set a new record for simulating oil, water, and gas flow was made possible by tapping into the huge parallel processing ability of graphics-processing units (GPUs).

While the original record, set by ExxonMobil just a few months ago, used a cluster of more than 716,000 CPU cores to run the simulation, this new approach by IBM and Stone Ridge Technology relied on 30 IBM OpenPower servers equipped with 120 Nvidia Tesla P100 GPU accelerators.

The IBM/Nvidia cluster completed a one-billion-cell Echelon petroleum reservoir simulation in 92 minutes, faster than ExxonMobil's approach and using what IBM says is one-tenth of the power and one-hundredth of the space.

Each of the IBM Power System S822LC machines used in this latest test pairs two IBM POWER8 CPUs with four Tesla P100 GPUs, linked by the high-speed, bidirectional 40GBps NVLink interconnect.

Sumit Gupta, IBM's VP of high-performance computing and analytics, said the result demonstrates the strengths of this tightly coupled GPU/CPU architecture.

"The bottom line is that by running Echelon on IBM Power Systems, users can achieve faster runtimes using a fraction of the hardware," he said.

"One recent effort used more than 700,000 processors in a server installation that occupies nearly half a football field. Stone Ridge did this calculation on two racks of IBM machines that could fit in the space of half a ping-pong table."

GPUs excel at tasks that can be broken into subtasks, which can then be handled in parallel by the thousands of power-efficient cores within each GPU. Each of the P100 GPUs has 3,584 cores that can be used for parallel compute, with more than 430,000 cores across the cluster used to run the simulation.
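The cluster-wide core count follows directly from the figures quoted in this article: 30 servers, four P100 GPUs per server, and 3,584 cores per GPU. As a rough check of that arithmetic, here is a minimal Python sketch; the constant and function names are made up for illustration and do not come from IBM, Nvidia, or Stone Ridge.

```python
# Figures quoted in the article; all names here are illustrative only.
SERVERS = 30             # IBM Power System S822LC machines
GPUS_PER_SERVER = 4      # Tesla P100 GPUs in each machine
CORES_PER_P100 = 3584    # parallel compute cores per P100


def cluster_totals(servers, gpus_per_server, cores_per_gpu):
    """Return (total GPUs, total GPU cores) for a homogeneous cluster."""
    gpus = servers * gpus_per_server
    return gpus, gpus * cores_per_gpu


total_gpus, total_gpu_cores = cluster_totals(
    SERVERS, GPUS_PER_SERVER, CORES_PER_P100
)

print(total_gpus)       # 120
print(total_gpu_cores)  # 430080
```

The result, 430,080, matches the "more than 430,000 cores" figure. Note that a GPU core is far simpler than a CPU core, so this total is not directly comparable with the CPU core count of the earlier record.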

While the types of tasks suited to the massively parallel processing offered by GPUs are still limited, Nvidia says the number continues to grow, and today includes computational fluid dynamics, structural mechanics, climate modeling, and other tasks related to manufacturing and scientific discovery.

Nvidia recently began pushing its GPUs as a technology suited to training machine-learning models, particularly the Tesla P100 GPU and its own DGX-1 server.

This month, IBM also added the Nvidia Tesla P100 GPU to the IBM Cloud, providing the option to equip individual IBM Bluemix bare-metal cloud servers with two P100 accelerator cards.