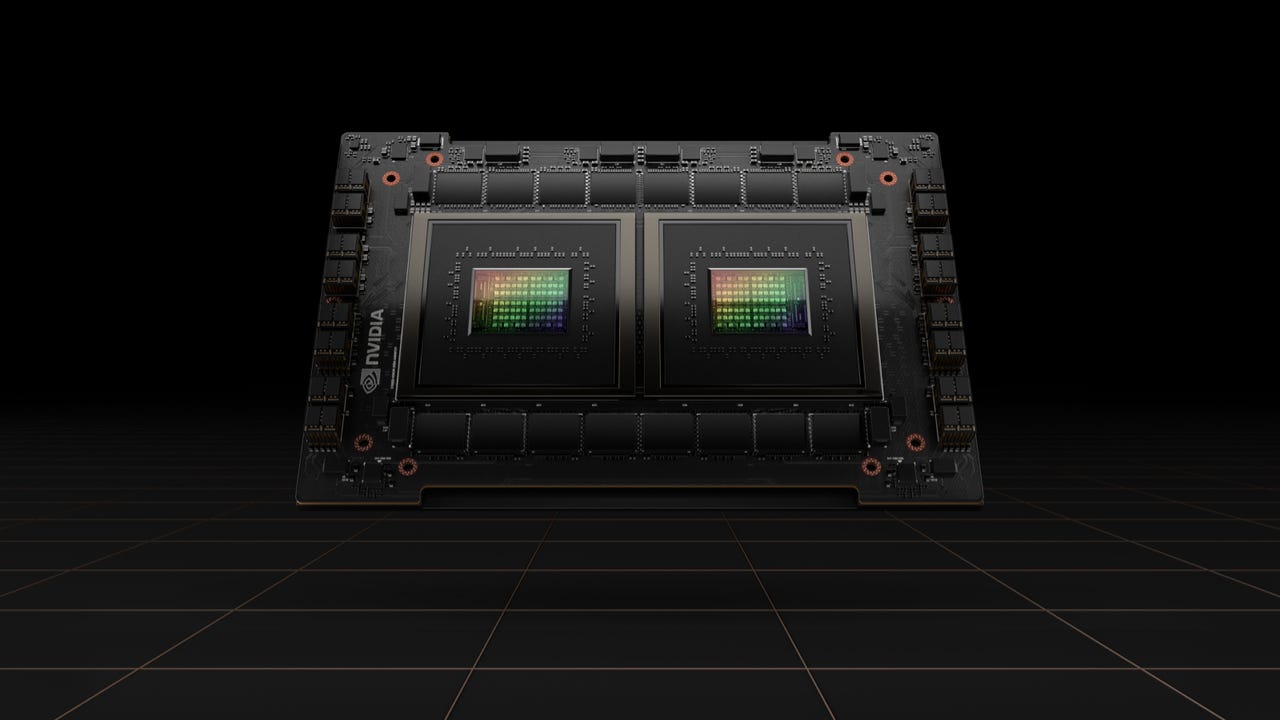

Nvidia unwraps Ampere successor Hopper and 80 billion transistor H100 GPU

Nvidia has announced that its new architecture for data centre AI workloads, the successor to Ampere, is called Hopper, after computing pioneer Grace Hopper.

The first product based on Hopper will be the H100, which contains 80 billion transistors, is built on TSMC's 4N process, and delivers three to six times more performance than the Ampere-based A100. The GPU will support PCIe Gen5, fourth-generation NVLink, and HBM3 memory, and have 3TBps of memory bandwidth.

"Twenty H100 GPUs can sustain the equivalent of the entire world's internet traffic, making it possible for customers to deliver advanced recommender systems and large language models running inference on data in real time," Nvidia said.

The GPU will also have the second generation of multi-instance technology, and be able to support seven tenants on a single GPU. The company also says it will be able to do so securely, thanks to its confidential computing support.

"Hopper brings confidential computing to accelerated computing by using a combination of hardware and software. When confidential computing is enabled or a trusted execution environment is created through a confidential virtual machine that encompasses both the CPU and GPU together, the data transfers between the CPU to GPU, and GPU to GPU in a node are encrypted and decrypted at the full PCIe line rate," Nvidia senior director of data center computing Paresh Kharya said.

"H100 also has a hardware firewall that secures the entire workload on the H100 GPU, and also isolates it between the memory and the compute engines, so that no one other than the owner of the trusted execution environment with the key can touch any of the data encoded inside it.

"This design guarantees total VM isolation and prevents access or modification by any unauthorised entity including the hypervisor, the host operating system, or even anyone with physical access."

Nvidia said the H100 could handle the 105-layer, 530 billion parameter monster model, the Megatron-Turing 530B, with up to 30-times higher throughput. When training the Mixture of Experts Transformer model with 395 billion parameters on 8,000 GPUs, Nvidia said rather than taking seven days using A100s, training on H100s takes only 20 hours.
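As a rough check on the training claim, the implied speedup can be worked out from the two durations Nvidia quoted. The snippet below is only a back-of-the-envelope sketch: the `speedup` helper is an illustrative name of our own, and it assumes both runs used the same 8,000-GPU cluster size.

```python
def speedup(baseline_hours: float, new_hours: float) -> float:
    """Return how many times faster the new run is than the baseline."""
    return baseline_hours / new_hours

# Figures as quoted by Nvidia for the 395 billion parameter model.
a100_hours = 7 * 24   # seven days on A100s
h100_hours = 20       # 20 hours on H100s

print(f"Implied speedup: {speedup(a100_hours, h100_hours):.1f}x")
```

On those numbers, the H100 run comes out roughly 8.4 times faster for this particular workload.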

The company will bundle eight H100 GPUs together for its DGX H100 system that will deliver 32 petaflops on FP8 workloads, and the new DGX Superpod will link up to 32 DGX H100 nodes with a switch using fourth-generation NVLink capable of 900GBps.
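The DGX figures can be broken down with some simple arithmetic. The sketch below assumes the 32 petaflops is a peak number split evenly across the eight GPUs, and that throughput adds up linearly across nodes, which real workloads are unlikely to achieve. The constant names are our own.

```python
# Figures quoted by Nvidia for the DGX H100 and DGX Superpod.
GPUS_PER_DGX = 8
DGX_FP8_PETAFLOPS = 32
MAX_NODES_PER_SUPERPOD = 32

# Assumption: peak FP8 throughput divides evenly across the GPUs in a node.
per_gpu_petaflops = DGX_FP8_PETAFLOPS / GPUS_PER_DGX

# Assumption: peak throughput scales linearly with the number of nodes.
superpod_gpus = GPUS_PER_DGX * MAX_NODES_PER_SUPERPOD
superpod_petaflops = DGX_FP8_PETAFLOPS * MAX_NODES_PER_SUPERPOD

print(f"Per-GPU FP8 peak: {per_gpu_petaflops:.0f} petaflops")
print(f"Superpod GPUs: {superpod_gpus}")
print(f"Superpod FP8 peak: {superpod_petaflops} petaflops")
```

Under those assumptions, each H100 contributes 4 petaflops of FP8 compute, and a full 32-node Superpod holds 256 GPUs with a combined peak of 1,024 petaflops, or about one exaflop.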

For those with more modest budgets, the GPU is set to be available from the usual cloud suspects.

Availability for the H100 will start in the third quarter.

Alongside the H100, Nvidia also unveiled its Grace Superchip, based on a pair of Grace chips connected using NVLink-C2C for chip-to-chip interconnect. The superchip has 144 Arm cores in a single socket, LPDDR5x memory with ECC, and draws 500 watts of power.

Both the Grace Superchip, and the CPU and GPU pairing in the Grace Hopper Superchip announced last year, will be available in the first half of 2023.

Elsewhere at GTC, Nvidia announced its Drive Hyperion 9 automotive system would be available in 2026 production vehicles, and include 14 cameras, nine radars, three lidars, and 20 ultrasonic sensors for automated and autonomous driving, and three cameras and one radar for looking inside the vehicle.