How is big data faring in the enterprise?

It's certainly one of the hottest new buzzwords in technology, though the meaning of big data typically depends on whom you ask. Yet it's also clear that big data, an important reformulation of how we store and process our digital information, continues to make a big splash as a major IT trend of this half-decade. Certainly the market estimates are optimistic, with Deloitte recently pegging the size of the market at between $1.3 billion and $1.5 billion this year, while IDC forecasts the industry will be a whopping $16.9 billion by 2015.

But these large numbers tend to obscure the fundamental changes that currently seem to be taking place under the rubric of big data.

The first of these is the data-first ethos: tapping into and processing ground truth (by seeking out the best raw data) and then deriving insight from what is uncovered (domain-specific business intelligence), rather than trying to find data to support one's already-completed strategic decision making.

One of the better-known examples of data-first thinking is the famous "Moneyball" story, told in the 2003 book by Michael Lewis, of how the Oakland A's bucked tradition and switched to heavy data analysis to identify their highest performers, with considerable success. Though only one data point, this story -- along with a growing list of others -- is leading many to believe that data-first thinking may be the solution to many long-standing problems, helping to combat everything from crime and disease to pollution and poverty. It's also perhaps the key to resolving somewhat more mundane challenges in our businesses.

The second major change is the shift, for the first time in over a generation, away from the relational data model as the definitive standard for how to process information. To be sure, the growing adoption in customer-facing technology of emerging platforms such as Hadoop and NoSQL-style databases is still most prevalent in Web startups and consumer services. Yet the petabytes and exabytes of data found in many business contexts today practically demand technologies that scale well in the face of exponentially growing datasets and shrinking time scales.

For a variety of reasons too long to enumerate here, the relational model has at long last encountered both a serious challenge to its hegemony and real challengers that can frequently do better at handling today's data volumes and types. And though many organizations will continue to use relational technology to create some of their big data solutions, it's no longer the only option, particularly as unstructured data is now growing much faster than classical structured data.

The third change is the move towards making big data a more operational component of the way organizations work and how externally-facing products function. While data scientists are often required to get the best outcomes, the results of their work are often applications or data appliances that are usable by just about anyone. Just as Google enabled the layperson to query the entire contents of the Web with a few keywords, the next generation of enterprise big data seems to be about connecting workers with the data landscape of their organizations in a way that doesn't typically require IT wizards in white robes. Thus business solutions based on big data technology must be readily approachable by the average line worker in order to have a sustained and meaningful business impact.

The data points on enterprise adoption of big data

Let's take a look at what organizations are actually reporting when it comes to big data implementation and usage today. Drawing on a broad cross section of companies both large and small, the O'Reilly Strata Conference survey published a useful breakdown this year of what its attendees were doing with the technology:

- 18% already had a big data solution

- 28% had no plans at this time

- 22% planned to have a big data solution in six months

- 17% planned to have a big data solution in 12 months

- 15% planned to have a big data solution in two years

Admittedly, attendees of this particular conference were more likely than average to be adopters of big data, so these numbers are a little optimistic, even given that big data is a big tent for a great many technologies that handle large data volumes and analytics.
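As a quick arithmetic check on the breakdown above, here is a minimal Python sketch that tallies the shares. The dictionary keys and variable names are my own illustrative labels, not the survey's wording; only the percentages come from the list above.

```python
# Shares from the survey breakdown above (percent of respondents).
# The keys are illustrative labels, not the survey's exact wording.
survey = {
    "already have a solution": 18,
    "no plans at this time": 28,
    "planned within six months": 22,
    "planned within 12 months": 17,
    "planned within two years": 15,
}

# The five categories should account for every respondent.
total = sum(survey.values())

# Respondents planning a solution on any of the three time horizons.
planning = sum(share for label, share in survey.items()
               if label.startswith("planned"))

# Respondents who either have a solution or plan to have one.
have_or_planning = survey["already have a solution"] + planning

print(f"total: {total}%")
print(f"planning within two years: {planning}%")
print(f"have or plan to have: {have_or_planning}%")
```

On these figures, roughly seven in ten attendees either had a big data solution or planned to have one within two years, against 28% with no plans.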

However, the story becomes even more interesting when we look at specific sectors. For example, the insurance industry recently reported that 15-20% of insurers are actively preparing for big data solutions. Government, one of the larger potential beneficiaries of big data according to the seminal McKinsey report on the subject, is itself experiencing relatively slow adoption, with a recent survey of public sector CIOs and IT managers reporting it will take three years to start processing their data this way. If we look at function instead of industry, we can see that sales processes are likely poised to be revolutionized by big data. A recent analysis by CSO Insights reveals that 71% of companies expect big data to have a significant impact on sales, despite only 16% currently doing so, a gap that many organizations will clearly want to close.

However, acting on the large set of changes that big data entails will clearly happen incrementally, yet broadly, in most companies. Technology, process, infrastructure, and management all have to be put into place, along with hiring data scientists who understand your business (or learn to) and adopting still-esoteric concepts such as DevOps, which will marry the operational aspects with the development aspects of big data to quickly solve business problems by applying data-first analysis combined with just-in-time R&D and deployment.

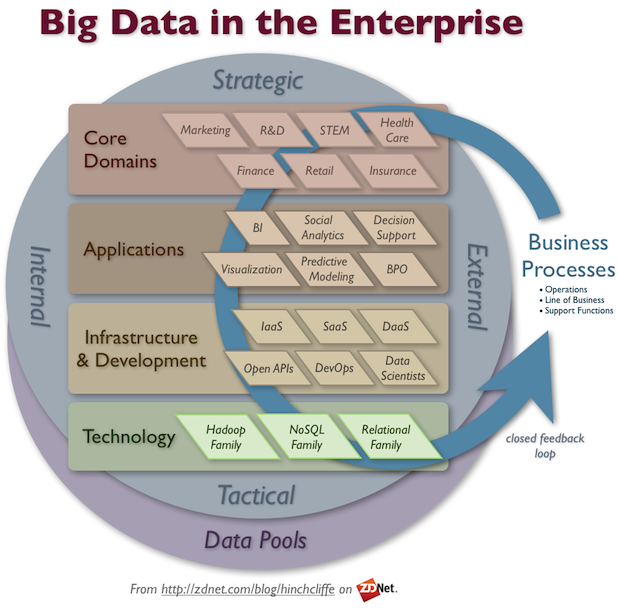

In addition, companies will have to deliver on a big data "stack" in the enterprise. This stack will invariably consist of the components described below, assembled from a mix of open source software, commercial applications, and on-premises and cloud infrastructure, combined with data from just about everywhere. The visual above depicts this notional big data stack, and you can see another, more technology- and product-specific view I pulled together last year.

Breaking down enterprise big data

- Technology. In general, the technologies seem to break down into three major families, two of which are new and one of which is legacy: Hadoop and its variants, the NoSQL family, and relational databases that have added big data features.

- Infrastructure & Development. This includes Infrastructure-as-a-Service (IaaS), Software-as-a-Service (SaaS), Data-as-a-Service (DaaS), Open APIs, DevOps, and data scientists, the last of whom craft solutions from the array of internal and external components in this palette.

- Big Data Applications. Popular application models for big data include business intelligence, social analytics, decision support, visualization and modeling, behavioral prediction, and business process optimization (BPO), but there are many others.

- Domain-Specific Solutions. Once the big data tech, infrastructure, and apps are in place, businesses must focus their efforts on extracting value specific to their industry. Top industries and/or functions for big data (the ones most likely to benefit) include marketing, R&D, scientific/technical/engineering/mathematics (STEM), health care, financial services, retail, and insurance.

- Big Data-Powered Business Processes. To be useful, big data solutions must then be incorporated into an organization's business processes, including operations, line of business, and support functions. In particular, business processes that are both high-value and common will provide the largest ROI.

To summarize all this, it's still early days for this era's growing data deluge. In fact, one of the best quotes of the year about big data is from Ben Werther, who recently observed that we're still "in the pre-industrial age of big data." Most organizations aren't yet doing it at scale, but the writing is on the wall that significant competitive advantage can be had by those that want it. As I predicted earlier this year, social analytics will be one particularly bright spot in big data this year, and organizations already have a good array of tools and vendors to pick from.

Ultimately, the biggest challenge will be in integrating big data effectively into updated and revised business processes. Thus, again, change itself will be the largest overall obstacle as technology outpaces the ability of most organizations to absorb it. This will likely push big data into the cloud as most organizations look for strategies to speed adoption, further hastening the cloud-related migration of so much of IT. This may not be a bad thing.