Hortonworks founder: Ambari 2.0 is as big a deal as Hadoop 2.0

Hadoop firm Hortonworks has been busy. After its IPO in December, and the controversy of creating the Open Data Platform with the likes of Pivotal and IBM, it's just announced a new acquisition, and submitted the Atlas security project to the Apache Software Foundation.

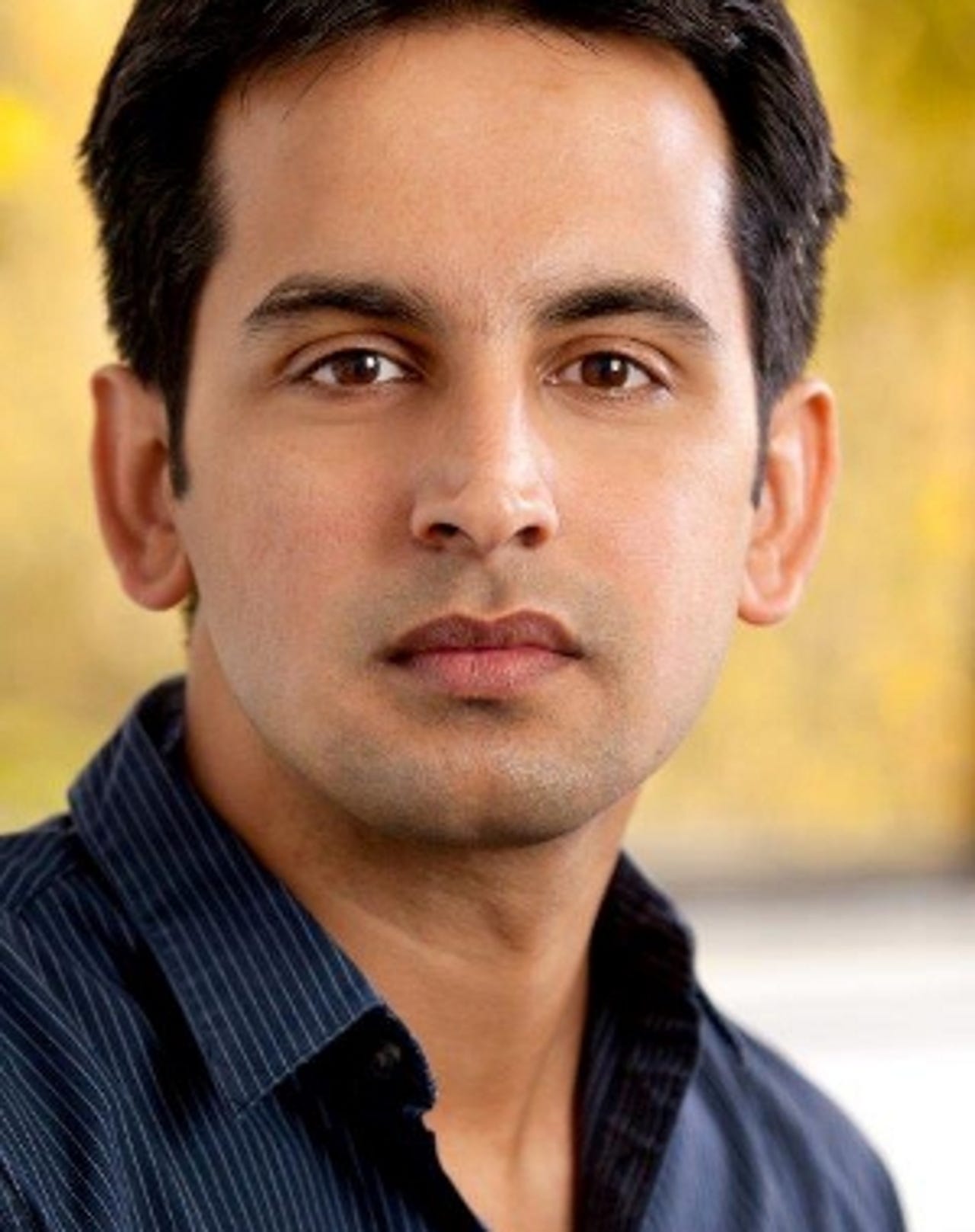

But Hortonworks co-founder and architect Arun Murthy, involved with Hadoop since its earliest days at Yahoo and the man behind the milestone YARN resource-management layer, singles out the new version of Ambari for special mention.

"At this point Ambari is easily the most comprehensive open-source management tool. That's why 2.0 is really such a big deal. It's as much a big deal for Ambari as YARN and Hadoop 2.0 were for the core itself," Murthy said.

"It's becoming the de facto open-source management platform. It's clearly the most widely adopted. It's built for Hadoop but it's not limited to Hadoop."

First among the new additions to Ambari that Murthy describes as key to enterprise users are rolling upgrades, which allow software to be updated without shutting down the entire cluster.

"With 2.0, what happens is we can do an in-place upgrade on the software while making sure your MapReduce application continues to run. It sees no difference," Murthy said.

"Frankly, it was a massive amount of engineering, which we hide from customers. From an Ambari perspective, it's just 'click a button'. But what was important was all the work in the underlying projects: HDFS, YARN, HBase, Oozie, Pig, Hive - you name it. All the 20-odd projects, we had to go fix them to be able to give you a seamless experience when you're upgrading the software.

"It was not enough just to do this in YARN and HDFS because your MapReduce job might be accessing something in HBase, or a Hive metastore."

Ambari 2.0 also automates the process of setting up security - for example, the network-authentication protocol Kerberos - and introduces a new Hadoop metrics and monitoring system to replace Ganglia and Nagios.

"Those don't scale and we've known that for a while. In some ways monitoring Hadoop is a big-data problem, so we said, 'Why not use Hadoop to monitor itself?'. We now have all the metrics flowing into an HBase cluster, which Ambari automatically manages for you," Murthy said.

"There's nothing inherent in the platform to limit your metrics collections. You can pick whether you want metrics for two years or for 20 years. We don't really care, you just make the choice - as much as you want or can afford."

Other new Ambari features include auto setup, which analyses the hardware, software, and environment where Hadoop is being deployed and automatically identifies appropriate settings.

There is also a custom view for SQL-on-Hadoop query engine Hive and the Tez framework, which allows users to analyse a SQL query and understand its performance characteristics.

"We have great UIs - great visual panes where you can dig in and do a self-service debug. In the past when you had to write a Hive query, you had to work with someone who really knew Hive to understand how to debug it," Murthy said.

"By putting all that into Ambari, you now get a view where our goal - and we'll get there in many steps - is for it to be so obvious that the user can understand why a query is fast or slow. In some ways it's a reflection of Ambari maturing. We've gone from having all these features, to now focusing on usability and debug-ability - and we'll do more of that."

Ambari feeds into the Cloudbreak technology, gained last week with the acquisition of SequenceIQ, through its Blueprints feature, which specifies a stack, a component layout, and the configurations for a Hadoop cluster instance. Cloudbreak enables multiple instances and versions of Hadoop clusters to be run in the cloud, in Docker containers, or on bare metal.
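As a rough illustration of what such a definition might contain, the following Python sketch builds a Blueprint-style document with the three parts named above: a stack, a component layout, and configurations. The field names follow the general shape of Ambari's Blueprint JSON as commonly documented, but the helper `make_blueprint`, the blueprint name, the stack version, and the component choices are assumptions made for illustration and should be checked against the Ambari documentation before use.

```python
import json


def make_blueprint(name, stack_name, stack_version, host_groups):
    """Build a Blueprint-style dict: stack, component layout, configurations.

    host_groups maps a group name to (host count, list of component names).
    This helper is hypothetical; it is not part of Ambari.
    """
    return {
        "Blueprints": {
            "blueprint_name": name,
            "stack_name": stack_name,
            "stack_version": stack_version,
        },
        "host_groups": [
            {
                "name": group,
                "cardinality": str(count),
                "components": [{"name": c} for c in components],
            }
            for group, (count, components) in host_groups.items()
        ],
        # Left empty here; a real definition would carry service settings.
        "configurations": [],
    }


# An illustrative "IoT" layout with only Storm and Kafka (plus ZooKeeper).
# Component names and the stack version are assumptions.
iot_blueprint = make_blueprint(
    "iot-sketch",
    "HDP",
    "2.2",
    {
        "master": (1, ["ZOOKEEPER_SERVER", "NIMBUS"]),
        "worker": (3, ["SUPERVISOR", "KAFKA_BROKER"]),
    },
)

print(json.dumps(iot_blueprint, indent=2))
```

The point of the sketch is that the definition is plain data: the same document could be edited to drop or add components, or handed to a deployment tool, without touching the cluster itself.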

"We understand that Hadoop is getting complex. We have 20-odd components. But in lots of cases you might not really want all the complexity. In IoT [Internet of Things] you probably just want Storm and Kafka. Let's say you want to do analytics. You probably just want Hive and MicroStrategy," Murthy said.

"What Ambari does with Blueprints, you can define a Blueprint - let's say an IoT Blueprint, an analytics Blueprint, a data-science Blueprint - a definition of the components you want. You pick what you want but obviously we can give you pre-built Blueprints that you can modify.

"The cool part is that now you have these Blueprints, you can use Cloudbreak to deploy to the cloud if you choose to. You can either deploy to the cloud or to your internal OpenStack cluster. That really allows you to manage the complexity of your applications in a very simple way."

He added that while many people choose one cloud today, in the future multiple clouds will be commonplace.

"With this you don't really care. Do your IoT thing on Azure, do your data-science thing on Google - it doesn't really matter. It really allows us to give that simple one-click experience," he said.

The Blueprints feature also enables the replacement of certain parts of the Hadoop stack with other components, and offers customised views of that part of the stack.

While so much work has been going into Ambari, Hortonworks has also been involved with SAP and a number of other companies - Aetna, JPMorgan Chase, Merck, SAS, Schlumberger, and Target - on the proposed Apache Atlas data-governance project, which is expected eventually to reside at the bottom layer of Hadoop, with HDFS and YARN.

It will combine with a number of existing Apache projects: Knox for perimeter security; Ranger for central security policies; and Falcon for data lifecycle management. Where Atlas comes in is in managing the metadata in Hadoop.

It can, for example, be used on the audit side of data governance, enabling firms to identify who has touched certain pieces of data, but it also comes into play in conjunction with security policies.

"The whole data management, data governance is a piece of Hadoop that has not been solved. What's been missing in the very core of the platform at the very lowest level of the stack was a core focus on metadata," Murthy said.

"That includes things like tags. We wanted to be able to come in, tag a table or a column as sensitive information. As soon as you tag it, certain security policies should apply."

The Atlas project covers not only setting role-based access policies on data assets, but also role-based access policies on the attributes of data. That feature allows, for example, all data tagged as personally identifiable information, known as PII under US privacy rules, to have access limited by policy despite any transformations it goes through.
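The idea of a policy that follows a tag rather than a specific table can be sketched in a few lines of Python. This is not Atlas or Ranger code: the `Column` class, the `TAG_POLICIES` table, the role names, and `can_read` are all hypothetical, chosen only to show how tagging a column could be enough to restrict access to it.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    """A data attribute carrying governance tags (hypothetical model)."""
    name: str
    tags: set = field(default_factory=set)


# Hypothetical policy table: tag -> roles allowed to read data with that tag.
TAG_POLICIES = {"PII": {"compliance_officer"}}


def can_read(role, column):
    """Deny access if any tag on the column has a policy excluding the role."""
    for tag in column.tags:
        allowed = TAG_POLICIES.get(tag)
        if allowed is not None and role not in allowed:
            return False
    return True


ssn = Column("ssn", {"PII"})
city = Column("city")

print(can_read("analyst", ssn))             # False: PII policy applies
print(can_read("compliance_officer", ssn))  # True
print(can_read("analyst", city))            # True: untagged column
```

Because the check looks only at tags, no policy has to be written for the column itself; attaching the tag is what brings the policy into force.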

"Automatically, regardless of who does what to the data, the policies will inherit all the way down. In the same way that the tags will get inherited, the policies will get inherited," Murthy said.

"As people write MapReduce programs or Spark, or use SQL through Hive, those tags should get inherited as you generate new datasets."

For example, when two columns, one of which contains PII data, are joined, the output is automatically tagged as being influenced by PII, which is known as lineage on the data asset.

"You also want lineage on the processing elements you've applied. You want to know what SQL query was used to generate the dataset. Because you might want to go back in and change the processes or maybe we should write a different SQL query. You get an understanding of the exposure you have from a certain line of code," he said.
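The inheritance and lineage Murthy describes can be modelled, in a very simplified form, as follows. This Python sketch is an assumption-laden illustration rather than how Atlas works internally: the `Dataset` class, the `derive` helper, and the example table names and query are all invented for the purpose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    """A data asset with tags plus lineage (hypothetical model)."""
    name: str
    tags: frozenset = frozenset()
    parents: tuple = ()   # lineage on the data: which inputs produced this
    query: str = ""       # lineage on the processing: what produced this


def derive(name, query, *inputs):
    """Create a new dataset whose tags are the union of its inputs' tags."""
    inherited = frozenset().union(*(d.tags for d in inputs))
    return Dataset(name, inherited, tuple(d.name for d in inputs), query)


customers = Dataset("customers", frozenset({"PII"}))
orders = Dataset("orders")

# Joining a PII-tagged input with an untagged one yields a PII-tagged output.
joined = derive(
    "customer_orders",
    "SELECT * FROM customers c JOIN orders o ON c.id = o.customer_id",
    customers,
    orders,
)

# A further transformation keeps the tag, however many steps removed.
summary = derive(
    "order_summary",
    "SELECT city, COUNT(*) FROM customer_orders GROUP BY city",
    joined,
)

print(sorted(joined.tags), joined.parents)
print(sorted(summary.tags), summary.parents)
```

Recording the query alongside the parent names is what would let someone go back and see which line of processing exposed a tagged dataset, and rewrite it if needed.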

For Murthy, the mixture of businesses involved in the Atlas project is unusual and significant for its potential impact on the wider Hadoop open-source community.

"The value of collaborating with an Aetna or a Merck or SAP is that it allows us to be more mainstream in our thinking on Hadoop. Working with them means we can understand their pain points directly and bring that into the Hadoop platform - particularly in data governance and data management," he said.

"What's also interesting is it shows mainstream enterprises are not only comfortable using open-source software but now they're comfortable contributing back to it."

Murthy believes that these are exactly the type of businesses that would traditionally have worked with proprietary vendors, giving them their requirements and then waiting two years to see the resulting software, which might solve their problems but typically did not.

"Today working with the Atlas initiative, they can directly influence the roadmap by contributing their expertise in code. That's a big change," he said. "The larger the community, the larger the funnel and the better it gets faster."

Murthy points to the IPython-like Zeppelin web-based notebook interface from NFLabs for Spark as an example of the advances that the community continues to conjure up.

"If you're a data scientist, this gives you an elevated user interface where you're not looking at scripts. On a SQL query, you might want a column graph or a pie chart. Those are all done automatically. The next steps are collaborate and share - you might have done some really cool data science and you want to share the notebook and collaboratively edit it," he said.

"It's really a testament to the open-source community. Innovation comes from anywhere and everywhere, and our response as stewards of the ecosystem is to curate the best of the innovation that's occurring and bring it in."

More on Hadoop and big data

- Couchbase ties into Hortonworks Hadoop for single analytics and transaction datastore

- Databricks CEO: Why so many firms are fired up over Apache Spark

- MySQL: Percona plugs in TokuDB storage engine for big datasets

- Cloudera links up with Hadoop developer Cask

- Mesosphere and MapR link up over Myriad to create one big data platform to rule them all

- Teradata rolls out big data apps, updates Loom

- MapR CEO talks Hadoop, IPO possibilities for 2015

- Teradata acquires archival app maker RainStor

- Hortonworks expands certification program, looks to accelerate enterprise Hadoop adoption

- Actian adds SPARQL City's graph analytics engine to its arsenal

- Splice Machine's SQL on Hadoop database goes on general release