IBM talks about progress on Watson, OpenPower

Five years ago IBM Watson came to the attention of the world thanks to Jeopardy. The real achievement of Watson, however, wasn't its victory over Ken Jennings, said Rob High Jr., an IBM Fellow and head of the Watson Group, during his keynote at the GPU Technology Conference. Rather it was Watson's ability to digest so much information and understand the questions thrown at it.

IBM then looked around to see where else those capabilities might be useful, and the first thing that came to mind was doctors. Since then it has turned out that there are many industries that could benefit from what it calls cognitive computing.

The difference between cognitive computing and artificial intelligence is a bit fuzzy. High conceded that there really isn't much difference, though he said IBM's cognitive computing is about amplifying human capabilities, rather than replacing them. But many researchers describe AI in similar terms, and perhaps the more relevant difference is that Watson is a collection of services that uses a mix of both deep learning and traditional hand-coded functions.

These cognitive systems have four properties: they learn, they support natural user interfaces, their value is their expertise (they need to be right most of the time), and they evolve over time based on new information. They also have to be able to do all of this at massive scale to handle the data deluge. IBM says that 2.5 exabytes of data, or what High said is the equivalent of 650 billion Harry Potter books, are being created every day. That's not just text, of course; much of this consists of images and especially video.
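As a rough sanity check on that comparison, the short calculation below works out the per-book size the two quoted figures imply. It assumes a decimal exabyte (10^18 bytes), which the keynote did not specify, and the variable names are purely illustrative.

```python
# Assumption: decimal exabyte (10**18 bytes); the article does not say which definition is meant.
EXABYTE = 10**18

daily_bytes = 2.5 * EXABYTE   # data created per day, as quoted by IBM
books_per_day = 650e9         # "650 billion Harry Potter books", as quoted by High

# Size each "book" would need to be for the two figures to agree.
bytes_per_book = daily_bytes / books_per_day
print(f"{bytes_per_book / 1e6:.2f} MB per book")  # prints "3.85 MB per book"
```

Under that assumption, the two figures imply a little under 4 MB per book.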

To evolve Watson, IBM started with the factoid pipeline for general domain knowledge ("Who was the first player to hit 40 home runs and steal 40 bases in a season?") and then developed a Watson Discovery for specific domains that could help find answers to questions you didn't know to ask.

One of the best-known examples was IBM's work with Memorial Sloan Kettering Cancer Center on Watson for Oncology, which ingests data from clinical notes, medical journals, textbooks and millions of pages of text, and recommends treatment options. It will reportedly be used in hospitals in India starting later this year. From there IBM shifted to a Watson Engagement Advisor, which is basically a digital concierge or customer support agent.

Most recently IBM has been focused on making Watson available as a set of services for customers that want to build their own applications with natural question-and-answer capabilities. Today it has 32 services available on the Watson Developer Cloud hosted on its Bluemix platform-as-a-service.

High said that IBM has seen enormous uptake of these Watson services from both start-ups and established organizations. Some examples include The North Face, which has used it to build a smarter recommendation engine for online shopping; Bright Mind, which aims to improve physical training for amateur and professional athletes; and Elemental Path, which has developed a toy dinosaur that can interact with kids in more natural ways. Kellogg's just launched a Bear Naked site that uses Chef Watson to help customers create better custom granola mixes.

Now IBM is working on making Watson more human. This includes a Tone Analyzer (think of this as a sort of spellchecker for tone before you send that e-mail to the boss), Emotion Analysis of text, and Personality Insights, which uses things you've written to assess your personality traits.

At the GPU Technology Conference, IBM has a large presence on the exhibit floor where among other things it is showing how Watson services can help SoftBank's Aldebaran NAO (pronounced "now") and Pepper robots communicate better with humans through natural language and gestures. NAO uses five Watson services: speech-to-text, text-to-speech, Dialog, Tradeoff Analytics (which can be used to weigh different factors when making a recommendation) and Personality Insights.

IBM's Alexa Wilson demonstrated how NAO with Watson can help find the right gift for a child using Personality Insights to identify the most trustworthy reviews. Hilton is testing a NAO robot named Connie as a concierge at a hotel in McLean, Virginia. In Japan, Nestle is using the larger Pepper robot to help customers select coffee in Nespresso boutiques.

All of this takes a lot of compute power.

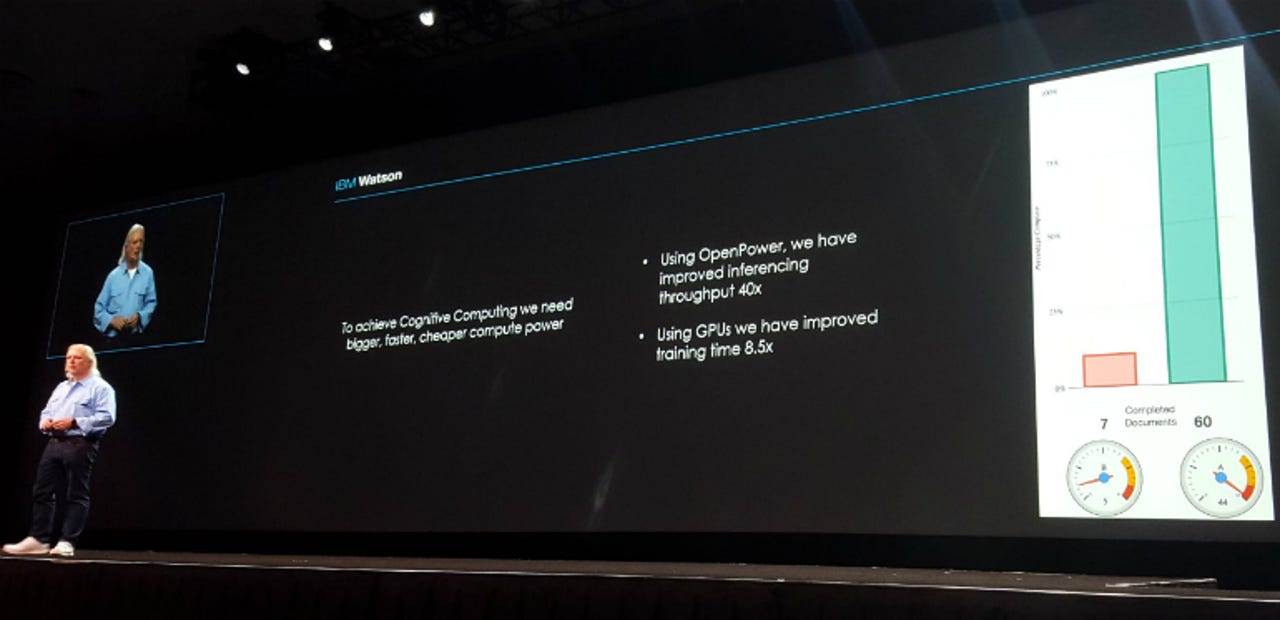

In another demonstration on the exhibit floor, IBM was showing how adding a Tesla K40 GPU to a server with two Power8 CPUs could increase the number of documents Watson Concept Insights can index concurrently from seven to 60, reducing training time by 8.5x. (This demo also used an older GPU and double-precision arithmetic, which is overkill, so the real-world boost from, say, an 8-way Tesla P100 server at half-precision should be orders of magnitude larger.) Watson can then use what it has learned to recommend similar content. In the demo, IBM uploaded a video of last year's GTC keynote, and Watson used speech-to-text and Concept Insights to recommend TED Talks on the same AI concepts. IBM said that by optimizing the OpenPower architecture, it was able to boost inferencing by 40x.
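The quoted 8.5x figure is consistent with the jump from seven to 60 concurrent documents, as the small check below suggests. It assumes training time scales inversely with the number of documents indexed at once, which is a simplification and not something IBM stated; the variable names are illustrative.

```python
# Figures quoted from IBM's exhibit-floor demo.
docs_cpu_only = 7    # documents indexed concurrently on two Power8 CPUs
docs_with_gpu = 60   # documents indexed concurrently after adding a Tesla K40

# Assumption: training time is inversely proportional to concurrent indexing
# throughput, so the throughput ratio approximates the speedup.
throughput_ratio = docs_with_gpu / docs_cpu_only
print(f"approximate speedup: {throughput_ratio:.2f}x")  # prints "approximate speedup: 8.57x"
```

The ratio comes out just above the reported 8.5x, so the two numbers appear to describe the same measurement.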

The OpenPower Consortium is holding its own summit again this year at the GPU Technology Conference, where member companies made several announcements. The most significant is a partnership between Google and Rackspace to develop a server dubbed Zaius, with two IBM Power9 CPUs and both IBM's CAPI and Nvidia's NVLink interconnects, which will be contributed to the Open Compute Project. The server will work with Google's 48-volt rack design, which Google submitted to the Open Compute Project earlier this year.

Rackspace also announced that its existing "Barreleye" Power8 server has been accepted by Open Compute. Finally, IBM said it is working with Nvidia and ODM Wistron on a high-performance computing server that uses Power8 CPUs connected to Tesla P100 GPUs using NVLink, which will be available in the fourth quarter.

While all of this should accelerate things, High argued in his keynote that cognitive computing won't be about any single hardware technology. Instead it will be about how different architectures and services can be combined to create new applications. These will learn through formal and informal training, interact with humans on our terms, provide trusted expertise, and establish new kinds of relationships between humans and machines.

High said that cognitive computing is progressing more rapidly than he had anticipated, and that it will eventually overtake transaction processing as the dominant computing workload. It won't be long, he said, before we move beyond gadgets such as phones to cognitive computing everywhere: at work, in our homes and in our cars.
