Report slams police for using "garbage" data with facial recognition tools

In the absence of any rules limiting what images US law enforcement can use with facial recognition systems to track down suspects, police are at times relying on "garbage" data, a new report says. That includes forensic sketches, low-quality surveillance camera stills, social media photos with filters, computer-generated composite images -- and even, in some cases, pictures of a suspect's celebrity doppelgänger.

"The stakes are too high in criminal investigations to rely on unreliable—or wrong—inputs," says the report from the Center on Privacy & Technology at Georgetown Law. "Unfortunately, police departments' reliance on questionable probe photos appears all too common."

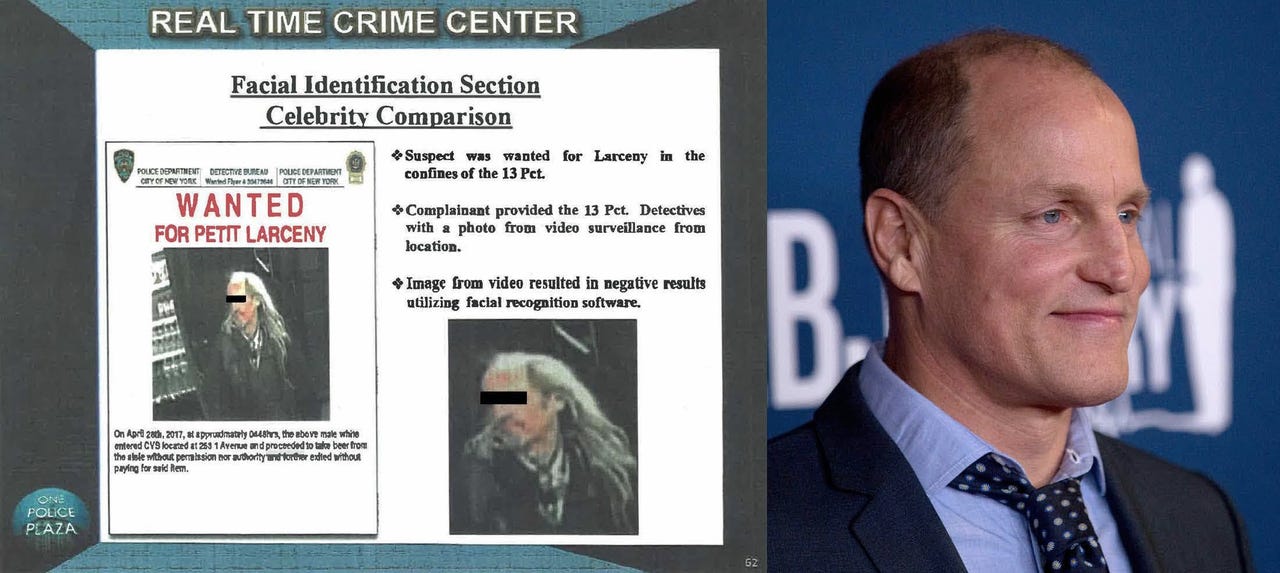

In one of the more galling examples cited by the report, the New York Police Department in 2017 ran a picture of the actor Woody Harrelson through facial recognition tools to help identify a suspected criminal. The police were trying to track down a man who stole beer from a CVS in New York City. The suspect was caught on camera, but the low-quality image of his face didn't result in any useful matches from the NYPD's facial recognition system.

After one detective noted the suspect looked like Woody Harrelson, investigators used Google Image Search to find a picture of the actor. They then ran that picture through their facial recognition system to create a list of suspects and identify a possible match. The NYPD used a suspect's celebrity lookalike -- a New York Knicks player -- in at least one other instance, according to the report.

The report comes as citizens and lawmakers grow increasingly concerned about the ways new technologies -- including facial recognition -- may imperil civil liberties. On Monday, San Francisco became the first city to ban local government agencies -- including police -- from using facial recognition.

A large portion of the scrutiny has fallen on companies selling facial recognition systems to police departments and other government agencies. Last year, for instance, the ACLU and other civil liberties groups called on Amazon to stop selling Rekognition, an image recognition and analysis service, to law enforcement agencies. Meanwhile, researchers have claimed Rekognition shows gender and ethnic biases.

The Georgetown report, however, stresses that law enforcement agencies must also be held accountable for the way they use these tools.

"As the technology behind these face recognition systems continues to improve, it is natural to assume that the investigative leads become more accurate," the report says. "Yet without rules governing what can—and cannot—be submitted as a probe photo, this is far from a guarantee. Garbage in will still lead to garbage out."

In the absence of those rules, the report concludes, "we believe that a moratorium on local, state, and federal law enforcement use of face recognition is appropriate and necessary."

It also lays out several other recommendations:

- Prohibit the use of face recognition as a positive identification under any circumstance.

- Stop using celebrity look-alike probe images.

- Stop submitting artist or composite sketches to face recognition systems not expressly designed for this purpose.

- Establish and follow minimum photo quality standards, such as pixel density and the percentage of the face that must be visible in the original photo.

- If edits to probe images are made, carefully document these edits and their results. Retain all versions of the probe image submitted to the face recognition system for production to the defense.
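
To make the recommendations above more concrete, here is a minimal Python sketch of how a department might screen probe photos before submission and keep a record of every version. It is an illustration only, not something the report prescribes: the `ProbePhoto` and `ProbeAuditLog` types, the source labels, and the threshold values are all hypothetical, and the report names the kinds of standards to set, not the numbers.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical policy values; the report does not specify any numbers.
BANNED_SOURCES = {"celebrity_lookalike", "artist_sketch", "composite_sketch"}
MIN_FACE_WIDTH_PX = 80        # assumed stand-in for a pixel density standard
MIN_VISIBLE_FRACTION = 0.75   # assumed share of the face that must be visible


@dataclass(frozen=True)
class ProbePhoto:
    source: str                    # how the image was obtained
    face_width_px: int             # width of the face region in pixels
    visible_face_fraction: float   # 0.0 to 1.0
    image_bytes: bytes             # raw image content


def rejection_reasons(photo):
    """Return every reason the photo fails the (assumed) policy."""
    reasons = []
    # Simplification: sketches are always rejected here, although the report
    # allows them on systems expressly designed for that purpose.
    if photo.source in BANNED_SOURCES:
        reasons.append("banned source: " + photo.source)
    if photo.face_width_px < MIN_FACE_WIDTH_PX:
        reasons.append("face region too small")
    if photo.visible_face_fraction < MIN_VISIBLE_FRACTION:
        reasons.append("too little of the face is visible")
    return reasons


@dataclass
class ProbeAuditLog:
    """Keeps every submitted version so it can be produced to the defense."""
    entries: list = field(default_factory=list)

    def record(self, photo, edit_note):
        # A SHA-256 digest identifies exactly which bytes were submitted.
        digest = hashlib.sha256(photo.image_bytes).hexdigest()
        self.entries.append(
            {"sha256": digest, "edit_note": edit_note, "image": photo.image_bytes}
        )
        return digest


if __name__ == "__main__":
    log = ProbeAuditLog()
    original = ProbePhoto("surveillance_still", 120, 0.9, b"original-bytes")
    if not rejection_reasons(original):
        log.record(original, "original, unedited")
    lookalike = ProbePhoto("celebrity_lookalike", 300, 1.0, b"other-bytes")
    print(rejection_reasons(lookalike))
```

Returning a list of reasons instead of a single pass/fail flag means a rejected photo carries its own explanation, which fits the report's emphasis on documenting how probe images are handled.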