'Block reasoning' technique improves computer vision

Computer scientists at Carnegie Mellon University have devised a method that enables computers to better understand an image by making assumptions about the physical constraints of the scene.

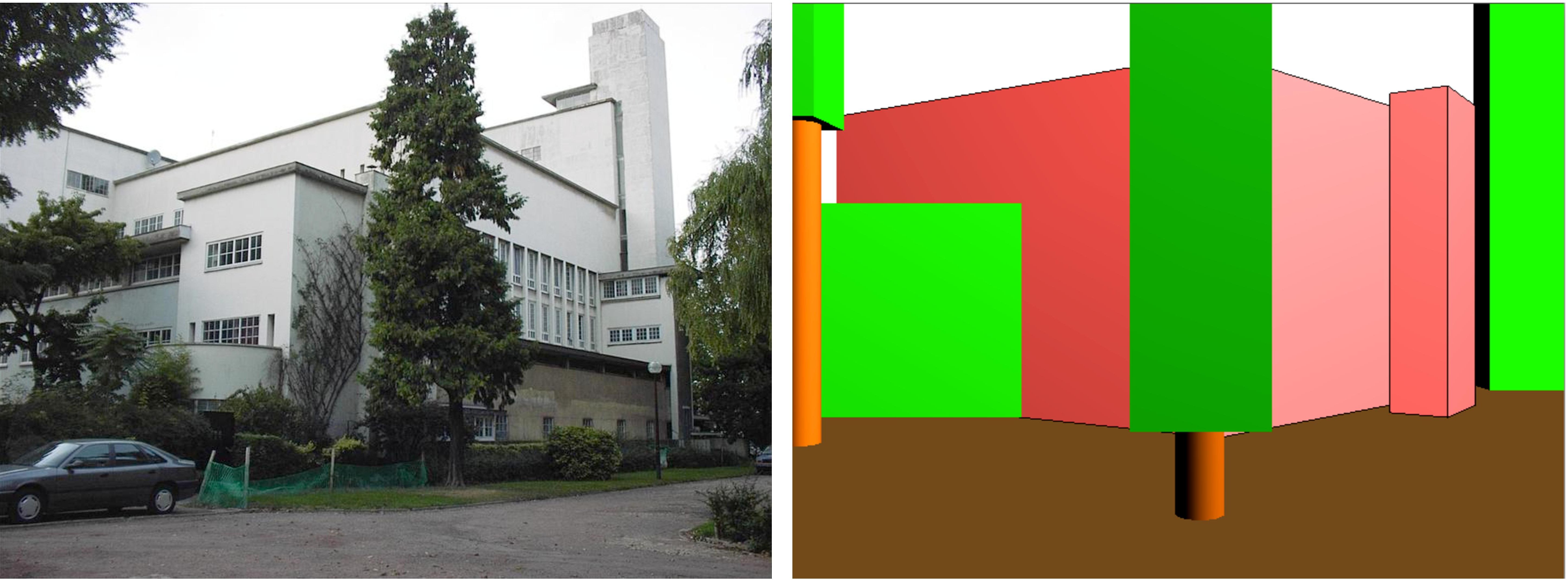

The researchers say that like a child using toy building blocks to assemble something that looks like a building, a computer could analyze an outdoor scene by using virtual blocks to build a three-dimensional approximation of the image based on parameters of volume and mass.

“When people look at a photo, they understand that the scene is geometrically constrained,” said Abhinav Gupta of CMU’s Robotics Institute. “We know that buildings aren’t infinitely thin, that most towers do not lean, and that heavy objects require support. It might not be possible to know the three-dimensional size and shape of all the objects in the photo, but we can narrow the possibilities.”

According to a university release, the new method works by first breaking down the image into segments that correspond to objects in the scene. Once the ground and sky are identified, the other segments are assigned potential geometric shapes. The shapes are categorized as light or heavy, depending on appearance. A surface that appears to be a brick wall, for instance, would be classified as heavy. The computer then tries to reconstruct the image using the virtual blocks. If a heavy block appears unsupported, the computer must substitute an appropriately shaped block, or assume that the block was obscured in the original image.
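The support constraint in that last step can be illustrated with a toy sketch. This is not the researchers' implementation: the `Block` class, the axis-aligned 2-D boxes, the `GROUND_Y` constant and the function names are all assumptions made for illustration, and the check only looks at direct contact with the ground or with another block.

```python
from dataclasses import dataclass

GROUND_Y = 0.0  # assumed height of the ground plane in this toy scene


@dataclass(frozen=True)
class Block:
    """Hypothetical 2-D stand-in for one virtual block (y grows upward)."""
    name: str
    weight: str      # "light" or "heavy", the two classes the article mentions
    x_left: float
    x_right: float
    y_bottom: float
    y_top: float


def rests_on(upper: Block, lower: Block, tol: float = 1e-6) -> bool:
    """True if upper's base touches lower's top and the two overlap horizontally."""
    touching = abs(upper.y_bottom - lower.y_top) <= tol
    overlap = min(upper.x_right, lower.x_right) - max(upper.x_left, lower.x_left)
    return touching and overlap > 0


def is_supported(block: Block, scene: list[Block], tol: float = 1e-6) -> bool:
    """A block counts as supported if it sits on the ground or on another block."""
    if abs(block.y_bottom - GROUND_Y) <= tol:
        return True
    return any(rests_on(block, other, tol) for other in scene if other is not block)


def unsupported_heavy_blocks(scene: list[Block]) -> list[str]:
    """Names of heavy blocks that violate the support constraint."""
    return [b.name for b in scene
            if b.weight == "heavy" and not is_supported(b, scene)]


scene = [
    Block("brick_wall", "heavy", 0, 4, 0, 3),      # sits on the ground
    Block("tower", "heavy", 1, 2, 3, 6),           # sits on the brick wall
    Block("floating_slab", "heavy", 6, 8, 2, 3),   # nothing underneath
    Block("tree_canopy", "light", 9, 11, 2, 4),    # light, so not checked
]
print(unsupported_heavy_blocks(scene))  # ['floating_slab']
```

In this sketch, a flagged block is one the system would have to replace with a differently shaped block or explain as resting on something hidden from view. The check does not follow support chains, so a block stacked on an unsupported block would still pass.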

Understanding outdoor scenes remains one of the great challenges of computer vision and artificial intelligence. One approach has been to identify features of a scene, such as buildings, roads and cars, but this provides no understanding of the geometry of the scene, such as the location of walkable surfaces. Another approach, pioneered in part by the same Carnegie Mellon team, has been to map the planar surfaces of an image to create a rough 3-D depiction of the scene, similar to a pop-up book. But that approach can lead to depictions that are highly unlikely and sometimes physically impossible.

The "qualitative volumetric" approach is so new, according to Gupta, that no established datasets or evaluation methodologies exist for it. "In estimating the layout of surfaces, other than sky and ground, the method is better than 70 percent accurate, and its performance is almost as good when comparing its segmentation to ground truth."

Gupta presented the research, which he conducted with Alexei Efros and Robotics Professor Martial Hebert, at the European Conference on Computer Vision, Sept. 5-11 in Crete, Greece.
