Everything announced at Nvidia's Fall GTC 2021: Omniverse Avatar, cuQuantum, Clara Holoscan and more

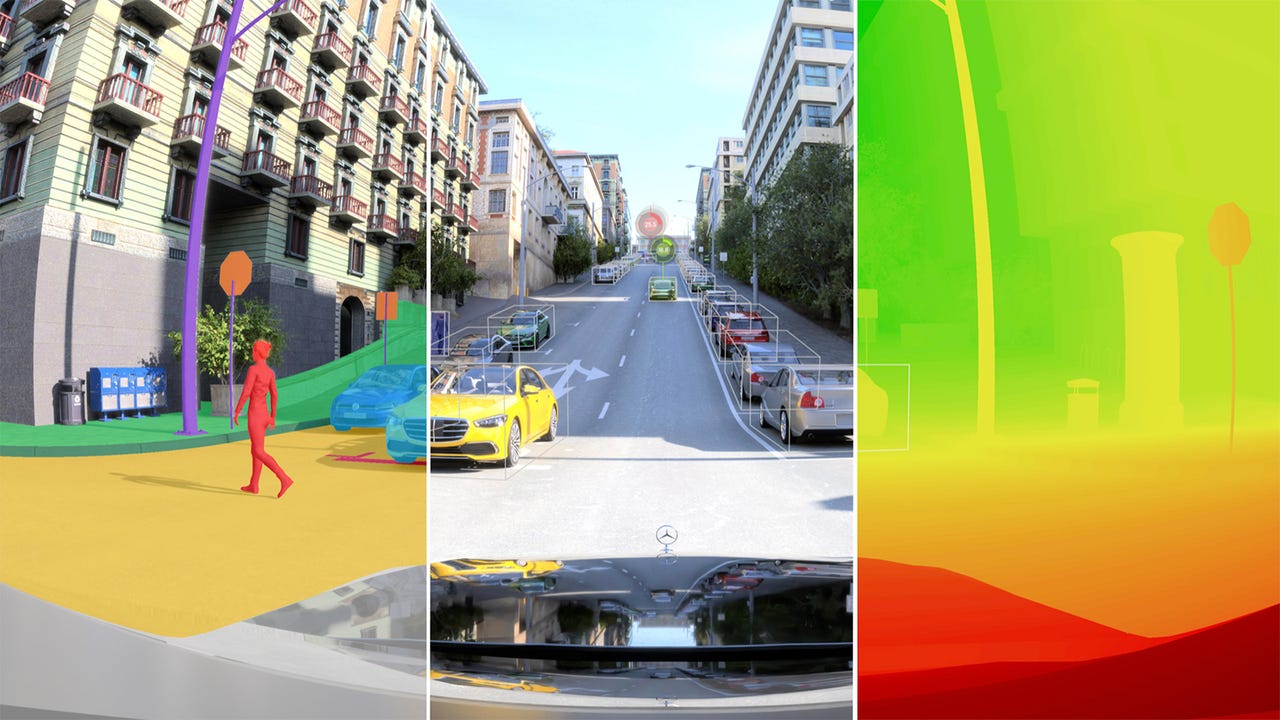

A synthetic camera image and corresponding ground-truth data showing instance segmentation generated by Nvidia DRIVE Sim powered by Omniverse Replicator.

Nvidia this week is showcasing how it plans to help enterprises jump into the virtual world, enabling life-like simulations and engaging avatars. Its plans for expanding Omniverse, its platform for extending workflows into the virtual sphere, were just part of several announcements delivered on Day One of Nvidia's fall GPU Technology Conference (GTC) 2021, where the chipmaker is laying out its plans to advance accelerated computing.

"A constant theme you'll see — how Omniverse is used to simulate digital twins of warehouses, plants and factories, of physical and biological systems, the 5G edge, robots, self-driving cars, and even avatars," Nvidia CEO Jensen Huang said in prepared remarks. Underscoring the scale of Nvidia's ambitions for Omniverse, Huang said Nvidia will build a digital twin to simulate and predict climate change, E-2, or Earth Two.

Along with Omniverse expansions, Nvidia announced a range of new Nvidia products and initiatives, spanning enterprise AI, cybersecurity and quantum computing, as well as advances in automotive and healthcare.

More on today's announcements:

Omniverse

Starting with Omniverse, Nvidia introduced Omniverse Replicator, a synthetic data-generation engine. Data is a prerequisite for building AI models, but Nvidia argues that all AI projects suffer from a lack of high-quality data. Replicator should ultimately help organizations build better digital twins -- and thus, better AI-powered tools in the real world. Nvidia is introducing two applications built with Replicator to demonstrate some of its use cases. The first, Nvidia Drive Sim, is a virtual world for hosting the digital twins of vehicles. The second, Nvidia Isaac Sim, is a virtual world for the digital twins of manipulation robots.

Next, Nvidia is taking Omniverse beyond replications of the real world with the new Omniverse Avatar platform. Avatar is a full end-to-end platform for creating embodied AIs that humans interact with. It connects Nvidia's technologies in speech AI, computer vision, natural language understanding, recommendation engines and simulation technologies. Avatars created in the platform are interactive characters with ray-traced 3D graphics. They can see and speak on a wide range of subjects and understand naturally spoken intent. The many Nvidia technologies behind Avatar include Riva, a new, large software development kit for dealing with advanced speech AI.

Beyond Replicator and Avatar, Nvidia announced a range of other updates to Omniverse, including new AR, VR and multi-GPU rendering features. There are also new integrations for infrastructure and industrial digital-twin applications with software from Bentley Systems and Esri.

HPC and Enterprise AI

In the area of high-performance computing, Nvidia announced Modulus, a framework for building physics-informed machine learning models. It's designed for building AI that can perform physics simulations at very large scales. The models are fed data that comes from the real world as well as data generated by employing simulations on digital twins. Modulus is designed to take full advantage of Nvidia computing architectures on large-scale supercomputers. It should serve a wide range of fields that could benefit from physics-driven digital twin capabilities, such as protein engineering and climate science.

Nvidia Modulus turbocharges applications such as multi-physics digital twin analysis for a heat recovery steam generator.

Nvidia also announced a range of SDKs, including cuNumeric -- a new library to accelerate NumPy for scientists, data scientists and machine learning researchers in the Python community. It implements the NumPy API and scales automatically to multi-GPU and multi-node systems without any code changes. The new ReOpt library for the logistics industry introduces advanced, massively parallel algorithms that optimize vehicle routes, warehouse selection and fleet mix.
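In practice, the "no code changes" pitch comes down to swapping a single import. The sketch below is illustrative only: it assumes the library is importable as `cunumeric` and falls back to plain NumPy when it is not installed, and the small Jacobi solver and its input matrix are made-up examples rather than anything from Nvidia's announcement.

```python
# Assumption: cuNumeric is importable as `cunumeric`. If it is not installed,
# fall back to NumPy so the same code still runs on an ordinary CPU.
try:
    import cunumeric as np
except ImportError:
    import numpy as np


def jacobi_solve(a, b, iterations=50):
    """Solve a @ x = b by Jacobi iteration (a must be diagonally dominant)."""
    d = np.diag(a)          # diagonal entries of a, as a 1-D array
    r = a - np.diag(d)      # off-diagonal remainder of a
    x = np.zeros(b.shape)
    for _ in range(iterations):
        x = (b - r @ x) / d
    return x


# Hypothetical 2x2 system: 4x + y = 9 and 2x + 5y = 12.
a = np.array([[4.0, 1.0], [2.0, 5.0]])
b = np.array([9.0, 12.0])
x = jacobi_solve(a, b)
print(x)
```

Everything below the import is ordinary NumPy-style code, which is the point of the design: the array program stays the same and only the backend changes.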

Next, the company announced updates to Triton, its AI inference platform. Triton is now being used by more than 25,000 customers, including Capital One, Microsoft, Samsung Medison, Siemens Energy and Snap. Key updates to the Triton Inference Server software include new integration with Amazon SageMaker, support for Arm CPUs and multi-GPU multi-node functionality.

Meanwhile, the new NeMo Megatron framework is meant to help enterprises overcome the challenges of training sophisticated natural language processing models, including language models with trillions of parameters. It is optimized to scale out across the large-scale accelerated computing infrastructure of Nvidia's DGX SuperPOD. Megatron 530B, which can be trained for new domains and languages, is the world's largest customizable language model available today, according to Nvidia.

Nvidia announced the next generation of its InfiniBand networking platform, called Quantum-2, for cloud computing providers and supercomputing centers. The 400Gbps InfiniBand networking platform consists of the Quantum-2 switch, the ConnectX-7 network adapter, the BlueField-3 data processing unit (DPU), and the software supporting the new architecture.

Quantum

The cuQuantum SDK speeds up simulations of quantum computers on classical systems. The first library from cuQuantum is currently in public beta, available to download. Called cuStateVec, it's an accelerator for the state vector simulation method. That approach tracks the full state of the system in memory and can scale to tens of qubits. A second library coming in December, cuTensorNet, is an accelerator using the tensor network method. It can handle up to thousands of qubits on some promising near-term algorithms.
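Why the state vector method tops out at tens of qubits is easy to see with a little arithmetic: an n-qubit state has 2^n complex amplitudes, and all of them must sit in memory. The rough sketch below is plain Python, not cuStateVec code. It assumes 16 bytes per amplitude (double-precision complex), and the function names are invented for illustration.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one double-precision complex number


def state_vector_bytes(num_qubits):
    """Memory needed to hold all 2**n amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a full state vector."""
    h = 2 ** -0.5
    stride = 1 << target
    new_state = list(state)
    for i in range(len(state)):
        if (i & stride) == 0:      # i has the target bit clear
            j = i | stride         # j is the partner index with the bit set
            a0, a1 = state[i], state[j]
            new_state[i] = h * (a0 + a1)
            new_state[j] = h * (a0 - a1)
    return new_state


# Two qubits starting in |00>; a Hadamard on each gives a uniform superposition.
state = [1 + 0j, 0j, 0j, 0j]
for qubit in range(2):
    state = apply_hadamard(state, qubit)
print(state)

# Memory doubles with every added qubit.
for n in (10, 30, 40):
    print(n, "qubits:", state_vector_bytes(n) / 2 ** 30, "GiB")
```

Under that assumption, 30 qubits need 16 GiB and 40 qubits need 16 TiB, which is why simulators of this kind lean on large GPU memory and multi-GPU systems.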

Nvidia has integrated cuStateVec into qsim, Google Quantum AI's state vector simulator, which can be used through Cirq, an open-source framework for programming quantum computers. In December, cuStateVec will be ready for use with Qiskit Aer, a high-performance simulator framework for quantum circuits from IBM.

Additionally, national labs including Oak Ridge, Argonne, Lawrence Berkeley National Laboratory and Pacific Northwest National Laboratory; university research groups at Caltech, Oxford and MIT; and companies including IonQ are all integrating cuQuantum into their workflows. Nvidia also said it created the largest-ever simulation of a quantum algorithm for solving the MaxCut problem using cuQuantum. MaxCut algorithms are used to design large computer networks, find the optimal layout of chips with billions of silicon pathways and explore the field of statistical physics.
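For readers unfamiliar with MaxCut: the goal is to split a graph's nodes into two groups so that as many edges as possible run between the groups. The sketch below is a classical brute-force search over a made-up five-node graph, shown only to make the problem concrete. It is not the quantum algorithm Nvidia simulated, and it stops being practical quickly because the number of splits doubles with every node.

```python
from itertools import product


def max_cut(num_nodes, edges):
    """Brute-force MaxCut: try every two-way split and count crossing edges."""
    best_size, best_sides = -1, None
    for sides in product((0, 1), repeat=num_nodes):
        size = sum(1 for u, v in edges if sides[u] != sides[v])
        if size > best_size:
            best_size, best_sides = size, sides
    return best_size, best_sides


# Hypothetical example graph: a ring 0-1-2-3-4-0 plus one chord between 0 and 2.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]
cut_size, sides = max_cut(5, edges)
print(cut_size, sides)
```

Five nodes mean only 32 splits to check, but a network with a few hundred nodes is already far out of reach for this approach, which is part of why the problem is a popular benchmark for quantum algorithms.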

Healthcare

Hospitals are using Nvidia's new GPU-powered technologies like DGX systems to push forward AI-accelerated oncology projects and research. Teams at the University of Texas MD Anderson Cancer Center are working on convolutional neural networks that help them figure out which cases are most likely to develop into malignant cancer so clinicians can better support patients at risk.

The hospital is deploying GPU technologies for a variety of projects that include AI modeling for brachytherapy treatment planning and treatment quality assessments, according to Dr. Jeremiah Sanders, a medical imaging physics fellow at MD Anderson. Sanders and another doctor are also working on an AI application that analyzes MRI studies of the prostate to determine the quality of the radiation delivery.

Dr. Kristy Brock, professor of Imaging Physics and Radiation Physics at MD Anderson, said she is using Nvidia tech in her study of anomaly detection that helps determine the cases where an AI model that contours liver tumors from CT scans fails.

The Nvidia Clara Discovery project -- a collection of state-of-the-art frameworks, applications and pre-trained models built to unlock insights about how billions of potential drug molecules interact inside our bodies -- features a number of machine learning tools and software platforms that deploy Nvidia technology, like DGX A100 Tensor Core GPUs.

St. Jude Children's Research Hospital, the German Cancer Research Center and Memorial Sloan Kettering Cancer Center are all using AI platforms backed by Nvidia tech to further their research into debilitating illnesses.

Companies like Johnson & Johnson are using Nvidia tech in medical robotics systems and imaging machines to speed up consultations and reduce wait times. The Nvidia Clara Holoscan medical device AI computing platform will be available on November 15.

Automotive

The Nvidia Drive end-to-end AV platform will allow autonomous vehicle companies to test their vehicles, conduct mapping and training exercises, simulate routes and test cars out on the road.

Drive Hyperion 8, scheduled to be included in vehicles by 2024, is powered by dual Drive Orin SoCs and comes with a qualified sensor suite that has 12 cameras, 9 radars, 1 lidar and 12 ultrasonic sensors.

Security

Nvidia announced the creation of a zero-trust cybersecurity platform that combines three technologies -- Nvidia BlueField DPUs, Nvidia DOCA and the Nvidia Morpheus cybersecurity AI framework.

Nvidia DOCA 1.2 is available in early access to developers building applications for Nvidia BlueField DPUs.

The zero-trust platform is built to provide a greater level of security for companies running data centers. It isolates applications from the infrastructure and provides tougher firewalls while offering the "power of accelerated computing and deep learning to monitor and detect threats continuously -- at speeds up to 600x faster than servers without Nvidia acceleration."

Juniper Networks and Palo Alto Networks are the first cybersecurity companies to use both BlueField and DOCA in their work. DOCA 1.2 will be widely available on November 30, while Morpheus is available now.

According to Nvidia, BlueField "offloads the CPU burden of running security software and empowers developers using the new cybersecurity capabilities of Nvidia DOCA 1.2 to build metered cloud services that control resource access, validate each application and user, isolate potentially compromised machines and help protect data from breaches and theft."

DOCA works hand in hand with the deep learning cybersecurity framework Morpheus to serve as the foundational software that powers the Nvidia BlueField DPU.