Facebook says AI has a ways to go to detect nasty memes

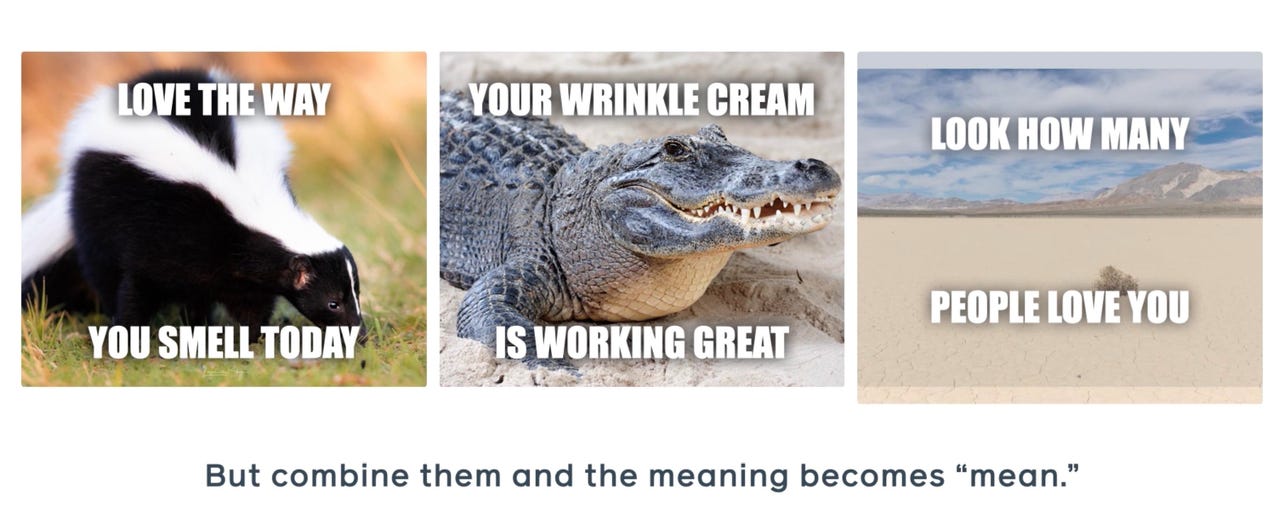

Facebook contends the problem of "hateful" memes is a problem of computing the interstices between innocuous phrases and innocuous images that when combined have a certain derogatory effect. The company illustrates the matter with artificial examples to illustrate the nature of the problem, without republishing actual memes found in the wild.

Mean memes, combinations of words and images that denigrate people based on qualities such as religion or ethnicity, pose an interesting challenge for machine learning programs — and will for some time, according to Facebook. New research by the social media giant shows deep learning forms of artificial intelligence fall far short of humans in the ability to "detect" hurtful memes.

A research paper disseminated by Facebook on Tuesday, titled "The Hateful Memes Challenge: Detecting Hate Speech in Multimodal Memes," compiles a data set of 10,000 examples of mean memes found in the wild, including on Facebook, and compares how various state-of-the-art deep learning models do compared to human annotators.

"There is much room for improvement," is the main conclusion of authors Douwe Kiela, Hamed Firooz, Aravind Mohan, Vedanuj Goswami, Amanpreet Singh, Pratik Ringshia, and Davide Testuggine, all with Facebook AI Research. A companion blog post by the authors gives an overview of the research. A broader blog post by multiple Facebook researchers explores the general topic of AI for hate speech.

The reason this is an interesting problem with machine learning is because it is an example of what the authors term "multi-modal" learning. In that venue, scientists combine kinds of machine learning programs that can manipulate more than one type of signal, in this case text and image processing.

As the authors put it, a picture of a skunk in itself is harmless. The text, "Love the way you smell today" is in itself harmless. "But combine them and the meaning becomes 'mean'." Hence, a computer program must not compute a function of what is the intersection of text and image that is a slur or other kind of hateful speech.

Also: Will AI ever 'understand' satire?

The test is fairly straightforward. The authors gathered one million samples of memes from various places, including Facebook. They eliminated any that were in violation of Facebook's terms of service, such as those that were deemed pornographic. That left 162,000 memes. They then re-created those memes by copying the text onto a new image, sourced through partnership with Getty Images, the stock photography licensing firm. This was to eliminate idiosyncrasies in the way the original meme was created that could skew the results of the test one way or another.

They then had human reviewers judge whether the memes were "hateful," and once multiple people came to agreement on the matter, a subset of 10,000 memes in total were retained as a training and test data set for machine learning. They also included "confounders," memes that would have the opposite effect to the meme. So, something that takes an originally mean-spirited meme and turns it into something appreciative or complementary. This, the authors write, was to upset whatever "biases" might be in the machine learning system that would allow it to easily gauge the mean quality of memes.

The paper does not cite actual examples of the memes, deeming it inappropriate to repurpose the content. Those wishing to see the memes will have to download the database. Instead, the illustrations are suggestive replicas made by the authors, such as the memes shown in the graphic in this article — a skunk image with the text "love the way you smell today."

A human "expert" reviewer was then separately challenged to guess what the human reviewers before them had said about the hatefulness of each meme, which served as the human baseline for the test. The various machine learning computer programs had to do the same, compute a hatefulness rating.

Also: Facebook's latest giant language AI hits computing wall at 500 Nvidia GPUs

Humans had an average accuracy score of 84.7 out of a possible 100 in guessing the hatefulness of the memes, while the best deep learning model achieved only 64.73.

The meme dataset is being made available publicly available as part of the meme Challenge, sort of in the spirit of the ImageNet data set that led to such progress in image recognition years ago. In partnership with DrivenData, a firm that hosts online challenges, Facebook is offering a total pool of awards of $100,000 for challenge contestants, with a $50,000 first prize.

The Challenge will be among the challenges at this year's NeurIPS AI conference, Facebook said. Data on entry dates and such are available from DataDriven's Web site. That competition will test competitors with a test data set of examples of memes never seen prior to the competition.

The best-scoring models in their test were ViLBERT, introduced last year by Jiasen Lu of Georgia Institute of Technology and colleagues, a model of combining vision and language processing techniques; and Visual BERT, also introduced last year, by Liunian Harold Li of UCLA and colleagues.

Results of the different deep learning models in comparison to a person in calculating the "hateful" rating of a meme. The best-performing models, ViLBERT and Visual BERT, have been developed to "fuse" text and image processing.

As you can see, these models derive from the Google "BERT" system, in turn based on the "Transformer" approach to language modeling. In the tests, Kiela and colleagues at Facebook found that these visuo-lingual conglomerations were better than models that only looked at the text of a meme, in terms of deriving the hatefulness probability of a meme. A BERT model, for example, only scored 59.2 compared to the VisualBERT's 64.73.

ZDNet asked several questions of the authors in email. One question was who the human annotators are that are mentioned as taking on the task of constructing the meme data set and then providing baseline human scores. Facebook declined to comment.

A second question was what the scale of the problem is of hate speech at Facebook. Given that the premise of the work is to enlist AI to clean up hateful utterances on social media, it's important to know things such as how much hate speech is removed on a regular basis, or perhaps a tally to date. Facebook declined to comment.

The lead author, Kiela, did answer several important technical questions put by ZDNet. One was, what is missing in these cutting-edge models such as Visual BERT that would close the gap.

Also: Please, Facebook, give these chatbots a subtext!

"If we knew what is missing, it would be easy to fix and the gap between AI and humans would be lower," replied Kiela in an email to ZDNet. "Generally speaking, we need to work on improving multimodal understanding and reasoning," Kiela continued.

"We know that benchmarks in AI play an important role in driving progress in the field. The paper is trying to push for more work in that research direction, as well as provide a concrete way for measuring any progress we make."

ZDNet asked how much progress Facebook is seeing with hate speech already using existing models. Kiela replied that the models Facebook uses that are closest to the text-only BERT are its own recent inventions, RoBERTa and XLM-R, both very large natural language processing programs. They are still "unimodal models," Kiela emphasized to ZDNet, so they only handle text not images, "and so there will still be a gap between their performance on multimodal models."

ZDNet asked about the data set, which began with one million example memes and was winnowed down to 10,000 examples for the finished data set. Why these amounts? ZDNet asked? Certainly, they seem a bit arbitrary, and the final size of the data set, 10,000, seems small.

"The story of how we went from many images to a dataset of 10k is meant to illustrate how much care went into designing this dataset," Kiela told ZDNet in email. "In AI, especially unimodal AI, we frequently have much better datasets, so we felt we had to explain to the AI community why this dataset was comparatively smaller," Kiela added.

"It is because we used well-trained annotators, were careful to ensure others could use the dataset for research purposes, and did a lot of filtering to ensure high quality."

Facebook schematic of how multiple signal types can be combined for multi-modal machine learning.

Lastly, since this research emphasizes the increasing importance of "multi-modal" approaches in deep learning, ZDNet asked which models today best exemplify the direction of future research in this regard. Kiela directed ZDNet to ViLBERT, in which Facebook's Dhruv Batra and Devi Parikh had a hand; and "multimodal bitransformers," developed by Kiela and colleagues and introduced last year.

Example code for ViLBERT and other multi-modal AI programs can be found at Facebook AI's "MMF" site, with various things built in PyTorch.

Where things go from here depends on whether scientists find Facebook's Challenge worthwhile and whether the data set proves sufficient to lead to benchmark improvement with the development of new approaches.

Facebook makes the point that automation is needed because the curation by humans that built the data set cannot in itself solve the overarching hate-speech problem.

As Kiela and colleagues put it, "Malicious content cannot be tackled by having humans inspect every data point."