Meta's latest AI model will make content available in hundreds of languages

Meta has open-sourced an AI model that can translate across 200 different languages, the company announced Wednesday. The move should make a range of technologies and digital content available to a much wider audience. The model, called No Language Left Behind (NLLB-200), delivers high-quality translations across those 200 languages, including 55 African languages.


"A handful of languages — including English, Mandarin, Spanish and Arabic — dominate the web," the company noted in a blog post. "Native speakers of these very widely spoken languages may take for granted how meaningful it is to read something in your own mother tongue. NLLB will help more people read things in their preferred language, rather than always requiring an intermediary language that often gets the sentiment or content wrong."

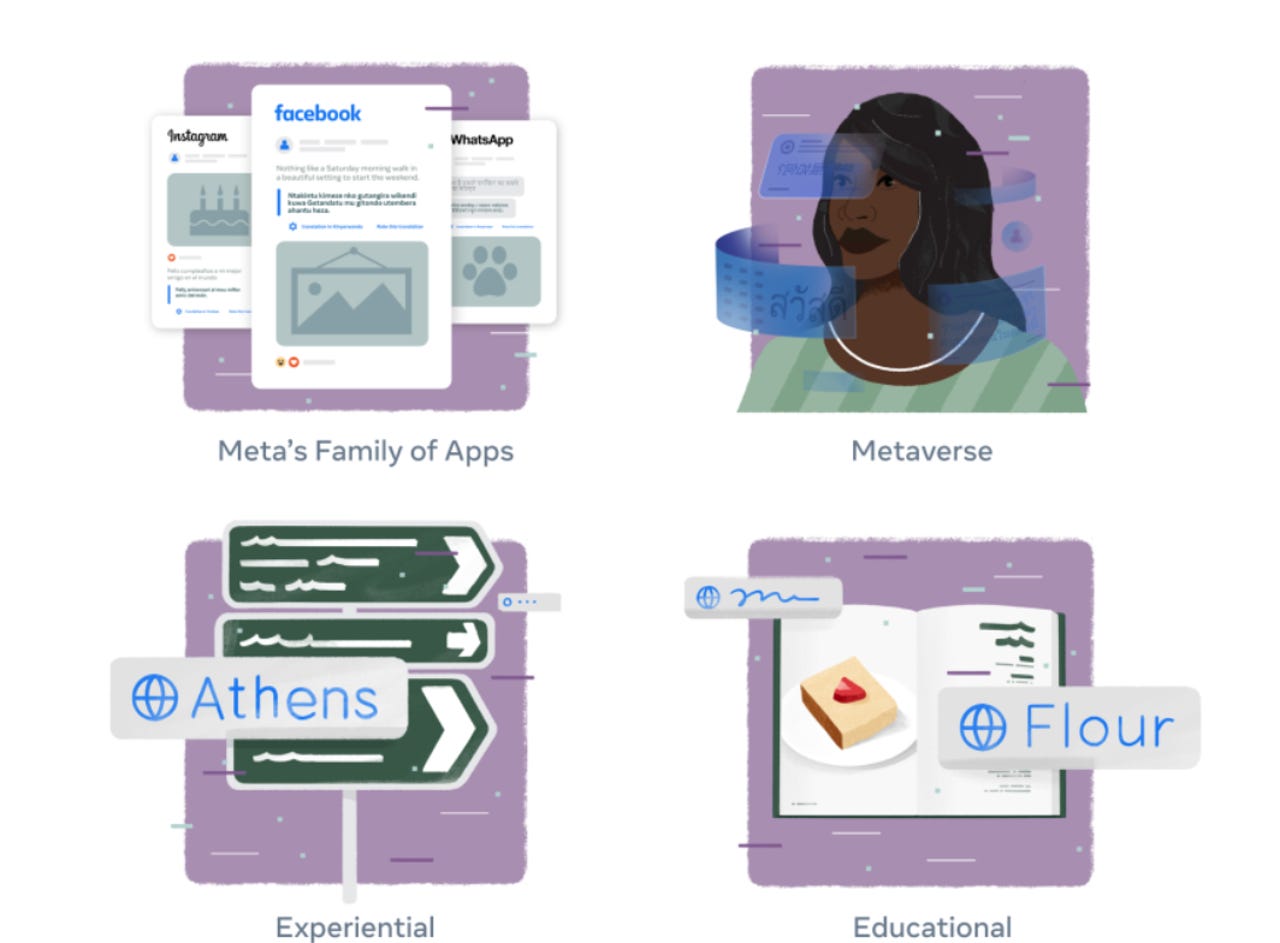

Meta is of course using NLLB to improve its own products, but by open-sourcing the model, it lets technologists build other tools, such as an AI assistant that works well in languages like Javanese and Uzbek, or Swahili and Oromo closed captions for Bollywood movies.


NLLB nearly doubles the number of languages covered by a single, state-of-the-art AI model. Meta says that many of these languages, such as Kamba and Lao, were not supported well or at all by existing translation tools. Fewer than 25 African languages are currently supported by widely used translation tools.

The model also improves translation quality by an average of 44% compared with prior AI research. For some African and Indian languages, NLLB-200's translations were more than 70% more accurate. To measure translation quality, Meta used both automated metrics and human evaluations.

To evaluate translation quality, Meta researchers built FLORES-200, a dataset for measuring NLLB-200's performance across 40,000 different language directions.
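Meta does not spell out how the 40,000 figure is derived. One plausible reading, assumed here, is that each "direction" is an ordered source-to-target pair, so translating Swahili into Lao counts separately from Lao into Swahili. Under that assumption, 200 languages give 200 × 199 = 39,800 directions, which rounds to roughly 40,000. The short Python sketch checks that arithmetic both with the closed form n(n - 1) and by explicit enumeration; the language labels are hypothetical placeholders, not real FLORES-200 codes.

```python
from itertools import permutations


def count_directions(num_languages: int) -> int:
    """Count ordered (source, target) pairs where source != target.

    Assumes a "language direction" means one such ordered pair.
    """
    return num_languages * (num_languages - 1)


# Hypothetical placeholder labels standing in for 200 languages.
languages = [f"lang_{i}" for i in range(200)]

# Every ordered pair of two distinct languages is one direction.
directions = list(permutations(languages, 2))

print(len(directions))        # 39800
print(count_directions(200))  # 39800
```

Both counts agree at 39,800; the "ordered pair" interpretation is an assumption rather than Meta's stated definition.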

Alongside the open-source NLLB-200 models, Meta is making the FLORES-200 dataset available to developers, together with model-training code and code for recreating the training dataset.

The company is also awarding up to $200,000 in grants to researchers and nonprofit organizations that make impactful use of NLLB-200 in initiatives focused on sustainability, food security, gender-based violence, education, or other areas that support the UN Sustainable Development Goals.

Across its own products, Meta expects the model to support more than 25 billion translations each day. In addition to translating content and serving up better ads, the model will be used to spot harmful content and misinformation.

Meta's NLLB research is also being applied to the translation systems used by Wikipedia editors, through a partnership with the Wikimedia Foundation, the nonprofit organization that hosts Wikipedia and other free knowledge projects. Wikipedia exists in more than 300 languages, but most editions have far fewer articles than the more than six million available in English.

Through the Wikimedia Foundation's Content Translation Tool, editors can use the technology behind NLLB-200 to translate articles in more than 20 low-resource languages (languages without extensive datasets for training AI systems).