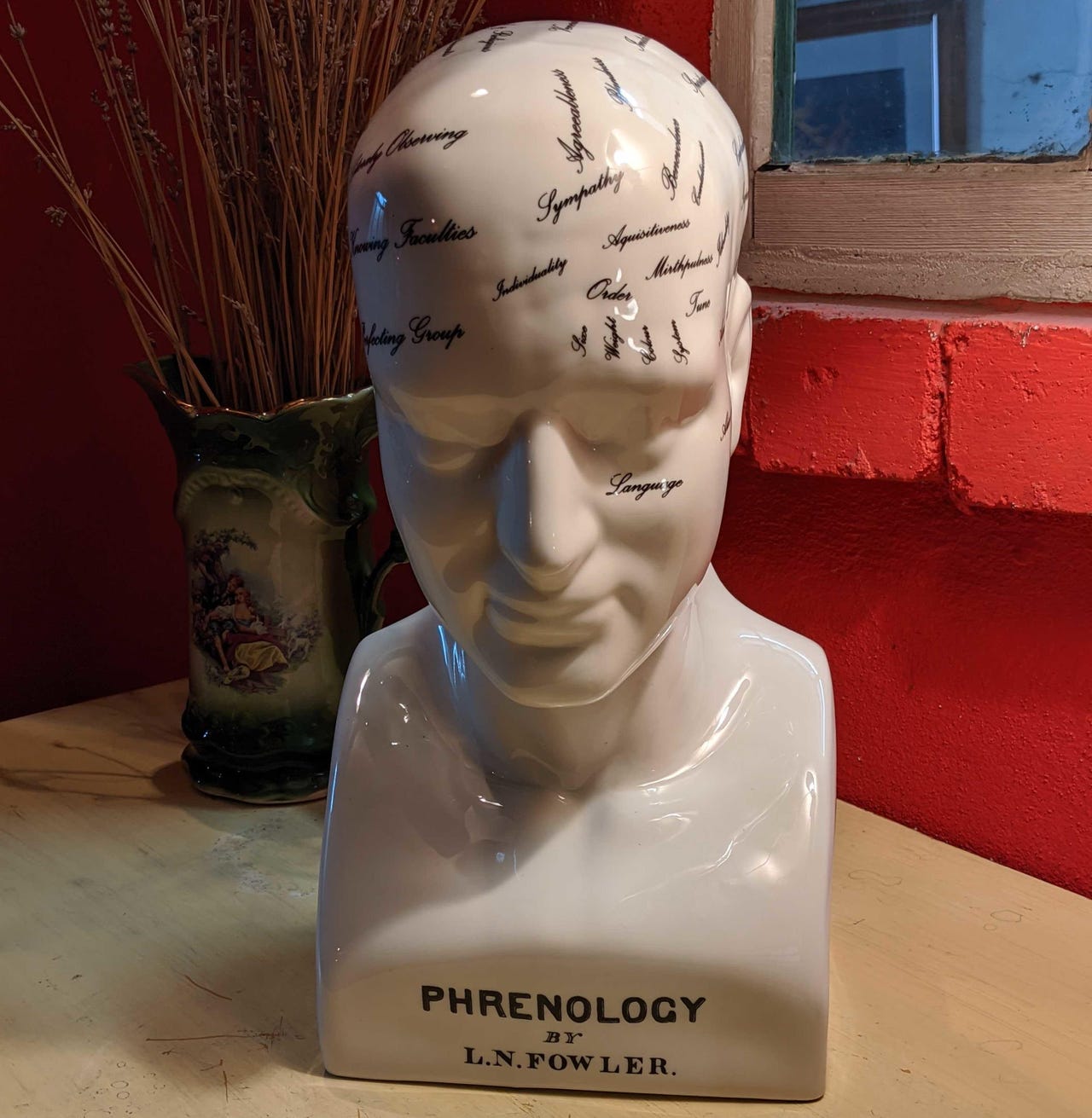

Predictive policing is just racist 21st century cyberphrenology

In 1836, the Scottish geologist, chemist, and "agricultural improver" Sir George Stewart Mackenzie was concerned about what he called the "recent atrocities" of violent crime in the British penal colony of New South Wales, Australia.

The root cause, he thought, was a failure to manage which criminals were transported to work in the colony -- especially the two-thirds of convicts who worked for private masters.

"At present they are shipped off, and distributed to the settlers, without the least regard to their characters or history," Mackenzie wrote in a representation [PDF] to Britain's Secretary for the Colonies, Lord Glenelg.

For Mackenzie it was a moral question. It was about rehabilitating a criminal regardless of "whether the individual have [sic] spent previous life in crime, or has been driven by hard necessity unwillingly to commit it".

Only convicts with the correct moral character should be sent to the colonies, to be brought back to "a course of industrious and honest habits", he wrote.

The rest could just rot in British prisons.

So how did Mackenzie propose to identify these convicts with the correct moral character? By measuring the shape of their heads.

"In the hands of enlightened governors, Phrenology will be an engine of unlimited improving power in perfecting human institutions, and bringing about universal good order, peace, prosperity, and happiness," he wrote.

Yes, in 1836, phrenology was promoted as a cutting-edge science that could predict, among many other things, a person's likelihood of criminality. Now, of course, we know that it's complete rubbish.

Here in the 21st century, predictive policing, or algorithmic policing, makes similarly bold claims about its ability to spot career criminals before they commit their crimes.

How predictive policing can entrench racist law enforcement

At its core, predictive policing is simply about using the magic of big data to predict when, where, and by whom crime is likely to be committed.

The payoff is meant to be a more efficient allocation of police resources, and less crime overall.

Increasingly, it's also about ubiquitous facial recognition technology.

An important player here is the secretive company Clearview AI, a controversy magnet with far-right political links.

Clearview's tools have already been used by the Australian Federal Police and police forces in Queensland, Victoria, and South Australia, though it took journalists' investigations and a massive data breach to find that out.

The Royal Canadian Mounted Police even denied using Clearview's technology three months after they'd signed the contract.

The potential payoff to all this isn't just identifying and prosecuting criminals more efficiently after the fact.

Increasingly, it's also the idea that individuals who have been predicted to be potential criminals, or whose behaviour matches some predicted pattern for criminal behaviour, can be identified and tracked.

At one level, predictive policing simply provides some science-ish rigour to the work of the cops' own in-house intelligence teams.

"Looking at crimes like burglary, one can create quite a useful predictive model because some areas have higher rates of burglary than others and there are patterns," said Professor Lyria Bennett Moses, director of the Allens Hub for Technology, Law and Innovation at the University of New South Wales, last year.

Cops also know, for example, that drunken violence is more likely in hot weather. An algorithm could help them predict just when and where it's likely to kick off based on past experience.

According to Roderick Graham, an associate professor of sociology at Old Dominion University in Virginia, there are more innovative ways of using data.

Suppose the cops are trying to identify the local gang leaders. They've arrested or surveilled several gang members, and through "either interrogation, social media accounts, or personal observation", they now have a list of their friends, family, and associates.

"If they see that a person is connected to many gang members, this gives police a clue that they are important and maybe a leader," Graham wrote.

"Police have always done this. But now with computer analyses, they can build more precise, statistically sound social network models."
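As a rough illustration of the kind of analysis Graham describes, here is a minimal Python sketch that counts how many known gang members each person is linked to. It's a toy, not any police force's actual method: the function name `rank_by_gang_links`, the names, and the association list are all made up for this example, and real "social network models" would be considerably more elaborate.

```python
def rank_by_gang_links(associations, known_members):
    """Rank people by how many distinct known members they are linked to.

    associations: iterable of (person_a, person_b) pairs (hypothetical data,
        standing in for links gathered by interrogation, social media, or
        observation).
    known_members: set of people already identified as gang members.
    """
    links = {}
    for a, b in associations:
        if a == b:
            continue  # ignore self-links
        if a in known_members:
            links.setdefault(b, set()).add(a)
        if b in known_members:
            links.setdefault(a, set()).add(b)
    # Most-connected first; ties broken alphabetically for a stable order.
    return sorted(
        ((person, len(members)) for person, members in links.items()),
        key=lambda item: (-item[1], item[0]),
    )


# Invented example data: three known members and their observed associates.
known = {"Ana", "Ben", "Cy"}
observed = [("Ana", "Lee"), ("Ben", "Lee"), ("Cy", "Lee"),
            ("Ana", "Max"), ("Max", "Dee")]

ranking = rank_by_gang_links(observed, known)
print(ranking)
```

In this invented data, "Lee" is linked to all three known members and so tops the list. Note that the count only reflects the links that were observed and recorded in the first place.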

But this is where it all starts to get wobbly.

As American researchers William Isaac and Andi Dixon pointed out in 2017, while police data is often described as representing "crime", that's not quite what's going on.

"Crime itself is a largely hidden social phenomenon that happens anywhere a person violates a law. What are called 'crime data' usually tabulate specific events that aren't necessarily lawbreaking -- like a 911 call -- or that are influenced by existing police priorities," they wrote.

"Neighbourhoods with lots of police calls aren't necessarily the same places the most crime is happening. They are, rather, where the most police attention is -- though where that attention focuses can often be biased by gender and racial factors."

Or as Graham puts it: "Because racist police practices overpoliced black and brown neighbourhoods in the past, this appears to mean these are high crime areas, and even more police are placed there."

Bennett Moses gave a distinctly Australian example.

"If you go to police databases in Australia and look at offensive language crimes, it looks like it is only Indigenous people who swear because there isn't anyone else who gets charged for it," she wrote.

"So you have a bias there to start within the data, and any predictive system is going to be based on historical data, and then that feeds back into the system."
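The feedback loop Bennett Moses describes can be sketched as a toy simulation. This is a deliberately simplified model under invented assumptions, not a description of any real system: two areas have exactly the same underlying rate of offending, patrols are allocated in proportion to past recorded incidents, and new records scale with patrol presence. The function name `simulate_feedback` and every number here are hypothetical.

```python
def simulate_feedback(initial_records, true_rate, rounds, patrols=100):
    """Toy model: patrols follow past records, and records follow patrols.

    initial_records: historical recorded incidents per area (assumed skewed).
    true_rate: the underlying offending rate, identical in every area.
    Returns the share of patrols each area received in each round.
    """
    records = list(initial_records)
    share_history = []
    for _ in range(rounds):
        total = sum(records)
        shares = [r / total for r in records]
        share_history.append(shares)
        # What gets recorded depends on where the patrols are,
        # not on any real difference between the areas.
        new_records = [true_rate * patrols * s for s in shares]
        records = [r + n for r, n in zip(records, new_records)]
    return share_history


# Same true rate in both areas, but a skewed 60/40 historical record.
skewed = simulate_feedback([60, 40], true_rate=0.5, rounds=10)
# Control run: an even historical record.
even = simulate_feedback([50, 50], true_rate=0.5, rounds=10)

print(skewed[0], skewed[-1])
print(even[0], even[-1])
```

Even in this gentle version, the area that started with 60 per cent of the records is still getting about 60 per cent of the patrols in the final round, despite identical underlying behaviour. The data never corrects the initial skew, because the data is itself produced by the patrol allocation.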

Cops don't want to talk about predictive policing

In 2017, NSW Police's Suspect Targeting Management Plan (STMP) singled out children as young as 10 for stop-and-search and move-on directions whenever police encountered them.

The cops haven't really explained how or why that happens.

According to the Youth Justice Coalition (YJC) at the time, however, the data they've managed to obtain shows that STMP "disproportionately targets young people, particularly Aboriginal and Torres Strait Islander people".

According to an evaluation of STMP in 2020 by the respected NSW Bureau of Crime Statistics and Research, "STMP continues to be one of the key elements of the NSW Police Force's strategy to reduce crime".

The roughly 10,100 individuals subject to STMP-II since 2005, and the more than 1,020 subjected to an equivalent system for domestic violence cases (DV-STMP), were "predominately male and (disproportionately) Aboriginal", they wrote.

Yet when compared with non-Aboriginal people, the Aboriginal cohort in the sample saw a "smaller crime reduction benefit".

Victoria Police has thrown a veil of secrecy over its own predictive policing tool. It hasn't even released the tool's name.

The trial of this system only became public knowledge in 2020 when Monash University associate professor of criminology Leanne Weber published her report on community policing in Greater Dandenong and Casey.

In interviews with young people of South Sudanese and Pasifika background, she heard how, at least in your correspondent's view, racism is being built into the data from the very start.

"Many experiences reported by community participants that appeared to be related to risk-based policing were found to damage feelings of acceptance and secure belonging," she wrote.

"This included being prevented from gathering in groups, being stopped and questioned without reason, and being closely monitored on the basis of past offending."

One participant seemed to nail what was going on: "The police don't give a reason why they are accusing them. It's so that the police can check and put it in their system."

Victoria Police told Guardian Australia that further details about the tool could not be released because of "methodological sensitivities", whatever they are.

It's telling, however, that this secret tool was only used in Dandenong and surrounding Melbourne suburbs, one of the most disadvantaged and "culturally diverse" regions in Australia.

More detailed explorations of predictive policing tools put it bluntly, like this headline at MIT Technology Review: Predictive policing algorithms are racist. They need to be dismantled.

Or as John Lorinc wrote in his lengthy feature for the Toronto Star, "big data policing is rife with technical, ethical, and political landmines".

The pushback against predictive policing is underway

At the global level, the United Nations Committee on the Elimination of Racial Discrimination has warned [PDF] how predictive policing systems that rely on historical data "can easily produce discriminatory outcomes".

"Both artificial intelligence experts and officials who interpret data must have a clear understanding of fundamental rights in order to avoid the entry of data that may contain or result in racial bias," the committee wrote.

In the UK, the Centre for Data Ethics and Innovation has said that police forces need to "ensure high levels of transparency and explainability of any algorithmic tools they develop or procure".

In Europe, the EU Commission's vice president Margrethe Vestager said predictive policing is "not acceptable".

Individual cities have been banning facial recognition for policing, including Portland; Minneapolis; Boston and Somerville in Massachusetts; Oakland; and even tech hub San Francisco.

At least the phrenologists were open and transparent

Back in 1836, Mackenzie's proposal went nowhere, despite his hard sell and offer to prove his plan with an experiment.

"I now put into your hands a number of certificates from eminent men, confirming my former assertion, that it is possible to classify convicts destined for our penal settlements, so that the colonists may be freed from the risk of having atrocious and incorrigible characters allotted to them, and the colonial public from the evils arising out of the escape of such characters," he wrote.

Lord Glenelg, it turns out, wasn't convinced that phrenology was a thing, and, in any event, he didn't have the funding for it.

The irate skull-fondlers expressed their dismay in The Phrenological Journal and Magazine of Moral Science for the year 1838 [PDF], even blaming the colonial governors for the violent crimes.

"As phrenologists, we must assume (and we assume this, because we speak on the strength of undeniable facts,) that the occurrence of such outrages might be much diminished, if not wholly prevented; and consequently, we must regard those to whom the power of prevention is given, but who refuse to exert that power, as morally guilty of conniving at the most deadly crimes," they wrote.

The cops keep drinking the Kool-Aid

There are three key differences between predictive policing in 2021 and its phrenological forerunner of 1836.

First, the secrecy.

Mackenzie "unhesitatingly" offered a public test of phrenology in front of Lord Glenelg and "such friends as you may wish to be present". Today, it's all confidential proprietary algorithms and police secrecy.

Second, the gullibility.

Even in a time of great faith in science and reason, Lord Glenelg was sceptical. These days the cops seem to drink the Kool-Aid as soon as it's offered.

And third, the morality, or rather, the lack of it.

Whatever you may think of Mackenzie's promotion of what we now know to be quackery, his overall aim was the moral improvement of society.

He spoke out against the "ignorance of the human constitution" which led rulers to think that "degradation is... the fitting means to restore a human being to self-respect, and to inspire an inclination towards good conduct".

Among cops and technologists alike, a coherent discussion of ethics and human rights seems to be lacking. That must be fixed, and fixed soon.
