AI is running out of computing power. IBM says the answer is this new chip

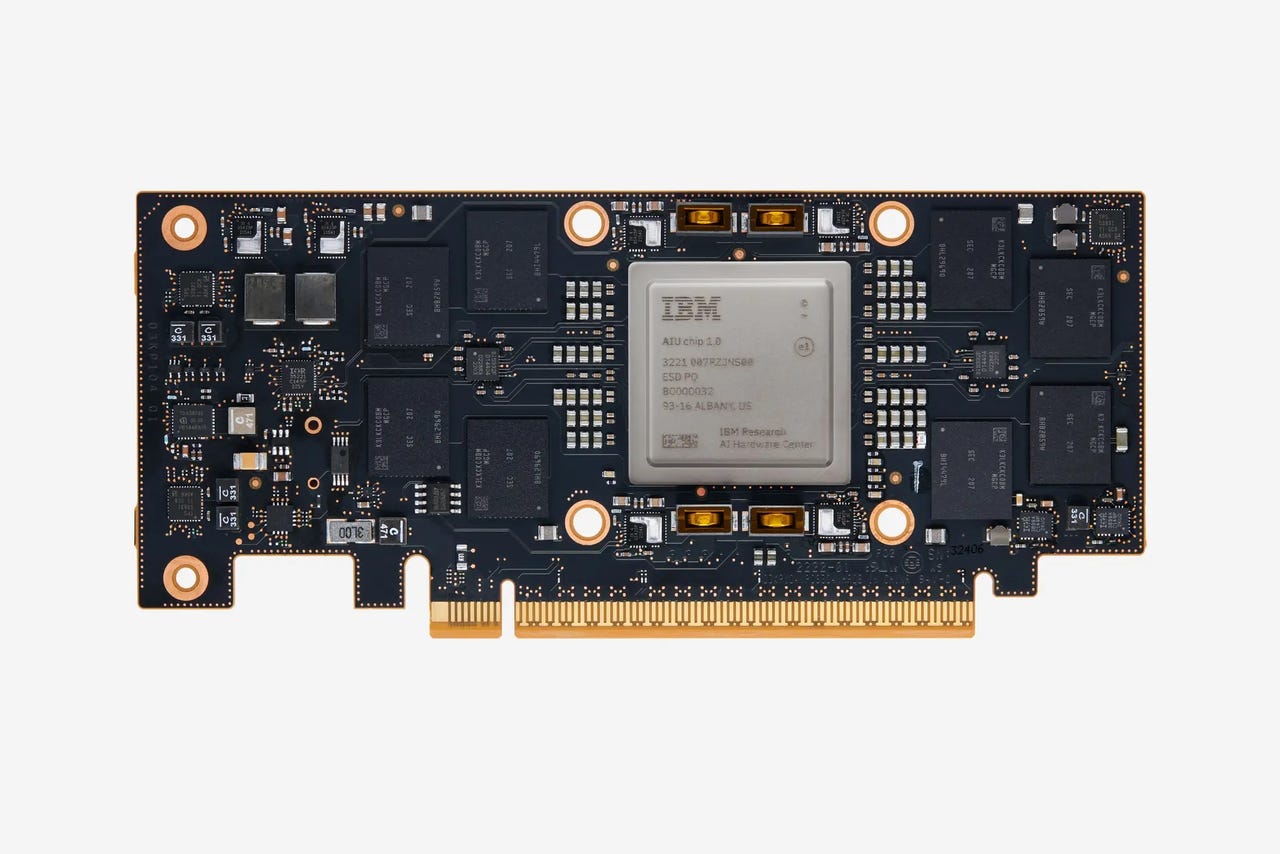

A close-up of the IBM Artificial Intelligence Unit chip.

The hype suggests that artificial intelligence (AI) is already everywhere, but in reality the technology that drives it is still developing. Many AI applications run on chips that weren't designed for AI: general-purpose CPUs, and GPUs originally created for video games. That mismatch has led to a flurry of investment, from tech giants such as IBM, Intel and Google as well as from startups and VCs, into new chips designed expressly for AI workloads.

As the technology improves, enterprise investment is likely to follow. According to Gartner, AI chip revenue totaled more than $34 billion in 2021 and is expected to grow to $86 billion by 2026. The research firm also said that fewer than 3% of data center servers in 2020 included workload accelerators, a share it expects to exceed 15% by 2026.
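Gartner's two revenue figures imply an annual growth rate. Assuming five years of compounding at a constant rate between 2021 and 2026 (an illustrative assumption, not a rate Gartner is quoted as giving here), the implied compound annual growth rate works out to roughly 20% a year:

```latex
\text{CAGR} = \left(\frac{86}{34}\right)^{1/5} - 1 \approx 2.529^{0.2} - 1 \approx 0.204
```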

IBM Research, for its part, just unveiled the Artificial Intelligence Unit (AIU), a prototype chip specialized for AI.


"We're running out of computing power. AI models are growing exponentially, but the hardware to train these behemoths and run them on servers in the cloud or on edge devices like smartphones and sensors hasn't advanced as quickly," said IBM.


The AIU is the first complete system-on-a-chip (SoC) from the IBM Research AI Hardware Center designed expressly to run enterprise AI deep-learning models.

IBM argues that the "workhorse of traditional computing", otherwise known as the CPU, was designed before deep learning arrived. While CPUs are good for general-purpose applications, they aren't so good at training and running deep-learning models, which require massively parallel AI operations.

"There's no question in our minds that AI is going to be a fundamental driver of IT solutions for a long, long time," Jeff Burns, director of AI Compute for IBM Research, told ZDNET. "It's going to be infused across the computing landscape, across these complicated enterprise IT infrastructures and solutions in a very broad and diffuse way."

For IBM, it makes the most sense to build complete solutions that are effectively universal, Burns said, "so that we can integrate those capabilities into different compute platforms and support a very, very broad variety of enterprise AI requirements."

The AIU is an application-specific integrated circuit (ASIC), but it can be programmed to run any type of deep-learning task. The chip features 32 processing cores built with 5 nm technology and contains 23 billion transistors. The layout is simpler than that of a CPU, designed to send data directly from one compute engine to the next, making it more energy efficient. It's designed to be as easy to use as a graphics card and can be plugged into any computer or server with a PCIe slot.

To conserve energy and resources, the AIU leverages approximate computing, a technique IBM developed to trade computational precision for efficiency. Traditionally, computation has relied on 64- and 32-bit floating-point arithmetic to deliver the level of precision needed for finance, scientific calculations and other applications where detailed accuracy matters. However, that level of precision isn't really necessary for the vast majority of AI applications.
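To make the precision trade-off concrete, the short Python sketch below stores the number 0.1 in 64-, 32- and 16-bit IEEE 754 floating point and reads it back, showing how much error each narrower format introduces. It is a generic illustration of floating-point precision using the standard `struct` module, not IBM's approximate computing technique; the 16-bit case is added here for comparison and is not mentioned in the article.

```python
import struct

def round_trip(x: float, fmt: str) -> float:
    """Store x in the given IEEE 754 format and read it back as a Python float.

    fmt is a struct format code: 'd' = 64-bit, 'f' = 32-bit, 'e' = 16-bit.
    """
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

value = 0.1
for bits, fmt in ((64, "d"), (32, "f"), (16, "e")):
    stored = round_trip(value, fmt)
    # Absolute error introduced purely by squeezing the value into fewer bits
    print(f"{bits}-bit: {stored!r}  error={abs(stored - value):.2e}")
```

The error grows from zero at 64 bits to about 1.5e-9 at 32 bits and about 2.4e-5 at 16 bits. For a neural network weight, an error of that size rarely changes the final answer, which is the intuition behind trading precision for efficiency.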

"If you think about plotting the trajectory of an autonomous driving vehicle, there's no exact position in the lane where the car needs to be," Burns explains. "There's a range of places in the lane."

Neural networks are fundamentally inexact: they produce an output with a probability. For instance, a computer vision program might tell you with 98% certainty that you're looking at a picture of a cat. Even so, neural networks were initially trained with high-precision arithmetic, which consumed significant energy and time.
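A common way for an image classifier to turn its raw scores into probabilities like the "98% cat" example is the softmax function. The sketch below is a minimal illustration; the labels and raw scores (`logits`) are hypothetical values chosen for this example, and the article does not say which output function any particular IBM model uses.

```python
import math

def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the largest score for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores a vision model might produce for three labels
labels = ["cat", "dog", "fox"]
logits = [6.0, 1.5, 1.0]
probs = softmax(logits)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.1%}")
```

With these scores, "cat" receives about 98% of the probability mass. The answer is a confidence, not an exact value, so small numerical errors in the scores barely move it.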


The AIU's approximate computing technique allows it to drop from 32-bit floating-point arithmetic to 8-bit formats, which hold a quarter as much information.
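Going from 32 bits to a quarter as much information means 8 bits per number. One widely used way to do that is symmetric linear quantization to signed 8-bit integers, sketched below in plain Python. This is a generic technique swapped in for illustration; the article does not describe the AIU's exact number formats, and the weight values are hypothetical.

```python
import struct

def quantize_int8(values):
    """Symmetric linear quantization of floats to signed 8-bit integer codes.

    Returns the codes plus the scale needed to map them back to floats.
    """
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0                       # size of one step on the 8-bit grid
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize_int8(codes, scale):
    """Map 8-bit codes back to approximate float values."""
    return [c * scale for c in codes]

# Hypothetical layer weights, normally stored as 32-bit floats (4 bytes each)
weights = [0.42, -1.37, 0.05, 0.91, -0.66]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)

fp32_bytes = len(struct.pack(f"{len(weights)}f", *weights))  # 4 bytes per value
int8_bytes = len(struct.pack(f"{len(codes)}b", *codes))      # 1 byte per value
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes)
print(f"{fp32_bytes} bytes -> {int8_bytes} bytes, max error {max_error:.4f}")
```

Storage drops to a quarter, and each restored weight is off by at most half a grid step (here about 0.005), a loss most deep-learning workloads can tolerate.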

To ensure the chip is truly universal, IBM has focused on more than just hardware innovations. IBM Research has put a huge emphasis on foundation models, with a team of somewhere between 400 and 500 people working on them. In contrast to the AI models that are built for a specific task, foundation models are trained on a broad set of unlabeled data, creating a resource akin to a gigantic database. Then, when you need a model for a specific task, you can retrain the foundation model using a relatively small amount of labeled data.
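The pretrain-then-adapt idea can be sketched in miniature. Below, a hypothetical `frozen_backbone` function stands in for a foundation model whose weights stay fixed, and only a small logistic-regression "head" is trained on a handful of labeled examples. This is one common variant (often called linear probing); full fine-tuning would also update the backbone. All names and data here are illustrative assumptions, not IBM's code.

```python
import math

def frozen_backbone(x):
    """Hypothetical stand-in for a pretrained foundation model.

    Maps a raw input to features; it is never updated during adaptation.
    """
    return [x, x * x]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(examples, epochs=2000, lr=0.1):
    """Fit a small logistic-regression head on the frozen features with SGD."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in examples:
            f = frozen_backbone(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            grad = p - y  # derivative of the log loss with respect to the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b

def predict(w, b, x):
    f = frozen_backbone(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# A small labeled set for the downstream task: label 1 when |x| is large
labeled = [(-2.0, 1), (-1.5, 1), (-0.2, 0), (0.1, 0), (0.3, 0), (1.8, 1), (2.2, 1)]
w, b = train_head(labeled)
print([predict(w, b, x) for x, _ in labeled])
```

Because the backbone already supplies useful features (here, `x * x`), seven labeled examples are enough for the small head to learn the task.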

Using this approach, IBM intends to tackle different verticals and different AI use cases. There are a handful of domains for which the company is building foundation models – those use cases cover areas such as chemistry and time series data. Time series data, which simply refers to data that's collected over regular intervals of time, is critical for industrial companies that need to observe how their equipment is functioning. After building foundation models for a handful of key areas, IBM can develop more specific, vertical-driven offerings. The team has also ensured the software for the AIU is completely compatible with IBM-owned Red Hat's software stack.
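As a simple picture of what "data collected over regular intervals" looks like in an equipment-monitoring setting, the sketch below checks that a hypothetical sensor reports every 60 seconds and flags readings that jump away from a rolling baseline. The readings, window size and tolerance are illustrative assumptions, and this basic rolling-mean check is far simpler than a foundation model for time series.

```python
from statistics import mean

def check_regular(timestamps, interval):
    """Return True if every gap between consecutive samples equals `interval`."""
    return all(b - a == interval for a, b in zip(timestamps, timestamps[1:]))

def flag_anomalies(readings, window=3, tolerance=5.0):
    """Return indices of readings that differ from the mean of the
    previous `window` readings by more than `tolerance`."""
    flags = []
    for i in range(window, len(readings)):
        baseline = mean(readings[i - window:i])
        if abs(readings[i] - baseline) > tolerance:
            flags.append(i)
    return flags

# Hypothetical temperature sensor on a machine, sampled every 60 seconds
timestamps = [0, 60, 120, 180, 240, 300, 360]
temps = [70.1, 70.4, 69.9, 70.2, 78.5, 70.3, 70.0]
print(check_regular(timestamps, 60))
print(flag_anomalies(temps))
```

Here the spike at index 4 is flagged. The regular sampling interval is what makes comparing each reading against its recent neighbors meaningful.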