Apple's VR: A flurry of patent applications, a focus on user 'behavior'

Apple's revised claims on VR and AR headset tech emphasize a data set of user behavior, including hand, head and facial gestures.

Apple has had a busy July filing patent applications on virtual reality technology. Three have shown up at the U.S. Patent & Trademark Office, and those are only the three most obvious.

As ZDNet's David Gewirtz related last month, speculation has been running hot for a long time now that Apple is going to offer some sort of VR, or augmented reality, or "mixed reality" headsets or goggles, rumored to be "Apple Glass."

The three patent applications describe, respectively, a way to use infrared light to detect which way a person wearing a headset is looking; a way to adjust the resolution of an image a person sees to mimic the eye's "foveation"; and a system for compensating for motion sickness when a person is riding in a car looking into a VR screen.

All three applications are "continuations" of prior applications that were submitted in recent years and that were subsequently awarded patents by the USPTO. "Continuation" is a technical term, usually designating a shift in what's claimed from what had previously been claimed.


In that sense, a continuation patent filing can represent an important enhancement to what had previously been claimed.

The differences can be subtle but interesting.

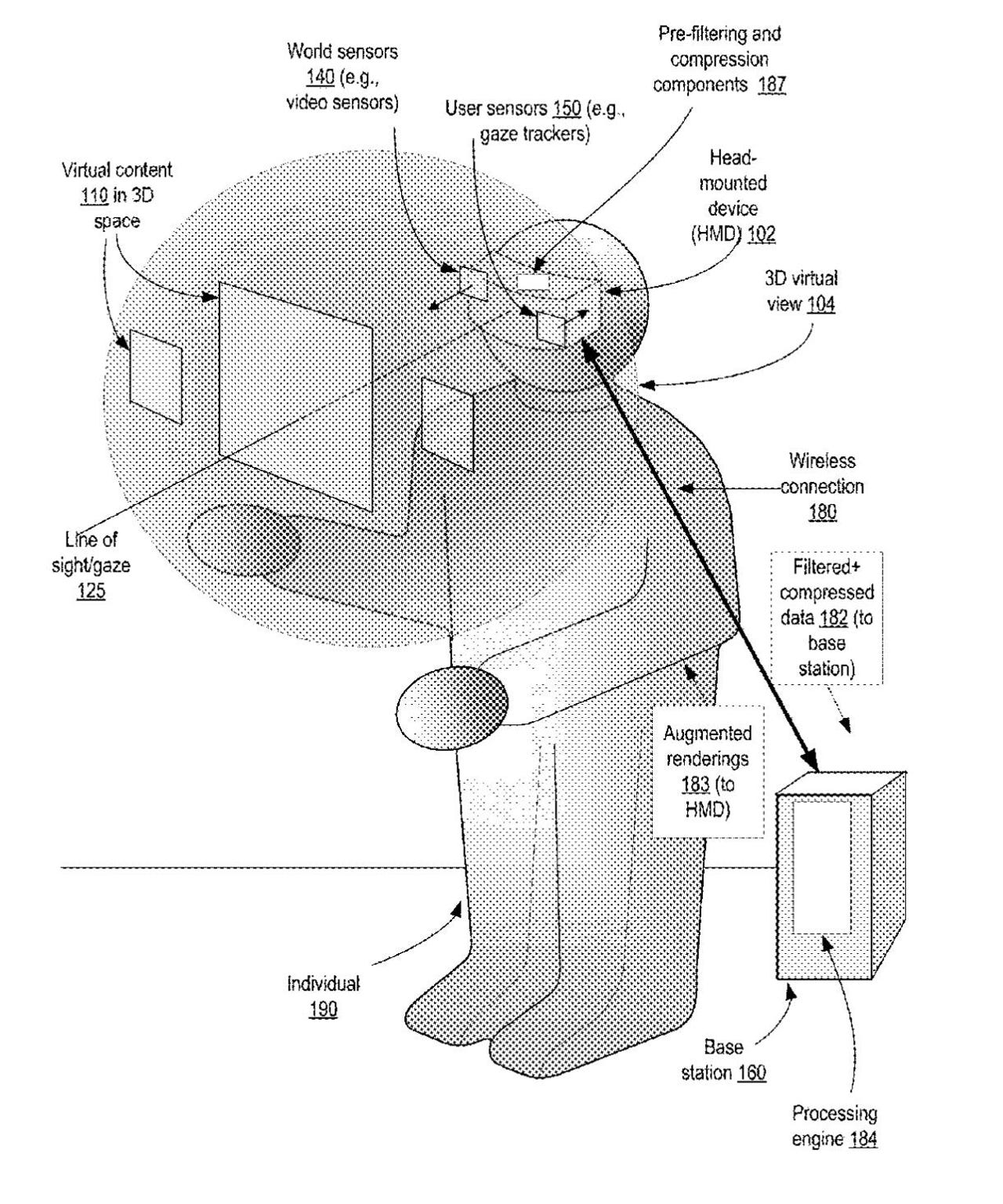

For example, patent application US 2022/0222790 A1, published July 14th, is a continuation of patent number 11,295,425 B2, awarded in April. In both cases, what's being patented is a filter that combines on screen two different renderings of a video to be seen through a VR or a mixed reality headset. The point is to achieve foveation, the shifting of resolution dependent on where a person's gaze is directed, in order to make a scene more realistic.
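
To make the idea of foveation concrete, here is a minimal Python sketch. It is not Apple's method and is not taken from the patent: the function names, the square "foveal" region, and the box blur standing in for a low-pass filter are all assumptions made for illustration. It applies one filter layer near the gaze point and a coarser layer everywhere else.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame: rows of pixel intensities


def box_blur_at(frame: Frame, row: int, col: int, radius: int) -> float:
    """Average the pixels in a (2*radius+1)-wide window, clipped at the frame edges."""
    height, width = len(frame), len(frame[0])
    total, count = 0.0, 0
    for r in range(max(0, row - radius), min(height, row + radius + 1)):
        for c in range(max(0, col - radius), min(width, col + radius + 1)):
            total += frame[r][c]
            count += 1
    return total / count


def foveate(frame: Frame, gaze_row: int, gaze_col: int, fovea_radius: int,
            fine_radius: int = 0, coarse_radius: int = 2) -> Frame:
    """Filter pixels near the gaze point lightly and the periphery more heavily.

    All parameter names are hypothetical. A radius of 0 leaves a pixel unchanged.
    """
    out: Frame = []
    for r in range(len(frame)):
        out_row = []
        for c in range(len(frame[0])):
            # Chebyshev distance gives a square region around the gaze point.
            near_gaze = max(abs(r - gaze_row), abs(c - gaze_col)) <= fovea_radius
            radius = fine_radius if near_gaze else coarse_radius
            out_row.append(box_blur_at(frame, r, c, radius))
        out.append(out_row)
    return out


# Example: an 8x8 checkerboard with the gaze at its center.
checkerboard = [[float((r + c) % 2) for c in range(8)] for r in range(8)]
foveated = foveate(checkerboard, gaze_row=4, gaze_col=4, fovea_radius=1)
```

In this sketch, pixels within one step of the gaze point keep their original values, while the rest of the frame is smoothed. A real headset would presumably work on color video and use far more sophisticated filters.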

In the published patent, the focus was on those two layers of video. In the new application, all of the original 20 claims of that published patent are replaced with 40 brand-new claims. Much of the language is the same. However, one distinction stands out: the word "behavior."

The new application prominently mentions behavior, as in user behavior, using the term 24 times versus just 3 times in the prior version.

The behavior in this case refers to a "behavior data set," a collection of data, gathered from sensors, about gestures that a person wearing the headset might make, including a hand gesture or face gesture or head movement. The idea is for those user behaviors to influence how the filter shifts between its different displays of the environment.
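
As a rough illustration of how a behavior data set might feed into a filter setting, consider the following Python sketch. Everything in it is hypothetical: the `BehaviorSample` fields, the `choose_fovea_radius` function, and the policy of shrinking the sharp region as the head moves faster are assumptions for the sake of example, not details from Apple's filing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BehaviorSample:
    """One sensor reading; the field names are hypothetical, not from the patent."""
    kind: str     # e.g. "head", "hand", or "face"
    speed: float  # normalized movement speed, expected in [0, 1]


def choose_fovea_radius(behaviors: List[BehaviorSample],
                        min_radius: int = 1, max_radius: int = 8) -> int:
    """Illustrative policy only: shrink the sharp region as head movement speeds up."""
    head_speeds = [b.speed for b in behaviors if b.kind == "head"]
    if not head_speeds:
        # No head motion reported: keep the largest sharp region.
        return max_radius
    # Clamp the fastest reading to [0, 1] before scaling.
    fastest = min(1.0, max(0.0, max(head_speeds)))
    return round(max_radius - fastest * (max_radius - min_radius))


# Example: a fast head turn combined with a slow hand gesture.
samples = [BehaviorSample("head", 1.0), BehaviorSample("hand", 0.2)]
radius = choose_fovea_radius(samples)
```

Here only head movement affects the result; hand and face samples are carried in the data set but ignored by this particular policy. Other mappings from behavior to filter parameters are just as plausible.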

While detecting user behavior via sensors is mentioned in the published patent, the patent application now updates its claims of the total system to explicitly claim the integration of the filter and the user behavior awareness.

Compare the two versions of the language. First, the published patent's claim number eight:

8. A system, comprising: one or more processors; and one or more memories; wherein the one or more memories store program instructions that when executed on or across the one or more processors perform a method comprising: filtering one or more video frames using a first set of values of parameters of a multi-layer low-pass filter, wherein the multi-layer low-pass filter comprises a first layer and a second layer with respective filtering resolutions, the filtering comprising: for individual ones of the one or more video frames, applying the first layer to a first pixel subset of the video frame, and applying the second layer to a second pixel subset of the video frame; determining one or more properties of content of the one or more video frames; and modifying, based at least in part on the one or more properties, a value of at least one parameter of the multi-layer low-pass filter.

Second, the new continuation patent application's replacement claim number 28:

28. A system, comprising: one or more processors; and one or more memories; wherein the one or more memories store program instructions that when executed on or across the one or more processors perform a method comprising: obtaining, at a first device using a first set of sensors, an environment data set comprising a first video frame corresponding to a scene visible to an individual; obtaining, at the first device using a second set of sensors, a behavior data set comprising representations of one or more behaviors of the individual; filtering at least a portion of the environment data set using a multi-layer filter at the first device, wherein the filtering comprises (a) applying a first layer of the multi-layer filter to a first subset of the first video frame and (b) applying a second layer of the multi-layer filter to a second subset of the first video frame, wherein a filtering resolution of the first layer differs from a filtering resolution of the second layer; and obtaining, at the first device, content to be displayed to the individual, wherein at least a portion of the content is generated at a second device based at least in part on (a) a result of the filtering and (b) the behavior data set.

This more extensive integration of terms may mean that Apple is coming closer to some sort of realization of the many aspects that a headset must have.

Of the other two patent applications, application 20220236799 refers to a gaze-tracking system using infrared light pointed at the eyeball to see where a wearer is directing their gaze. It's a continuation of published patent number 11,360,557, published in June. The application updates language about the particulars of how the diffraction grating, which filters the infrared light, is combined with the lenses, the eyepieces, and the infrared detectors.

The third patent application, 20220222904, describes replacing a passenger's view of the world when they're traveling in a moving vehicle with a simulated view of the world, with the intent of shifting that simulated view in order to prevent motion sickness that can happen when one isn't looking out the window of a car. It's a continuation of published patent 11,321,923, published in May.

As with application 2022/0222790, the original claims in the 11,321,923 patent are all stricken and replaced with new claims. Among the subtle variants, whereas the original language in the published patent says the device would obtain inputs of "three-dimensional (3D) model data of the real environment and sensor data from the vehicle," the new version says the inputs will "include indications of visual cues of an environment outside the vehicle, and wherein the environment comprises other vehicles."

That sounds like a broader kind of claim, to include stuff that's not just 3D data.