Deep Learning Software vs. Hardware: NVIDIA releases TensorRT 7 inference software, Intel acquires Habana Labs

At GTC China yesterday, NVIDIA made a series of announcements. Some had to do with local partners and their achievements, such as NVIDIA powering the likes of Alibaba and Baidu. Partners of this magnitude are bound to generate impressive numbers and turn some heads. Other announcements had to do with new hardware.

NVIDIA unveiled Orin, a new system-on-a-chip (SoC) designed for autonomous vehicles and robots, as well as a new software-defined platform powered by the SoC, called NVIDIA DRIVE AGX Orin. This signals NVIDIA's interest and progress in domain-specific applications, as well as an attempt to foster an ecosystem around them.

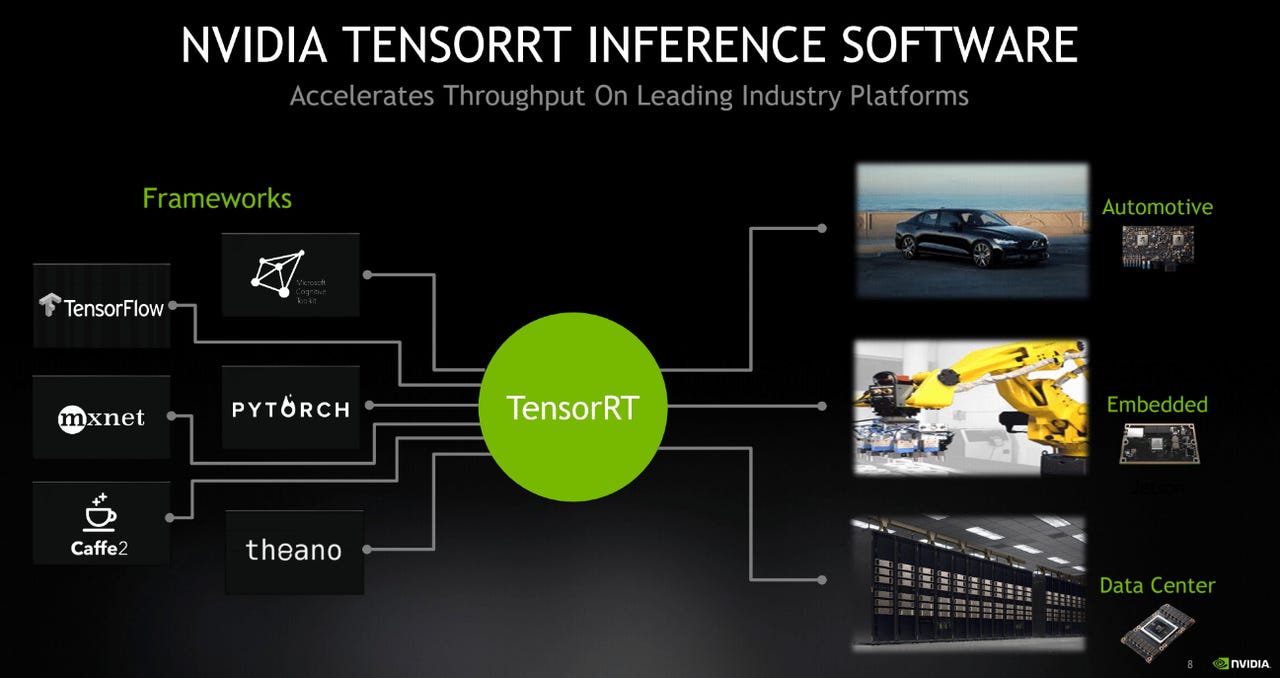

But perhaps the most interesting part of the announcements, in terms of the bigger picture in AI chips, was actually software: the new NVIDIA TensorRT 7. TensorRT is inference software that developers can use to deploy applications built on trained deep learning models.

Deep Learning inference with TensorRT 7

In machine learning (of which deep learning is a branch), developing applications involves two key phases. The first is training a model on existing data, the equivalent of developing software in traditional application development. The second is using the model to process new data, also known as inference, the equivalent of deploying software.
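The two phases can be illustrated with a deliberately tiny sketch. This is not TensorRT code and assumes nothing about its API: it is a hypothetical one-parameter model `y = w * x` in plain Python, where the illustrative function `train` fits the weight to existing data with gradient descent and `infer` applies that frozen weight to new inputs. Inference software such as TensorRT only concerns itself with the second step.

```python
# Toy illustration of training vs. inference (hypothetical model, not TensorRT).
# Model: y = w * x, with a single learnable weight w.

def train(xs, ys, lr=0.01, epochs=500):
    """Training phase: fit w to existing data by gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of (1/n) * sum((w*x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w

def infer(w, new_xs):
    """Inference phase: apply the already-trained, frozen weight to new inputs."""
    return [w * x for x in new_xs]

# Existing data generated by y = 3x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

w = train(xs, ys)                  # done once, ahead of deployment
predictions = infer(w, [5.0, 10.0])  # done repeatedly on new data
print(round(w, 3), [round(p, 2) for p in predictions])
```

Training is the expensive, iterative loop; inference is a single cheap pass per input, which is why it can be optimized and deployed separately from training.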

TensorRT works on the inference phase. In other words, developers can keep a previously trained model unchanged and, by using TensorRT 7 to run inference on NVIDIA GPUs, expect a performance boost.

NVIDIA chose to emphasize conversational AI applications, noting that inference latency has until now impeded truly interactive engagement. According to NVIDIA's press release, TensorRT 7 speeds up the components of conversational AI by more than 10x compared with running them on CPUs, driving latency below the 300-millisecond threshold considered necessary for real-time interaction.
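To see what a 10x speedup means against a 300 ms budget, here is a back-of-envelope sketch. The stage names and CPU latencies are hypothetical placeholders, not NVIDIA figures, and the sketch assumes the stages run one after another with the same speedup applied to each. Under those assumptions, a 10x speedup brings a pipeline under 300 ms only if it took less than 3 seconds on CPUs.

```python
# Back-of-envelope latency budget for a conversational AI pipeline.
# Stage names and CPU latencies are HYPOTHETICAL placeholders, not NVIDIA data.

REALTIME_THRESHOLD_MS = 300.0  # threshold cited for real-time interaction

def pipeline_latency_ms(stage_latencies_ms, speedup=1.0):
    """Total latency of sequential stages, each sped up by the same factor."""
    return sum(stage_latencies_ms.values()) / speedup

cpu_stages_ms = {
    "speech_recognition": 1200.0,     # hypothetical
    "language_understanding": 900.0,  # hypothetical
    "text_to_speech": 600.0,          # hypothetical
}

cpu_total = pipeline_latency_ms(cpu_stages_ms)                 # CPU baseline
gpu_total = pipeline_latency_ms(cpu_stages_ms, speedup=10.0)   # assumed uniform 10x

print(cpu_total, cpu_total < REALTIME_THRESHOLD_MS)
print(gpu_total, gpu_total < REALTIME_THRESHOLD_MS)
```

Real pipelines may overlap stages or speed them up unevenly, so this only shows the arithmetic behind the claim, not actual TensorRT 7 performance.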

NVIDIA announced improvements to its TensorRT software for deep learning. Image: NVIDIA

There is no mention of the performance gain of the new TensorRT compared to its previous version, or compared to other inference options. We postulate that NVIDIA chose to emphasize conversational AI for two reasons. First, it's a captivating application. Second, the enhancements in TensorRT 7 seem geared towards it.

In terms of qualitative features, NVIDIA notes that TensorRT 7 includes a new deep learning compiler designed to automatically optimize and accelerate the increasingly complex recurrent and transformer-based neural networks needed for AI speech applications. Such models, for example Google's BERT, are the state of the art in natural language processing (NLP). Note that TensorRT 7 comes on the heels of NVIDIA's previous work on training BERT.

Discussing use cases for such models powered by NVIDIA, its spokespeople referred to Microsoft as an example. Microsoft's Bing search engine processes queries using BERT-based models running on NVIDIA GPUs, which enables Bing to deliver relevant results in real time, 800 times faster than the previous implementation.

Deep Learning inference with Habana Labs Goya

Just a couple of days earlier, Intel made a long-awaited move in this space, too, announcing the acquisition of Habana Labs, an Israel-based AI chip startup. Intel has long focused on CPUs, and the superior performance of other chip architectures (such as GPUs) on machine learning workloads posed a risk to its market share going forward.

As these types of workloads gain an increasing share in data centers, Intel faces a growing number of data center owners, such as Alibaba and Baidu, choosing to move those workloads to GPUs. Lately, Intel has been on a round of AI-related acquisitions: Altera in 2015, Nervana in 2016, Vertex.ai in 2019.

We posit, however, that Habana Labs is Intel's most significant acquisition. This is not just because of the financial terms (the acquisition cost $2 billion), but mostly because Habana Labs seems well placed to take on NVIDIA's GPUs.

As opposed to NVIDIA, Habana Labs develops separate lines of processors for training and inference, called Gaudi and Goya, respectively. Gaudi is currently being sampled by select hyperscale customers and is expected to deliver up to a 4x increase in throughput in large node training systems. Goya has been available for a while.

Developing custom architectures for training and inference is one of Habana Labs' key premises. According to third-party benchmarks made available by Habana Labs in December 2018, Goya seems to clearly outperform both CPUs and GPUs on inference. This raises a couple of interesting questions.

Habana separates machine learning training from inference

The first question is whether TensorRT 7 changes things, and the short answer is that we don't know. Not only were Habana Labs' benchmarks released a year ago, but they also refer to different workloads: they were performed on image recognition tasks (ResNet-50), while TensorRT 7 seems tuned for NLP workloads.

The second question is: even if Goya is indeed much faster than NVIDIA's GPUs on these workloads too, will clients switch to it? Again, we don't know, and even if they do, it probably won't happen very soon. Besides the fact that Intel has yet to complete the acquisition, the sales cycle for AI chips is not as short as it is for software.

In addition, as we've noted in the past, NVIDIA's strength is not just the hardware. NVIDIA has also created an ecosystem and software offerings to facilitate the use of its GPUs, and TensorRT 7 goes to show this. Habana Labs also has its own software stack, but it is less mature and has a smaller footprint than NVIDIA's.

In any case, it looks like Intel is now set to become a contender for AI workloads, too. When and how exactly this will manifest remains to be seen.