DeepMind AlphaGo Zero learns on its own without meatbag intervention

DeepMind has said it has created the best Go player in the world because it was able to do away with human knowledge and start with a blank slate.

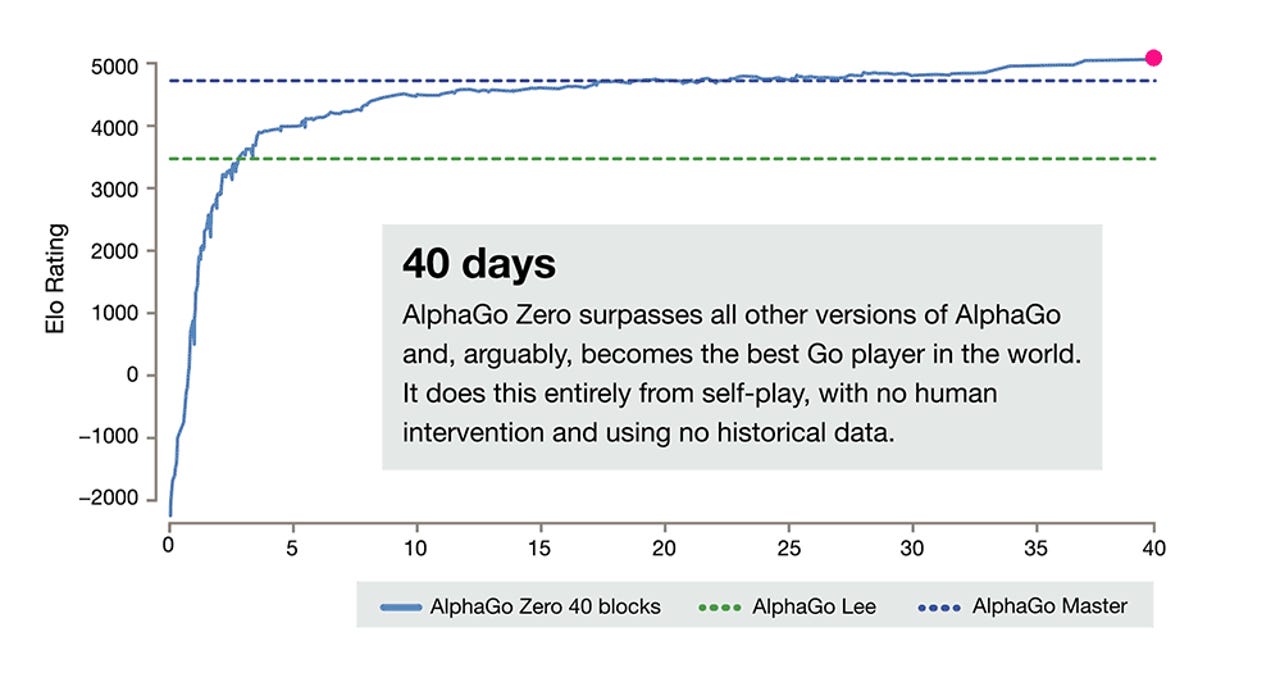

AlphaGo Zero begins by playing "completely random" Go games against itself, and within three days is able to beat the version of AlphaGo that defeated Lee Se-dol in March 2016 by 100 games to 0, the company said in a blog post.

By the 21-day mark, it is able to defeat AlphaGo Master, an online version that appeared in January and won 60 straight games against top Go players, and after 40 days it is able to beat all other versions of AlphaGo.

DeepMind cofounder and CEO Demis Hassabis said the training process for the neural network underlying AlphaGo Zero was stripped back to remove some "hand-engineered features" previously used, made use of a single network rather than a pair of networks, and did away with Monte-Carlo rollouts.

"The system starts off with a neural network that knows nothing about the game of Go. It then plays games against itself, by combining this neural network with a powerful search algorithm," Hassabis said. "This updated neural network is then recombined with the search algorithm to create a new, stronger version of AlphaGo Zero, and the process begins again."

"This technique is more powerful than previous versions of AlphaGo because it is no longer constrained by the limits of human knowledge. Instead, it is able to learn tabula rasa from the strongest player in the world: AlphaGo itself."
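The loop Hassabis describes (play against yourself using the current evaluator plus a search, update the evaluator from the results, repeat) can be illustrated on a far smaller game. What follows is a minimal sketch under loud assumptions, not DeepMind's method: the game is a toy stone-taking game rather than Go, a plain table of win estimates stands in for the neural network, and a one-move lookahead stands in for the search algorithm. Every name in it (`train`, `search`, `self_play_game`, `MAX_TAKE`) is hypothetical.

```python
import random

MAX_TAKE = 3  # toy rule (assumption): remove 1-3 stones; taking the last stone wins


def legal_moves(stones):
    return range(1, min(MAX_TAKE, stones) + 1)


def search(values, stones, explore, rng):
    """One-move lookahead standing in for a real search algorithm."""
    moves = list(legal_moves(stones))
    if rng.random() < explore:
        return rng.choice(moves)  # occasional random move so every state gets tried
    # values[s] estimates the win chance of whoever must move with s stones left,
    # so the best move leaves the opponent in the lowest-valued state.
    return min(moves, key=lambda m: values[stones - m])


def self_play_game(values, start, explore, rng):
    """Play one game against itself; return (stones, outcome) per move made."""
    history, stones, player = [], start, 0
    while stones > 0:
        history.append((stones, player))
        stones -= search(values, stones, explore, rng)
        winner = player  # whoever moved last took the final stone
        player = 1 - player
    return [(s, 1.0 if p == winner else 0.0) for s, p in history]


def train(start=10, games=5000, lr=0.1, explore=0.2, seed=0):
    rng = random.Random(seed)
    values = [0.5] * (start + 1)  # blank slate: every position looks like a coin flip
    values[0] = 0.0  # no stones left means the player to move has already lost
    for _ in range(games):
        # Nudge each visited position's estimate toward how the game actually ended,
        # then reuse the updated table in the next self-play game.
        for stones, outcome in self_play_game(values, start, explore, rng):
            values[stones] += lr * (outcome - values[stones])
    return values
```

Calling `train()` and then choosing `min(legal_moves(10), key=lambda m: values[10 - m])` shows which opening move the table has come to prefer. The point of the sketch is only the shape of the loop: no human games are supplied at any stage, and the only training signal is who won each self-played game.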

DeepMind lead researcher David Silver said that removing the need to learn from humans makes it possible to build more generalised AI algorithms.

"The fact that we've seen a program achieve a very high level of performance in a domain as complicated and challenging as Go should mean that we can now start to tackle some of the most challenging and impactful problems for humanity," he said.

Although DeepMind gained prominence by defeating human Go players, the company has also turned its attention to StarCraft II.

"We've worked closely with the StarCraft II team to develop an API that supports something similar to previous bots written with a 'scripted' interface, allowing programmatic control of individual units and access to the full game state (with some new options as well)," DeepMind said in November 2016.

"Ultimately, agents will play directly from pixels, so to get us there, we've developed a new image-based interface that outputs a simplified low-resolution RGB image data for map and minimap, and the option to break out features into separate 'layers', like terrain heightfield, unit type, unit health, etc."
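To make the idea of separate "layers" concrete, here is a small sketch of how same-sized 2D grids might be stacked into one feature vector per map cell. It is purely illustrative and does not use or reproduce the StarCraft II API: the layer names, the `stack_layers` helper, and the tiny 2x3 map are all made up for the example.

```python
from typing import Dict, List

Grid = List[List[int]]


def stack_layers(layers: Dict[str, Grid], order: List[str]) -> List[List[List[int]]]:
    """Combine same-sized 2D layers into a grid of per-cell feature vectors."""
    height = len(layers[order[0]])
    width = len(layers[order[0]][0])
    for name in order:
        grid = layers[name]
        if len(grid) != height or any(len(row) != width for row in grid):
            raise ValueError(f"layer {name!r} does not match {height}x{width}")
    return [
        [[layers[name][y][x] for name in order] for x in range(width)]
        for y in range(height)
    ]


# Hypothetical observation for a 2x3 map; the values are invented for illustration.
observation = {
    "terrain_height": [[0, 1, 1], [0, 0, 2]],
    "unit_type": [[0, 0, 5], [0, 3, 0]],
    "unit_health": [[0, 0, 40], [0, 100, 0]],
}
stacked = stack_layers(observation, ["terrain_height", "unit_type", "unit_health"])
```

Each cell of `stacked` then holds one number per layer in the order requested, which is the kind of input an image-based agent could consume in place of raw screen pixels.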

The Alphabet-owned company said it chose StarCraft II because, being played in real time, it is closer to a real-world environment than any other game it has used for testing so far.

"The skills required for an agent to progress through the environment and play StarCraft well could ultimately transfer to real-world tasks," it claimed.
