Devil’s in the details in historic AI debate

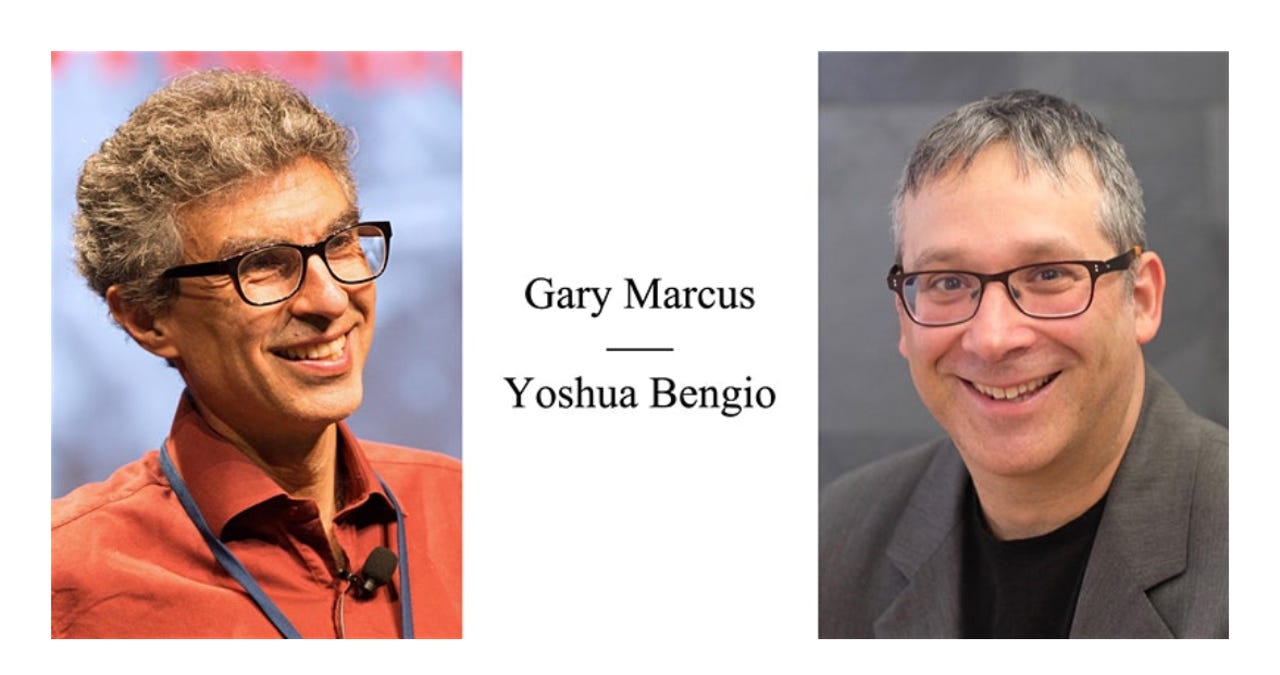

Yoshua Bengio, left, has been a machine learning researcher for decades and runs Montreal's MILA institute for AI. Gary Marcus is a psychologist at NYU and a frequent critic of the puffed-up hype around AI.

Gary Marcus, the NYU professor and entrepreneur who has made himself a gadfly of deep learning with his frequent skewering of headline hype, and Yoshua Bengio, a leading practitioner of deep learning awarded computing's highest honor for his pioneering work, went head to head Monday night in a two-hour debate, webcast from the headquarters of Bengio's MILA institute in Montreal.

The two scholars seemed to find a lot of common ground on the broad strokes of where artificial intelligence needs to go, such as bringing reasoning to AI.

But when the discussion periodically lapsed into particular terminology or historical assertions, the two were suddenly at odds.

A recording of the stream is posted on MILA's Facebook page if you want to go back and watch it.

Marcus started with a recap of his career as a cognitive scientist, noting that he had done the first work on big data, in studies of early childhood learning. What struck him about neural nets, all the way back in the 1990s, was that "classic connectionism couldn't learn universals outside training space." The failures of neural nets in some cases are an argument for "richer innate priors," he said, meaning structure that seems to be built into organisms through evolution rather than merely learned.

Marcus clarified that he has never said deep learning should be abandoned, but that it should be "re-contextualized as a tool among many." He said he and Bengio were at one time far apart in their thinking because Bengio "relied too heavily on black boxes," meaning neural nets. "Recently he has taken a sharp turn to positions I've long argued for," said Marcus, referring to the notion of "hybrid" AI systems that would combine some machine learning with some form of symbol manipulation.

Although Marcus said he "really admire[s] Yoshua" and "think[s] he should be [a] role model in intellectual honesty," he criticized Bengio for not giving him credit. "I would like him to cite me, I think not citing me devalues my contribution."


Bengio summarized his main interest these days as how neural nets can respond to data that is "out of distribution," or OOD, that is, the problem of how to generalize beyond the training data. He referred to a recently published paper, accepted at next year's ICLR conference, "A meta-transfer objective for learning to disentangle causal mechanisms."

Bengio quickly moved on to articulating what might be called a manifesto for how he views AI problems. Deep learning, with which he is most associated, is "not a particular architecture or a particular training procedure," said Bengio. "It's something that's moving, it's more a philosophy as we add more principles to our toolbox."

Such programs are "inspired by the brain in many ways, and they use some form of optimization, sometimes multiple objectives, a coordinated optimization of multiple parts."

When he touched on the overlap of their interests, Bengio said that he has "been arguing for a little bit now, certainly less than Gary, about the importance of compositionality." He noted that human brains can combine existing concepts in novel ways. "People have been thinking about this at least for a few years now," he said. "We haven't solved it, but people are starting to understand."

When it came time for the interaction between the two, Marcus observed "we don't disagree on that much."

Indeed, in broad strokes, the two seemed to be in accord that AI systems need a hybrid approach; that some kinds of priors are needed; and that there are values that can be manipulated at a high level, with Marcus calling them "symbols" and Bengio opting for "variables." Both scholars made extensive reference to psychologist Daniel Kahneman's notion, expressed in his book "Thinking, Fast and Slow," that there are two systems of thought, a fast, intuitive one and a slow, deliberative one, a structure that both Marcus and Bengio seemed to find agreeable as a framework.


But the two quickly diverged when they got into specific examples. On the matter of a hybrid system, for example, Marcus asked Bengio, "Why are you not satisfied that hybrids are part of the answer?" to which Bengio replied, "it depends on what you mean by hybrid…" Pressed, Bengio said that he's not interested if hybrids are "about GOFAI [good old-fashioned AI] algorithms, like rules and logic, which have a lot of value as inspiration, but trying to take them and glue them to neural nets doesn't work."

Marcus argued for symbols, pointing out that DeepMind's chess-winning AlphaZero program is a hybrid involving symbols because it uses Monte Carlo Tree Search. "You have to keep track of your trees, and trees are symbols."

Bengio dismissed the notion that tree search is a symbol system. "It's a matter of words," Bengio said. "If you want to call that symbols, but symbols to me are different, they have to do with the discreteness of concepts."

Marcus reflected that "we both think the other side is straw-manning," meaning each was creating an overly simplistic caricature of the other's position in order to knock it down. But, insisted Marcus, "we are pushing to the same place from opposite sides."

Responded Bengio, "I don't care what words you want to use, I'm just trying to build something that works."

Despite some kerfuffles, Bengio and Marcus ended on a warm note with a handshake.

An extended exchange concerned the notion of priors, structure taken as a given that makes possible everything learned afterward. Marcus characterized Bengio as wanting "a soft prior," perhaps in contrast to the "rich prior" that Marcus argued for. "I want as little a prior as possible," said Bengio, to which Marcus responded, "We disagree in taste; I don't want a huge amount, I want more than you."

Much of the trickiness of the discussion may have stemmed from the two scholars talking from rather different places. Marcus was describing, in broad terms, the kind of system he would like to see, based on observations about cognition, while Bengio was steeped in the details of current experiments and what they could or couldn't do.

"There is an evolution," said Bengio. "We are doing research; it's not like we have one algorithm, we are building and constantly trying to expand the set of principles."

When it came time for the Q&A portion of the debate, various intriguing questions were put forth. What is the innate knowledge needed for deep learning? Should machine learning put less effort into the "manual" part, creating architectures, and more into "meta-learning"? Is quantum computing going to change AI?

As for AI attaining consciousness, "The question of consciousness deserves more time than this debate allows," said Bengio. While no one knows exactly what consciousness is, Bengio implied that today's AI is very far from anything like it. "Philosophers ask should we eventually give personhood" to machines, he observed. "I don't think we are anywhere close to understanding this question enough to give answers."

Toward the end of the evening, both Marcus and Bengio had a chance to address the matter of ethics in broad terms. As an audience member put it, "One thing that disturbs me is that there is nothing about checks and balances and the ethics going into creating the algorithms of all of this AI; as a human in this world, I find this incredibly disturbing."

Marcus replied that "my own version is that common sense could be a way to build a framework that would allow machines to represent values; we want robots to not do harm to people."

Bengio added the question was "very important," and that, over and above the technical question, "at least as important […] is the question of how we deploy these things, which is the responsibility of everyone in the chain."

"We have to make sure we steer our boat in the direction that's best for citizens," said Bengio.

The evening wouldn't be complete without one more kerfuffle between Marcus and Bengio. Marcus argued that deep learning has not yet achieved the kind of dynamic rewiring seen in human brains. "No, that's deep learning," insisted Bengio. "It's just gates, we've had that since 1989," referring to gating, a mechanism by which some units in a neural net multiplicatively control the flow of signals through others.

"I'll end with the agreement," said Marcus. "Gates are the solution."