EmTech MIT: Giving machines common sense

The world has seen remarkable progress in artificial intelligence in recent years, but general AI remains science fiction. One of the keys to making this leap could be the human brain. In a talk at the EmTech MIT conference this week, MIT professor Josh Tenenbaum described a new university moonshot to build machines that can learn like children.

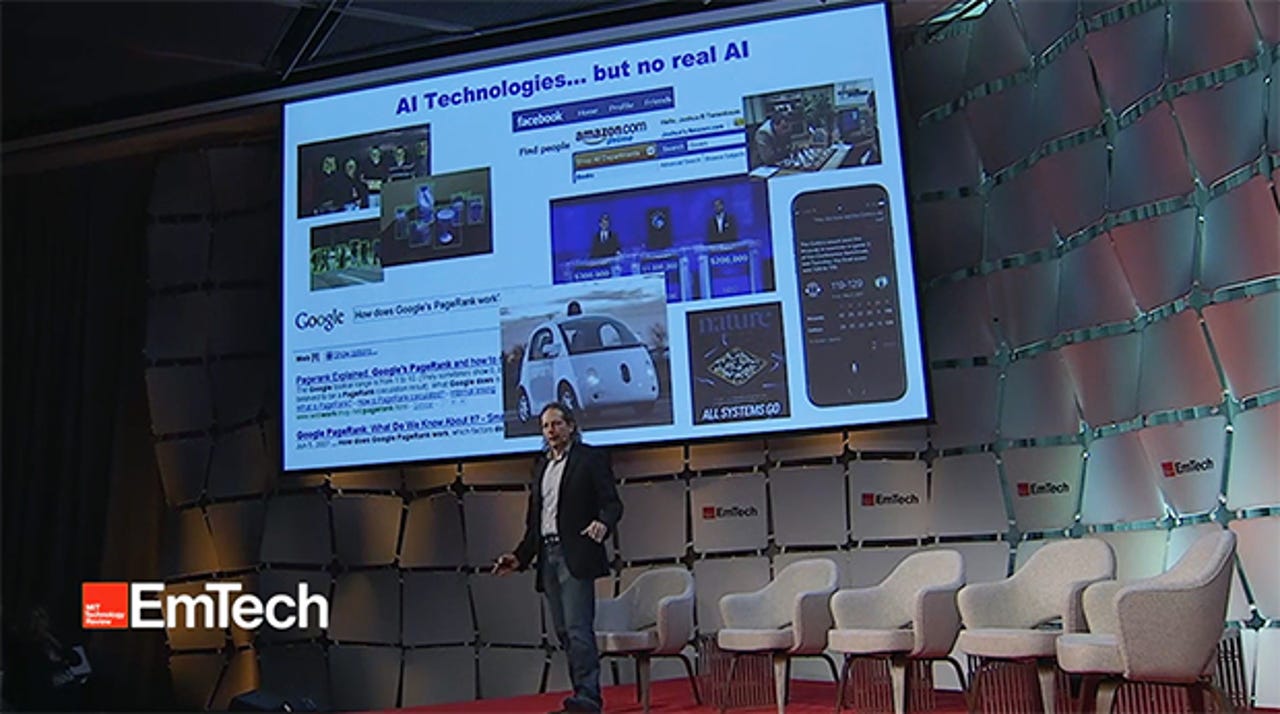

"Why do we have all these AI technologies, but fundamentally no real AI?" Tenenbaum said. "We have machines that do useful things we used to think only humans could do, but none of these systems are truly intelligent, none of them have the flexible, common sense [of] . . . even a one-year-old."

Each of these systems is designed to do one thing very well, whereas a person can do all of these things well, and much more. That's because current AI technologies are based on pattern recognition, while human learning is more complex: it involves explaining and understanding things, making plans, solving problems, and imagining new things we've never seen before. The goal of the Learning moonshot is to reverse-engineer this ability to model the world in order to create "more human-like machine intelligence."

The idea of building machines that learn like children is hardly new. In the 1950 paper in which he proposed his test, Alan Turing suggested creating programs modeled on an infant's brain because he believed it would be simpler, like an empty notebook. But we now know a lot more about how the brain works. "Turing was brilliant but we now know he got this wrong," Tenenbaum said. "Children are nothing like a blank slate and learning is nothing like just copying things down from a blackboard."

Instead, we are born with much of the wiring for seeing and understanding the world, and we learn not by memorizing patterns but by actively thinking and exploring; in other words, through play. The challenge is how to capture these processes to give machines some "common sense." By combining deep learning and neural networks with older probabilistic programming techniques and game engines, the group has built an "intuitive physics engine" and an "intuitive psychology engine." These can model infant-like abilities such as predicting when a stack of blocks is going to fall (without any explicit training) or observing someone's actions and inferring their goals.
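The group's actual engines are built on probabilistic programs and game-engine physics; what follows is only a toy illustration of the underlying idea, assuming a 2D column of equal-mass blocks and a simple static-balance rule in place of a real physics simulator. Perception is treated as noisy: the perceived scene is jittered many times, and the model's "intuition" that the tower will fall is the fraction of jittered scenes that are unbalanced. The `Block` type, `is_stable`, `prob_fall`, and the noise level are hypothetical names and choices made for this sketch.

```python
import random
from dataclasses import dataclass


@dataclass
class Block:
    x: float      # horizontal position of the block's center
    width: float  # all blocks are assumed to have equal mass


def is_stable(stack):
    """Static balance check for a single column of blocks.

    stack[0] rests on the table; stack[i + 1] rests on stack[i]. The column
    balances if, at every interface, the center of mass of all blocks above
    lies within the contact region between the two touching blocks.
    """
    for i in range(len(stack) - 1):
        lower, upper = stack[i], stack[i + 1]
        left = max(lower.x - lower.width / 2, upper.x - upper.width / 2)
        right = min(lower.x + lower.width / 2, upper.x + upper.width / 2)
        above = stack[i + 1:]
        com = sum(b.x for b in above) / len(above)  # equal masses
        if not (left <= com <= right):  # also fails when blocks don't overlap
            return False
    return True


def prob_fall(stack, noise=0.1, samples=1000, seed=0):
    """Estimate P(fall) by checking many noisy copies of the perceived scene."""
    rng = random.Random(seed)
    falls = 0
    for _ in range(samples):
        perceived = [Block(b.x + rng.gauss(0.0, noise), b.width) for b in stack]
        if not is_stable(perceived):
            falls += 1
    return falls / samples


if __name__ == "__main__":
    tidy = [Block(0.0, 1.0) for _ in range(4)]
    leaning = [Block(0.4 * i, 1.0) for i in range(4)]
    print("tidy tower:", prob_fall(tidy))
    print("leaning tower:", prob_fall(leaning))
```

A perfectly aligned tower comes out as very unlikely to fall, while a staircase-like tower that shifts by 0.4 block-widths per level comes out as almost certain to fall. No training data is involved, only simulation under perceptual uncertainty.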

The "hard problem" is figuring out how to actually program these engines. For this, the team uses Bayesian program learning (BPL) methods, which can learn a concept from just one example (one-shot learning) and then generalize from it the way humans do. In a separate talk, Brenden Lake, a former MIT PhD student now at NYU, described how the BPL framework, after seeing just one example, recognized handwritten characters with better accuracy than humans (and much better accuracy than convolutional neural networks). It also passed visual Turing tests: shown a new character, BPL generated its own examples of it, and given a new alphabet, it created new symbols that plausibly belong to that set.
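BPL itself represents a handwritten character as a program that composes pen strokes, which is far too involved for a short example. The sketch below swaps in a much simpler toy in the spirit of the "number game" often used to illustrate Bayesian concept learning: concepts are tiny predicate programs over the numbers 1 to 100, the prior is uniform, and the likelihood follows the size principle (each example is assumed to be drawn uniformly from the concept, so smaller concepts that contain it score higher). The hypothesis list and the names `PROGRAMS`, `EXTENSIONS`, `posterior`, and `generalize` are illustrative assumptions, not part of BPL.

```python
import math
from fractions import Fraction

DOMAIN = range(1, 101)

# Hypothesis space: each concept is a tiny "program" (a predicate on integers).
PROGRAMS = {f"multiple of {k}": (lambda n, k=k: n % k == 0) for k in range(2, 11)}
PROGRAMS.update({
    "power of 2": lambda n: n in {2 ** e for e in range(1, 7)},
    "power of 3": lambda n: n in {3 ** e for e in range(1, 5)},
    "perfect square": lambda n: math.isqrt(n) ** 2 == n,
    "any number": lambda n: True,
})

# Precompute each program's extension: the set of numbers it accepts.
EXTENSIONS = {
    name: frozenset(n for n in DOMAIN if prog(n)) for name, prog in PROGRAMS.items()
}


def posterior(examples):
    """P(h | examples) with a uniform prior and a size-principle likelihood.

    P(x | h) = 1 / |h| if x is in h, and 0 otherwise; examples are independent.
    """
    weights = {
        name: Fraction(1, len(ext)) ** len(examples)
        for name, ext in EXTENSIONS.items()
        if all(x in ext for x in examples)
    }
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def generalize(examples, y):
    """Posterior predictive probability that y belongs to the same concept."""
    post = posterior(examples)
    return sum((p for name, p in post.items() if y in EXTENSIONS[name]), Fraction(0))


if __name__ == "__main__":
    for y in (64, 32, 10, 17):
        print(y, generalize([16], y))
```

After a single example, 16, the toy model is certain that 64 belongs to the same concept (every surviving hypothesis contains it), fairly confident about 32, and doubtful about 10 or 17. Exact fractions are used so the probabilities can be inspected without rounding.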

This is interesting, but it applies only to simple symbols. More recently, the team has developed an algorithm called DreamCoder that is designed to help machines take skills acquired in one domain and apply them in other areas. Inspired by the fact that much learning takes place while we are sleeping, DreamCoder can learn new concepts, and even new programming languages, while it is "sleeping."
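DreamCoder's published design alternates a "wake" phase, in which it searches for programs that solve tasks, with "sleep" phases, one of which compresses the solved programs into new, reusable library functions. The sketch below is a drastically simplified stand-in for that compression step, assuming programs are plain expression trees: it only promotes the repeated closed subexpression that saves the most space, whereas DreamCoder learns parameterized abstractions and also trains a neural network on "dreamed" programs. The tree encoding, the savings formula, and the function names are hypothetical choices for illustration.

```python
from collections import Counter

# A program is an expression tree: a leaf is a string, and an internal node is
# a tuple (operator, arg1, arg2, ...).


def size(expr):
    """Number of nodes in an expression tree."""
    if isinstance(expr, tuple):
        return 1 + sum(size(arg) for arg in expr[1:])
    return 1


def subtrees(expr):
    """Yield every internal (non-leaf) subtree of expr, including expr itself."""
    if isinstance(expr, tuple):
        yield expr
        for arg in expr[1:]:
            yield from subtrees(arg)


def replace(expr, target, name):
    """Replace every occurrence of target in expr with the leaf `name`."""
    if expr == target:
        return name
    if isinstance(expr, tuple):
        return (expr[0],) + tuple(replace(arg, target, name) for arg in expr[1:])
    return expr


def learn_primitive(programs, name):
    """Promote the repeated subtree that most shrinks the corpus to a primitive.

    Replacing n copies of a size-s subtree saves n * (s - 1) nodes, and storing
    its definition once costs s nodes. Returns (rewritten programs, definition),
    or (programs, None) if no subtree gives a net saving.
    """
    counts = Counter(t for p in programs for t in subtrees(p))
    best, best_gain = None, 0
    for tree, n in counts.items():
        gain = n * (size(tree) - 1) - size(tree)
        if gain > best_gain:
            best, best_gain = tree, gain
    if best is None:
        return programs, None
    return [replace(p, best, name) for p in programs], best


if __name__ == "__main__":
    double_plus_one = ("add", ("mul", "x", "2"), "1")  # 2x + 1
    corpus = [double_plus_one, ("sq", double_plus_one), ("neg", double_plus_one)]
    rewritten, definition = learn_primitive(corpus, "f")
    print("f =", definition)
    print(rewritten)
```

In the toy corpus, the shared fragment `2x + 1` is lifted into a new primitive `f`, after which the programs plus the one-time definition of `f` are shorter than the originals. A shorter description is, roughly, what makes a learned concept cheaper to reuse when searching for solutions to later tasks.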

Tenenbaum concedes that we are still a long way from building machines that really learn like children, if we ever get there at all. And this is just one of several moonshots under MIT's Quest for Intelligence, which also includes teams working on Creativity, Emotion, Language and Perception. But each has a realistic milestone and interim engineering steps along the way, and he notes that the small steps can often lead to bigger things. The work in his Computational Cognitive Science Group has already produced one spin-off, a startup called iSee that is applying these common-sense engines to self-driving cars.

"We are making some progress but I want to emphasize we have a long way to go," Tenenbaum said. "If we could do this, it would be the basis for artificial intelligence that is actually intelligent."