Human Rights Commission calls for a freeze on 'high-risk' facial recognition

The Australian Human Rights Commission (AHRC) has called for stronger laws around the use of facial recognition and other biometric technology, asking for a ban on its use in "high-risk" areas.

The call was made in a 240-page report [PDF] from the AHRC. Outgoing Human Rights Commissioner Edward Santow said Australians want technology that is safe, fair, and reliable, and that with the right settings in law, policy, education, and funding, the government, alongside the private sector, can "build a firm foundation of public trust in new technology".

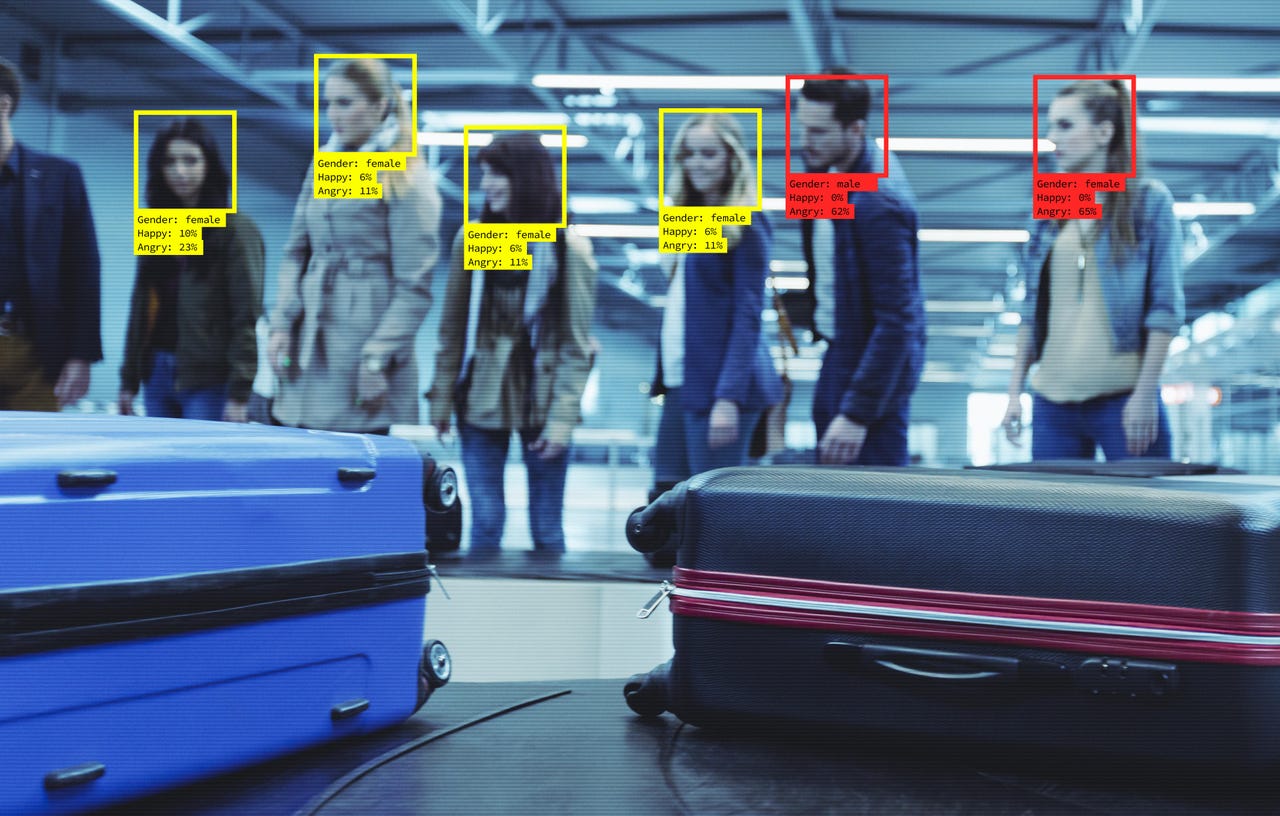

"The use of AI in biometric technology, and especially some forms of facial recognition, has prompted growing public and expert concern," the report says.

As a result, the Commission recommends privacy law reform to protect against the "most serious harms associated with biometric technology".

"Australian law should provide stronger, clearer, and more targeted human rights protections regarding the development and use of biometric technologies, including facial recognition," it wrote.

"Until these protections are in place, the Commission recommends a moratorium on the use of biometric technologies, including facial recognition, in high-risk areas."

The report details a number of concerns raised throughout the AHRC's consultation on the use of biometrics, such as the risk that profiling and errors lead to discrimination, including bias against people of colour, as well as a blanket concern over mass surveillance.

The AHRC has made a number of recommendations as a result, with the first asking federal, state, and territory governments to introduce legislation that regulates the use of facial recognition and other biometric technology.

The legislation, it said, should expressly protect human rights; apply to the use of this technology in decision making that has a legal, or similarly significant, effect for individuals, or where there is a high risk to human rights, such as in policing and law enforcement; and be developed through in-depth consultation with the community, industry, and expert bodies such as the AHRC and the Office of the Australian Information Commissioner (OAIC).

"To date, existing legislation has not proven to be an effective brake on inappropriate use of facial and other biometric technology," the report says. "Without effective regulation in this area, it seems likely that community trust in the underlying technology will deteriorate."

It has urged all governments across the country to work together.

The AHRC has asked that the moratorium remain until such legislation is in place. It would cover the use of facial recognition and other biometric technology in decision making that has a legal, or similarly significant, effect for individuals, or where there is a high risk to human rights, such as in policing and law enforcement.

The moratorium, however, would not apply to all uses of facial and biometric technology.

"Particular attention should be given to high-risk contexts, such as the use of facial recognition in policing, in schools and in other areas where human rights breaches are more likely to occur," it adds.

It also said the government should introduce a statutory cause of action for serious invasion of privacy where biometrics are concerned.

Calling for a modernised regulatory system to ensure that AI-informed decision making is "lawful, transparent, explainable, responsible, and subject to appropriate human oversight, review, and intervention", the AHRC has also requested the creation of a new AI Safety Commissioner to help lead Australia's transition to an "AI-powered world".

Ideally operating as an independent statutory office, the AI Safety Commissioner should focus on promoting safety and protecting human rights in the development and use of AI in Australia, such as by working with regulators to build technical capacity regarding the development and use of AI in their respective areas. The Commissioner should also be responsible for monitoring and investigating developments and trends in the use of AI.

It has also asked the government to convene a multi-disciplinary taskforce on AI-informed decision making that could perhaps be led by the AI Safety Commissioner.

"The taskforce should consult widely in the public and private sectors, including with those whose human rights are likely to be significantly affected by AI-informed decision making," it said.

The report has also asked that the government resource the AHRC accordingly so that it can produce guidelines for how to comply with federal anti-discrimination laws in the use of AI-informed decision making.

To that end, another recommendation is that the government introduce legislation to require that a human rights impact assessment (HRIA) be undertaken before any department or agency uses an AI-informed decision-making system to make administrative decisions, as well as other legislation that requires any affected individual to be notified when AI is materially used in making an administrative decision.

It has also asked for an audit on existing, or proposed, AI-informed decision making.

Making a total of 38 recommendations, the AHRC also touches on legal accountability for private sector use of AI, asking that the legislation flagged for government use of AI also be extended to non-government entities.

Elsewhere, it has asked that the Attorney-General develop a Digital Communication Technology Standard under section 31 of the Disability Discrimination Act 1992 and consider other law and policy reform to implement the full range of accessibility obligations regarding digital communication technologies under the Convention on the Rights of Persons with Disabilities.

Additionally, it wants federal, state, territory, and local governments to commit to using digital communication technology that fully complies with recognised accessibility standards.

"We need to ask a crucial question: Can we harness technology's positive potential to deliver the future we want and need, or will it supercharge society's worst problems? The decisions we make now will provide the answer," Santow said.

He labelled the report as setting out a roadmap for achieving this goal.
