Intel unveils next-gen Movidius VPU, codenamed Keem Bay

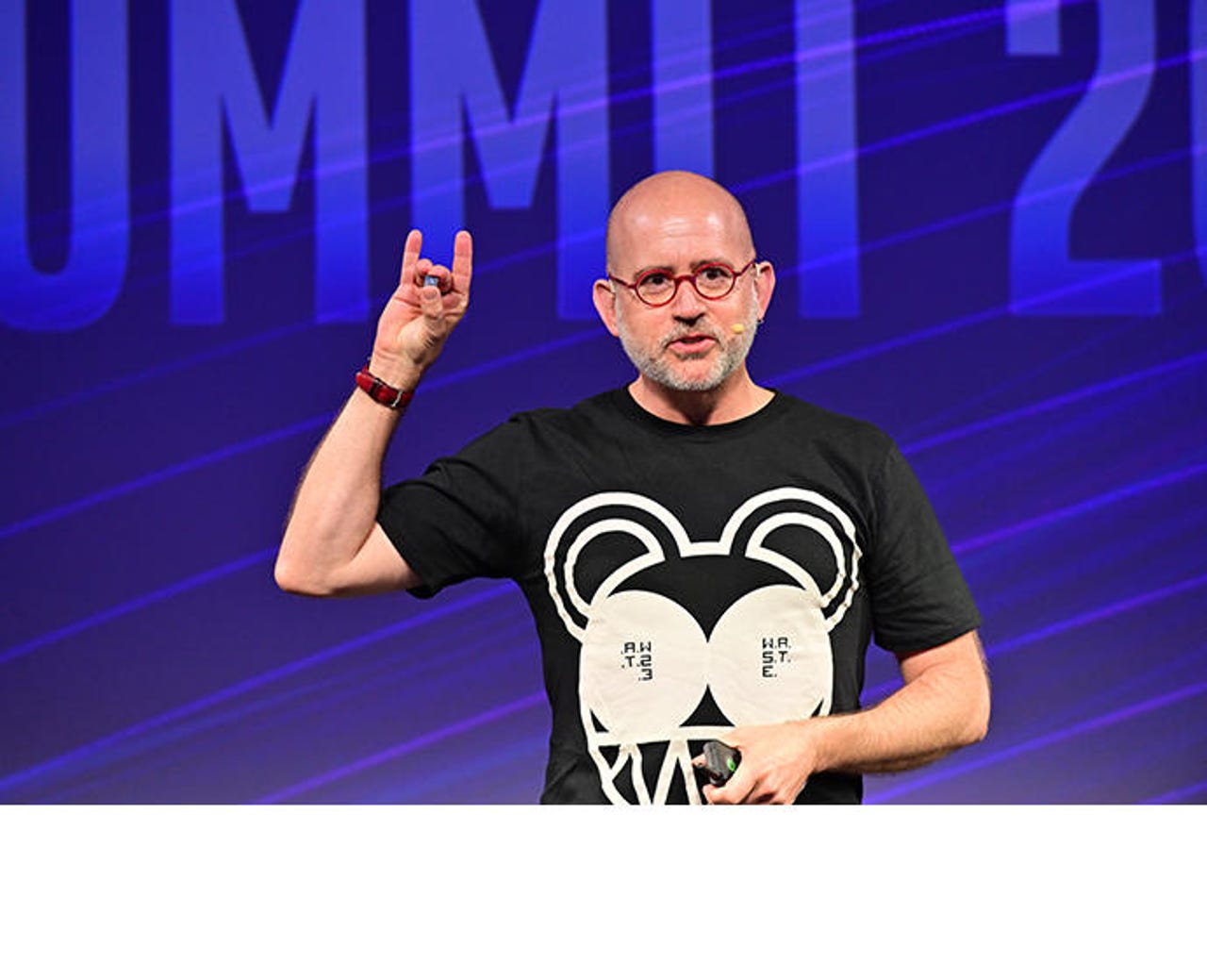

Jonathan Ballon, Intel vice president in the Internet of Things Group, displays the new Gen 3 Intel Movidius VPU during his talk at Intel's AI Summit in San Francisco on Tuesday, Nov. 12, 2019.

Intel on Tuesday unveiled the next-generation Movidius Vision Processing Unit (VPU), codenamed Keem Bay, which it says delivers leading performance with up to 6X the power efficiency of competing processors. At its AI Summit in San Francisco, the chipmaker also demonstrated the Nervana Neural Network Processor for Training (NNP-T) and the Nervana NNP for Inference (NNP-I).

By expanding its portfolio of purpose-built AI hardware and adding new software offerings, Intel intends to open up new opportunities for AI in the cloud, in the data center and at the edge. AI already brings in $3.5 billion in yearly revenue for Intel, Naveen Rao, VP and GM of Intel's AI Products Group, said at Tuesday's event.

Just as it's now a given that every business leverages the internet, "the same thing is going to happen with AI," Rao said. However, he said, computational hardware and memory have reached a breaking point, creating the need for more specialized hardware.

Intel debuted the Movidius VPU in 2017. Keem Bay, launching in the first half of 2020, adds unique architectural features to improve efficiency and throughput, Intel's Jonathan Ballon said Tuesday.

The next-gen VPU delivers 10X the raw inference throughput of the first generation, Intel says. Additionally, according to early testing, Keem Bay will offer more than 4X the raw throughput of Nvidia's TX2 SoC at one-third less power, and nearly the same raw throughput as Nvidia's Xavier SoC at one-fifth the power.
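Intel's throughput and power comparisons can be turned into rough performance-per-watt ratios. The sketch below is a back-of-the-envelope calculation, not Intel's methodology: it assumes "one-third less power" means two-thirds of the TX2's power, treats "more than 4X" as exactly 4X (so the result is a lower bound), and treats "nearly the same" throughput as Xavier as 1.0. The function name `relative_efficiency` is hypothetical.

```python
# Keem Bay figures relative to each Nvidia part (Nvidia part = 1.0),
# as reported from Intel's early testing. Interpretations are assumptions:
#   TX2:    >4X throughput (taken as 4.0), "one-third less power" (taken as 2/3)
#   Xavier: "nearly the same" throughput (taken as 1.0), one-fifth the power
comparisons = {
    "TX2": {"throughput": 4.0, "power": 2 / 3},
    "Xavier": {"throughput": 1.0, "power": 1 / 5},
}


def relative_efficiency(throughput: float, power: float) -> float:
    """Keem Bay's performance per watt relative to the competitor (hypothetical helper)."""
    return throughput / power


for name, c in comparisons.items():
    ratio = relative_efficiency(c["throughput"], c["power"])
    print(f"{name}: ~{ratio:.1f}X performance per watt")
```

Under these assumptions the TX2 comparison works out to roughly 6X performance per watt and the Xavier comparison to roughly 5X, which appears consistent with Intel's "up to 6X the power efficiency" claim.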

"At the edge, performance is important, of course," said Ballon, VP in Intel's Internet of Things Group. But "customers also care about power, size and increasingly, latency."

Designed for edge media, computer vision and inference applications, the VPU measures just 72 mm², compared with Xavier's 350 mm². Ballon called it a "workhorse" suitable for a variety of form factors, from robotics and kiosks to full-power PCIe cards.

Meanwhile, Intel's NNPs for training (NNP-T1000) and inference (NNP-I1000) are its first purpose-built ASICs for complex deep learning, aimed at cloud and data center customers.

The Intel Nervana NNP-T for training

The NNP-T is designed for high-efficiency training of real-world deep learning applications, with up to 95 percent scaling efficiency on ResNet-50 and BERT as measured on 32 cards, Intel said. According to the company, there's no loss in communications bandwidth when moving from an 8-card in-chassis system to a 32-card cross-chassis system.
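"Scaling efficiency" is commonly defined as the throughput achieved on N cards divided by N times the single-card throughput. Intel did not detail its exact methodology, so the sketch below assumes that common definition; the helper names and the 1,000 samples/sec single-card figure are hypothetical, chosen only to illustrate the arithmetic.

```python
def scaling_efficiency(throughput_n: float, throughput_1: float, n: int) -> float:
    """Fraction of ideal linear speedup achieved on n cards (hypothetical helper)."""
    return throughput_n / (n * throughput_1)


def effective_speedup(efficiency: float, n: int) -> float:
    """Speedup over a single card implied by a given scaling efficiency."""
    return efficiency * n


# Intel's reported figure: up to 95 percent scaling on 32 cards.
print(f"{effective_speedup(0.95, 32):.1f}X over one card")

# Hypothetical example: if one card trained at 1,000 samples/sec,
# 32 cards at 95 percent efficiency would reach about 30,400 samples/sec.
print(scaling_efficiency(30_400.0, 1_000.0, 32))
```

In other words, 95 percent efficiency on 32 cards corresponds to roughly a 30.4X speedup rather than the ideal 32X.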

The NNP-I, meanwhile, is designed for near-real-time, high-volume, low-latency inference. Intel recently reported its MLPerf results for two pre-production Intel Nervana NNP-I processors on pre-alpha software, achieving 10,567 images/sec in the Offline scenario and 10,263 images/sec in the Server scenario for ImageNet image classification on ResNet-50 v1.5 using ONNX.

The NNP-I will be supported by OpenVINO, a toolkit designed to make it easy to bring computer vision and deep learning inference to vision applications at the edge.

To complement OpenVINO, Intel on Tuesday also announced the new Intel DevCloud for the Edge, which allows developers to prototype and test AI solutions on a broad range of Intel processors before they buy hardware. Intel has more than 2,700 customers already using the DevCloud.

The Intel Nervana NNP-I for inference