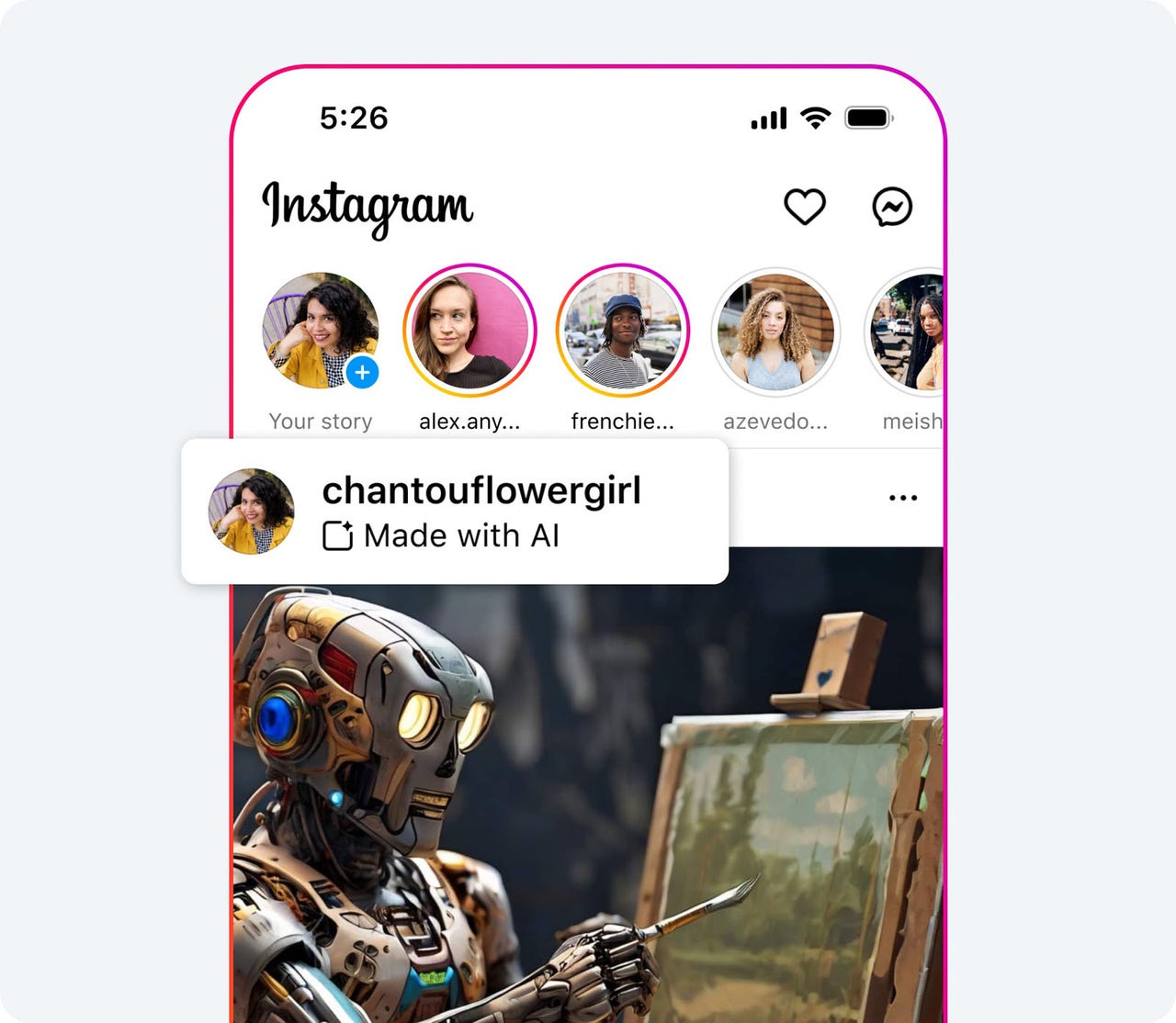

Meta promises to better label AI-generated videos, images, and audio

In February, Meta announced plans to add new labels on Instagram, Facebook, and Threads to indicate when an image was AI-generated. Now, using technical standards created by the company and industry partners, Meta plans to apply its "Made with AI" labels to videos, images, and audio clips generated by AI, based on certain industry-shared signals. (The company already adds an "Imagined with AI" tag to photorealistic images created using its own AI tools.)

In a blog post published on Friday, Meta announced plans to start labeling AI-generated content in May 2024 and to stop automatically removing such content in July 2024. Until now, the company relied on its manipulated media policy to determine whether AI-created images and videos should be taken down. Meta explained that the change stems from feedback from its Oversight Board, as well as from public opinion surveys and consultations with academics and other experts.

"If we determine that digitally-created or altered images, video, or audio create a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label so people have more information and context," Meta said in its blog post. "This overall approach gives people more information about the content so they can better assess it and so they will have context if they see the same content elsewhere."

Meta's Oversight Board, which was established in 2020 to review the company's content moderation decisions and policies, found that Meta's existing manipulated media policy is too narrow. Written in 2020, when AI-generated content was relatively rare, the policy covered only videos that were created or modified by AI to make it seem as if a person said something they didn't say. Given recent advances in generative AI, the board said the policy now needs to cover any type of manipulation, including content that shows someone doing something they didn't do.

Further, the board contends that removing AI-manipulated media that doesn't otherwise violate Meta's Community Standards could restrict freedom of expression. As such, the board recommended a less restrictive approach in which Meta would label the media as AI-generated but still let users view it.

Meta and other companies have faced complaints that the industry hasn't done enough to clamp down on the spread of fake news. Manipulated media is especially worrisome because the US and many other countries are holding elections in 2024, and videos and images of candidates can easily be faked.

"We want to help people know when photorealistic images have been created or edited using AI, so we'll continue to collaborate with industry peers through forums like the Partnership on AI and remain in a dialogue with governments and civil society – and we'll continue to review our approach as technology progresses," Meta added in its post.