Nvidia, Stanford U propose thin and light holographic glasses

The ultra-thin display of Holographic Glasses is made possible both by a clever assemblage of components, such as a modulator, waveguide and lens, and by a novel machine learning algorithm that optimizes phases of light for pupil size.

Arguably the failure of virtual reality, and its cousin, augmented reality, to light the world on fire is in no small part a result of what you might technically call The Dork Factor. The smart goggles necessary to see in a virtual world look and feel mostly terrible to anyone but the hardest-core nerds.

Goggles from Facebook's Oculus unit have improved over the years, but the latest, the Meta Quest 2 model, is still not something most people want to wear around town. Glasses from Vuzix may come closer to being fashion statements, but their display capability is primitive and not anything a person wants to gaze at for extended lengths of time.

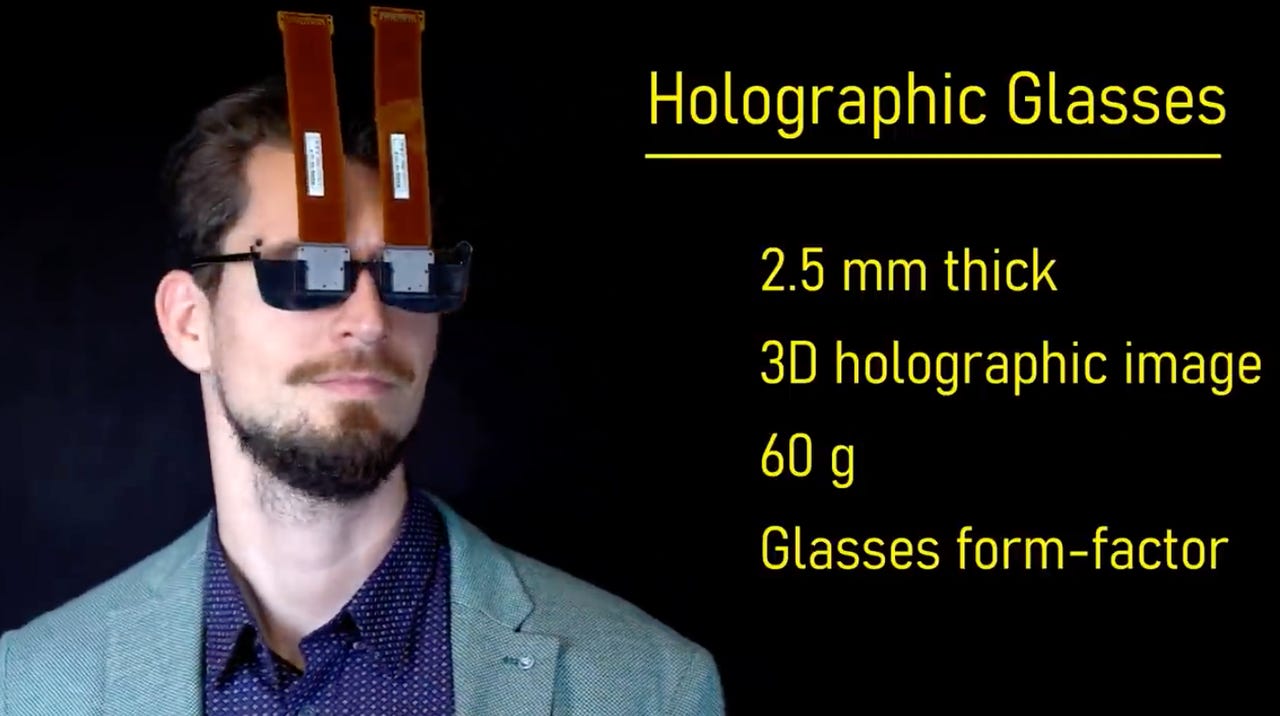

Fear not, though: help may be on the way. Nvidia, with help from Stanford University, last month proposed what it calls Holographic Glasses, a prototype of which is just a tenth of an inch thick, weighs sixty grams, and can fit in normal glasses-like frames. It also goes beyond typical 2-D graphics to produce 3-D images that allow for a focus on certain areas of a scene, and that adjust as the wearer moves.

The technology is a combination of several cutting edge hardware components along with a novel software approach.

The authors hope to do away with the cumbersome VR devices of today such as Oculus Quest.

As described in a blog post and accompanying paper and video by authors Jonghyun Kim of Nvidia and Stanford, along with Manu Gopakumar, Suyeon Choi, Yifan Peng, and Gordon Wetzstein of Stanford, and Ward Lopes of Nvidia, the Holographic Glasses are "a holographic near-eye display system that offers 2D or 3D image presentation to each eye with a device thickness of just a few millimeters."

"A true glasses-form factor holographic VR was presented for the first time," they write.

The hardware, which was constructed by Kim and team first as a bench-top set-up, and then as a 3-D-printed wearable glasses prototype, focuses first and foremost on how to slim down headsets.

The key is to reduce the big space between a user's eye and the screen that's sitting in front of it. The reason there is a space is that a certain "focal length" is needed between the screen and the lens that sits between the screen and the eye.

"Commercial VR displays are based on the magnifier principle," they write, "where a lens enlarges the image of a microdisplay to create a virtual image that the user perceives at some distance." That creates a trade-off: "Magnifiers are successful in offering wide fields of view with a large eyebox, but the large distance between lens and display results in bulky device form factors."
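The trade-off the authors describe follows from the ordinary thin-lens equation. The snippet below is a minimal sketch under an idealized thin-lens assumption; the function names and the example numbers (a 40 mm focal length with the display 38 mm from the lens) are hypothetical and are not taken from the paper or from any commercial headset.

```python
def virtual_image_distance(focal_length_m, display_distance_m):
    """Distance from the lens to the virtual image for an ideal thin-lens magnifier.

    The display must sit inside the focal length for a virtual image to form.
    """
    if not 0 < display_distance_m < focal_length_m:
        raise ValueError("display must sit between the lens and its focal point")
    # Thin-lens equation solved for the (virtual) image distance.
    return display_distance_m * focal_length_m / (focal_length_m - display_distance_m)


def magnification(focal_length_m, display_distance_m):
    """Lateral magnification of the virtual image relative to the display."""
    return virtual_image_distance(focal_length_m, display_distance_m) / display_distance_m


if __name__ == "__main__":
    # Hypothetical numbers chosen only for illustration.
    f, d = 0.040, 0.038
    print(f"virtual image at {virtual_image_distance(f, d):.2f} m, "
          f"magnified {magnification(f, d):.0f}x")
```

In this idealized picture, the display has to sit almost a full focal length away from the lens to push the virtual image out to a comfortable viewing distance, which is the gap that makes such headsets bulky.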

Instead, the authors put together three essential components: a waveguide, which takes a laser light beam and diffracts the light inside a tight space; a spatial light modulator, which projects the image from the beam of light coming out of the waveguide; and a special kind of lens called a geometric phase lens that focuses the light coming from the modulator to suit the human eye.

A diagram of the arrangement of components and the operating principle of the glasses. Light at the top enters the waveguide from a laser, exits the waveguide and strikes the spatial light modulator, where it is manipulated by the liquid crystals and sent back to the waveguide, and a portion of the light makes it out of the waveguide again to pass through the geometric phase lens to the pupil of the eye.

By using a narrow waveguide sandwiched to the modulator, and the geometric phase lens, the components are all thinner than those used in things such as Quest, and also don't require the large air gap between lens and image.

The components are all fairly standard. For example, the spatial light modulator is from a company called Holoeye, which makes numerous optical devices. The principle of the modulator is to control a series of liquid crystals so that they rotate in space. As the crystals rotate, they modulate the phase of a beam of light coming out of the waveguide. That results in the light forming a holographic image on the display surface.
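What "modulating the phase" means can be shown with a single complex number standing in for the light field at one pixel. This is a toy sketch, not Holoeye's interface or the authors' code: the function name is hypothetical, and a real modulator applies a different phase shift at each of its many pixels.

```python
import cmath
import math


def modulate_phase(field, phase_shift_rad):
    """Apply a phase-only modulation to one complex field sample.

    The amplitude (brightness) is left unchanged; only the phase rotates.
    """
    return field * cmath.exp(1j * phase_shift_rad)


if __name__ == "__main__":
    incoming = 1 + 0j                                 # unit-amplitude light, zero phase
    outgoing = modulate_phase(incoming, math.pi / 2)  # quarter-cycle delay
    print(abs(outgoing), cmath.phase(outgoing))
```

Because only the phase changes, the image is not formed by blocking light at each pixel. It emerges from the way the differently delayed contributions interfere further along the optical path.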

The novel design not only reduces thickness, it has "two distinct features which are not observed in conventional VR displays."

Those two aspects are a "dynamic eye box" and a software-based optimization called "high diffraction orders," both of which can improve what the person sees in novel ways.

The dynamic eye box refers to being able to steer the direction of the projected holographic image. By changing the angle of light as it enters the waveguide, the angle at which the holographic image strikes the eye can be made to shift as well, to simulate the shape of an object in space for that 3-D effect.

The high diffraction orders allow for an optimization of the image the person sees. Quality can be affected by how large a person's pupil is in relation to the intervals between the diffraction orders, a function of the angle at which the light reaches the pupil. If those intervals are too small, they cause all kinds of extra considerations about the distance of the lens from the image, which again introduces the problem of the bulk of the device.

To avoid that problem, the authors sought to optimize the intervals between high diffraction orders to be wider than the pupil diameter, meaning larger than 8 millimeters, by shaping them with an algorithm.
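Why those intervals are tied to the bulk of the device can be sketched with the textbook grating equation. The snippet below is a rough illustration, not the authors' model: it assumes the modulator's pixel pitch acts as the grating period and that the orders are separated at the focal plane of the lens. The function name and the numbers (520 nm green light, an 8 micrometer pixel pitch, a 20 mm focal length) are assumptions chosen for illustration, not specifications from the paper.

```python
import math


def diffraction_order_spacing(wavelength_m, pixel_pitch_m, focal_length_m):
    """Approximate distance between neighboring diffraction orders at the focal plane."""
    sin_theta = wavelength_m / pixel_pitch_m  # grating equation, first order
    if sin_theta >= 1:
        raise ValueError("pixel pitch must be larger than the wavelength")
    theta = math.asin(sin_theta)
    # An order leaving at angle theta lands this far off-axis at the focal plane.
    return focal_length_m * math.tan(theta)


if __name__ == "__main__":
    # Illustrative values only (assumed, not from the paper).
    spacing = diffraction_order_spacing(520e-9, 8e-6, 0.020)
    print(f"order spacing: {spacing * 1e3:.2f} mm")
```

Under these assumed numbers the spacing comes out at about 1.3 millimeters, far less than an 8-millimeter pupil. Widening it through optics alone would require a longer focal length, and with it a thicker device.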

The authors modify an existing algorithm introduced last year by co-author Manu Gopakumar and others, known as high-order gradient descent, or "HOGD," which "uniquely optimizes phase patterns."

To Gopakumar and team's HOGD algorithm, Kim and team added a variable to represent pupil size, creating "Pupil-HOGD."

As Kim and colleagues put it, "The novel Pupil-HOGD algorithm, which models the pupil aperture, produces the best image quality at all pupil sizes."

Although the sample specs look nifty, there are numerous caveats here. One is the issue of power consumption, which is not addressed at all. Wearables have always been limited not just by fashion fails but also by either being tethered to wall power or having insufficient battery life.

As the authors acknowledge, their prototype could seriously benefit from custom-designed components. For example, the waveguide "provides non-uniform illumination as well as a somewhat scrambled phase, which degrades the quality of wearable prototype results," they write. "With a waveguide designed for Holographic Glasses, the image quality of the wearable prototype could be improved."

In addition, the field of view of the wearable is very narrow at just 22.8 degrees, "far smaller than commercially available VR/AR displays." That narrow field of view is a limitation of the commercially available phase modulators from Holoeye and of the focal length of the geometric phase lens. The authors believe that some improved solutions, such as stacking two geometric phase lenses on top of one another, could increase that field of view to 120 degrees without increasing the bulk too much.

And, of course, one wants to know just how those ribbon connectors emerging at the top of the device like bunny ears will be integrated in an unobtrusive way.