Why hardware is eating software

We tend to think of the x86 instruction set architecture (ISA) as long settled. (An ISA defines instructions, as well as registers, memory, and other key resources.)

But Intel keeps changing the x86 ISA. Smart compilers hide much of the change, but some of the ISA additions are quite complex.


In a recent paper, Microsoft researcher Andrew Baumann asks whether it makes sense to keep adding ever more complex extensions to the ISA.

Why?

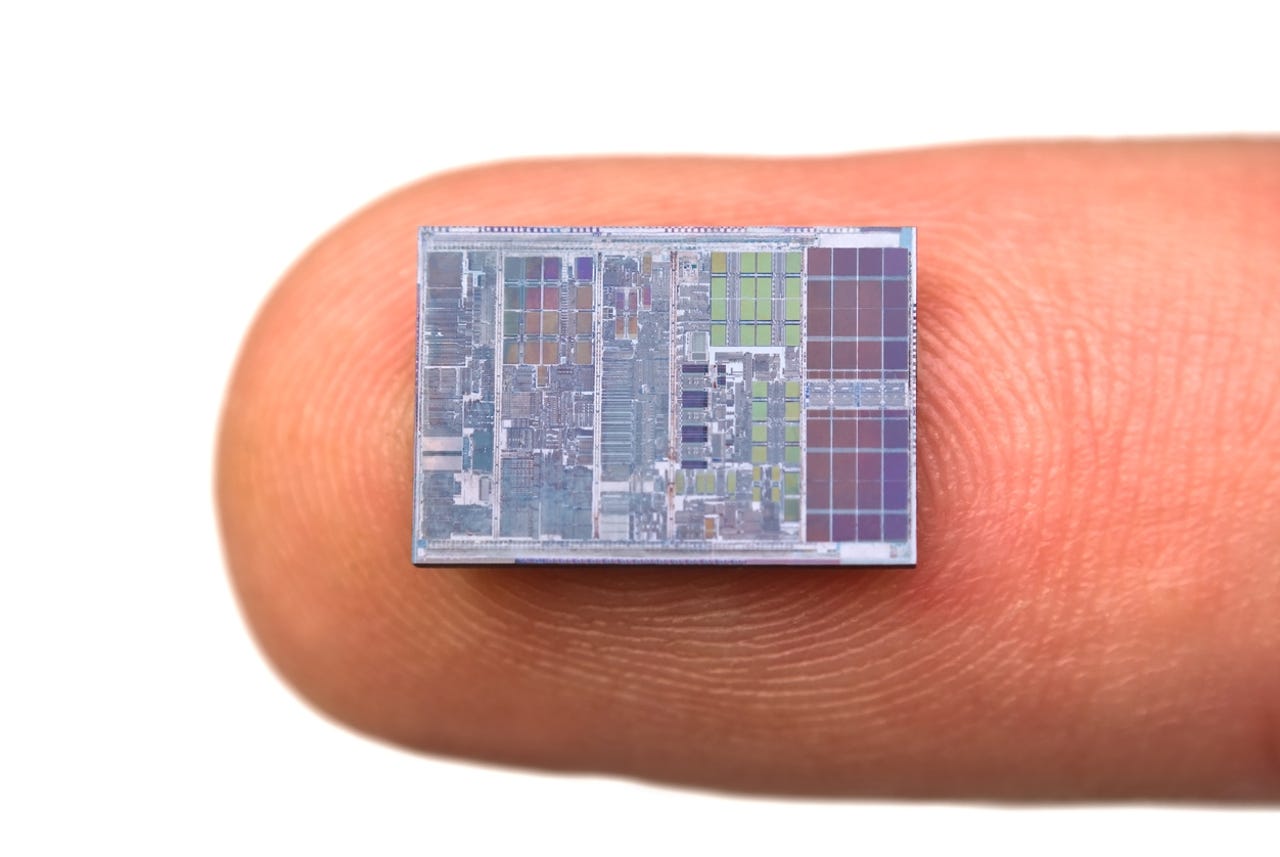

While Moore's Law is slowing, process shrinks keep increasing the number of transistors on a chip of a given size. In the last 20 years, x86 processors have gone from fewer than 10 million transistors on a chip to almost 10 billion.
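To put those figures in perspective, here is a rough back-of-the-envelope calculation. It assumes the rounded numbers quoted above (10 million to 10 billion over 20 years), so treat the result as an order-of-magnitude estimate, not a measurement.

```python
import math

# Rounded figures from the paragraph above; both are approximations.
start_transistors = 10e6   # roughly 10 million, 20 years ago
end_transistors = 10e9     # roughly 10 billion today
years = 20

growth = end_transistors / start_transistors   # overall growth factor
doublings = math.log2(growth)                  # how many times the count doubled
doubling_period_years = years / doublings      # average time per doubling

print(f"growth factor: {growth:.0f}x")
print(f"doublings: {doublings:.2f}")
print(f"average doubling period: {doubling_period_years:.2f} years")
```

On these rounded inputs, that is a thousandfold increase, or an average of about one doubling every two years across the whole period.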

Up until 2010, clock speeds kept increasing too, meaning the more complex chips also ran faster. Since 2010 though, clock speed increases have been minimal. So what shall we do with the added transistors?

A major part of Intel's answer has been to add new features to the x86 ISA. Some are obvious, such as 256-bit vector operations (512-bit is coming), a hardware random number generator, or HEVC support. Since 2010, Intel has added over 200 new instructions to the x86 ISA.

Intel's -- and the rest of the market's -- motivation is simple: Without new features, people have no incentive to buy new computers.

RISC versus CISC

But there's a downside to Intel's strategy. It recapitulates the 1980s war between CISC (Complex Instruction Set Computing) and RISC (Reduced Instruction Set Computing).

Minicomputers like the DEC VAX, as well as IBM mainframes, had CISC ISAs. When they were designed, software was much slower than hardware, so it made sense to put complex instructions into hardware.

But these instructions might require a dozen or more CPU cycles to complete, reducing the hardware advantage. More importantly, as systems migrated to single-chip implementations, the CISC chips were too complex to speed up.

David Patterson, a UC Berkeley professor and master of snappy acronyms (see RAID), coined the term RISC to describe an ISA with a small set of simple instructions and a load/store memory interface. Long story short, most CISC architectures died out as MIPS, ARM, and x86 adopted RISC concepts. x86 did so less purely than the others, but well enough to win desktops, notebooks, and servers.
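A toy sketch may help make "load/store" concrete. The instruction names and tuple formats below are invented for illustration and are not real x86 or RISC encodings. The idea is that a complex memory-to-memory instruction can be broken into simple steps where only loads and stores touch memory, which is loosely how modern x86 chips handle complex instructions internally.

```python
# Toy model only: instruction names and formats are hypothetical.

def crack(instr):
    """Expand one CISC-style instruction into load/store-style micro-ops."""
    op = instr[0]
    if op == "ADD_MEM":            # meaning: memory[dst] = memory[a] + memory[b]
        _, dst, a, b = instr
        return [
            ("LOAD", "r1", a),          # register <- memory
            ("LOAD", "r2", b),
            ("ADD", "r3", "r1", "r2"),  # register-only arithmetic
            ("STORE", "r3", dst),       # memory <- register
        ]
    return [instr]  # already simple: pass through unchanged


def run(micro_ops, memory):
    """Execute micro-ops; only LOAD and STORE touch memory."""
    regs = {}
    for uop in micro_ops:
        kind = uop[0]
        if kind == "LOAD":
            _, reg, addr = uop
            regs[reg] = memory[addr]
        elif kind == "ADD":
            _, dst, a, b = uop
            regs[dst] = regs[a] + regs[b]
        elif kind == "STORE":
            _, reg, addr = uop
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown micro-op: {kind}")
    return memory


memory = {0: 5, 1: 7, 2: 0}
micro_ops = crack(("ADD_MEM", 2, 0, 1))
run(micro_ops, memory)
print(len(micro_ops), memory[2])
```

One complex instruction becomes four simple ones here. The trade-off is more instructions in exchange for each one being easy to make fast.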

The upshot

The extra complexity of x86 meant that when the mobile revolution came along -- happy 10th iPhone! -- Intel was unable to compete with ARM. That didn't matter too much, as long as PC sales were growing, but now Intel is hurting.

The Storage Bits take

As a hardware company, Intel has always had a bias for fat, power-hungry chips, and adding over 200 new instructions to the x86 ISA is right in character. But it's hard to see how this is positive for Intel in the long term.

More transistors use more power. Intel has done great work getting x86 to lower TDP -- thermal design power -- but that's only protecting its core markets, not winning new ones. Intel needs a hard, high-level rethink of its strategy.

Microsoft's Baumann has a radical suggestion that could be a great starting point. ISAs are:

. . . no longer the boundary between hardware and software, but rather just another translation layer in the stack.

In other words, it's all software, even the CPU.
