Nvidia Ampere, plus the world’s most complex motherboard, will fuel gigantic AI models

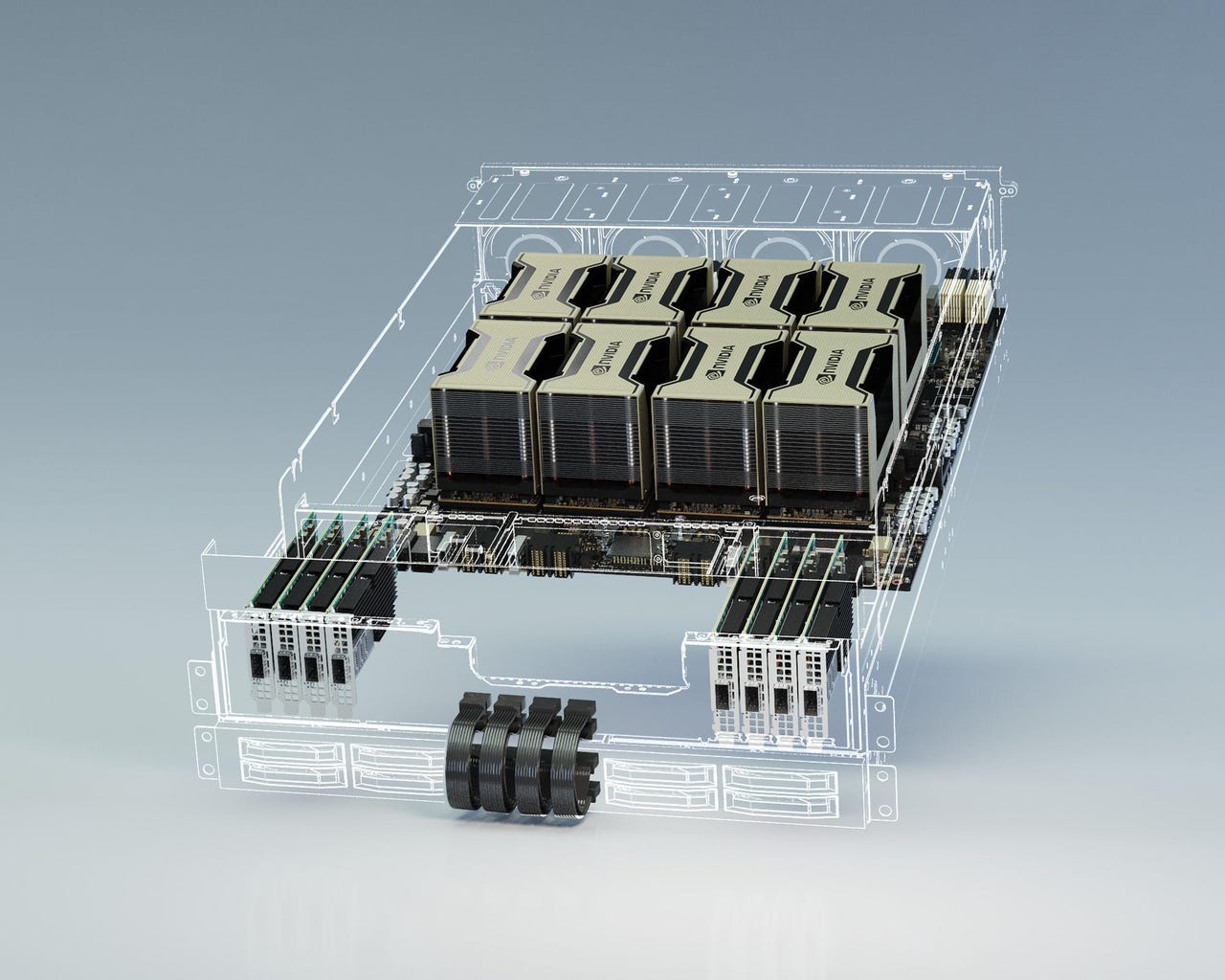

The "HGX" is the world's most complex motherboard, according to Nvidia CEO Jensen Huang, able to accommodate eight of the company's "A100" GPU chips, shown here as eight giant heat sinks.

Nvidia chief executive officer Jensen Huang on Thursday held the virtual version of the company's GTC annual conference and unveiled the latest architectural innovations for the company's flagship data center graphics processing unit, or GPU chips. As in past, the company has dipped into names of famous scientists, in this case, French scientist André-Marie Ampère, following on previous branding exercises that have included Volta and Pascal and Maxwell in recent years.

Primers

The first chip manufactured using the new architecture, A100, is already shipping to customers. Huang said all cloud providers, including Microsoft's Azure, Google GCP, and Amazon AWS will be using the new part, in servers of various sorts made for the thing. The US Department of Energy's Argonne National Laboratories, outside of Chicago, a big consumer of supercomputers, will be using the chips to research COVID-19. Nvidia is promoting, as with past data center parts, a DGX server of its own design to house multiple A100 chips as a multi-processor system, and multiple computer companies including Dell will build such machines.

Nvidia is also providing what Huang billed as "the most complex motherboard in the world," the HGX, to serve as the basis for DGX machines.

Also: AI on steroids: Much bigger neural nets to come with new hardware, say Bengio, Hinton, and LeCun

Nvidia has made HGX motherboards for prior chips, but Huang emphasized the complexity of this latest circuit board in a talk with reporters. "It has a million drill holes. 30,000 components. A kilometer of traces. And it's got all these copper heat pipes," Huang described the HGX board.

Making the board able to plug in eight A100 GPUs would vastly simplify the work of computer makers, Nvidia's partners, he said. "The NPN partners are thrilled," he said, referring to the Nvidia Partner Network.

"They're delighted that we engineered the whole thing, put together the whole thing for them, and yet they still have the ability to design and configure the overall computer and server differently."

ZDNet's Natalie Gagliordi has more on the Ampere unveiling.

As with past devices such as Volta, there's a big focus on artificial intelligence in the data center, and in this case, a bit of a twist: The intention with the new generation of chips is to provide one chip family that can serve for both "training" of neural networks, where the neural network's operation is first developed on a set of examples, and also for inference, the phase where predictions are made based on new incoming data.

That's a departure from today's situation where different Nvidia chips turn up in different computer systems for either training or inference. Nvidia is hoping to make an economic argument to AI shops that it's best to buy an Nvidia-based system that can do both tasks.

The HGX slots into the chassis of Nvidia's own "DGX" multi-processor server. Companies such as Dell will be making comparable multi-processor systems.

Servers using Ampere can be configured as eight-way machines, with eight physical A100 chips inside a DGX, for training; or, those eight GPUs can each be split into seven virtual GPUs. Each virtual GPU has more power than the company's T4 parts for AI inference. Hence, a DGX machine can be effectively eight times seven, or 56, chips for the inference task. (Training is typically more compute-intensive, requiring multiple chips in parallel, whereas inference can run on a single GPU.)

"The Ampere server could either be eight GPUs working together for training, or it could be fifty-six GPUs for inference," explained Huang. He said the "really exciting" thing is how the same machine that does training can displace 56 individual servers that do inference, thereby saving on the cost of the electronics of fifty-six individual machines.

Also: 'It's not just AI, this is a change in the entire computing industry,' says SambaNova CEO

"And so all of the overhead of additional memory and CPUs and power supplies, and, you know, all that stuff, of 56 servers basically collapsed into one [server]; the economics, the value proposition is really off the charts."

Nvidia has for years been looking to extend its dominance in AI training to the realm of AI inference, where Intel has traditionally dominated with its CPUs. Nvidia also faces new competition for its training dominance from a raft of startups making ever-large AI chips. Competitor Cerebras Systems last August unveiled the world's largest computer chip, made from a single 12-inch wafer of silicon. Nvidia said the 54 billion transistors contained in the A100 part make it the largest of all chips made with the so-called 7-nanometer process technology.

In addition to training and inference, Nvidia is making the argument that DGX machines can also take on the task of data preparation that precedes the training of AI. For that purpose, Ampere-based parts have special functions for Apache Spark, as described by ZDNet's Andrew Brust.

Huang said the A100 and HGX board and DGX systems, as well as clusters of DGX machines, called SuperPODs, would make possible much larger models of neural networks.

Will Knight of Wired magazine asked Huang, "Do you think the new platform will lead to a new generation of larger ML models such as much bigger versions of BERT?" referring to Google's broadly popular bi-directional Transformer, commonly used for natural language processing.

The kinds of neural net models that AI chips have to plan to handle, especially in the domain of natural language, are scaling to very, very large numbers of parameters, over a trillion, argues Tenstorrent founder and CEO Ljubisa Bajic.

BERT has almost a billion parameters, meaning mathematical transformations that act as a weighting of the individual data points; the adjustment of these weights collectively shape the response of the neural network to data. But the Transformer on which BERT was built has morphed into ever-larger language neural nets, including Nvidia's own Megatron LM, with 16.6 billion parameters, and Microsoft's Turing NLG. AI chipmakers such as Tenstorrent have said such models will increase to over a trillion parameters in the not-too-distant future.

Pioneers of deep learning forms of AI have suggested neural network models will expand greatly in the coming years, aided by greater computing horsepower.

"It is a foregone conclusion that we're going to see some really gigantic AI models because of the creation of Ampere and this generation," said Huang, in answer to Knight's question. He emphasized that models will grow in complexity as they combine multiple kinds of data, such as images and text -- a trend that certainly can be in some recent deep learning research.

"Most of the AI models we have today are single modality," said Huang. "They're either looking at images or they listen to sound, or you know some are starting to learn how to understand videos."

"But in the future, whether it's on healthcare, or whether it's in user interaction, whether it's robotics, in the future, the groundbreaking work will be multiple modality, multi-modal AI. And you're gonna have to learn from data of all different modalities at the same time."

Huang also said AI will increasingly be employed to design the larger neural networks as they escape the bounds of what can be designed by hand.

"Those kinds of models, the discovery of the architecture is extremely complicated," said Huang. "And so we're going to use AI to go discover AI models."