Artificial intelligence in business: The state of play and future prospects

Artificial Intelligence (AI) is often popularly envisaged as a super-smart humanoid robot. In practice, it's more commonly implemented as behind-the-scenes algorithms that process 'big' data to accomplish a range of relatively mundane tasks far more efficiently than humans can.

Few of us yet interact with bipedal robots or ride in driverless cars, but our daily lives are increasingly affected by AI systems that can recognise speech or images, or analyse patterns of online behaviour (to detect credit card fraud or serve up appropriate adverts, for example).

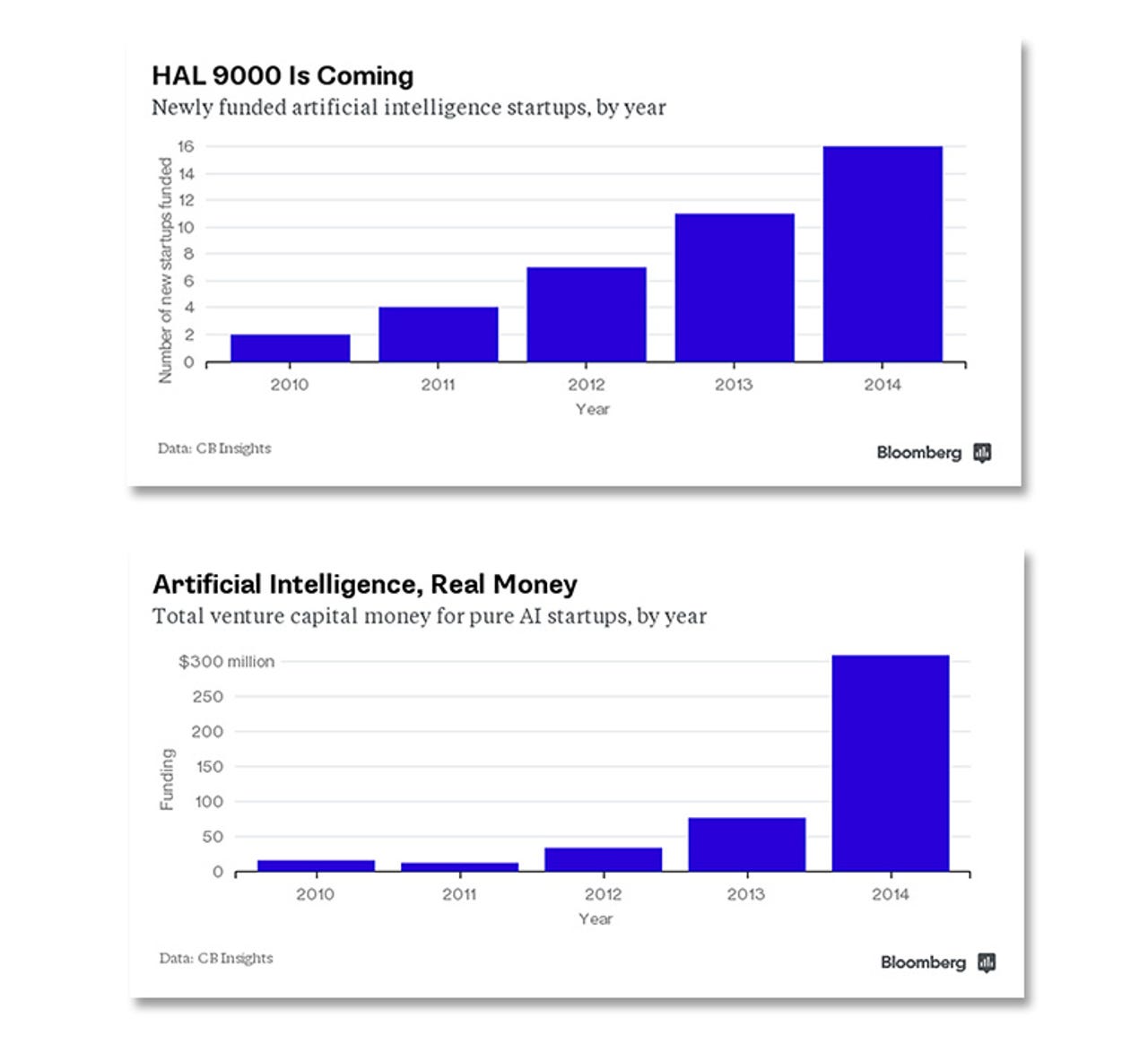

Little wonder that the recent boom in this kind of AI is being spearheaded by internet giants like Google, Microsoft, Amazon, Facebook, Baidu and others, and that there is currently a resurgence of startup activity in the sector:

Nor is it any surprise to find AI and automation featuring prominently on analyst firm Gartner's 2015 Hype Cycle for Emerging Technologies, which places autonomous vehicles, for example, right at the hype-driven 'Peak of Inflated Expectations':

Gartner also identifies 'Autonomous' as the sixth and final stage of an organisation's journey to becoming a 'digital business', highlighting a range of emerging technologies as particularly relevant: Autonomous Vehicles, Bioacoustic Sensing, Biochips, Brain-Computer Interface, Digital Dexterity, Human Augmentation, Machine Learning, Neurobusiness, People-Literate Technology, Quantum Computing, Smart Advisors, Smart Dust, Smart Robots, Virtual Personal Assistants, Virtual Reality, and Volumetric and Holographic Displays.

What follows hype, of course, is disillusionment: this has happened to AI before, and it's unlikely that all of the technologies flagged up in Gartner's Hype Cycle will make it to the mainstream. But there's enough current interest and activity to ensure that some will -- and when they do, there will be serious implications for businesses.

Last month's ZDNet special report explored the potential for job losses resulting from task automation. This month we're taking a closer look at why AI is such a hot topic, how it is implemented today, and how it's likely to develop.

Why AI is taking off

Solving AI-type problems requires, first and foremost, large amounts of processing power -- something that has been supplied in recent decades courtesy of Moore's Law, the observation that the number of transistors that can be crammed onto an integrated circuit doubles roughly every two years as transistors continue to shrink. Although the number of transistors per chip is still rising thanks to ever smaller feature sizes (we are now at 14nm, with 10nm and 7nm on the roadmap), heat and power consumption issues mean that metrics like clock speed and single-thread performance have not scaled accordingly since around 2005:

Intel's response in recent years has been to build more CPU cores into its general-purpose chips -- a strategy that's also central to makers of specialist, massively parallel graphics processors (GPUs) such as Nvidia. As well as delivering ever-increasing graphics performance, GPUs have turned out to be particularly well suited to training neural networks for machine learning, and especially deep learning. This is because large amounts of training data -- sets of images requiring classification, for example -- can be processed in parallel on GPUs, or clusters of GPUs, with thousands of computational cores. Here's an example of the typical performance boost GPU acceleration offers over general-purpose CPUs:

The Nvidia Tesla K40 referenced in the above chart has 2,880 CUDA cores and is a costly component. Nvidia's far more affordable Jetson TK1 'embedded supercomputer' is widely used by startups in this area: it's a Raspberry Pi-sized board built around a Tegra K1 SoC, which combines a 192-CUDA-core Kepler GPU with a '4-Plus-1' quad-core ARM Cortex-A15 CPU, and it comes with cuDNN, a library of primitives for deep neural networks.
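The data-parallel approach that makes GPUs such a good fit for training can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how CUDA or cuDNN actually work: a toy linear model y ≈ w·x + b stands in for a neural network, all function names are hypothetical, and a thread pool stands in for GPU cores (in CPython, threads will not actually speed up this CPU-bound arithmetic). The point is that each worker computes a partial gradient on its own shard of the training data, and combining the partial results gives the same gradient as processing the whole batch serially, which is why the work can be spread across thousands of cores.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def squared_error_gradient_sum(w, b, xs, ys):
    """Sum of squared-error gradients for the toy model y ~ w*x + b over one shard."""
    grad_w = grad_b = 0.0
    for x, y in zip(xs, ys):
        err = (w * x + b) - y
        grad_w += 2.0 * err * x
        grad_b += 2.0 * err
    return grad_w, grad_b


def serial_gradient(w, b, xs, ys):
    """Mean gradient computed over the whole batch in a single pass."""
    gw, gb = squared_error_gradient_sum(w, b, xs, ys)
    return gw / len(xs), gb / len(xs)


def data_parallel_gradient(w, b, xs, ys, n_workers=4):
    """Split the batch into shards, compute partial sums concurrently, then combine."""
    shard_size = math.ceil(len(xs) / n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        futures = [
            pool.submit(squared_error_gradient_sum, w, b,
                        xs[i:i + shard_size], ys[i:i + shard_size])
            for i in range(0, len(xs), shard_size)
        ]
        partials = [f.result() for f in futures]
    # Combining step (an "all-reduce" on real multi-GPU systems): sum, then average.
    gw = sum(p[0] for p in partials)
    gb = sum(p[1] for p in partials)
    return gw / len(xs), gb / len(xs)


if __name__ == "__main__":
    xs = [i / 100 for i in range(1000)]
    ys = [3.0 * x + 1.0 for x in xs]
    print(serial_gradient(0.0, 0.0, xs, ys))
    print(data_parallel_gradient(0.0, 0.0, xs, ys, n_workers=8))
```

The two printed gradients agree up to floating-point rounding, since only the order of summation differs.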

One of the most ambitious neural networking projects is IBM's SyNAPSE chip (named TrueNorth, and developed under DARPA's SyNAPSE programme), a non-von Neumann design that seeks to emulate the human brain in hardware. Built from 5.4 billion 28nm transistors, the latest SyNAPSE chip has 4,096 cores arranged in a 64-by-64 array supported by mesh networking, and has 1 million programmable neurons plus 256 million programmable synaptic connections. Because computation, memory and communication are closely integrated, and because it's an event-driven chip, it consumes much less power than conventional (von Neumann) designs: IBM claims just 70mW for the current SyNAPSE chip in real-time operation.
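Event-driven operation is a large part of why such a chip can run on milliwatts: a neuron's state is only updated when an input spike actually arrives, rather than on every tick of a global clock. The Python sketch below illustrates the idea with a leaky integrate-and-fire neuron, a common textbook spiking-neuron model. It is an assumption chosen for illustration, not IBM's actual neuron model, and the class name, time constant, threshold and input events are all hypothetical.

```python
import math


class EventDrivenLIFNeuron:
    """Leaky integrate-and-fire neuron that does work only when an input event arrives."""

    def __init__(self, tau_ms=20.0, threshold=1.0):
        self.tau_ms = tau_ms          # membrane leak time constant (hypothetical value)
        self.threshold = threshold    # firing threshold (hypothetical value)
        self.potential = 0.0
        self.last_event_ms = 0.0
        self.updates = 0              # how many times the neuron state was touched

    def receive(self, t_ms, weight):
        """Apply the leak for the whole idle interval in one step, add the input, maybe fire."""
        elapsed = t_ms - self.last_event_ms
        self.potential *= math.exp(-elapsed / self.tau_ms)
        self.potential += weight
        self.last_event_ms = t_ms
        self.updates += 1
        if self.potential >= self.threshold:
            self.potential = 0.0      # reset after emitting a spike
            return True
        return False


def run(events):
    """Feed (time_ms, synaptic_weight) events in time order; return spike times and update count."""
    neuron = EventDrivenLIFNeuron()
    spikes = []
    for t_ms, weight in events:
        if neuron.receive(t_ms, weight):
            spikes.append(t_ms)
    return spikes, neuron.updates


if __name__ == "__main__":
    events = [(1.0, 0.6), (2.0, 0.6), (50.0, 0.3), (100.0, 0.6)]
    spikes, updates = run(events)
    print(spikes, updates)  # [2.0] 4
```

A clock-driven simulation of the same 100 ms at 1 ms resolution would update the neuron 100 times; here it is touched only four times, once per input event. The two closely spaced inputs at 1 ms and 2 ms push it over threshold, while the widely spaced later inputs leak away before they can add up.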

IBM's goal is to build a neuro-synaptic chip with 10 billion neurons and 100 trillion synapses, creating "a synthesis of software and silicon inspired by the brain," as IBM Chief Scientist for Brain-inspired Computing Dharmendra Modha puts it. But even that falls well short of the actual human brain, which contains some 100 billion neurons and up to 1,000 trillion synaptic connections, and whose detailed operation is still far from fully understood. The 'singularity' may be a way off yet, but the current pace of development puts it firmly on the horizon:

Apart from processing power, the other key driver of the recent AI boom has been the availability of cheap, plentiful storage for the large data sets needed to train neural networks and keep them fed with information for analysis. The storage equivalent of Moore's Law is Kryder's Law, formulated in 2005, which holds that the areal density of hard disk drives will more than double every two years, leading to similar exponential increases in capacity and decreases in cost per gigabyte. This observational 'law' held up well until around 2010, since when the pace of hard disk development has slackened somewhat.
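Kryder's Law, like Moore's Law, describes exponential growth with a fixed doubling period, so its consequences are easy to work through. The sketch below assumes, as a simplification, that density doubles exactly every two years (the law's "more than double" makes this a lower bound) and that cost per gigabyte falls in inverse proportion to areal density. The function names, the $0.10/GB starting price and the four-year doubling period in the second example are hypothetical figures used only to show how much a slower Kryder rate compounds, not measured values.

```python
def growth_factor(years, doubling_period_years=2.0):
    """Multiplicative growth after `years` if the quantity doubles once per period."""
    return 2.0 ** (years / doubling_period_years)


def projected_cost_per_gb(cost_now, years, doubling_period_years=2.0):
    """Assumes cost per GB falls in inverse proportion to areal density (a simplification)."""
    return cost_now / growth_factor(years, doubling_period_years)


if __name__ == "__main__":
    # Ten years of doubling every two years: 2**5 = 32x the density.
    print(growth_factor(10))                          # 32.0
    # Hypothetical slower rate: doubling only every four years gives about 5.7x.
    print(round(growth_factor(10, 4.0), 2))           # 5.66
    # A hypothetical $0.10/GB today falls to about $0.003/GB after ten years at the faster rate.
    print(round(projected_cost_per_gb(0.10, 10), 4))  # 0.0031
```

The same `growth_factor` function applies unchanged to transistor counts under Moore's Law; only the interpretation of the quantity differs. The gap between 32x and roughly 5.7x over a decade shows why even a modest lengthening of the doubling period matters for storage costs.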

According to blogger David Rosenthal, this reduced Kryder rate is due to a series of successively longer technology transitions -- most recently to HAMR (Heat Assisted Magnetic Recording) and BPM (Bit Patterned Media). As a result, storage may not prove to be quite as cheap and infinitely capacious as some cloud service providers would have it.

AI in business

As a recent report from Deloitte makes clear, technology advances have always eliminated some jobs and created others, leaving social change -- in the form of displaced 'legacy' workers -- in their wake. There's no reason to suppose that this trend will not continue, says Deloitte: "We cannot forecast the jobs of the future, but we believe that jobs will continue to be created, enhanced and destroyed much as they have in the last 150 years."

This is broadly the view of another August 2015 report, from analyst firm Forrester, which contends: "While automation will lead to a net loss of 9.1 million US jobs by 2025, that's nowhere near the 69 million that many pundits have predicted." Furthermore, Forrester foresees a partnership rather than an adversarial relationship: "Advances in automation technologies will mean that humans increasingly work side by side with robots, software agents and other machines."

Right now, it seems that fear of technology-related job loss is concentrated at the lower end of the income scale, as this recent YouGov survey in the US shows:

However, if the current AI boom continues, there's every likelihood that white-collar workers, and even the denizens of the C-suite, will be affected by the rise of 'smart machines'.

For example, Kensho is a startup that claims to be "the world's first computational knowledge engine for the financial industry" -- a system that uses massively parallel statistical computing, natural-language inputs, big data and machine learning to answer complex financial questions posed in plain English. It's a development that could, potentially, threaten the job security of highly paid 'quants' who are employed to model market dynamics on Wall Street, in the City of London and in other financial centres.

Another white-collar job that could be affected is that of the jobbing journalist, thanks to startups like Narrative Science, whose advanced natural-language generation (NLG) platform Quill can query multiple data sources and apply business rules to produce content which, the company claims, is indistinguishable from that written by a human. Reports -- examples include company financials and sports results -- can be produced at scale, and are adaptable in terms of tone, style and length depending on the target audience. A similar service is provided by Automated Insights and its Wordsmith NLG platform.
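Platforms like Quill and Wordsmith are proprietary, but the general idea of data-to-text generation driven by business rules can be sketched simply. The example below is a hypothetical, template-based illustration, not either company's method or API: a rule picks a verb from the size of a revenue change, and a `style` parameter switches between a full sentence and a terse summary, echoing the adaptable tone and length described above. The class and function names, the company and the figures are all invented.

```python
from dataclasses import dataclass


@dataclass
class QuarterlyResult:
    company: str
    period: str
    revenue_m: float        # revenue this period, in $ millions
    prior_revenue_m: float  # revenue in the comparison period, in $ millions


def change_verb(pct):
    """Business rule: choose wording according to the size of the change."""
    if pct >= 10:
        return "surged"
    if pct > 0:
        return "rose"
    if pct == 0:
        return "held steady"
    if pct > -10:
        return "slipped"
    return "plunged"


def write_report(r, style="full"):
    """Render one data record as text in the requested style."""
    pct = (r.revenue_m - r.prior_revenue_m) * 100 / r.prior_revenue_m
    if style == "brief":
        return f"{r.company} {r.period} revenue: ${r.revenue_m:.1f}m ({pct:+.1f}%)."
    verb = change_verb(pct)
    if pct == 0:
        return f"{r.company}'s revenue {verb} in {r.period} at ${r.revenue_m:.1f} million."
    return (f"{r.company}'s revenue {verb} {abs(pct):.1f}% in {r.period} "
            f"to ${r.revenue_m:.1f} million.")


if __name__ == "__main__":
    q2 = QuarterlyResult("Acme Corp", "Q2 2015", 120.0, 100.0)
    q3 = QuarterlyResult("Acme Corp", "Q3 2015", 95.0, 100.0)
    print(write_report(q2))                # Acme Corp's revenue surged 20.0% in Q2 2015 to $120.0 million.
    print(write_report(q3))                # Acme Corp's revenue slipped 5.0% in Q3 2015 to $95.0 million.
    print(write_report(q3, style="brief")) # Acme Corp Q3 2015 revenue: $95.0m (-5.0%).
```

Real NLG platforms go well beyond fixed templates, but the separation shown here, with data records feeding rules that then feed phrasing, is what lets one system produce many reports at scale.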

Even medicine, the ultimate white-collar (or white coat) profession, is putting AI to use: IBM's Watson for Oncology system, trained at Memorial Sloan Kettering (MSK), for example, can parse patient information written in plain English and draw on multiple big-data sources to deliver ranked treatment recommendations with links to supporting evidence.

At the moment, these kinds of systems seem more likely to augment rather than replace humans, as Forrester's aforementioned report (The Future of Jobs, 2025: Working Side By Side With Robots) suggests.

This view is supported by a Narrative Science survey of 200 managers, directors and C-level executives, entitled 2015 State of Artificial Intelligence & Big Data in the Enterprise. The headline finding is that 80 percent of respondents believe AI improves worker performance and creates jobs. Respondents most often characterised AI as 'Technology that thinks and acts like humans', and reported that voice recognition and response systems were the most commonly implemented AI solutions in their enterprises:

Analyst firm Gartner looked at the business implications of AI in its Top 10 Strategic Predictions for 2015 and Beyond: Digital Business Is Driving 'Big Change' report. Gartner's predictions fall into three groups: 'Machines are taking a more active role in enhancing human endeavours'; 'Digitalized things are making assisted economic decisions'; and 'Renovating the customer experience is a digital priority'. Here are the key predictions relating to the first two groups, arranged in chronological order:

| Year | Prediction |
|------|------------|
| 2016 | More than $2 billion in online shopping will be performed exclusively by mobile digital assistants |
| 2017 | A significant and disruptive digital business will be launched that was conceived by a computer |
| 2018 | Digital businesses will require 50% fewer business process workers and 500% more key digital business jobs, compared with traditional models |
| 2018 | The total cost of ownership for business operations will be reduced by 30% through smart machines and industrialized services |
| 2020 | Developed world life expectancy will increase by a half-year, due to the widespread adoption of wireless health monitoring technology |

Where AI is going

Following the recent exposé of Amazon's challenging workplace culture, there has been much debate about work-life balance in data-driven enterprises such as the pioneering online retailer. Although Amazon CEO Jeff Bezos disputes the grim picture painted by The New York Times, it's important that, as smart machines take an ever bigger role in the enterprises of the future, managers remember to factor the happiness of the remaining humans into their business processes.

What's certain is that developments in the AI/machine intelligence space are already reshaping industries, enterprises and human-computer interactions, as this useful infographic from Bloomberg Beta investor Shivon Zilis makes clear:

Zilis's informative blog also notes: "People say that data scientists are unicorns in short supply. The talent crunch in machine intelligence will make it look like we had a glut of data scientists." If you're looking for a tech-related career option, take note.

And what about the much-vaunted 'singularity', where machine intelligence finally reaches human, or even super-human, levels? In a May 2015 interview, neural networking pioneer Geoffrey Hinton, who divides his time between being a Distinguished Researcher at Google and a Distinguished Emeritus Professor at the University of Toronto, took an optimistic line: "We're a long way off, so I don't think it's something I'm going to have to worry about, but it's very exciting -- and I think the consequences of that depend entirely on politics, on what people decide to use it for."

Responding to warnings about autonomous self-supporting AIs from the likes of Stephen Hawking and Elon Musk, Hinton added: "It ought to be that when you get these wonderful new abilities, it's going to be good for people...we need a political system where it's going to be good for people when we get better at doing things rather than bad for people."

Outlook

AI, primarily in the form of deep-learning algorithms running on powerful graphics processing chips, is already widely used in enterprises. Those enterprises will become increasingly data-driven as computing resources continue their historic price/performance/availability trends and the algorithms become ever more sophisticated.

Opinion is currently divided on the effect that widespread AI adoption will have on the job market and workplace culture, but what's certain is that AI will continue to develop rapidly and that possible economic and social upheaval needs to be on the political agenda.

As far as AI is concerned, we are definitely living in the proverbial 'interesting times'.