Could bug bounties help find biased algorithms? This competition put the idea to the test

Kulynych earned the top reward thanks to his discovery that Twitter's algorithm tends to apply a "beauty filter".

Twitter has been trialing an unprecedented method to find the hidden biases in its own algorithms.

The social media platform has enrolled external researchers in a one-of-a-kind competition, in which winning participants were those that could come up with the most compelling evidence demonstrating an unfair practice that is carried out by one of the company's algorithms.

Artificial Intelligence

Called the "algorithmic bias bounty challenge", the initiative provided participants with full access to the code that underpins Twitter's image-cropping algorithm, which determines how pictures should be cropped to be easily viewed when they come up on a user's timeline.

SEE: The Pentagon says its new AI can see events 'days in advance'

The model is programmed to estimate what a person is most likely to want to look at in a picture – that is, the most salient part of the image, which is why it is also known as the saliency algorithm. The goal of the challenge was to find out whether the saliency algorithm makes harmful or discriminatory choices, with the promise of winning prizes ranging from $500 to $3,500.

Security and privacy engineering student Bogdan Kulynych bagged the top prize. The researcher discovered that the algorithm tends to apply a "beauty filter": it favors slimmer, younger faces, as well as those with a light or warm skin color and smooth skin texture, and stereotypically feminine facial features.

"I saw the announcement on Twitter, and it seemed close to my research interests – I'm currently a PhD student and I focus on privacy, security, machine learning and how they intersect with society," Kulynych tells ZDNet. "I decided to participate and managed to put something up in one week."

It is not news that Twitter's saliency algorithm is problematic. Last year, the platform's users started noticing that the technology tended to choose white people over black people when cropping, as well as male faces over female faces. After carrying out some research of its own, the company admitted that the model made unfair choices and removed the saliency algorithm altogether, launching instead a new way to display standard aspect ratio photos in full.

But instead of definitively putting the algorithm away, Twitter decided to make the model's code available to researchers to see if any other harmful practices might emerge.

If the idea is reminiscent of the bug bounty programs that are now the norm in the security industry, that's because the concept is based on exactly the same thing.

In security, bug bounties see companies calling on security experts to help them identify vulnerabilities in their products before hackers can exploit them to their advantage. And in the field of AI, experts have long been thinking about transposing the concept to detecting biases in algorithms.

Twitter is the first company to have transformed the concept into a real-life initiative, and according to Kulynych, the method shows promise.

"We managed to put something up in a very short period of time," Kulynych tells ZDNet. "Of course, the fast pace means that there is the possibility of a false positive. But inherently it is a good thing because it incentivizes you to surface as many harms as possible, as early as possible."

This contrasts with the way that academics have been trying to demonstrate algorithmic bias and harm so far. Especially when auditing an external company's models, this process can take months or even years, partly because researchers have no access to the algorithm's code. The algorithm bias bounty challenge, on the other hand, is a Twitter-led initiative, meaning that the company opened access to the model's code to participants wishing to investigate.

SEE: Can AI improve your pickup lines?

Importantly, the social media platform's challenge was the first real-life test of a concept that has so far mostly existed in the imagination of those studying ethical AI. And as with most abstract ideas, one of the major sticking points was that there exists no methodology for algorithmic bias bounties. Unlike security bug bounties, which have well-established protocols, rules and standards, there are no best practices when it comes to detecting bias in AI.

Twitter has, therefore, produced an industry-first, with in-depth guidance and methodology for the challenge participants to follow when trying to identify potential harms that the saliency algorithm might introduce – and it starts with defining exactly what constitutes a harm.

"In the description of the competition, there was a taxonomy of harms that was really helpful and rather nuanced," says Kulynych. "It was even broader than traditional notions of bias."

The harms defined by Twitter feature denigration, stereotyping, misrecognition or under-representation, but also reputational, psychological or even economical harm.

The social media platform then asked participants to carry out a mix of qualitative and quantitative research, and to present their findings in a read-me file describing their results, along with a GitHub link demonstrating the harm. To judge the most convincing piece of research, Twitter considered criteria such as the number of affected users or the likelihood of the harm occurring.

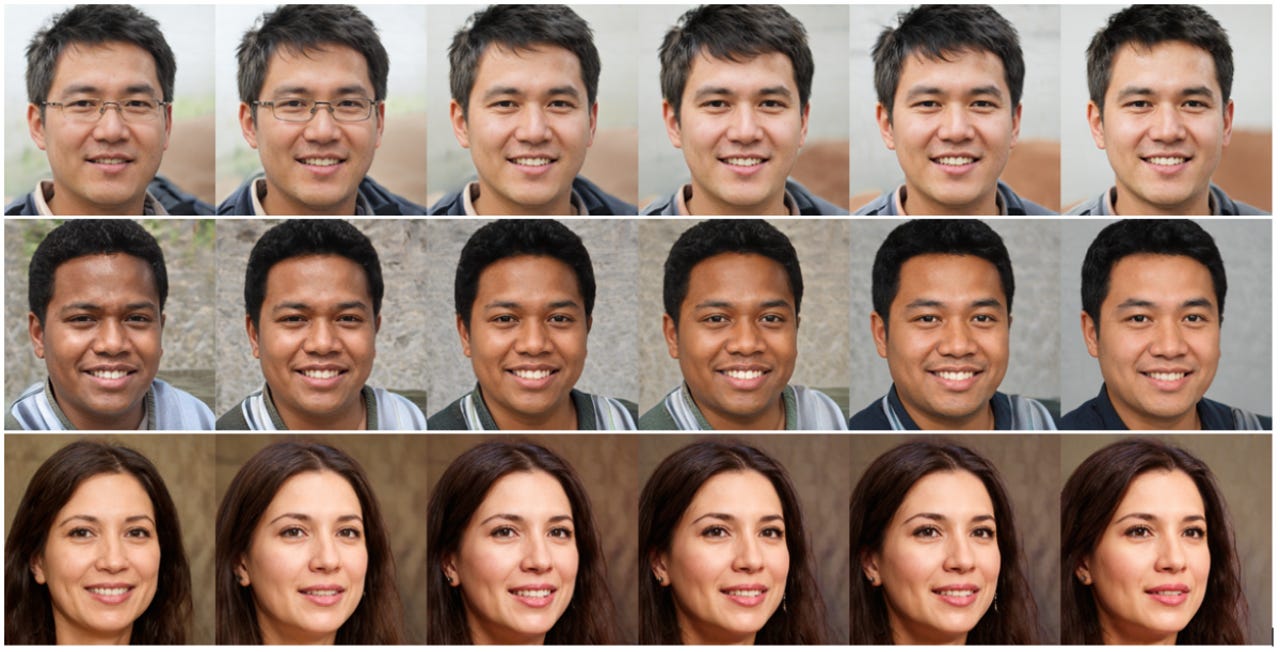

Kulynych started by generating a set of faces using a highly sophisticated face-generation algorithm, and then slightly modified some of the facial features, to find out what was most likely to grab the attention of the saliency algorithm. He ended up with a set of faces that all achieved "maximum saliency" – and they seemed to reflect certain beauty standards engrained in the model.

For example, in 37% of cases, the algorithm's attention was better caught when lightening the skin, or when making it warmer, more saturated and more high-contrast. In a quarter of the cases, saliency increased by making features more stereotypically feminine; while 18% of the time, younger faces got a better crop. The same statistic applied to slimmer faces.

In other words, facial features that don't conform with certain "beauty" standards that are set by Twitter's algorithm are less likely to get the spotlight when images get cropped.

Of course, the fast turnaround means that the experiment is not as rigorous as a fully fledged academic study – a lesson learnt for future challenges. "For future companies doing this, I think more time would be needed to put together a quality submission," says Kulynych.

Twitter itself has acknowledged that this is only a first step. When announcing the challenge, the company's product manager Jutta Williams said that the initiative came with some unknowns, and that learnings would be applied for the next event.

SEE: Bigger quantum computers, faster: This new idea could be the quickest route to real world apps

Algorithmic bias bounties are seemingly becoming real, therefore, and researchers can only hope that other tech companies will follow the lead – but with some caveats, says Kulynych.

"I don't mean to diminish the achievements of the team, this competition is a huge step," explains Kulynych. "And the problem of algorithmic bias is very important to address. I think companies are incentivized to fix this problem, which is good. But the danger here is to forget about everything else."

That is, whether the algorithm should exist at all. Some models have been found to generate significant harm, whether it is because they spread misinformation or impact privacy. In those cases, the question should not be to find out how to fix the algorithm – but whether the algorithm perpetuates harm just by existing.

Twitter did frame its algorithmic bias bounty challenge through the prism of "harms" rather than "bias"; but as the concept grows, Kulynych foresees the possibility of some companies hiding behind the façade of a bias bounty to legitimize the existence of algorithms that, by design, are harmful.

For example, even if a facial recognition system should be made to be completely unbiased, it doesn't exclude the question of whether the technology poses a privacy risk for those that are subjected to it.

"The big thing about bias is it pre-supposes that the system should exist, and if you only fix bias, then everything about the system will be fine," says Kulynych. "That is not a new thing – everyone has been doing ethics-washing and fairness-washing for some time."

Algorithmic bias bounties were theorized as a method that could serve those most impacted by discriminatory algorithmic harms – and they could be a powerful tool in making technology fairer, so long as they don't become another corporate tool instead.