Dana-Farber Cancer Institute finds main GPT-4 concerns include falsehoods, high costs

In a landmark, first-of-its-kind study detailed this week by the prestigious New England Journal of Medicine, researchers at the renowned Dana-Farber Cancer Institute in Boston describe several months of deploying OpenAI's GPT-4 across the institute's operations.

The researchers found that users' biggest concern was the chatbot's inaccurate responses, and that one of the biggest issues to overcome was the high cost of querying it.

The study's results were reported by lead author Renato Umeton and colleagues on the Dana-Farber Generative AI Governance Committee in NEJM AI, an imprint of the journal.

"Dana-Farber personnel are now using GPT4DFCI to streamline work and conduct research," wrote Umeton and team. "To our knowledge, it was the first LLM provisioned for general use by the workforce of an academic medical center or hospital."

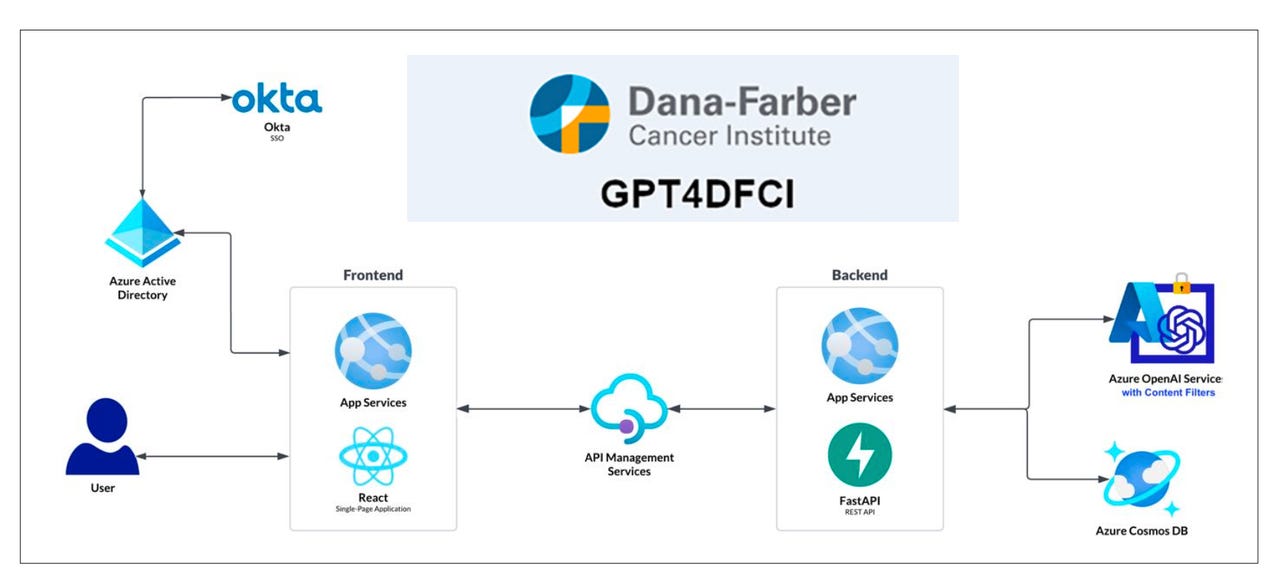

The team combined five different model sizes of GPT-4, including GPT-4 Turbo, into a single tool, "GPT4DFCI," which runs on Microsoft's Azure OpenAI Service. GPT4DFCI is "designed with a simple, ChatGPT-like user interface" and is "private, secure, and HIPAA-compliant and HIPAA-auditable in its implementation."

Over a period of several months, the team rolled out the system across Dana-Farber and provided guidance to the institute's 12,500 staff intended to "explain the risks of public LLMs and clarify relevant institute policies, including that PHI [protected health information], any part of a medical record, or confidential institute information may not be entered into public AI platforms."

The study's authors surveyed users about what they used the tool for and what concerns they had. "The most reported 'primary uses' of AI were 'Extracting or searching for information in notes, reports, or other documents/files' and 'Answering general knowledge questions'," Umeton and team reported.

"The operating costs of Azure OpenAI Service can add up quickly," they wrote, listing cost among the risks. "We expect the issue of cost to become a major consideration at our institution as we begin enabling much higher-throughput research use cases."

In conclusion, Umeton and team wrote that "[i]t is too early to judge whether GPT4DFCI will improve our patients' outcomes and experiences, the well-being of our staff, the equity of our clinical care and research, and our cost-effectiveness. However, by providing our workforce community with opportunities to test LLMs in compliance with our policies and applicable laws, in a fully auditable manner, we believe we can appropriately manage risk while advancing our discovery and clinical missions in service to patients."

The code for GPT4DFCI is posted on GitHub.