Dissecting C3.ai’s secret sauce: less about AI, more about fixing Hadoop

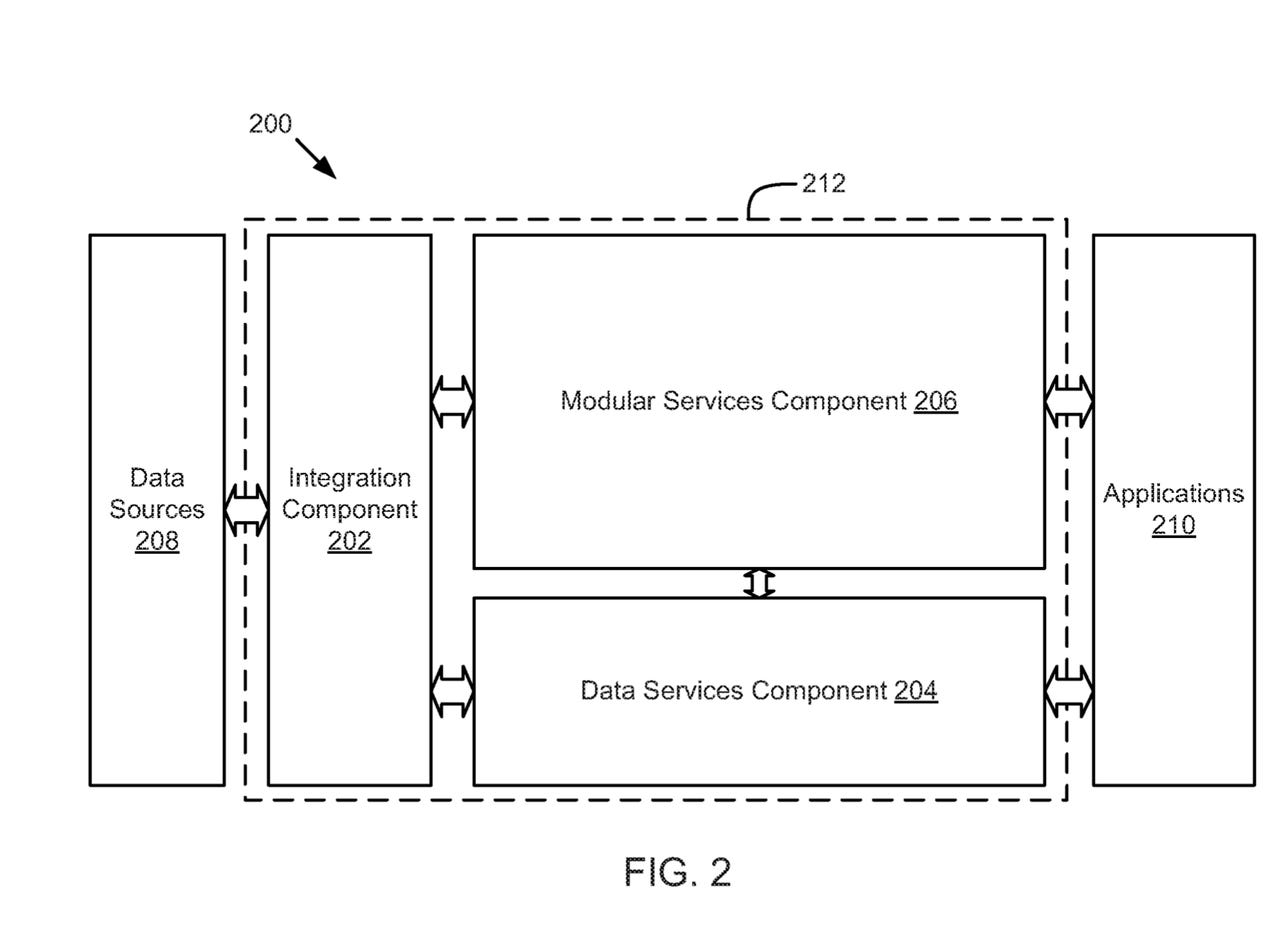

From C3.ai's U.S. patent number 10,824,634, awarded this month. The diagram shows what the company calls a system of integration. A dotted line represents an enclosing wrapper of types that can be referenced to simplify the development of applications that join together resources such as a data integration unit, a machine learning unit, and a MapReduce component.

C3.ai, the software company founded by software industry legend Tom Siebel that filed on Friday to go public, describes its purpose in life as applying artificial intelligence to sales and marketing. What it actually appears to be doing, however, is fixing the sins of infrastructure software such as Hadoop and its commercial implementations by Cloudera and others.

ZDNet examined what C3.ai calls its "secret sauce," the artificial intelligence suite that it says speeds development of CRM.

It turns out the secret sauce is really more about platform-as-a-service than about AI per se. That's funny, given that machine learning, a form of AI, is mentioned fifty-five times in the C3 prospectus, while platform is mentioned only once, in the company's self-description: "We believe the C3 AI Suite is the only end-to-end Platform-as-a-Service allowing customers to design, develop, provision, and operate Enterprise AI applications at scale."

What C3.ai has invented is a set of building blocks for assembling a system that analyzes data from a variety of sources: traditional databases, Internet sources such as social media, and, perhaps most important, sensors, including the kinds of sensors industrial companies might build into equipment in the field that they want to monitor.

The building blocks C3.ai makes are tools that developers can access programmatically, and consume on a subscription basis, with some additional metered use.

The actual artificial intelligence component, at least based on C3.ai's published materials, is fairly routine.

The secret sauce is described in two patents referenced in the IPO prospectus, U.S. patent numbers 10,817,530 and 10,824,634, both granted this year. Both patents describe "Systems, methods, and devices for an enterprise Internet-of-Things application development platform," or simply a "platform."

"Next-generation IoT applications require a new enterprise software platform," both documents assert.

As both patents lay out, the platform is specifically a PaaS for aggregating data coming from sensors:

The IoT Platform disclosed herein is a platform as a service (PaaS) for the design, development, deployment, and operation of next generation cyberphysical software applications and business processes. The applications apply advanced data aggregation methods, data persistence methods, data analytics, and machine learning methods, embedded in a unique model driven architecture type system embodiment to recommend actions based on real-time and near real-time analysis of petabyte-scale data sets, numerous enterprise and extraprise data sources, and telemetry data from millions to billions of endpoints.

The patents lay out in detail the failures of enterprise open-source infrastructure such as Hadoop, citing Gartner research on companies' failure to adopt Hadoop at scale. And companies that re-package Hadoop, such as Cloudera, "have failed to integrate their Hadoop components into a cohesive software development environment," C3.ai claims.

The result, according to the patents, is that "to date, there is no successful large-scale enterprise IoT application deployments using the Apache Hadoop." The patents go on to say that technologies including Cassandra, CloudStack, HDFS, Continum, Cordova, Pivot, Spark, Storm and ZooKeeper are components that should have been put together but haven't been.

"The many market claims aside, a close examination suggests that there are few examples, if any, of enterprise production-scale, elastic cloud, big data, and machine learning IoT applications that have been successfully deployed in any vertical market using these types of components."

And so, C3.ai is creating "a new kind of technology stack."

C3.ai's paradigmatic example is shown in the diagram of a multi-dimensional, non-linear classifier. It's pretty standard machine learning stuff, achieved, in this example, using a familiar approach called gradient-boosted decision trees.

That technology stack, according to the patents, makes heavy use of domain-specific languages to assign programming object types to sensor data and other resources.

The point is to simplify how massive data sources, and the analytics programs that operate on them, are put together. Using types, and using a model, developers can avoid stitching together all the parts of the program via API calls.

"A type is the definition of a potentially complex object that the system understands," says C3.ai.

In other words, it's kind of like wrapping object-oriented class interfaces around a lot of infrastructure components, so that programmers can make calls to big data in a simpler way. Addressing one type may set off a chain of processes that fetch data from many sensor endpoints, possibly from data stores such as a content management system, combine them, and pass them through an analysis program, for example.
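To make the analogy concrete, here is a minimal Python sketch of a "type" that hides a fan-out to several data sources behind a single method call. It illustrates the general idea only and is not C3.ai's type system: `MeterType`, `evaluate`, and the lambda "sources" standing in for sensor endpoints and a content management system are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in for a sensor endpoint or data store: returns readings.
Source = Callable[[], List[float]]


@dataclass
class MeterType:
    """A hypothetical 'type' whose callers never see where its data lives."""
    sources: Dict[str, Source]
    analyze: Callable[[List[float]], float]

    def evaluate(self) -> float:
        # One call fans out to every registered source...
        readings: List[float] = []
        for fetch in self.sources.values():
            readings.extend(fetch())
        # ...combines the results and passes them through the analysis step.
        return self.analyze(readings)


meter = MeterType(
    sources={
        "sensor_a": lambda: [1.0, 2.0],
        "sensor_b": lambda: [3.0],
        "archive": lambda: [4.0, 5.0],  # e.g. a content management system
    },
    analyze=lambda xs: sum(xs) / len(xs),  # placeholder analysis: the mean
)
print(meter.evaluate())  # 3.0
```

The caller addresses one object; which endpoints are queried and how the data is combined stays inside the type, which is the simplification the patents describe.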

C3.ai claims this typing system is made rigorous by models the company has devised of the business logic of specific industries. Presumably, those industry-specific models produce the types that the domain-specific language uses to address all the resources C3.ai has.

All this type work lets C3.ai route sensor data through a series of analysis components. One, referred to as a "continuous data processing component," is basically a wrapper around MapReduce. That MapReduce program can feed data into another component, a machine learning system, that analyzes the data repeatedly. That can be handy because some sensor data may need to pass through a machine learning program multiple times.
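A toy, single-process Python sketch of that pipeline shape follows: a map step parses raw meter records, a reduce step totals consumption per meter, and the result feeds an iterative learner that makes repeated passes over the data. The record format, the function names, and the choice of one-dimensional two-cluster k-means as the "machine learning system" are assumptions for illustration; the patents do not specify them, and a real MapReduce job would distribute the map and reduce phases across machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[str, float]  # hypothetical (meter_id, kWh) record


def map_phase(records: Iterable[str]) -> Iterable[Reading]:
    # Parse raw "meter_id,kwh" lines into key/value pairs.
    for line in records:
        meter_id, kwh = line.split(",")
        yield meter_id, float(kwh)


def reduce_phase(pairs: Iterable[Reading]) -> Dict[str, float]:
    # Group by key (the "shuffle") and sum each meter's consumption.
    totals: Dict[str, float] = defaultdict(float)
    for meter_id, kwh in pairs:
        totals[meter_id] += kwh
    return dict(totals)


def two_means(values: List[float], passes: int = 10) -> Tuple[float, float]:
    # Toy iterative learner: 1-D k-means with k=2, one pass over the data per iteration.
    lo, hi = min(values), max(values)
    for _ in range(passes):
        a = [v for v in values if abs(v - lo) <= abs(v - hi)]
        b = [v for v in values if abs(v - lo) > abs(v - hi)]
        lo = sum(a) / len(a) if a else lo
        hi = sum(b) / len(b) if b else hi
    return lo, hi


raw = ["m1,1.0", "m2,1.5", "m1,0.5", "m3,9.0", "m4,8.0", "m2,0.5"]
totals = reduce_phase(map_phase(raw))
centers = two_means(list(totals.values()))
print(totals, centers)
```

Here the per-meter totals are 1.5, 2.0, 9.0 and 8.0 kWh, and the learner settles on cluster centers of 1.75 and 8.5 after its passes.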

Given all this emphasis on infrastructure, it is perhaps not totally surprising that what turns up as AI in the patents are somewhat mundane forms of machine learning.

The patents refer to the AI as happening within a "machine learning component." That component uses some "state-of-the-art" machine learning libraries, the patents say, "including public libraries such as those built upon the Apache Spark, R, and Python systems." Those include IPython Notebook, the popular scikit-learn library, and R packages for standard machine learning approaches such as gradient boosting.

C3.ai says these libraries are complemented by "custom-built, highly optimized and parallelized implementations of many standard machine learning algorithms, such as generalized linear models, orthogonal matching pursuit, and latent variable clustering models."

C3.ai's IPO prospectus gives a graphical representation of the platform-as-a-service the company has built to analyze data from sensors throughout IoT infrastructure.

All those things are machine learning techniques that have been around for ages. In fact, the paradigmatic example of machine learning provided by C3.ai sounds, on its face, like something out of a machine learning textbook.

The company discusses using machine learning to tell if someone is committing electricity theft by looking at data from electric meters. Presumably, it's the kind of application that a large utility customer would need help with.

The problem is one of creating a classifier that can take in several different data points from the meter and produce a value indicating whether theft is going on.

The description sounds daunting:

Detecting electricity theft is a highly non-trivial task, and there are many separate features that may increase or decrease the likelihood that a particular meter is exhibiting the signs of a user stealing energy […] Each meter can then be viewed as a point lying in "n-dimensional" space […]

This, however, describes virtually any machine learning problem. In the example problem, electricity theft, the solution is to apply the standard technique of gradient boosting to a set of 756 features, partitioning the data into regions of positive or negative examples: theft or no theft.
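For reference, one common textbook formulation of gradient boosting for a binary label such as theft/no theft is sketched below. It is the generic technique, not a derivation published by C3.ai; the learning rate $\nu$, the logistic link $\sigma$, and a decision threshold of 0.5 on $\hat{p}$ are assumptions. $F_0$ is the initial log-odds of theft, $M$ is the number of trees, and each $h_m$ is a small regression tree fitted to the residuals $r_i^{(m)}$.

$$
F_M(\mathbf{x}) = F_0 + \nu \sum_{m=1}^{M} h_m(\mathbf{x}), \qquad
\hat{p}(\text{theft} \mid \mathbf{x}) = \sigma\big(F_M(\mathbf{x})\big) = \frac{1}{1 + e^{-F_M(\mathbf{x})}}
$$

$$
r_i^{(m)} = y_i - \sigma\big(F_{m-1}(\mathbf{x}_i)\big), \qquad y_i \in \{0, 1\}, \quad \mathbf{x}_i \in \mathbb{R}^{756}
$$

Because each tree splits on thresholds of single features, the combined decision boundary is a piecewise, axis-aligned surface in the 756-dimensional space rather than a single flat hyperplane.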

As C3.ai indicates, the real art to this stuff is applying it at high volume. The company describes one such classifier, a gradient-boosted regression tree, as having "a weighted combination of 70 trees, each with a maximum depth of 5 nodes."
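C3.ai does not publish its implementation, so the following is a generic, from-scratch Python sketch of gradient-boosted regression trees for a binary label, using the two numbers the company does give: 70 trees, each with a maximum depth of 5. Everything else is an assumption: the synthetic two-feature data standing in for 756 meter features, the learning rate of 0.1, leaves that store the mean residual (a plain gradient step; libraries such as scikit-learn and XGBoost compute more refined leaf values), and all function names.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_tree(X, r, depth=0, max_depth=5, min_leaf=2):
    """Greedy regression tree fitted to residuals r; each leaf stores the mean residual."""
    n = len(r)
    mean = sum(r) / n
    if depth >= max_depth or n < 2 * min_leaf:
        return ("leaf", mean)
    tot, tot_sq = sum(r), sum(v * v for v in r)
    best = None  # (sse, feature, threshold)
    for f in range(len(X[0])):
        order = sorted(range(n), key=lambda i: X[i][f])
        s = sq = 0.0
        for k in range(n - 1):
            v = r[order[k]]
            s += v
            sq += v * v
            nl, nr = k + 1, n - k - 1
            if nl < min_leaf or nr < min_leaf:
                continue
            if X[order[k]][f] == X[order[k + 1]][f]:
                continue  # no threshold separates equal values
            # Squared error of left + right children, computed from running sums.
            sse = (sq - s * s / nl) + ((tot_sq - sq) - (tot - s) ** 2 / nr)
            if best is None or sse < best[0]:
                best = (sse, f, X[order[k]][f])
    if best is None:
        return ("leaf", mean)
    _, f, t = best
    left = [i for i in range(n) if X[i][f] <= t]
    right = [i for i in range(n) if X[i][f] > t]
    return ("split", f, t,
            fit_tree([X[i] for i in left], [r[i] for i in left], depth + 1, max_depth, min_leaf),
            fit_tree([X[i] for i in right], [r[i] for i in right], depth + 1, max_depth, min_leaf))


def predict_tree(node, x):
    while node[0] == "split":
        _, f, t, left, right = node
        node = left if x[f] <= t else right
    return node[1]


def fit_gbt(X, y, n_trees=70, max_depth=5, learning_rate=0.1):
    p0 = sum(y) / len(y)
    f0 = math.log(p0 / (1 - p0))  # initial log-odds
    F = [f0] * len(y)
    trees = []
    for _ in range(n_trees):
        # Negative gradient of the log loss with respect to F.
        residuals = [yi - sigmoid(Fi) for yi, Fi in zip(y, F)]
        tree = fit_tree(X, residuals, max_depth=max_depth)
        trees.append(tree)
        F = [Fi + learning_rate * predict_tree(tree, xi) for Fi, xi in zip(F, X)]
    return f0, learning_rate, trees


def predict_proba(model, x):
    f0, lr, trees = model
    return sigmoid(f0 + lr * sum(predict_tree(t, x) for t in trees))


# Synthetic stand-in data: label 1 ("theft") when the two features sum past 1.
random.seed(0)
X = [[random.random(), random.random()] for _ in range(200)]
y = [1 if a + b > 1.0 else 0 for a, b in X]
model = fit_gbt(X, y)
accuracy = sum((predict_proba(model, x) > 0.5) == bool(t) for x, t in zip(X, y)) / len(y)
print(f"trees: {len(model[2])}, training accuracy: {accuracy:.2f}")
```

The "weighted combination" shows up in `predict_proba`: every tree's leaf value is scaled by the learning rate and summed before the logistic function turns the total into a probability.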

In other words, in the world of applied AI, C3.ai is dealing with tons of data. The company has gained valuable experience in how to take routine machine learning models, such as gradient boosting, and apply them with the kind of care needed to handle complexity.

That's no small accomplishment, though it may not be breaking any boundaries in the fundamental development of machine learning itself.

Still, applying AI at scale promises to be a very vibrant market.

Siebel, in an interview a week ago with ZDNet's Larry Dignan, said that customer relationship management, including both marketing and sales automation, will be one of the biggest markets for AI in the coming years, according to data from consulting firm McKinsey & Co.

"They predict the largest impact on AI will be on sales and marketing and customer service, delivering up to $3 trillion of value per year," said Siebel.