Former Google VP: Machines emotionally intelligent in 2016

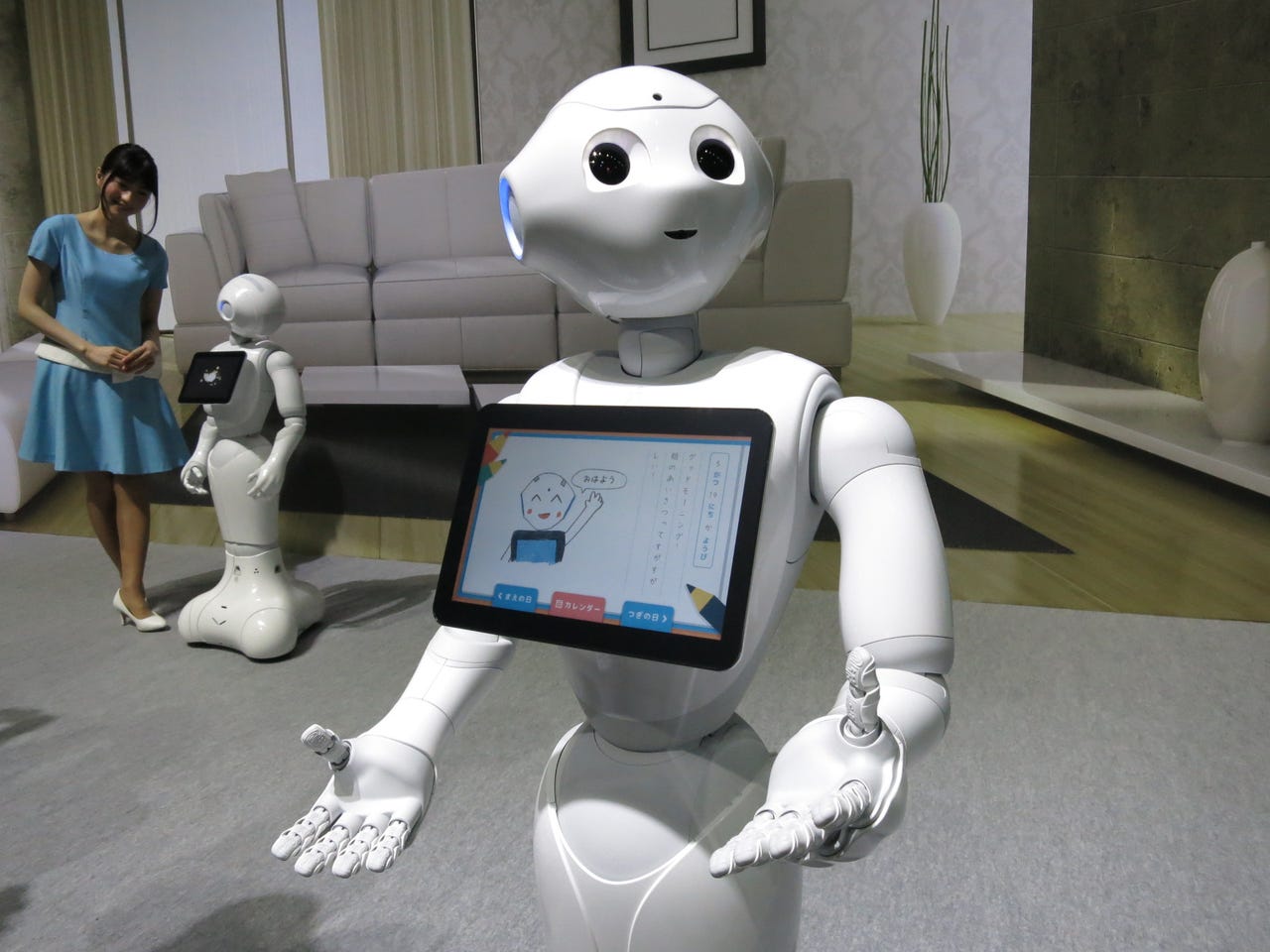

Pepper, the emotionally intelligent humanoid from Aldebaran

Andrew Moore, the Dean of the Carnegie Mellon School of Computer Science and a former Vice President at Google, just told me something exciting. Moore predicts that 2016 will see a rapid proliferation of research on emotional understanding in machines. Robots, smartphones, and computers will very quickly start to understand how we're feeling and will be able to respond accordingly.


"There will be immediate positive uses," he explains over the phone. "Monitoring a patient in the ER with an emotionally intelligent computer will enable doctors to sense discomfort when the patient is incapable of communicating. Measuring student engagement by looking at a student's emotional reaction will help teachers be more effective. Those are clear wins."


But, Moore cautions, the transition to emotionally aware gadgets may be bumpy.

"When it comes to things like advertising, this may feel uncomfortable. I have a background in internet advertising and if I wasn't busy being a dean I would be launching a startup to enable your tablet to watch you while you read web pages in order to see if you're reacting positively or negatively."

It's not difficult to see how valuable that information would be to advertisers, nor is it likely that the technology will advance without heavy scrutiny and criticism.

Because humans give off a number of discernible cues about how they're feeling, both consciously and unconsciously, research in this area has taken several parallel paths. Voice patterns can reveal stress and excitement, and the movement of facial muscles provides a revealing map of a person's inner state. One of the biggest breakthroughs for emotional sensing by machines is actually rather mundane.

"Cameras are now higher resolution. High-res cameras can track slight movements on the face and even individual hairs."

Roboticists and computer scientists have applied advances in machine vision to an existing body of research from the field of psychology on emotional cues. At present, most emotionally intelligent machines are picking up on the same types of emotional cues that humans pick up on, such as body language and facial movements. But there is the tantalizing possibility that machine learning could be employed to enable machines to figure out even better strategies for interpreting emotion by measuring cues that we can't pick up on, or at least aren't aware of. Eventually this could result in devices that are more emotionally perceptive than humans.

We've already seen some impressive examples of emotional intelligence in machines. Aldebaran's Pepper robot, which debuted as an in-store clerk at SoftBank stores in Japan before going on sale to consumers, is sophisticated enough to joke with people and gauge their reactions. Based on those inputs, Pepper uses machine learning algorithms to refine its social behavior.


At the University of Pittsburgh, psychology researcher Jeff Cohn is teaching machines to read facial expressions and prosody, the rhythm, stress, and intonation of speech, to detect whether treatments for depression are working. And at Carnegie Mellon, where Moore oversees the School of Computer Science, Professor Justine Cassell has been doing studies in which children interact with animated characters in an educational setting. Not surprisingly, she's found that engagement and learning outcomes are much higher when the simulated character reacts effectively to a child's emotional state.

"Our numbers indicate that faculty and students are really into this field," says Moore. "We had one faculty member working on this three years ago, now there are six. Computer vision has proved itself, and emotional intelligence in machines is going to be the next phase."

With devices like Jibo and Amazon's Echo making their way into homes, it's not far-fetched to believe that your tabletop personal assistant will soon be able to cater to your mood, playing music, offering reminders, and making suggestions based on how you feel.