Getting ready for NVRAM: Intel's 3D XPoint launches soon

3D XPoint stumbled out of the starting gate a couple of years ago, and has seen most of its limited usage in costly Optane SSDs. But for most of us, the real win will be using it to dramatically expand server - and eventually PC - memory capacities.

SSDs were a way to improve on disks with truly stunning - million-dollar storage array level - performance, in tiny, cost-effective packages.

NVRAM is different. It won't improve DRAM performance. In fact, it is slower than DRAM.

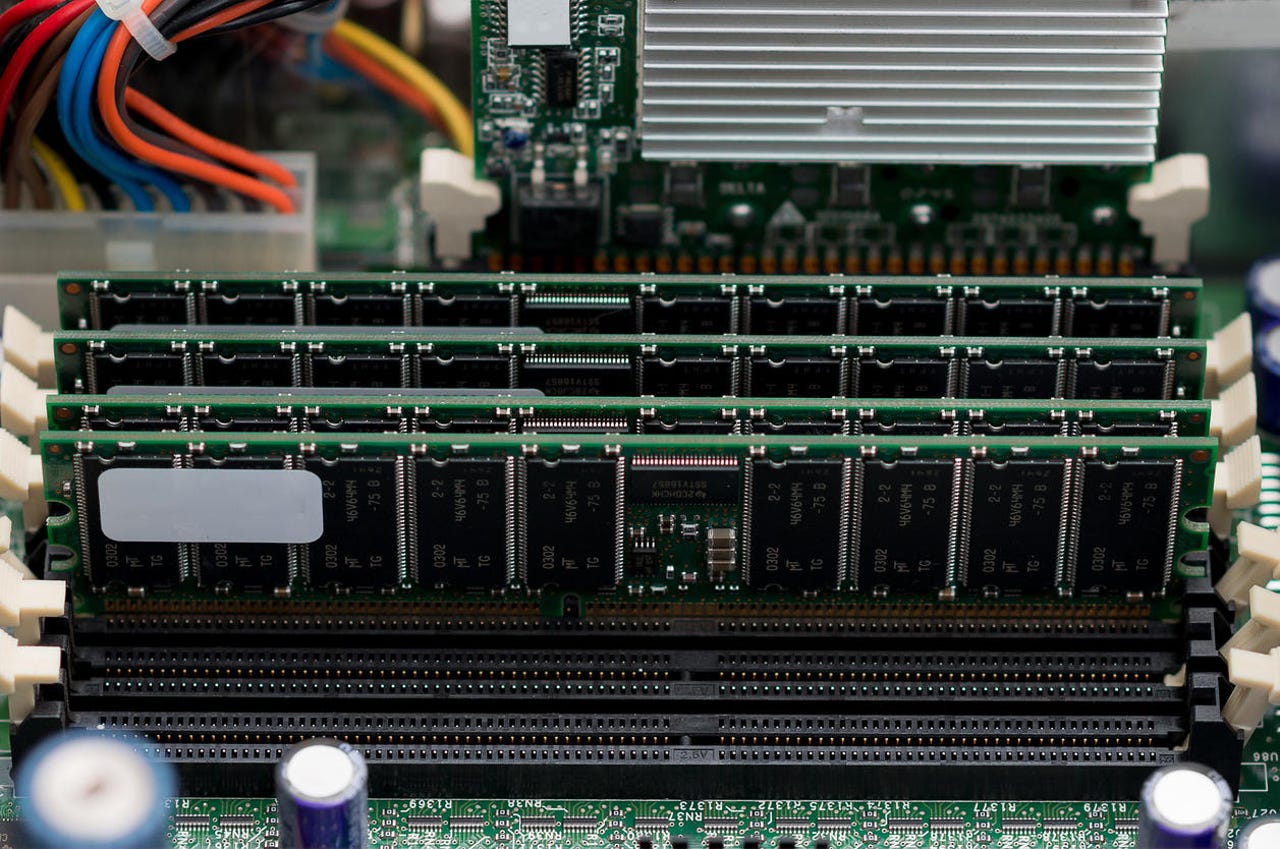

But 3D XPoint is much denser than DRAM, which means that an NVDIMM will be able to hold a lot more capacity than a DRAM DIMM.

How much? 128GB and even 256GB DIMMs will be available. You could soon be sporting 4TB of RAM for your favorite in-memory database; sixteen 256GB modules, for instance, would get you there.

And since it doesn't need power-sucking refreshes, it can add that capacity without pushing the thermal envelope of current server and notebook designs.

I learned more about NVRAM readiness at this month's Non-Volatile Memory Workshop at UC San Diego. I spoke to Jim Fister, who is leading the SNIA effort to get system software ready for NVRAM.

What's old is new again

Back in the 1950s and 1960s, the most common RAM - magnetic core memory - was non-volatile. Well into the 1980s there were still ancient DEC PDP-8s (12-bit minicomputers) equipped with core RAM, running remote lumber mills with flaky power. After a power outage the PDP-8 would resume as if nothing had happened.

DRAM swept the memory race in the 1970s because it was denser and cheaper. Ever since, power cycling a system has been the easiest way to deal with occasional software faults. Now software has to relearn what to do when memory persists.

Think of NVRAM as a new capability in the existing memory layer. What could you do differently with persistence?

One obvious area is crash recovery: rapid database restarts. Another - given the large capacity of NVDIMMs - is enabling multiple programs to access the same pool of memory. You can do that today with SSDs, but doing it closer to the CPU cuts latency by orders of magnitude.
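To make the crash-recovery idea concrete, here is a minimal sketch in Python. It assumes an ordinary memory-mapped file as a stand-in for a persistent-memory region (real NVDIMM code would go through a library such as PMDK), and the names `open_region` and `run_once` are hypothetical. The program keeps its progress counter in the mapped region itself, so a restart simply picks up where the last run stopped instead of rebuilding state.

```python
import mmap
import os
import struct
import tempfile

REGION_SIZE = 4096             # one page, standing in for a persistent region
COUNTER = struct.Struct("<Q")  # 8-byte little-endian progress counter at offset 0


def open_region(path):
    """Map the file at `path`, creating a zero-filled region on first use."""
    if not os.path.exists(path):
        with open(path, "wb") as f:
            f.write(b"\x00" * REGION_SIZE)
    f = open(path, "r+b")
    return f, mmap.mmap(f.fileno(), REGION_SIZE)


def run_once(path, steps):
    """Do `steps` units of work, recording progress in the mapped region."""
    f, region = open_region(path)
    try:
        # Recovery is just a load: read how far the previous run got.
        (done,) = COUNTER.unpack_from(region, 0)
        for _ in range(steps):
            done += 1
            COUNTER.pack_into(region, 0, done)  # a store into mapped memory
            region.flush()                      # push the update to the backing file
        return done
    finally:
        region.close()
        f.close()


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "region.bin")
    print(run_once(path, 3))  # first run starts from zero
    print(run_once(path, 2))  # "restart": resumes from the persisted counter
```

The second call stands in for a process coming back after a crash. Nothing is replayed or reloaded; the state is already where the program left it.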

A less obvious option - that should prove attractive to IT organizations - is making file system metadata updates a memory load-store operation instead of a block write to disk or SSD. Much faster, and much less overhead.
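The difference between the two update paths can be sketched as follows. This is an illustration under stated assumptions, not how any particular file system does it: the 16-byte "inode" layout, its offset, and the function names are all hypothetical, and a memory-mapped regular file again stands in for persistent memory. The block path reads, patches and rewrites a full 4KB block to change 16 bytes; the load-store path writes only the 16 bytes that changed.

```python
import mmap
import os
import struct
import tempfile

BLOCK_SIZE = 4096
INODE = struct.Struct("<QQ")  # hypothetical record: (size_bytes, mtime_ns)
INODE_OFFSET = 128            # hypothetical position of the record in the block


def make_volume(path):
    """Create a one-block, zero-filled file to act as the metadata area."""
    with open(path, "wb") as f:
        f.write(b"\x00" * BLOCK_SIZE)


def update_via_block_write(path, size_bytes, mtime_ns):
    """Block style: read the whole block, patch it, write the whole block back."""
    with open(path, "r+b") as f:
        block = bytearray(f.read(BLOCK_SIZE))
        INODE.pack_into(block, INODE_OFFSET, size_bytes, mtime_ns)
        f.seek(0)
        f.write(block)
        f.flush()
        os.fsync(f.fileno())
    return len(block)  # bytes written to change one record


def update_via_store(path, size_bytes, mtime_ns):
    """Load-store style: write only the record, directly into mapped memory."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), BLOCK_SIZE) as region:
        INODE.pack_into(region, INODE_OFFSET, size_bytes, mtime_ns)
        region.flush()
    return INODE.size  # bytes stored to change one record


def read_inode(path):
    """Read the record back from the file to confirm the update landed."""
    with open(path, "rb") as f:
        f.seek(INODE_OFFSET)
        return INODE.unpack(f.read(INODE.size))


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "volume.bin")
    make_volume(path)
    print(update_via_block_write(path, 100, 1), read_inode(path))
    print(update_via_store(path, 200, 2), read_inode(path))
```

On a regular file the operating system still moves pages behind the scenes, so this shows only the shape of the two code paths, not the latency gap you would see on real NVDIMMs.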

Databases are well along in adopting NVRAM. Persistence is the heart of what DBMSs do, and improved database performance and reliability will be where most of us first see the advantages of NVRAM.

The Storage Bits take

NVRAM is a fundamental change in system architecture. It will take years for vendors to figure out how to maximize its utility.

But the porting is underway, with open source tools such as PMDK enabling developers to explore how best to use persistence. Cloud vendors will lead the way, but enterprise IT professionals who want to stay competitive won't be far behind.

Courteous comments welcome, of course.