Google, Nvidia tout advances in AI training with MLPerf benchmark results

The second round of MLPerf benchmark results is in, offering a new, objective measurement of the tools used to run AI training workloads. With submissions from Nvidia, Google and Intel, the results showed how quickly AI infrastructure is improving, both for data centers and in the cloud.

MLPerf is a broad benchmark suite for measuring the performance of machine learning (ML) software frameworks (such as TensorFlow, PyTorch, and MXNet), ML hardware platforms (including Google TPUs, Intel CPUs, and Nvidia GPUs) and ML cloud platforms. Several companies, as well as researchers from institutions like Harvard, Stanford and the University of California Berkeley, first agreed to support the benchmarks last year. The goal is to give developers and enterprise IT teams information to help them evaluate existing offerings and focus on future development.

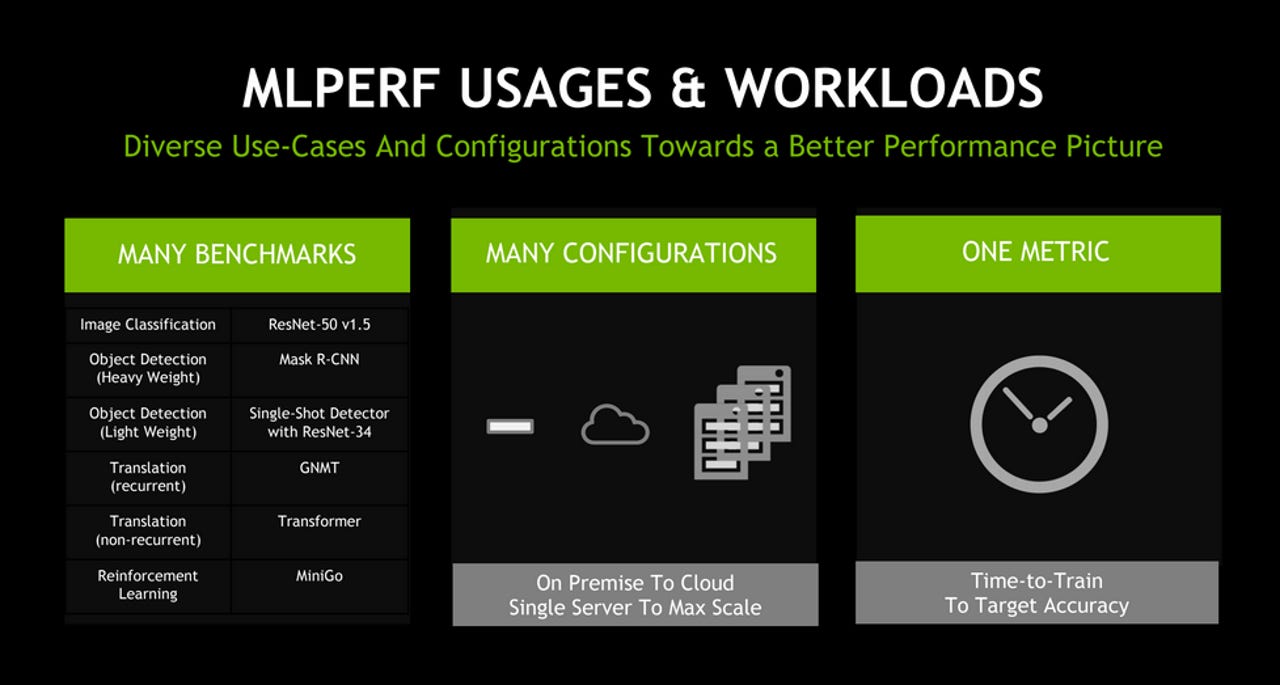

Back in December, MLPerf published its first batch of results on training ML models. The metric is the time required to train a model to a target level of quality. The benchmark suite consists of six categories: image classification, object detection (lightweight), object detection (heavyweight), translation (recurrent), translation (non-recurrent) and reinforcement learning.

Nvidia was the only vendor to submit results in all six categories. The GPU maker set eight records in training performance, including three in overall performance at scale and five on a per-accelerator basis.

On an at-scale basis for all six categories, Nvidia used its DGX SuperPod to train each MLPerf benchmark in under 20 minutes. For instance, training an image classification model using Resnet-50 v1.5 took just 80 seconds. As recently as 2017, when Nvidia launched the DGX-1 server, that training would have taken about eight hours.

The progress made in just a few short years is "staggering," Paresh Kharya, director of Accelerated Computing for Nvidia, told reporters this week. The results are a "testament to how fast this industry is moving," he said. Moreover, it's the sort of speed that will help bring about new AI applications.

"Leadership in AI requires leadership in AI infrastructure that... researchers need to keep moving forward," Kharya said.

Nvidia emphasized that its AI platform performed the best on heavyweight object detection and reinforcement learning -- the hardest AI problems as measured by total time to train.

Heavyweight object detection is used in critical applications like autonomous driving. It helps provide precise locations of pedestrians and other objects to self-driving cars. Meanwhile, reinforcement learning is used for things like training robots, or for optimizing traffic light patterns in smart cities.

Google Cloud, meanwhile, entered five categories and set three records for performance at scale with its Cloud TPU v3 Pods -- racks of Google's Tensor Processing Units (TPUs). Each of the winning runs took less than two minutes of compute time.

The results make Google the first public cloud provider to outperform on-premise systems running large-scale ML training workloads.

"There's a revolution in machine learning," Google Cloud's Zak Stone said to ZDNet, noting how breakthroughs in deep learning and neural networks are enabling a wide range of AI capabilities like language processing or object detection.

"All these workloads are performance-critical," he said. "They require so much compute, it really matters how fast your system is to train a model. There's a huge difference between waiting for a month versus a couple of days."

In the non-recurrent translation and lightweight object detection categories, the TPU v3 Pods trained models over 84 percent faster than Nvidia's systems.

While the winning submissions ran on full TPU v3 Pods, Google customers can choose what size "slice" of a Pod best fits their performance needs and price point. Google made its Cloud TPU Pods publicly available in beta earlier this year. Some customers using Google's TPUs or Pods include openAI, Lyft, eBay and Recursion Pharmaceuticals.