Is AMD being hypocritical about benchmark ethics?

On Tuesday 2/27/2007, AMD's chief sales and marketing officer Henri Richard took a hard swing at Intel in front of the media, accusing it of unethically using outdated benchmarking standards such as SPECint_2000.

Note that SPECint_2000 wasn't officially retired until last week, on February 24th 2007, so it was still technically sanctioned before its retirement. Note also that it isn't Intel conducting these benchmarks but server makers such as Dell, Fujitsu, and HP. Either way, the result in question is simply the most recent Intel score versus AMD, and it just happens to be on the older benchmarking standard, though it can be argued that SPECint_2000 is less representative of what current applications demand.

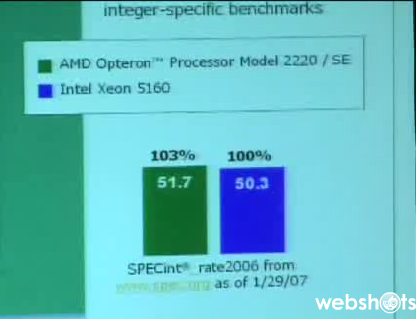

Richard aggressively criticized what he characterized as Intel's unethical behavior while promising that AMD would take the high road and not resort to dirty tactics. In his video interview, David Berlind asked Richard how AMD intends to play this game, and Richard responded, "Two wrongs don't make a right and you can expect us to continue to stay true." Later in the interview, Berlind highlighted one of the benchmarks AMD was showing at the conference, which claimed that AMD's best dual-core Opteron was actually faster than Intel's best dual-core Xeon 5160. See the screen capture of Berlind's video interview below.

So here we have AMD’s Richard screaming about Intel’s use of older stats from the previous SPECint standard, which show Intel killing AMD by a large margin, then promising not to do the same thing, then going right ahead and offering month-old stats showing AMD’s 3% lead on SPECint_rate2006. Would that be a problem if nothing had changed since then? No, nothing wrong at all. But I checked with CPU analyst David Kanter of Real World Technologies, and here’s what he had to say:

In January, AMD led Intel by about 3% in SPECint_rate2006; currently, Intel has pulled ahead by around 6%.

So right now, Intel beats AMD on SPECint_rate for both peak and base.

SPECint_rate2006 best scores as of Feb 2007

| Dual-core processor | Base | Peak |
|---|---|---|
| Intel Xeon 5160 | 53.2 | 55.2 |
| AMD Opteron Model 2220 | 46.1 | 51.7 |
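As a quick sanity check, the size of Intel's lead can be computed directly from the published scores in the table. This is a minimal Python sketch; the dictionary keys and the `percent_lead` helper are hypothetical names, and the lead is measured relative to the trailing (AMD) score:

```python
# Scores copied from the SPECint_rate2006 table above (best scores as of Feb 2007).
scores = {
    "base": {"intel_xeon_5160": 53.2, "amd_opteron_2220": 46.1},
    "peak": {"intel_xeon_5160": 55.2, "amd_opteron_2220": 51.7},
}


def percent_lead(leader: float, trailer: float) -> float:
    """Return how far `leader` is ahead of `trailer`, as a percentage of `trailer`."""
    return (leader / trailer - 1.0) * 100.0


for metric, s in scores.items():
    lead = percent_lead(s["intel_xeon_5160"], s["amd_opteron_2220"])
    print(f"{metric}: Intel ahead by {lead:.1f}%")
```

It prints a base lead of about 15.4% and a peak lead of about 6.8%; the peak figure is in the neighborhood of Kanter's "around 6%."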

So we have AMD screaming about Intel’s misleading benchmarks, promising not to do the same thing, then apparently turning around and giving us month-old scores showing a small AMD advantage when the updated scores actually showed a larger advantage for Intel. I did a little research, and it appears that the updated base scores from Dell were published on February 26th 2007, only one day before the presentation, so it would be reasonable to let that slide. But the updated Intel "Peak" scores from Fujitsu Siemens were published by SPEC.org on February 8th 2007, nearly three weeks before this AMD presentation at the W Hotel on February 27th 2007. It's a little hard to believe that AMD wouldn't have known about them, yet AMD chose to use a month-old slide showing outdated, inferior Intel results.

[UPDATE 3/3/2007 - AMD notified me via email late Friday that they didn't show the updated Intel scores, which were actually 9% higher, because their legal team had not yet approved them. Since scores posted on SPEC.org are about as independent and legitimate as they come, and the certification process is extremely rigorous, it’s a bit disingenuous to use your own legal department to block your opponent’s best scores. The AMD spokesperson told me that they will expedite this legal review process in the future and that “Thanks to you, we will add in a ‘day of’ check, even when presenting a benchmark that was updated only a few weeks earlier.” I will follow up with AMD on Monday because they want to poke some holes in what Intel presented to Wall Street last week. If it turns out that Intel suppressed better AMD scores in the same way, I'll post that next week.]
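Kanter's two figures and AMD's "9% higher" line up with each other. Here is a quick Python check, assuming that "AMD led by 3%" means AMD's score was 3% above the old Intel score and that AMD's own score did not change between January and February; the `implied_intel_gain` helper is a hypothetical name used only for illustration:

```python
def implied_intel_gain(amd_lead_before: float, intel_lead_after: float) -> float:
    """
    Percent by which Intel's score must have risen, ASSUMING the AMD score
    stayed the same between the two comparisons.

    amd_lead_before:  AMD's lead over the old Intel score, in percent (AMD / old_Intel - 1).
    intel_lead_after: Intel's new lead over AMD, in percent (new_Intel / AMD - 1).
    """
    old_intel_ratio = 1.0 / (1.0 + amd_lead_before / 100.0)  # old_Intel / AMD
    new_intel_ratio = 1.0 + intel_lead_after / 100.0         # new_Intel / AMD
    return (new_intel_ratio / old_intel_ratio - 1.0) * 100.0


# Kanter's figures: AMD ahead by ~3% in January, Intel ahead by ~6% now.
print(f"{implied_intel_gain(3.0, 6.0):.1f}%")
```

It prints 9.2% (1.03 × 1.06 ≈ 1.092), consistent with AMD's own statement that the suppressed Intel scores were about 9% higher.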

But this isn't the only problem with AMD's presentation of benchmarks. While it might seem reasonable to compare AMD's "best" dual-core processor against Intel's best dual-core processor, doing so completely ignores the fact that Intel customers can save a lot of money with Intel quad-core processors such as the Xeon X5355. Instead of buying two dual-core CPUs, smart shoppers will buy a single quad-core CPU and save tons of money on enterprise server software licensing, which is often 5 to 10 times more expensive than the entire server. Fewer quad-core CPUs can save half on the server and slash power consumption, but the licensing savings matter even more: it's not unusual to see enterprise software licenses costing in excess of $50,000 per CPU socket! AMD continues to argue that Intel's quad-core chip somehow isn't "real" because it uses two dual-core dies instead of a single quad-core die.

The problem for AMD is that Intel's quad-core chips annihilate any dual-core CPU in the benchmarks, so there's nothing "fake" about the performance. It also happens that no enterprise software maker charges based on die count; they charge based on CPU socket count, so the fact that Intel has quad-core CPUs and AMD doesn't gives Intel a huge advantage for the first half of 2007. AMD will probably take back a small edge in the two- to four-socket server market when its single-die Barcelona arrives by the middle of this year, but Intel's 45nm Penryn will make sure the honeymoon in Barcelona won't last more than six months. You can see a full AMD versus Intel roadmap here.
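To make the per-socket licensing argument concrete, here is a minimal Python sketch comparing two dual-core sockets with one quad-core socket (four cores either way). The $50,000 per-socket price is the illustrative figure quoted above, not a price for any particular product, and `license_cost` is a hypothetical helper:

```python
# Hypothetical illustration: $50,000 per socket is the example figure from the
# article, not a quote for any specific enterprise product.
LICENSE_PER_SOCKET = 50_000


def license_cost(sockets: int, per_socket: int = LICENSE_PER_SOCKET) -> int:
    """Per-socket licensing: the bill depends only on socket count, not cores or dies."""
    return sockets * per_socket


configs = {
    "2 x dual-core (4 cores)": 2,  # two dual-core CPUs fill two sockets
    "1 x quad-core (4 cores)": 1,  # one quad-core CPU (two dies, one package) fills one socket
}
for name, sockets in configs.items():
    print(f"{name}: ${license_cost(sockets):,}")
```

It prints $100,000 for the dual-core configuration and $50,000 for the quad-core one. Because the quad-core Xeon's two dies share one package, they occupy a single socket, so the die count never enters the calculation.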

It is well known that every company, hardware or software, puts its best foot forward and favors the benchmarks that make it look good. But leaving out your competitor's up-to-date data to make it look like you're ahead in the same benchmark is very disturbing, and there is a world of difference between cherry-picking benchmarks and cherry-picking data. The only thing worse than that is hypocrisy.
