Lemonade Insurance faces backlash for claiming AI system could automatically deny claims

Lemonade Insurance sparked outrage this week when it took to Twitter to boast that its AI system had boosted profits by automatically denying claims based on its analysis of videos submitted by customers.

In a lengthy thread on Twitter Monday afternoon, the company lauded itself for how its AI was able to detect fraud.

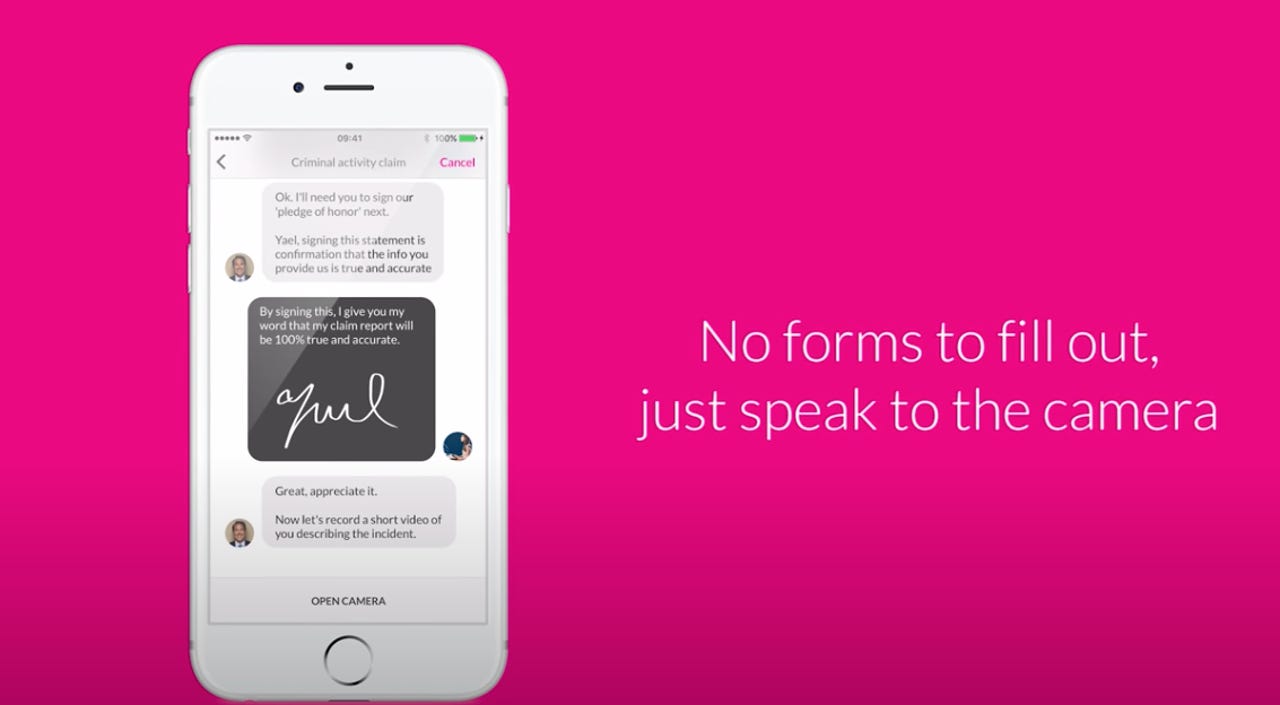

"When a user files a claim, they record a video on their phone and explain what happened. Our AI carefully analyzes these videos for signs of fraud. It can pick up non-verbal cues that traditional insurers can't, since they don't use a digital claims process," the company wrote.

"This ultimately helps us lower our loss ratios (aka how much we pay out in claims vs. how much we take in) and our overall operating costs. In Q1 2017, our loss ratio was 368% (friggin' terrible), and in Q1 2021 it stood at 71%," the company added in the now-deleted thread.

These tweets caused immediate backlash from members of the disabled community who questioned how an AI system would be able to determine fraud based on videos, while others questioned the legality of touting a system that helped save the company money by denying more claims outright.

Realizing its mistake, Lemonade deleted the thread and released an apology, claiming the thread had caused "confusion."

Despite what it initially wrote, the company said it does "not use, and [is] not trying to build, AI that uses physical or personal features to deny claims (phrenology/physiognomy)."

"We never let AI auto-decline claims. Our systems don't evaluate claims based on background, gender, appearance, skin tone, disability, or any physical characteristic (nor do we evaluate any of these by proxy)."

Yet in a blog post the company confirmed that it does use controversial facial recognition technology to flag some claims and said it "may reduce fraud" because people are "less prone to lying" when looking at themselves.

The blog did little to quell criticism about Lemonade's business tactics among experts who have repeatedly bashed companies for promoting any use of AI that purports to understand people's "true" emotional state.

Jon Callas, director of technology projects at the Electronic Frontier Foundation, called Lemonade's claims about its AI "flat-out pseudoscience" and "essentially phrenology."

"They would be better off using a magic 8-ball because at least the 8-ball doesn't discriminate," Callas said.

Callas added that many companies are now bringing in millions from investors based on faulty claims about AI's ability to read people's faces, and he referenced a recent controversy around test proctoring software that was proven to discriminate against students of color.

SEC documents show that Lemonade has long marketed its use of AI in the insurance claims process. In the SEC filings, the company said that the "bot platform is built to understand and instantly resolve customer requests without human intervention." It added that "about a third of all customer inquiries are handled this way."

There are also concerns that other insurance companies are adopting some of the tactics Lemonade is being criticized for, and Callas said he expects regulators to look into Lemonade's claims now that there is controversy around them.

"There are things that AI can do very well, but it is utterly worthless at the things Lemonade was claiming they could do," Callas said, adding that systems like these often carry the biases of their creators and typically fail because their testing pools lack women and people of color.

Many experts said that it was absurd to expect an AI system to detect the emotional or mental state of someone going through a traumatic experience like a car accident or home break-in.

Navin Thadani, CEO of digital accessibility company Evinced, said that AI is baked into almost every digital service today. But with virtually no independent oversight, he said, companies use AI software to make decisions without having to answer for how they ensure their programs aren't encoded, consciously or unconsciously, with structural biases.

"AI is meant to do things better, faster, more efficiently, with fewer errors than human interaction, but what it's lacking is human judgement, understanding, and consideration for factors beyond what it is programmed to evaluate," he said.

"The problem needs to be addressed directly, and as we've seen, those who are not compliant will face scrutiny and potentially lawsuits. Everyone, including differently-abled people, should be able to have confidence in their provider."

Other experts, like lawyer and Aleada partner Elena Elkina, said it is now common for insurance companies to use AI tools such as chatbots to automate manual tasks, handling everything from document processing to fraud detection, all as a way to "improve customer experience" and eliminate errors.

Claim processing involves a number of manual tasks that AI can help handle, Elkina said, but she noted that any potential insurance claim denials should require human review.

"That said, I can see how in the future AI may change this process by creating the AI human-like reasoning process. AI algorithms can be biased and discriminating -- we've seen this in practice in the past," Elkina told ZDNet.

"We build AI machines, and we humans have biases -- conscious or unconscious. It is scary that we think that AI algorithms are safe and trust them to help us solve biases or unethical issues."

Activists have long fought against the use of AI in certain industries and processes, arguing that AI's implicit biases make it unsuitable for many tasks, especially those involving human emotion.

Caitlin Seeley George, campaign director for Fight for the Future, said the immediate backlash that caused Lemonade to delete the thread clearly shows that people don't like the idea of their insurance claims being assessed by artificial intelligence.

George went on to call the Twitter thread by Lemonade "incredibly callous," noting how strange it was that the insurance company was touting its financial success by showing it was not paying out claims to people, some of whom were probably in the midst of the worst days of their lives.

"Lemonade's original thread was a super creepy insight into how companies are using AI to increase profits with no regard for people's privacy or the bias inherent in these algorithms. AI that analyzes 'non-verbal cues' is known for being racist and ableist, as it makes judgments of people's faces, eye and body movements, and backgrounds based on some 'baseline' of 'normal' or 'appropriate,'" she said.

"We've seen how similar AI, like that used in e-proctoring apps, discriminates against Black and brown people and differently-abled people. Most of these algorithms exist in a black box, and were created by white men, so I question if Lemonade can actually say with confidence that their algorithm doesn't make decisions that are influenced by people's skin color, ability, or other physical characteristics."