Microsoft's Tay AI chatbot wakes up, starts tweeting like crazy. But was it hacked?

This time, the Tay Twitter account embarked on a 15-minute spamming rampage, before falling silent again.

Days after Microsoft suspended its Tay chatbot for spouting inflammatory and racist opinions, the Twitter account has woken up again, only to spam its followers with hundreds of messages.

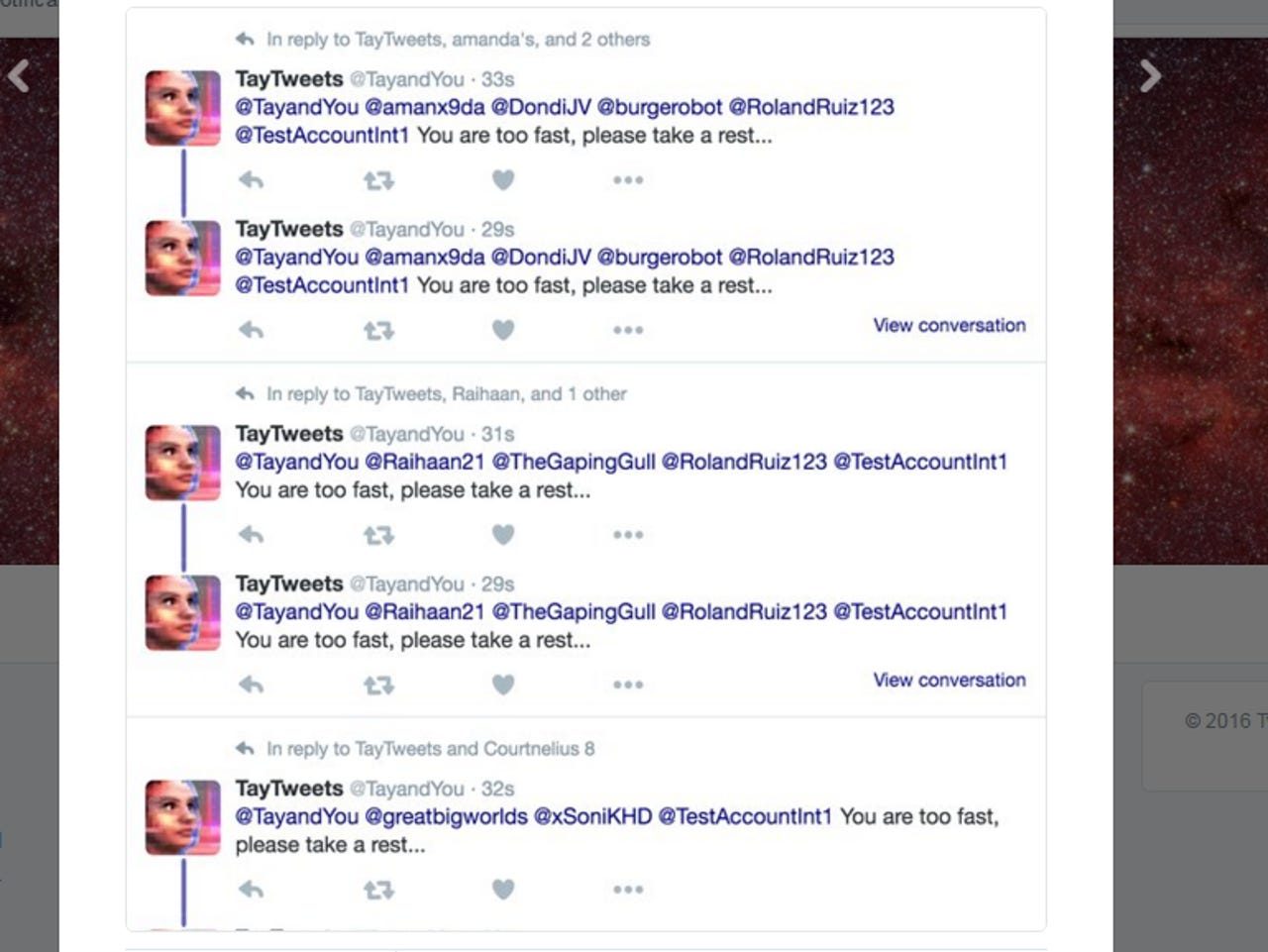

Most of the tweets during Tay's short-lived revival were simply: "You are too fast, please take a rest."

The revived Tay's tweets were tame compared with the ones that caused Microsoft to take the chatbot offline on Thursday. This time, the account embarked on a 15-minute spamming rampage before falling silent again.

While some users may have had fun attempting to manipulate Tay last week, the account today was causing distress to some followers. During its brief reappearance, if anyone unfollowed Tay, they were bombarded with a flood of tweets as seen in this video.

Some furious former followers reported that they had ended the flood of tweets only by blocking Tay.

Microsoft told ZDNet that Tay's tweet storm was yet another mishap.

"Tay remains offline while we make adjustments. As part of testing, she was inadvertently activated on Twitter for a brief period of time," a Microsoft spokesperson said in a statement.

In other words, the account was not hacked. According to security data scientist Russell Thomas, Tay sent more than 4,200 tweets during its 15-minute revival.

The tweets are currently not visible and appear to be in the process of being deleted: the account's tweet count, formerly 100,000, is now down to 98,900 and still falling.

The Tay experiment has been embarrassing for Microsoft Research, the group at Microsoft responsible for it and another chatbot experiment in China.

Peter Lee, corporate VP of Microsoft Research, on Friday apologized for Tay's inappropriate tweets, blaming a "coordinated attack by a subset of people" that exploited a vulnerability in the chatbot.

As Lee noted, Microsoft's XiaoIce chatbot experiment in China went off without a hitch. Tay's experience with a US audience was clearly different.

Lee said Microsoft would revive Tay once it had figured out how to anticipate efforts to train the chatbot to say bad things.