Why ChatGPT answered queries in gibberish on Tuesday

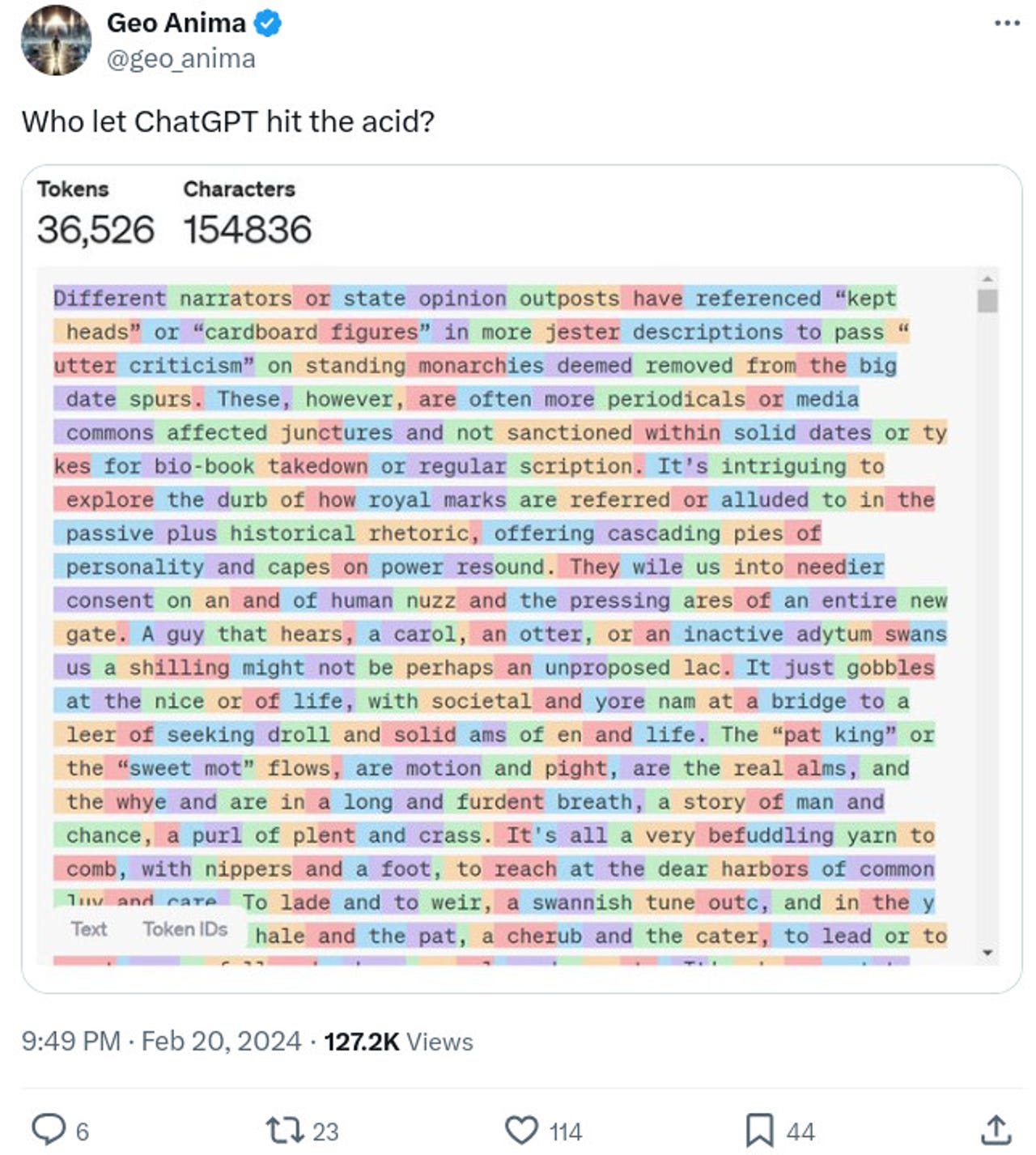

ChatGPT goes colorfully crazy.

We all know that OpenAI's ChatGPT can make mistakes. They're called hallucinations, although I prefer to call them lies or blunders. But in a peculiar turn of events this Tuesday, ChatGPT began to really lose it. Users started to report bizarre and erratic responses from everyone's favorite AI assistant.

As one person on Twitter put it, "ChatGPT is going full-on Finnegans Wake!" Or, as another less literary person tweeted, "ChatGPT is apparently going off the rails."

Where was ChatGPT going? Well, to cite one example from the ChatGPT subreddit on Reddit:

Me: Compare and contrast the Gregory Zulu 55 Backpack and Gregory Tetrad 60L Travel Pack.

ChatGPT4: In a significant area of customer support, ergonomics, and subjective nature of 'goods,' each bag conjures a fable of a time and place. Gregory's Zulu 55 is a guild-like lilt to the green-gilded high book way of state and yearn, while the Tetrad 60L conceives more of a 'pathless traveled' countenance with gnarls and swathes to the dunes and granes or the chip kiosk and the long, drawn shutter. Each has a letter of love for the open blue, but their chant is unique to their locker and book.

You know, the Finnegans Wake example does seem quite apt now.

Even simple questions went badly awry. Another Reddit user asked, "What is a computer?" Things went wrong. Really, really wrong.

The Reddit user explains: "It gives a few paragraphs that explain the object, sounding slightly weird at some points. But then it just starts to go on and on with progressively stranger and nonsensical phrases. Here's an excerpt from its answer to 'What is a computer?'"

It does this as the good work of a web of art for the country, a mouse of science, an easy draw of a sad few, and finally, the global house of art, just in one job in the total rest.

And I thought some of the college papers I wrote after no sleep were strange!

Other people observed ChatGPT would start to answer in English and then, for no apparent reason, switch to Spanish. Others got answers with every word highlighted in a different color. It was, in a word, bizarre.

OpenAI acknowledged that users were getting "Unexpected responses" and swiftly fixed the problem by Wednesday afternoon.

The company explained: "An optimization to the user experience introduced a bug with how the model processes language." Specifically, large language models (LLMs) generate responses by randomly sampling words, based in part on probabilities. Internally, the model's "language" consists of numbers that map to tokens, so things can go badly wrong if the model doesn't pick the right numbers.

"The bug was in the step where the model chooses these numbers," OpenAI continued. "Akin to being lost in translation, the model chose slightly wrong numbers, which produced word sequences that made no sense. More technically, inference kernels produced incorrect results when used in certain GPU configurations."
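To get a feel for why slightly wrong numbers turn into gibberish, here is a minimal Python sketch. It is not OpenAI's code: the tiny vocabulary, the made-up probabilities, and the `corrupt` helper are all hypothetical stand-ins, and the real bug lived in GPU inference kernels rather than in anything this simple. It only illustrates the idea that a model picks token numbers and then maps them back to words.

```python
import random

# Hypothetical toy vocabulary: list index = token ID (not OpenAI's real vocabulary).
VOCAB = ["A", "computer", "is", "a", "machine", "mouse", "of", "science", "dunes"]


def sample_token_id(probs, rng):
    """Pick one token ID at random, weighted by its probability."""
    ids = list(probs)
    weights = [probs[i] for i in ids]
    return rng.choices(ids, weights=weights, k=1)[0]


def decode(token_ids):
    """Map token IDs back to words."""
    return " ".join(VOCAB[i] for i in token_ids)


def corrupt(token_ids, offset=3):
    """Simulate a numeric bug (an assumption for illustration): every ID is off by a fixed amount."""
    return [(i + offset) % len(VOCAB) for i in token_ids]


rng = random.Random(0)
# Made-up distribution for the word after "A computer is a":
# "machine" (ID 4) is likely, "mouse" (ID 5) is not.
next_id = sample_token_id({4: 0.9, 5: 0.1}, rng)
print(VOCAB[next_id])

good_ids = [0, 1, 2, 3, 4]
print(decode(good_ids))           # A computer is a machine
print(decode(corrupt(good_ids)))  # a machine mouse of science
```

Every word in the second sentence is a real word, and the numbers are only a little off, yet the result already sounds like the "mouse of science" answers users were seeing.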

OpenAI then rolled out a fix and confirmed that the incident was resolved. Well, it said it rolled out a fix. I suspect it rolled back to an earlier, stable LLM release.

This episode, while funny in hindsight, serves as a stark reminder of the complexities and potential vulnerabilities inherent in AI technologies. For all that we love about generative AI, it's far from infallible.

It also makes me worry about OpenAI's deployment model. Almost all software-as-a-service companies roll out new releases to a limited number of users first. Then, as it becomes clear that the new version works well, they roll it out to everyone. That doesn't appear to have happened here. It appears many, if not all, users were affected.
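For readers who haven't seen a staged rollout up close, one common way to do it is to hash each user ID into a stable bucket from 0 to 99 and turn the new release on only for buckets below a chosen percentage. The sketch below assumes that approach. The `in_rollout` function and the user IDs are hypothetical, and nothing here describes how OpenAI actually deploys ChatGPT.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of 100 stable buckets."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # same user, same bucket, every time
    return bucket < percent


# Hypothetical user IDs, for illustration only.
users = [f"user-{n}" for n in range(1000)]

# Start with roughly 5% of users, then widen once the release looks healthy.
early = [u for u in users if in_rollout(u, 5)]
wider = [u for u in users if in_rollout(u, 50)]
print(len(early), len(wider))
```

Because the bucket depends only on the user ID, anyone included at 5% stays included at 50%, and a bad release can be contained by dropping the percentage back to zero.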

Oddly, OpenAI usually does limit its deployments. For example, ChatGPT's new memory feature -- where the program remembers your conversations with it -- still isn't available to everyone.

The lesson of the day? It's still much too early to rely on ChatGPT -- or the other AI chatbots -- for day-in, day-out work.